
Even as its big investment partner OpenAI continues to announce more powerful reasoning models such as the latest o3 series, Microsoft is not sitting idly by. Instead, it’s pursuing the development of more powerful small models released under its own brand name.

As announced by several current and former Microsoft researchers and AI scientists today on X, Microsoft is releasing its Phi-4 model as a fully open-source project with downloadable weights on Hugging Face, the AI code-sharing community.

“We have been completely amazed by the response to [the] phi-4 release,” wrote Microsoft AI principal research engineer Shital Shah on X. “A lot of folks had been asking us for weight release. [A f]ew even uploaded bootlegged phi-4 weights on HuggingFace…Well, wait no more. We are releasing today [the] official phi-4 model on HuggingFace! With MIT licence (sic)!!”

Weights refer to the numerical values that specify how an AI language model, small or large, understands and outputs language and data. A model's weights are established by its training process. For large language models, this typically means self-supervised (often loosely called unsupervised) deep learning on large text corpora, during which the model learns what outputs to produce for the inputs it receives. Neural networks also contain related values called biases. Biases are not settings added by hand: they are learned during training alongside the weights, and both can be refined further afterward through fine-tuning. A model is generally not considered fully open-source unless its weights have been made public, as this is what enables other researchers to take the model and fully customize it or adapt it to their own ends.
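To make the distinction concrete, here is a minimal sketch, not drawn from Phi-4 itself, of a toy one-input model whose single weight and single bias are both learned by gradient descent. The training data and the hyperparameters (`lr`, `epochs`) are hypothetical; a model like Phi-4 adjusts billions of such values through the same basic kind of gradient-based training.

```python
# A toy one-input "model": prediction = weight * x + bias.
# Both the weight and the bias are parameters learned from data (illustrative only).

def train_linear(data, lr=0.05, epochs=500):
    """Fit prediction = w * x + b with plain gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # arbitrary starting values
    n = len(data)
    for _ in range(epochs):
        # Gradients of MSE = mean((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Training nudges BOTH parameters against their gradients
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical training data generated from y = 2x + 1
data = [(x, 2 * x + 1) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]
w, b = train_linear(data)
print(f"learned weight={w:.3f}, bias={b:.3f}")  # close to weight=2, bias=1
```

Nothing about the bias is set by a human here; the training loop recovers it from the data just as it does the weight.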

Although Phi-4 was actually revealed by Microsoft last month, its usage was initially restricted to Microsoft’s new Azure AI Foundry development platform.

Now, Phi-4 is available outside that proprietary service to anyone who has a Hugging Face account, and comes with a permissive MIT License, allowing it to be used for commercial applications as well.

This release provides researchers and developers with full access to the model’s 14 billion parameters, enabling experimentation and deployment without the resource constraints often associated with larger AI systems.
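For a rough sense of what 14 billion parameters means in practice, the sketch below estimates the memory needed just to store the weights at a few common numeric precisions. It assumes a flat byte cost per parameter and ignores activations, the attention key-value cache and framework overhead, so real deployments need more. The precision list is illustrative and is not a statement about the format Microsoft ships.

```python
# Rough memory needed just to hold a model's weights at different numeric precisions.
# Assumption: weights only; activations, the KV cache and framework overhead are ignored.

PARAMS = 14e9  # Phi-4's reported parameter count (approximate)

# Illustrative precisions and their storage cost per parameter, in bytes
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16/fp16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}

def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9

for precision, nbytes in BYTES_PER_PARAM.items():
    print(f"{precision:>9}: ~{weight_memory_gb(PARAMS, nbytes):.0f} GB")
```

At 16-bit precision this works out to roughly 28 GB (56 GB at fp32, 7 GB at 4-bit), which helps explain the interest in lower-precision variants for smaller hardware.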

A shift toward efficiency in AI

Phi-4 first launched on Microsoft’s Azure AI Foundry platform in December 2024, where developers could access it under a research license agreement.

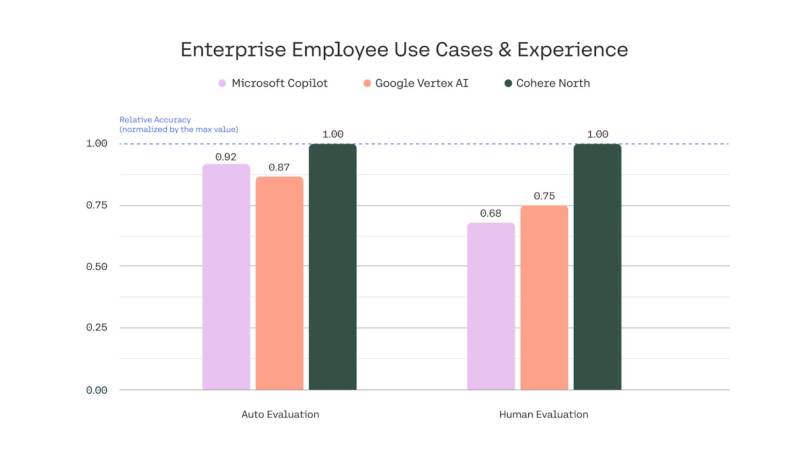

The model quickly gained attention for outperforming many larger counterparts in areas like mathematical reasoning and multitask language understanding, all while requiring significantly fewer computational resources.

The model's streamlined architecture and its focus on reasoning and logic are intended to meet the growing need for AI that performs well while remaining efficient in compute- and memory-constrained environments. With this open-source release under a permissive MIT License, Microsoft is making Phi-4 accessible to a wider audience of researchers and developers, including commercial ones, signaling a potential shift in how the AI industry approaches model design and deployment.

What makes Phi-4 stand out?

Phi-4 excels in benchmarks that test advanced reasoning and domain-specific capabilities. Highlights include:

• Scoring over 80% on challenging benchmarks like MATH and MGSM, outperforming larger models such as Google's Gemini Pro and OpenAI's GPT-4o-mini.

• Superior performance in mathematical reasoning tasks, a critical capability for fields such as finance, engineering and scientific research.

• Impressive results on HumanEval, a benchmark for functional code generation, making it a strong choice for AI-assisted programming.

In addition, Phi-4's architecture and training process were designed with precision and efficiency in mind. The model, a 14-billion-parameter dense, decoder-only transformer, was trained on 9.8 trillion tokens of curated and synthetic data, including:

• Publicly available documents rigorously filtered for quality.

• Textbook-style synthetic data focused on math, coding and common-sense reasoning.

• High-quality academic books and Q&A datasets.

The training data also included multilingual content (8%), though the model is primarily optimized for English-language applications.
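The reported training figures also allow some back-of-envelope arithmetic. This sketch computes tokens seen per parameter, the approximate size of the multilingual slice (assuming the 8% share is measured in tokens), and a training-compute estimate using the widely cited 6 × N × D rule of thumb for dense transformers. These are estimates under those assumptions, not numbers published by Microsoft.

```python
# Back-of-envelope figures derived from the numbers reported for Phi-4.
# Assumptions: the 8% multilingual share is by tokens, and training compute follows
# the common "6 * N * D" rule of thumb for dense transformers (an estimate only).

PARAMS = 14e9              # N: model parameters
TRAIN_TOKENS = 9.8e12      # D: training tokens
MULTILINGUAL_SHARE = 0.08  # reported share of multilingual content

tokens_per_param = TRAIN_TOKENS / PARAMS
multilingual_tokens = TRAIN_TOKENS * MULTILINGUAL_SHARE
approx_train_flops = 6 * PARAMS * TRAIN_TOKENS

print(f"tokens per parameter: ~{tokens_per_param:.0f}")
print(f"multilingual tokens:  ~{multilingual_tokens / 1e12:.2f} trillion")
print(f"training compute:     ~{approx_train_flops:.1e} FLOPs")
```

Under these assumptions the model saw roughly 700 tokens per parameter, about 0.78 trillion multilingual tokens, and on the order of 8 × 10^23 floating-point operations of training compute.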

Its creators at Microsoft say that the safety and alignment processes, including supervised fine-tuning and direct preference optimization, ensure robust performance while addressing concerns about fairness and reliability.
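Direct preference optimization trains a model to favor a preferred ("chosen") response over a dispreferred ("rejected") one relative to a frozen reference model, without training a separate reward model. The sketch below implements the per-example loss as formulated in the original DPO paper; it is not Microsoft's training code, and the `beta` value of 0.1 is an assumed setting.

```python
import math

def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-example DPO loss for one (prompt, chosen, rejected) triple.

    Each argument is the summed log-probability of a full response, scored either
    by the model being trained (policy) or by a frozen reference model.
    """
    # How much more (in log space) the policy favors each response than the reference does
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_shift - rejected_shift)
    # Loss is -log(sigmoid(margin)) == log(1 + exp(-margin)); branch to avoid overflow
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Policy identical to the reference: margin is 0, so the loss is log(2)
print(dpo_loss(-5.0, -7.0, -5.0, -7.0))
# Policy shifts probability toward the chosen response: the loss drops below log(2)
print(dpo_loss(-10.0, -12.0, -11.0, -11.0))
```

When the policy and reference agree, the loss sits at log 2 (about 0.693); minimizing it pushes the model to raise the chosen response's probability relative to the rejected one.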

The open-source advantage

By making Phi-4 available on Hugging Face with its full weights and an MIT License, Microsoft is opening it up for businesses to use in their commercial operations.

Developers can now incorporate the model into their projects or fine-tune it for specific applications without needing permission from Microsoft, and with far fewer computational resources than much larger models require.

This move also aligns with the growing trend of open-sourcing foundational AI models to foster innovation and transparency. Unlike proprietary models, which are often limited to specific platforms or APIs, Phi-4’s open-source nature ensures broader accessibility and adaptability.

Balancing safety and performance

With Phi-4's release, Microsoft emphasizes the importance of responsible AI development. The model underwent extensive safety evaluations, including adversarial testing, to minimize risks like bias, harmful content generation and misinformation.

However, developers are advised to implement additional safeguards for high-risk applications and to ground outputs in verified contextual information when deploying the model in sensitive scenarios.

Implications for the AI landscape

Phi-4 challenges the prevailing trend of scaling AI models to massive sizes. It demonstrates that smaller, well-designed models can achieve comparable or superior results in key areas.

This efficiency not only reduces costs but also lowers energy consumption, making advanced AI capabilities more accessible to mid-sized organizations and enterprises with limited computing budgets.
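The cost argument can be made concrete with a common approximation: a dense decoder-only transformer spends roughly 2 floating-point operations per parameter for each generated token. The sketch compares Phi-4's 14 billion parameters with a hypothetical 70-billion-parameter dense model. It ignores attention costs that grow with context length, as well as batching and hardware efficiency, so it indicates scale rather than real-world speed.

```python
# Rough per-token inference compute for dense decoder-only transformers.
# Assumption: ~2 FLOPs per parameter per generated token (attention over long
# contexts ignored). The 70B comparison model is hypothetical.

def flops_per_token(params: float) -> float:
    """Approximate forward-pass FLOPs needed to generate one token."""
    return 2 * params

phi4 = flops_per_token(14e9)
larger = flops_per_token(70e9)  # hypothetical larger dense model

print(f"14B model: ~{phi4 / 1e9:.0f} GFLOPs per token")
print(f"70B model: ~{larger / 1e9:.0f} GFLOPs per token")
print(f"ratio:     ~{larger / phi4:.1f}x")
```

Under this approximation the larger model needs about five times the compute per token (roughly 140 versus 28 GFLOPs), and compute translates fairly directly into serving cost and energy use.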

As developers begin experimenting with the model, we’ll soon see if it can serve as a viable alternative to rival commercial and open-source models from OpenAI, Anthropic, Google, Meta, DeepSeek and many others.
