ConocoPhillips has been contracted to deliver its Optimized Cascade Process liquefaction technology for the 26 million tonnes per annum (MTPA) liquefied natural gas (LNG) export facility in Cameron Parish, Louisiana, being developed by Monkey Island LNG.

“After an extensive technology selection study and analysis on multiple technologies, Monkey Island LNG selected the Optimized Cascade process based on its operational flexibility, quick restart capabilities, high efficiency, and proven performance above nameplate capacity”, Greg Michaels, CEO of Monkey Island LNG, said.

“The decision marks a major milestone in advancing Monkey Island LNG’s mission to deliver TrueCost LNG, a radically transparent, cost-efficient model that eliminates hidden fees and aligns incentives across the LNG value chain”, Michaels said.

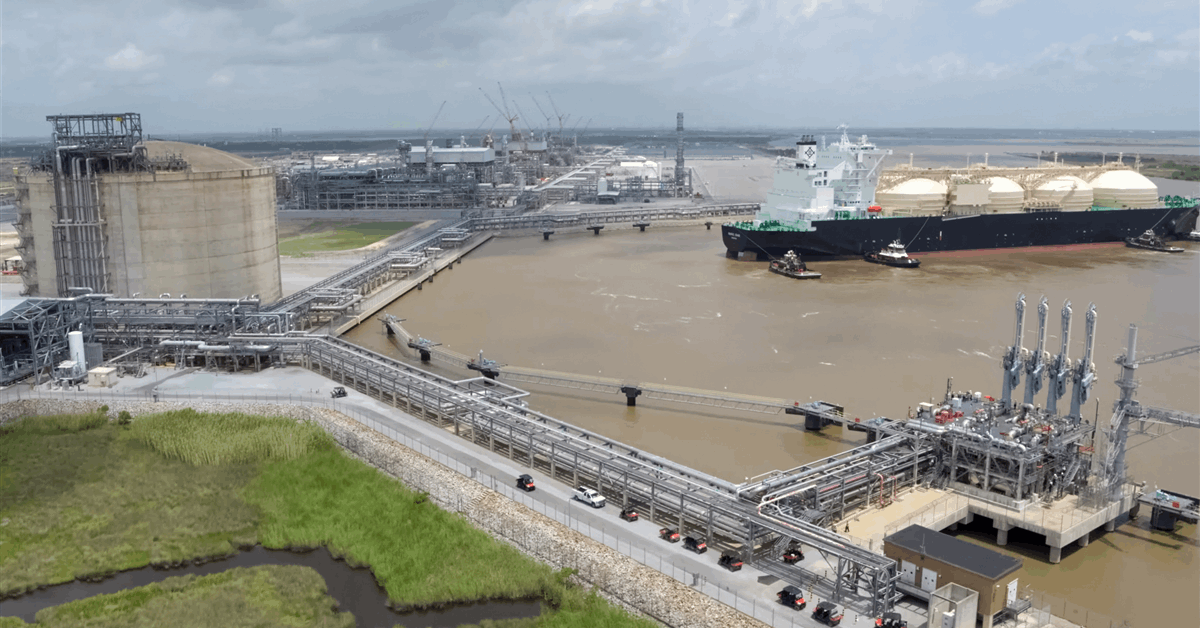

The 246-acre site in Cameron Parish, Louisiana, is located on a deepwater port along the Calcasieu Ship Channel, about 2 miles inland from the Gulf of Mexico. It offers additional marine access via the Cameron Loop on Monkey Island’s northern bank, ensuring flexibility during construction and operation, according to Monkey Island LNG.

The facility is also situated near one of North America’s most extensive natural gas transportation networks, which are directly connected to Henry Hub and the abundant Haynesville Shale gas basin, Monkey Island LNG noted.

The facility is designed to have five liquefaction trains, each with a production capacity of approximately 5 MTPA. Together, the trains would be capable of liquefying approximately 3.4 billion cubic feet per day of natural gas. The facility will also have three LNG storage tanks, each with the capacity to hold 180,000 cubic meters (6.4 million cubic feet).
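As a quick sanity check on the figures quoted above, the short Python sketch below multiplies out the train and tank numbers and converts one tank's volume from cubic meters to cubic feet. The variable and function names are illustrative and do not come from Monkey Island LNG. The only outside input is the standard definition of the foot as 0.3048 meters.

```python
# Figures as quoted in the article (per-train capacity is approximate).
TRAINS = 5
MTPA_PER_TRAIN = 5.0
TANKS = 3
TANK_VOLUME_M3 = 180_000

# Exact by definition: 1 ft = 0.3048 m, so 1 m^3 is about 35.31 ft^3.
CUBIC_FEET_PER_CUBIC_METER = 1 / 0.3048 ** 3


def cubic_meters_to_cubic_feet(volume_m3: float) -> float:
    """Convert a volume in cubic meters to cubic feet."""
    return volume_m3 * CUBIC_FEET_PER_CUBIC_METER


total_mtpa = TRAINS * MTPA_PER_TRAIN
tank_volume_ft3 = cubic_meters_to_cubic_feet(TANK_VOLUME_M3)
total_storage_m3 = TANKS * TANK_VOLUME_M3

print(f"Combined train capacity: about {total_mtpa:.0f} MTPA")
print(f"One tank: about {tank_volume_ft3 / 1e6:.1f} million cubic feet")
print(f"Total storage: {total_storage_m3:,} cubic meters")
```

Five trains at roughly 5 MTPA each come to about 25 MTPA, which is in line with the 26 MTPA headline figure given that the per-train number is rounded. Three tanks at 180,000 cubic meters give 540,000 cubic meters of total storage.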

Monkey Island LNG also said it has picked McDermott International as its engineering, procurement, and construction partner for the project.
