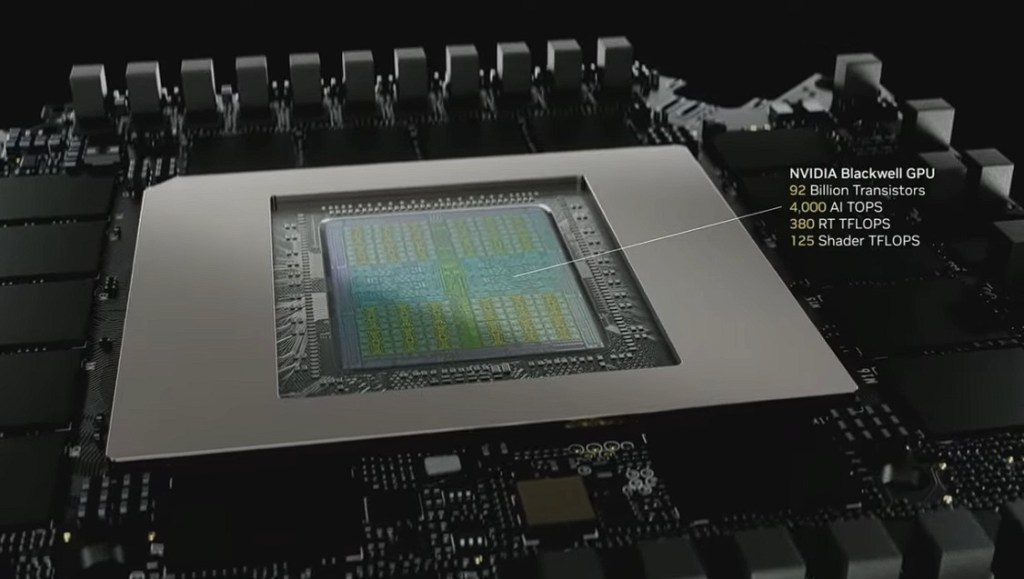

Nvidia announced that its Blackwell hardware will accelerate digital twin software from the major computer-aided engineering software firms by as much as 50 times.

The vendors include Ansys, Altair, Cadence, Siemens and Synopsys. With such accelerated software, along with Nvidia CUDA-X libraries and blueprints to optimize performance, industries such as automotive, aerospace, energy, manufacturing and life sciences can significantly reduce product development time, cut costs and increase design accuracy while maintaining energy efficiency.

“CUDA-accelerated physical simulation on Nvidia Blackwell has enhanced real-time digital twins and is reimagining the entire engineering process,” said Jensen Huang, founder and CEO of Nvidia, in a statement. “The day is coming when virtually all products will be created and brought to life as a digital twin long before it is realized physically.”

The company unveiled the news during Huang’s keynote at the GTC 2025 event. As noted in my recent Q&A with Ansys CTO Prith Banerjee, the gap between simulation and reality is closing, not just in video games but also in the similar technology used for engineering simulations.

Ecosystem support for Nvidia Blackwell

Software providers can help their customers develop digital twins with real-time interactivity and now accelerate them with Nvidia Blackwell technologies.

The growing ecosystem integrating Blackwell into its software includes Altair, Ansys, BeyondMath, Cadence, COMSOL, ENGYS, Flexcompute, Hexagon, Luminary Cloud, M-Star, NAVASTO (an Autodesk company), Neural Concept, nTop, Rescale, Siemens, SimScale, Synopsys and Volcano Platforms.

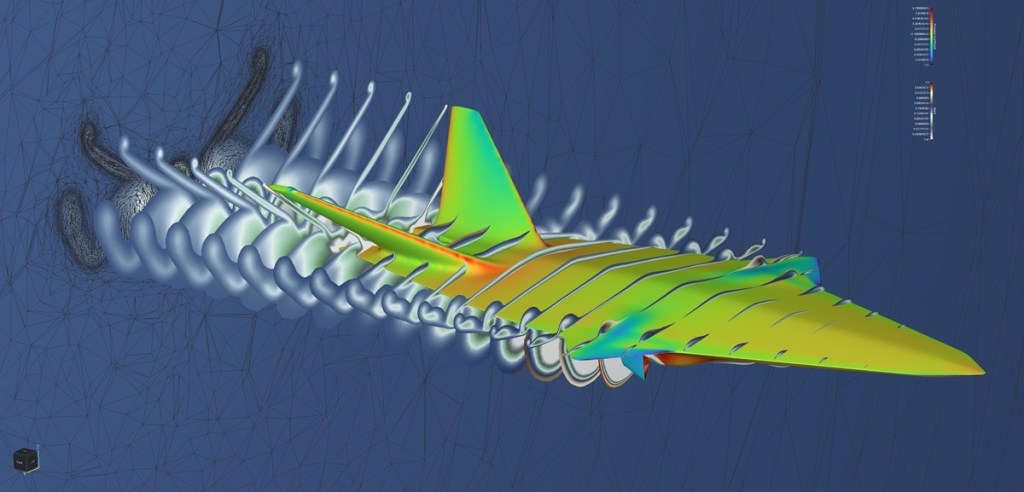

Cadence is using Nvidia Grace Blackwell-accelerated systems to help solve one of computational fluid dynamics’ biggest challenges: the simulation of an entire aircraft during takeoff and landing. Using the Cadence Fidelity CFD solver, Cadence successfully ran multibillion-cell simulations on a single Nvidia GB200 NVL72 server in under 24 hours, work that would previously have required a CPU cluster with hundreds of thousands of cores and several days to complete.

This breakthrough will help the aerospace industry move toward designing safer, more efficient aircraft while reducing the amount of expensive wind-tunnel testing required, speeding time to market.

Anirudh Devgan, president and CEO of Cadence, said in a statement, “Nvidia Blackwell’s acceleration of the Cadence.AI portfolio delivers increased productivity and quality of results for intelligent system design, reducing engineering tasks that took hours to minutes and unlocking simulations not possible before. Our collaboration with Nvidia drives innovation across semiconductors, data centers, physical AI and sciences.”

Sassine Ghazi, president and CEO of Synopsys, said, “At GTC, we’re unveiling the latest performance results observed across our leading portfolio when optimizing Synopsys solutions for NVIDIA Blackwell to accelerate computationally intensive chip design workflows. Synopsys technology is mission-critical to the productivity and capabilities of engineering teams, from silicon to systems. By harnessing the power of Nvidia accelerated computing, we can help customers unlock new levels of performance and deliver their innovations even faster.”

Ajei Gopal, president and CEO of Ansys, said in a statement, “The close collaboration between Ansys and NVIDIA is accelerating innovation at an unprecedented pace. By harnessing the computational performance of NVIDIA Blackwell GPUs, we at Ansys are empowering engineers at Volvo Cars to tackle the most complex computational fluid dynamics challenges with exceptional speed and accuracy, enabling more optimization studies and delivering more performant vehicles.”

James Scapa, founder and CEO of Altair, said in a statement, “The Nvidia Blackwell platform’s computing power, combined with Altair’s cutting-edge simulation tools, gives users transformative capabilities. This combination makes GPU-based simulations up to 1.6 times faster compared with the previous generation, helping engineers rapidly solve design challenges and giving industries the power to create safer, more sustainable products through real-time digital twins and physics-informed AI.”

Roland Busch, president and CEO of Siemens, said, “The combination of Nvidia’s groundbreaking Blackwell architecture with Siemens’ physics-based digital twins will enable engineers to drastically reduce development times and costs through using photo-realistic, interactive digital twins. This collaboration will allow us to help customers like BMW innovate faster, optimize processes, and achieve remarkable levels of efficiency in design and manufacturing.”

Rescale CAE Hub With Nvidia Blackwell

Rescale’s newly launched CAE Hub enables customers to streamline their access to Nvidia technologies and CUDA-accelerated software developed by leading independent software vendors. Rescale CAE Hub provides flexible, high-performance computing and AI technologies in the cloud powered by Nvidia GPUs and Nvidia DGX Cloud.

Boom Supersonic, the company building the world’s fastest airliner, will use the Nvidia Omniverse Blueprint for real-time digital twins and Blackwell-accelerated CFD solvers on Rescale CAE Hub to design and optimize its new supersonic passenger jet.

The company’s product development cycle, which is almost entirely simulation-driven, will use the Rescale platform accelerated by Blackwell GPUs to test different flight conditions and refine requirements in a continuous loop with simulation.

The adoption of the Rescale CAE Hub powered by Blackwell GPUs expands Boom Supersonic’s collaboration with Nvidia. Through the Nvidia PhysicsNeMo framework and the Rescale AI Physics platform, Boom Supersonic can unlock 4x more design explorations for its supersonic airliner, speeding iteration to improve performance and time to market.

The Nvidia Omniverse Blueprint for real-time digital twins, now generally available, is also part of the Rescale CAE Hub. The blueprint brings together Nvidia CUDA-X libraries, Nvidia PhysicsNeMo AI and the Nvidia Omniverse platform, and is also adding the first Nvidia NIM microservice for external aerodynamics, the study of how air moves around objects.
