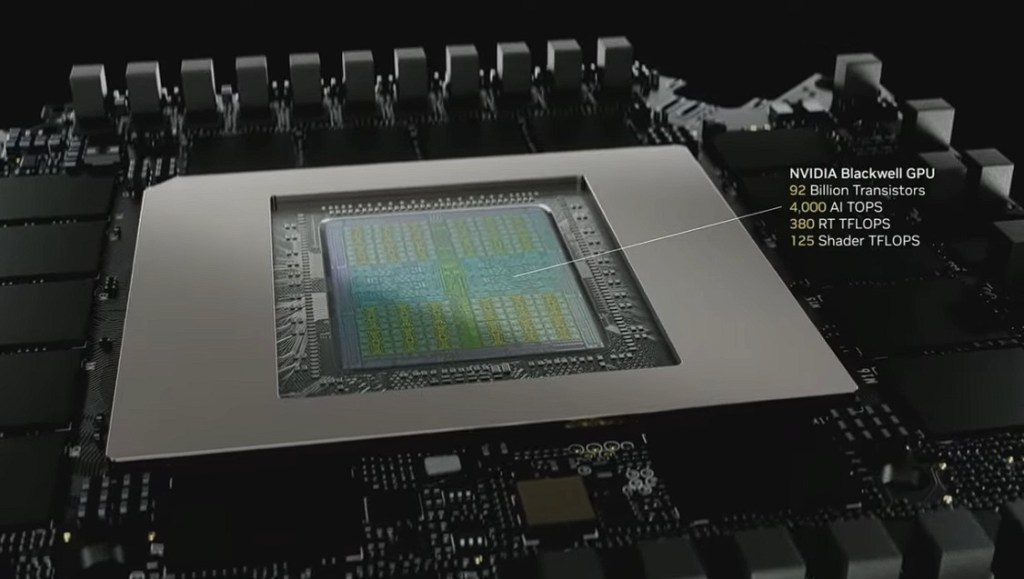

In the second half of 2027, Nvidia will ship the Rubin Ultra NVL576, which will be close to four times faster than Vera Rubin NVL144 systems.

“It’s … extreme scale up. Each rack is 600 kilowatts, 2.5 million parts, and obviously a whole lot of GPUs,” Huang said during the keynote.

The system will have 576 Rubin GPUs, 12,672 Vera CPU cores, 2,304 memory chips, 144 NVLink switches, 576 ConnectX-9 NICs and 72 BlueField data processing units (DPUs). It will offer 15 exaflops of FP4 inference performance and 5 exaflops of FP8 performance. It will also use faster HBM4e memory, with 4.6PBps of memory bandwidth, and the new NVLink 7 interconnect.
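As a rough sanity check, the rack-level figures above can be divided out per GPU. The sketch below is plain arithmetic on the keynote numbers, plus the 600-kilowatt rack power Huang cited. It assumes components and compute are spread evenly across the 576 GPUs, which Nvidia did not state, and the variable and function names are illustrative only.

```python
# Per-GPU breakdown of the Rubin Ultra NVL576 figures quoted in the keynote.
# Assumption (not from Nvidia): counts and compute are spread evenly across GPUs,
# so fractional results (e.g. 0.25 switches per GPU) are averages, not wiring.

RACK = {
    "gpus": 576,
    "vera_cpu_cores": 12_672,
    "memory_chips": 2_304,
    "nvlink_switches": 144,
    "connectx9_nics": 576,
    "bluefield_dpus": 72,
}
FP4_EXAFLOPS = 15
FP8_EXAFLOPS = 5
RACK_POWER_KW = 600  # "Each rack is 600 kilowatts"


def per_gpu_breakdown(rack: dict[str, int]) -> dict[str, float]:
    """Divide every component count by the GPU count."""
    gpus = rack["gpus"]
    return {name: count / gpus for name, count in rack.items() if name != "gpus"}


def petaflops_per_gpu(exaflops: float, gpus: int) -> float:
    """Average petaflops per GPU (1 exaflop = 1,000 petaflops)."""
    return exaflops * 1_000 / gpus


def petaflops_per_kw(exaflops: float, power_kw: float) -> float:
    """Average petaflops per kilowatt of rack power."""
    return exaflops * 1_000 / power_kw


if __name__ == "__main__":
    for name, ratio in per_gpu_breakdown(RACK).items():
        print(f"{name} per GPU: {ratio:g}")
    print(f"FP4 per GPU: {petaflops_per_gpu(FP4_EXAFLOPS, RACK['gpus']):.1f} PF")
    print(f"FP8 per GPU: {petaflops_per_gpu(FP8_EXAFLOPS, RACK['gpus']):.1f} PF")
    print(f"FP4 per kW: {petaflops_per_kw(FP4_EXAFLOPS, RACK_POWER_KW):.1f} PF")
    print(f"FP4:FP8 ratio: {FP4_EXAFLOPS / FP8_EXAFLOPS:g}")
```

On these assumptions each GPU pairs with 22 Vera CPU cores, four memory chips and one ConnectX-9 NIC, and averages roughly 26 petaflops of FP4 compute.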

In 2028, Nvidia will release a GPU called Feynman, which will use next-generation HBM and will be paired with Vera CPUs. Huang didn’t share further details about the chip.

Huang also spoke at length about newer reasoning AI models, which work through problems more deeply before answering. These models, which will drive agentic AI, generate far more tokens while reasoning, and that is where faster GPUs come in.

“The amount of tokens generated is … higher. Easily 100 times more,” Huang said.
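Huang's point comes down to simple arithmetic: at a fixed decode rate, response time grows linearly with the number of tokens generated. The sketch below applies the 100x multiplier from the quote to made-up baseline numbers (a 500-token answer served at 50 tokens per second); those baselines are hypothetical, and the model ignores prompt processing, batching and everything else that affects real serving performance.

```python
# Back-of-the-envelope sketch: why ~100x more tokens per answer calls for faster GPUs.
# Only the 100x multiplier comes from Huang's remark; the baselines are hypothetical.

BASELINE_TOKENS = 500     # hypothetical length of a direct, non-reasoning answer
TOKEN_MULTIPLIER = 100    # "Easily 100 times more" tokens when reasoning
DECODE_RATE_TPS = 50.0    # hypothetical tokens per second served to one user


def response_seconds(tokens: float, tokens_per_second: float) -> float:
    """Time to stream `tokens` at a constant decode rate (ignores prompt processing)."""
    return tokens / tokens_per_second


def rate_for_same_latency(tokens: float, target_seconds: float) -> float:
    """Decode rate needed to finish `tokens` within `target_seconds`."""
    return tokens / target_seconds


if __name__ == "__main__":
    reasoning_tokens = BASELINE_TOKENS * TOKEN_MULTIPLIER
    baseline = response_seconds(BASELINE_TOKENS, DECODE_RATE_TPS)
    reasoning = response_seconds(reasoning_tokens, DECODE_RATE_TPS)
    needed = rate_for_same_latency(reasoning_tokens, baseline)
    print(f"Direct answer: {baseline:.0f} s")                          # 10 s
    print(f"Reasoning answer at same rate: {reasoning:.0f} s")         # 1000 s
    print(f"Rate to stay at {baseline:.0f} s: {needed:.0f} tokens/s")  # 5000 tokens/s
```

Holding response time constant requires the decode rate to rise by the same 100x factor, which is the gap faster GPUs are meant to close.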