Nvidia announced that Taiwan’s leading system manufacturers are set to build Nvidia DGX Spark and DGX Station systems.

Growing partnerships with Acer, Gigabyte and MSI will extend the availability of DGX Spark and DGX Station personal AI supercomputers — empowering a global ecosystem of developers, data scientists and researchers with unprecedented performance and efficiency.

Enterprises, software providers, government agencies, startups and research institutions need robust systems that deliver the performance and capabilities of an AI server in a desktop form factor, without compromising on data size, proprietary model privacy or speed of scaling.

The rise of agentic AI systems capable of autonomous decision-making and task execution amplifies these demands. Powered by the Nvidia Grace Blackwell platform, DGX Spark and DGX Station will enable developers to prototype, fine-tune and run inference on models from the desktop to the data center.

“AI has revolutionized every layer of the computing stack — from silicon to software,” said Jensen Huang, CEO of Nvidia, in a keynote talk at Computex 2025 in Taiwan. “Direct descendants of the DGX-1 system that ignited the AI revolution, DGX Spark and DGX Station are created from the ground up to power the next generation of AI research and development.”

DGX Spark fuels innovation

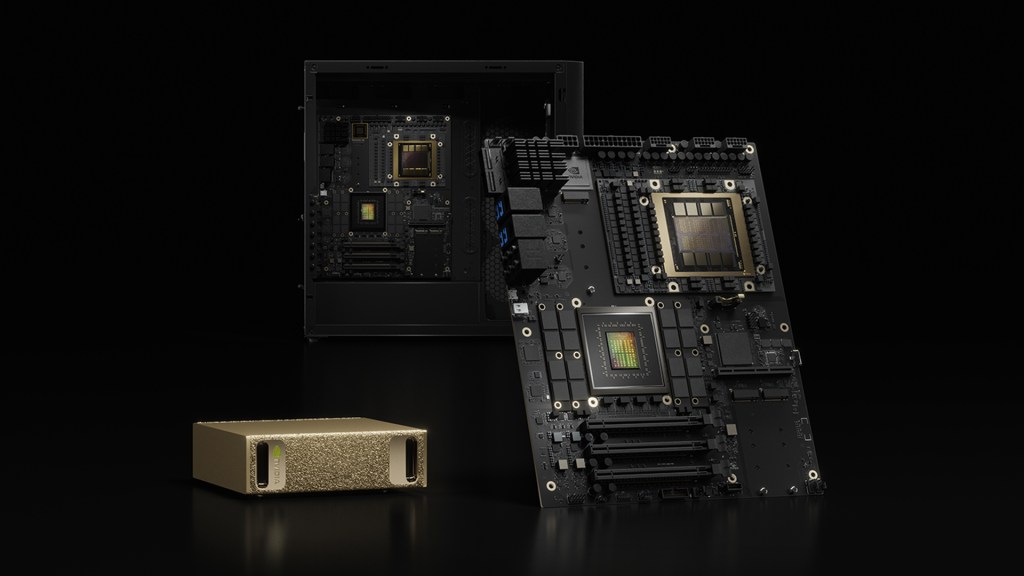

DGX Spark is equipped with the Nvidia GB10 Grace Blackwell Superchip and fifth-generation Tensor Cores. It delivers up to 1 petaflop of AI compute and 128GB of unified memory, and enables seamless exporting of models to Nvidia DGX Cloud or any accelerated cloud or data center infrastructure.

Delivering powerful performance and capabilities in a compact package, DGX Spark lets developers, researchers, data scientists and students push the boundaries of generative AI and accelerate workloads across industries.

DGX Station advances AI innovation

Built for the most demanding AI workloads, DGX Station features the Nvidia GB300 Grace Blackwell Ultra Desktop Superchip, which offers up to 20 petaflops of AI performance and 784GB of unified system memory. The system also includes the Nvidia ConnectX-8 SuperNIC, supporting networking speeds of up to 800Gb/s for high-speed connectivity and multi-station scaling.

DGX Station can serve as an individual desktop for one user running advanced AI models using local data, or as an on-demand, centralized compute node for multiple users. The system supports Nvidia Multi-Instance GPU technology to partition into as many as seven instances — each with its own high-bandwidth memory, cache and compute cores — serving as a personal cloud for data science and AI development teams.

To give developers a familiar user experience, DGX Spark and DGX Station mirror the software architecture that powers industrial-strength AI factories. Both systems use the Nvidia DGX operating system, preconfigured with the latest Nvidia AI software stack, and include access to Nvidia NIM microservices and Nvidia Blueprints.

Developers can use common tools, such as PyTorch, Jupyter and Ollama, to prototype, fine-tune and perform inference on DGX Spark and seamlessly deploy to DGX Cloud or any accelerated data center or cloud infrastructure.

Dell Technologies is among the first global system builders to develop DGX Spark and DGX Station — helping address the rising enterprise demand for powerful, localized AI computing solutions.

“There’s a clear shift among consumers and enterprises to prioritize systems that can handle the next generation of intelligent workloads,” said Michael Dell, chairman and CEO of Dell Technologies, in a statement. “The interest in Nvidia DGX Spark and Nvidia DGX Station signals a new era of desktop computing, unlocking the full potential of local AI performance. Our portfolio is designed to meet these needs. Dell Pro Max with GB10 and Dell Pro Max with Nvidia GB300 give organizations the infrastructure to integrate and tackle large AI workloads.”

HP Inc. is also offering the new systems, aiming to help businesses unlock the full potential of AI performance.

“Through our collaboration with Nvidia, we are delivering a new set of AI-powered devices and experiences to further advance HP’s future-of-work ambitions to enable business growth and professional fulfillment,” said Enrique Lores, president and CEO of HP, in a statement. “With the HP ZGX, we are redefining desktop computing — bringing data-center-class AI performance to developers and researchers to iterate and simulate faster, unlocking new opportunities.”

Expanded availability and partner ecosystem

DGX Spark will be available from Acer, ASUS, Dell Technologies, Gigabyte, HP, Lenovo and MSI, as well as global channel partners, starting in July. Reservations for DGX Spark are now open on nvidia.com and through Nvidia partners.

DGX Station is expected to be available from ASUS, Dell Technologies, Gigabyte, HP and MSI later this year.