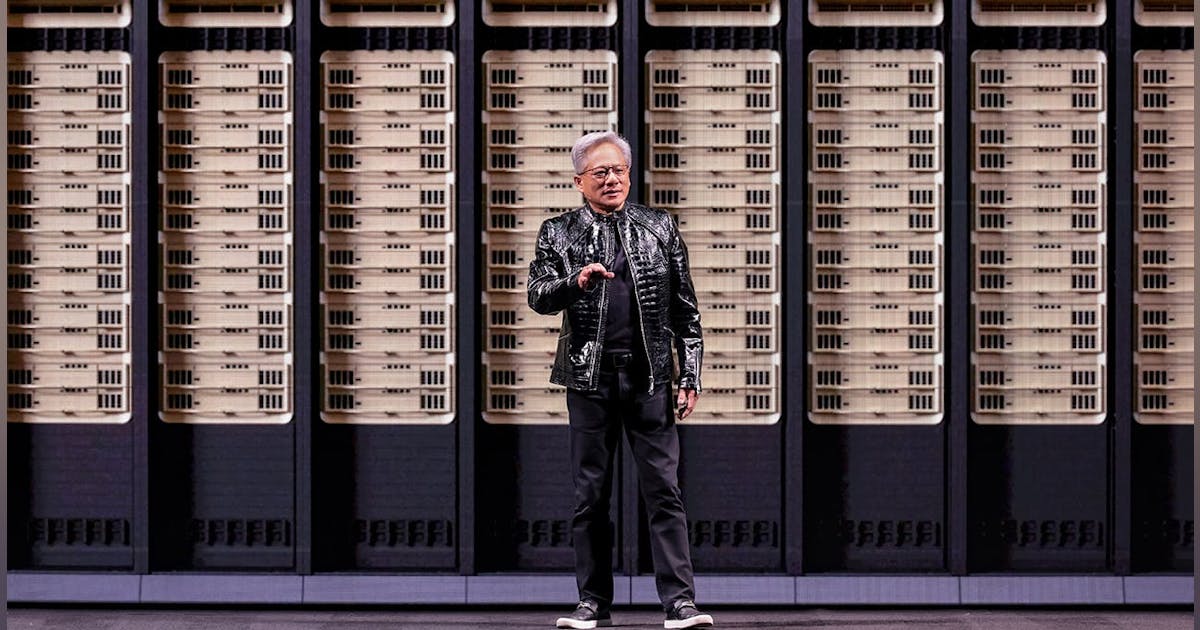

The Architecture Shift: From “GPU Server” to “Rack-Scale Supercomputer”

NVIDIA’s Rubin architecture is built around a single design thesis: “extreme co-design.” In practice, that means GPUs, CPUs, networking, security, software, power delivery, and cooling are architected together, treating the data center, not the individual server, as the unit of compute.

That logic shows up most clearly in the NVL72 system. NVLink 6 serves as the scale-up spine, designed to let 72 GPUs communicate all-to-all with predictable latency, something NVIDIA argues is essential for mixture-of-experts routing and synchronization-heavy inference paths.
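Counting the traffic shows why all-to-all matters for mixture-of-experts. The sketch below is a minimal illustration, not NVIDIA's routing logic. It assumes a hypothetical layout of 4 experts per GPU (288 in total) and top-2 routing with uniformly random expert choice. Under those assumptions about 71 of every 72 routed token copies land on a different GPU, so on each MoE layer every GPU ends up sending to nearly every other GPU in the rack.

```python
import random

NUM_GPUS = 72        # one NVL72 rack, as described in the text
EXPERTS_PER_GPU = 4  # hypothetical: 288 experts spread evenly across the rack
TOP_K = 2            # hypothetical: each token is routed to 2 distinct experts


def dispatch_matrix(tokens_per_gpu: int, seed: int = 0) -> list[list[int]]:
    """Return sends[src][dst], the token copies GPU src must ship to GPU dst.

    Expert choice is uniform random here; a real MoE router picks experts
    from learned gating scores, which can make traffic far less even.
    """
    rng = random.Random(seed)
    num_experts = NUM_GPUS * EXPERTS_PER_GPU
    sends = [[0] * NUM_GPUS for _ in range(NUM_GPUS)]
    for src in range(NUM_GPUS):
        for _ in range(tokens_per_gpu):
            for expert in rng.sample(range(num_experts), TOP_K):
                sends[src][expert // EXPERTS_PER_GPU] += 1  # GPU hosting this expert
    return sends


sends = dispatch_matrix(tokens_per_gpu=1024)
total = sum(map(sum, sends))
local = sum(sends[g][g] for g in range(NUM_GPUS))
print(f"token copies: {total}, sent to another GPU: {(total - local) / total:.1%}")
```

Real expert popularity is often skewed, which concentrates traffic on a few GPUs. That uneven case is the harder one a low-latency all-to-all fabric has to absorb.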

NVIDIA is not vague about what this requires. Its technical materials describe the Rubin GPU as delivering 50 PFLOPS of NVFP4 inference and 35 PFLOPS of NVFP4 training, with 22 TB/s of HBM4 bandwidth and 3.6 TB/s of NVLink bandwidth per GPU.
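Multiplying the quoted per-GPU figures out to a 72-GPU rack makes their scale concrete. The sketch below is plain arithmetic on those numbers. It assumes peak rates simply add across GPUs, which real workloads will not achieve. It also computes the standard roofline "ridge point," the ratio of peak compute to memory bandwidth. A kernel that performs fewer operations per byte read from HBM than this is bandwidth-bound rather than compute-bound.

```python
# Per-GPU peak figures as quoted from NVIDIA's Rubin materials.
NVFP4_INFERENCE_PFLOPS = 50
HBM4_TBPS = 22
NVLINK_TBPS = 3.6
GPUS_PER_RACK = 72

# Naive rack totals: assume every GPU runs at peak simultaneously.
rack_pflops = NVFP4_INFERENCE_PFLOPS * GPUS_PER_RACK  # 3600 PFLOPS = 3.6 EFLOPS
rack_nvlink_tbps = NVLINK_TBPS * GPUS_PER_RACK        # ~259.2 TB/s
rack_hbm_tbps = HBM4_TBPS * GPUS_PER_RACK             # 1584 TB/s

# Roofline ridge point: NVFP4 operations per HBM byte needed to saturate compute.
ridge_flops_per_byte = NVFP4_INFERENCE_PFLOPS * 1e15 / (HBM4_TBPS * 1e12)

print(f"rack NVFP4 inference: {rack_pflops / 1000:.1f} EFLOPS")
print(f"rack NVLink bandwidth: {rack_nvlink_tbps:.1f} TB/s")
print(f"rack HBM4 bandwidth: {rack_hbm_tbps} TB/s")
print(f"HBM ridge point: {ridge_flops_per_byte:.0f} FLOPs/byte")
```

On these figures a kernel needs roughly 2,300 NVFP4 operations per HBM byte to keep the math units busy. Small-batch token decoding typically falls well short of that bar, which is why memory bandwidth matters as much as peak FLOPS for inference.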

The point of that bandwidth is not headline-chasing. It is to prevent a rack from behaving like 72 loosely connected accelerators that stall on communication. NVIDIA wants the rack to function as a single engine because that is what it will take to drive down cost per token at scale.

The New Idea NVIDIA Is Elevating: Inference Context Memory as Infrastructure

If there is one genuinely new concept in the Rubin announcements, it is the elevation of context memory, and the admission that GPU memory alone will not carry the next wave of inference.

NVIDIA describes a new tier called NVIDIA Inference Context Memory Storage, powered by BlueField-4, designed to persist and share inference state (such as KV caches) across requests and nodes for long-context and agentic workloads. NVIDIA says this AI-native context tier can boost tokens per second by up to 5× and improve power efficiency by up to 5× compared with traditional storage approaches.

The implication is clear: the path to cheaper inference is not just faster GPUs. It is treating inference state as a reusable asset, one that can live beyond a single GPU’s memory window, be accessed quickly, and eliminate redundant computation.
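NVIDIA's materials, as summarized here, do not describe the interface of the context memory tier, so the sketch below is not its API. It is a toy version of the general idea, prefix (KV) caching, similar in spirit to the block-hashed prefix caches in open-source inference servers such as vLLM. When two requests share a system prompt, the KV state for that prompt is computed once, and the second request prefills only its own new tokens. The class name, block size, and string placeholders standing in for KV tensors are all hypothetical.

```python
import hashlib

BLOCK_TOKENS = 4  # hypothetical block size; production caches use larger blocks


class PrefixKVCache:
    """Toy shared store for per-block KV state, keyed by token prefix.

    Each block's key hashes the *whole* prefix up to the end of that block,
    so a block is reused only when every earlier token also matches. SHA-256
    (rather than Python's per-process hash()) keeps keys stable across
    processes, as a store shared between nodes would need.
    """

    def __init__(self) -> None:
        self.store: dict[str, str] = {}
        self.computed_tokens = 0  # tokens that actually had to be prefilled

    @staticmethod
    def _key(prefix: list[int]) -> str:
        return hashlib.sha256(repr(prefix).encode()).hexdigest()

    def prefill(self, tokens: list[int]) -> int:
        """Process a prompt; return how many tokens were served from cache."""
        reused = 0
        full = len(tokens) - len(tokens) % BLOCK_TOKENS
        for end in range(BLOCK_TOKENS, full + 1, BLOCK_TOKENS):
            key = self._key(tokens[:end])
            if key in self.store:
                reused += BLOCK_TOKENS
            else:
                # Placeholder for running attention over this block on a GPU.
                self.store[key] = f"kv[{end - BLOCK_TOKENS}:{end}]"
                self.computed_tokens += BLOCK_TOKENS
        # A trailing partial block is always recomputed in this sketch.
        self.computed_tokens += len(tokens) - full
        return reused


cache = PrefixKVCache()
shared_prefix = list(range(16))  # e.g. a common 16-token system prompt
a = cache.prefill(shared_prefix + [100, 101, 102, 103])
b = cache.prefill(shared_prefix + [200, 201, 202, 203])
print(a, b, cache.computed_tokens)  # prints: 0 16 24
```

Keying each block by its full prefix is what makes sharing safe. A token's attention state depends on every token before it, so identical text at a different position cannot reuse a cached block.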

If the Blackwell generation was about feeding the transformer, Rubin adds the ability to remember what has already been fed, and to use that memory to make everything run faster.

Security and Reliability Move From Checkboxes to Rack-Scale Requirements

Two themes run consistently through the Rubin announcements: confidential computing and resilience.

NVIDIA says Rubin introduces third-generation confidential computing, positioning Vera Rubin NVL72 as the first rack-scale platform to deliver NVIDIA Confidential Computing across CPU, GPU, and NVLink domains.

It also highlights a second-generation RAS engine spanning GPU, CPU, and NVLink, with real-time health monitoring, fault tolerance, and modular, “cable-free” trays designed to speed servicing.

This is the AI factory idea expressed in operational terms. NVIDIA is treating uptime and serviceability as performance features, because in a world of continuous inference, every minute of downtime is a direct tax on token economics.
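A simple cost model makes the downtime argument concrete. A rack's cost accrues by the hour whether or not it is serving, so lost availability shows up directly as a higher cost per delivered token. The hourly cost and throughput below are hypothetical placeholders, not NVIDIA or market figures.

```python
def effective_cost_per_million_tokens(
    hourly_cost_usd: float, tokens_per_sec: float, availability: float
) -> float:
    """Cost per million *delivered* tokens for a rack that is up part of the time.

    The hourly cost is paid regardless; downtime only shrinks the number of
    tokens that cost is spread across.
    """
    tokens_per_hour = tokens_per_sec * 3600 * availability
    return hourly_cost_usd / tokens_per_hour * 1_000_000


# Hypothetical inputs for illustration only.
HOURLY_COST = 300.0          # USD per rack-hour (assumed)
TOKENS_PER_SEC = 1_000_000   # sustained rack throughput (assumed)

for availability in (0.999, 0.99, 0.95):
    cost = effective_cost_per_million_tokens(HOURLY_COST, TOKENS_PER_SEC, availability)
    print(f"availability {availability:.1%}: ${cost:.4f} per million tokens")

lost_per_minute = TOKENS_PER_SEC * 60  # tokens forgone for each minute offline
```

Because availability sits in the denominator, cost per token scales as 1/availability. Serviceability features that shorten repairs therefore act on the same number as a throughput improvement.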

Networking Gets a Starring Role: Co-Packaged Optics and AI-Native Ethernet

Rubin treats networking not as a support layer, but as a primary design constraint.

On the Ethernet side, NVIDIA is pushing Spectrum-X Ethernet Photonics and co-packaged optics, claiming up to 5× improvements in power efficiency along with gains in reliability and uptime for AI workloads.

In its technical materials, NVIDIA describes Spectrum-6 as a 102.4 Tb/s switch chip engineered for the synchronized, bursty traffic patterns common in large AI clusters, and shows architectures that scale into the hundreds of terabits per second using co-packaged optics.
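Switch capacity translates into radix, meaning how many ports of a given speed one chip can offer. Radix in turn bounds how large a flat, non-blocking fabric can be. The arithmetic below takes only the 102.4 Tb/s figure from the text. The port speeds are illustrative. The two-tier formula is the textbook non-blocking leaf-spine (Clos) case and is not a description of NVIDIA's actual reference topologies.

```python
SWITCH_GBPS = 102_400  # Spectrum-6 capacity quoted above (102.4 Tb/s), in Gb/s


def ports_at(port_gbps: int) -> int:
    """Full-rate ports the switch chip can expose at a given port speed."""
    return SWITCH_GBPS // port_gbps


def two_tier_endpoints(radix: int) -> int:
    """Endpoints in a non-blocking two-tier leaf-spine built from radix-R switches.

    Each leaf uses half its ports for endpoints and half for spine uplinks;
    each spine port reaches a different leaf, so there are R leaves with R/2
    endpoints each.
    """
    return radix * (radix // 2)


for speed in (200, 400, 800, 1600):
    radix = ports_at(speed)
    print(f"{speed:>5} Gb/s: {radix:>3} ports, {two_tier_endpoints(radix):>6} endpoints in two tiers")
```

The quadratic term explains why radix matters. Doubling the ports per chip quadruples the number of endpoints a two-tier fabric can reach before a third tier, with its extra hops, optics, and power, becomes necessary.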

What is notable is not just what NVIDIA is promoting, but what it is no longer centering. InfiniBand, long the default fabric for high-performance AI and HPC clusters, still appears in the Rubin ecosystem, but it no longer dominates the narrative. Ethernet, long treated as the “good enough” alternative, is being recast as an AI-native fabric when paired with photonics, co-packaged optics, and tight system co-design.

The logic is simple. At scale, the network becomes either the accelerator or the brake. Rubin is NVIDIA’s attempt to remove the brake by making Ethernet behave like an AI-native fabric, rather than a generic data network.

DGX SuperPOD as the “Production Blueprint,” Not a Reference Design

NVIDIA is positioning DGX SuperPOD as the standard blueprint for scaling Rubin, as opposed to a conceptual reference design.

In its Rubin materials, the SuperPOD is defined by the integration of NVL72 or NVL8 systems, BlueField-4 DPUs, the Inference Context Memory Storage platform, ConnectX-9 networking, Quantum-X800 InfiniBand or Spectrum-X Ethernet fabrics, and Mission Control operations software.

NVIDIA also anchors that blueprint with a concrete scale marker. A SuperPOD configuration built from eight DGX Vera Rubin NVL72 systems (576 Rubin GPUs in total) is described as delivering 28.8 exaflops of FP4 performance and 600 TB of fast memory.
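The pod-level figures can be cross-checked against the per-GPU numbers quoted earlier. The 28.8 exaflops figure equals 576 GPUs × 50 PFLOPS, which suggests it is the sum of per-GPU NVFP4 inference peaks. That is an inference from the arithmetic, not something the materials state here. The text also does not define "fast memory," so the per-rack and per-GPU splits below are simple averages.

```python
RACKS = 8
GPUS_PER_RACK = 72
NVFP4_INFERENCE_PFLOPS_PER_GPU = 50  # per-GPU figure quoted earlier
POD_FAST_MEMORY_TB = 600             # pod-level figure quoted above

gpus = RACKS * GPUS_PER_RACK                                 # 576
pod_exaflops = gpus * NVFP4_INFERENCE_PFLOPS_PER_GPU / 1000  # 28.8
memory_per_rack_tb = POD_FAST_MEMORY_TB / RACKS              # 75.0
memory_per_gpu_tb = POD_FAST_MEMORY_TB / gpus                # ~1.04

print(f"{gpus} GPUs, {pod_exaflops:.1f} EF NVFP4, "
      f"{memory_per_rack_tb:.0f} TB/rack, {memory_per_gpu_tb:.2f} TB/GPU")
```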

This is not marketing theater. It is NVIDIA spelling out what a “minimum viable AI factory module” looks like in procurement terms: standardized racks, pods, and operating tooling, rather than one-off integration projects.

How Rubin Stacks Up: What Changes Versus Blackwell

NVIDIA’s own comparisons return to three core differences, all centered on cost and utilization:

- Economics lead the message: Up to 10× lower inference token cost and 4× fewer GPUs required for mixture-of-experts training versus Blackwell.
- Rack-scale coherence: NVLink 6 and NVL72 are positioned as the way to keep utilization high as model parallelism, expert routing, and long-context inference become more complex.
- Infrastructure tiers for inference: The new context memory storage tier is an explicit acknowledgment that next-generation inference is about state management and reuse, not just raw compute.

If Blackwell marked the peak of the “bigger GPU, bigger box” era, Rubin is NVIDIA’s attempt to make the rack the computer, and to make operations part of the architecture itself.

What Rubin Signals for AI Compute in 2026 and Beyond

The center of gravity in AI is shifting from a race to train the biggest models to the industrialization of inference.

Rubin aligns NVIDIA’s roadmap with a world building products, agents, and services that run continuously rather than as occasional demos. Cost per token becomes the metric CFOs understand, and NVIDIA is positioning itself to own that number the way it once owned training performance.

Data centers will increasingly be designed around AI racks, not the other way around.

Between liquid-cooling assumptions, serviceability as a design requirement, and photonics-heavy networking, Rubin points toward a more specialized facility baseline: one that looks less like a generic data hall and more like a purpose-built factory floor for tokens. The AI factory will not remain a slogan; it will define this generation of infrastructure.

The platform story also tightens NVIDIA’s grip, because the system itself becomes the differentiator. With six interdependent chips, rack-scale security, AI-native context storage, and Mission Control operations, Rubin is intentionally hard to unbundle. Even if competitors match a GPU on raw math, NVIDIA is betting they will not match a fully integrated AI factory on cost or performance.

And once inference state is treated as shared infrastructure, storage and networking decisions change. Economic value shifts toward systems that can reuse context efficiently, keeping GPUs busy without recomputing the world every session.