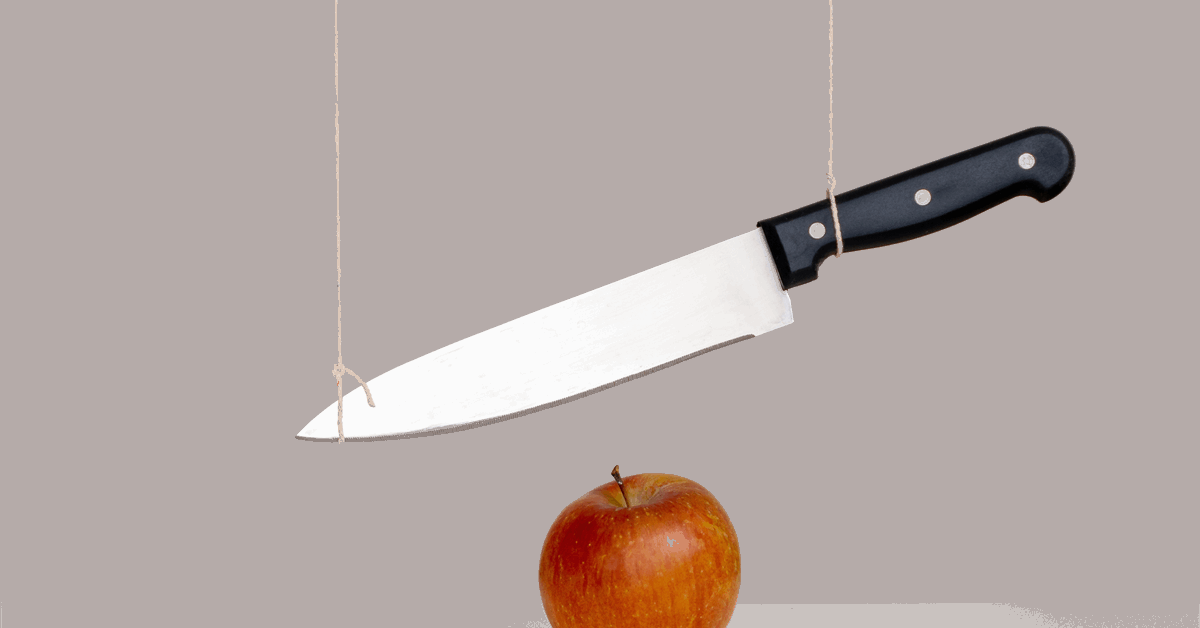

In a report sent to Rigzone by the Skandinaviska Enskilda Banken AB (SEB) team on Tuesday morning, Bjarne Schieldrop, chief commodities analyst at the company, said “the Damocles Sword of OPEC+” is “hanging over U.S. shale oil producers”.

“OPEC+ decided yesterday [Monday] to stick with its plan – to lift production by 120,000 barrels per day every month for 18 months starting April,” Schieldrop noted in the report.

“Again and again, it has pushed the start of the production increase further into the future. It could do it yet again. That will depend on circumstances of one – global oil demand growth and two – non-OPEC+ supply growth,” he added.

In the report, Schieldrop said “all oil producers in the world know that OPEC+ has … five to six million barrels per day of reserve capacity at hand” and noted that the group “wants to return two to three million barrels per day of this reserve to the market to get back to a more normal reserve level”.

“The now increasingly standing threat of OPEC+ to increase production in ‘just a couple of months’ is hanging over the world’s oil producers like a Damocles Sword. OPEC+ is essentially saying: ‘produce much more and we will do too, and you will get a much lower price’,” Schieldrop noted.

The chief commodities analyst at SEB went on to state in the report that, “if U.S. shale oil producers embarked on a strong supply growth path, heeding calls from Donald Trump for more production and a lower oil price, then OPEC+ would have no other choice than to lift production and let the oil price fall”.

“Trump would get a lower oil price as he wishes for, but he would not get higher U.S. oil production. U.S. shale oil producers would get a lower oil price, lower income, and no higher production,” he added.

“U.S. oil production might even fall in the face of a lower oil price with lower price and volume hurting U.S. trade balance as well as producers,” he continued.

Schieldrop said in the report that lower taxes on U.S. oil producers could lead to higher oil production but added that “no growth equals lots of profits”.

“Trump could reduce taxes on U.S. oil production to lower their marginal cost by up to $10 per barrel,” Schieldrop noted in the report.

“It could be seen as a four-year time-limited option to produce more oil at a lower cost as such tax-measures could be reversed by the next president in four years. It would be very tempting for them to produce more,” he added.

Rigzone has contacted OPEC, the American Petroleum Institute (API), the International Association of Oil & Gas Producers (IOGP), the Trump transition team, the White House, and the U.S. Department of Energy (DOE) for comment on the SEB report. At the time of writing, none of them had responded to Rigzone’s request.

A statement posted on OPEC’s website on December 5 highlighted that OPEC+’s required production level for 2025 and 2026 is 39.725 million barrels per day. That statement pointed out that the required production level for Saudi Arabia, Russia, Iraq, United Arab Emirates, Kuwait, Kazakhstan, Algeria, and Oman “is before applying any additional production adjustments”. It also noted that the UAE’s required production has been increased by 300,000 barrels per day and added that this increase will be phased in gradually from April 2025 through the end of September 2026.

A separate statement posted on the OPEC site on the same day revealed that Saudi Arabia, Russia, Iraq, United Arab Emirates, Kuwait, Kazakhstan, Algeria, and Oman decided to extend additional voluntary adjustments of 1.65 million barrels per day, which were announced in April 2023, until the end of December 2026.

That statement also revealed that those countries will extend additional voluntary adjustments of 2.2 million barrels per day, which were announced in November 2023, until the end of March 2025. These will be “gradually phased out on a monthly basis until the end of September 2026”, that statement highlighted. The statement also noted that “this monthly increase can be paused or reversed subject to market conditions”.

To contact the author, email [email protected]