
Researchers at Sentient Foundation have released Open Deep Search (ODS), an open-source framework that can match the quality of proprietary AI search solutions such as Perplexity and ChatGPT Search. ODS equips large language models (LLMs) with advanced reasoning agents that can use web search and other tools to answer questions.

For enterprises looking for customizable AI search tools, ODS offers a compelling, high-performance alternative to closed commercial solutions.

The AI search landscape

Modern AI search tools like Perplexity and ChatGPT Search can provide up-to-date answers by combining the knowledge and reasoning capabilities of LLMs with web search. However, these solutions are typically proprietary and closed-source, which makes them difficult to customize and adapt for specialized applications.

“Most innovation in AI search has happened behind closed doors. Open-source efforts have historically lagged in usability and performance,” Himanshu Tyagi, co-founder of Sentient, told VentureBeat. “ODS aims to close that gap, showing that open systems can compete with, and even surpass, closed counterparts on quality, speed, and flexibility.”

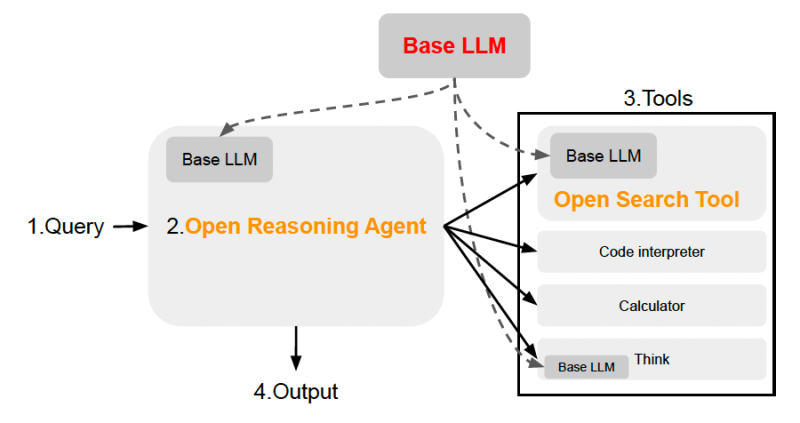

Open Deep Search (ODS) architecture

Open Deep Search (ODS) is designed as a plug-and-play system that can be integrated with both open-source models like DeepSeek-R1 and closed models such as GPT-4o and Claude.
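The plug-and-play idea can be illustrated with a minimal sketch that assumes a single text-in, text-out interface for the base model. The names `ChatModel`, `EchoModel` and `SearchAgent` are hypothetical and do not come from the ODS codebase; a real adapter would wrap a provider client for a model such as DeepSeek-R1, GPT-4o or Claude.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical minimal interface any base LLM adapter must satisfy."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend; a real adapter would call an LLM provider here."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class SearchAgent:
    """Agent that depends only on the ChatModel interface, not on a vendor SDK."""

    def __init__(self, model: ChatModel) -> None:
        self.model = model

    def answer(self, question: str) -> str:
        return self.model.complete(f"Answer concisely: {question}")


agent = SearchAgent(EchoModel())
print(agent.answer("What is ODS?"))  # echo: Answer concisely: What is ODS?
```

Because the agent depends only on the interface, switching models means writing a new adapter rather than changing the agent logic.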

ODS comprises two core components, both leveraging the chosen base LLM:

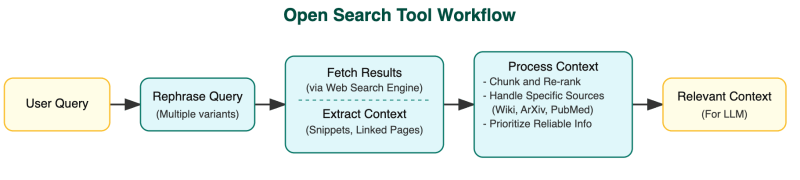

Open Search Tool: This component takes a query and retrieves information from the web that can be given to the LLM as context. Open Search Tool performs a few key actions to improve search results and make sure it provides relevant context to the model. First, it rephrases the original query in different ways to broaden the search coverage and capture diverse perspectives. The tool then fetches results from a search engine, extracts context from the top results (snippets and linked pages), and applies chunking and re-ranking techniques to filter for the most relevant content. It also has custom handling for specific sources like Wikipedia, arXiv and PubMed, and can be prompted to prioritize reliable sources when encountering conflicting information.
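The Open Search Tool's stages (rephrase, fetch, chunk, re-rank) can be sketched in a few functions. This is a toy built on stated assumptions: the in-memory `FAKE_INDEX` replaces a real search engine, fixed templates replace LLM-based rephrasing, and term-overlap scoring stands in for whatever re-ranker ODS actually uses. All function names are hypothetical.

```python
import re
from collections import Counter

# Hypothetical in-memory "web"; a real tool would query a search engine API.
FAKE_INDEX = {
    "https://en.wikipedia.org/wiki/Mount_Everest": (
        "Mount Everest is Earth's highest mountain above sea level. "
        "It lies in the Mahalangur Himal sub-range of the Himalayas."
    ),
    "https://example.com/travel": (
        "Travel tips for Nepal. Best seasons to visit. Trekking permits and costs."
    ),
}


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens (letters and digits only)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rephrase(query: str) -> list[str]:
    """Query variants; ODS uses the LLM for this, fixed templates are assumed here."""
    return [query, f"{query} facts", f"what is {query}"]


def fetch(query: str) -> list[str]:
    """Return pages that share at least one token with the query."""
    terms = set(tokenize(query))
    return [text for text in FAKE_INDEX.values() if terms & set(tokenize(text))]


def chunk(text: str, max_words: int = 12) -> list[str]:
    """Split a page into fixed-size windows of words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def rerank(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Order chunks by query-term overlap, a stand-in for a learned re-ranker."""
    q = Counter(tokenize(query))

    def score(c: str) -> int:
        return sum(min(q[t], n) for t, n in Counter(tokenize(c)).items())

    return sorted(chunks, key=score, reverse=True)[:top_k]


def open_search(query: str) -> list[str]:
    """Rephrase, fetch, deduplicate pages, chunk, then keep the best chunks."""
    pages = {page for variant in rephrase(query) for page in fetch(variant)}
    chunks = [c for page in pages for c in chunk(page)]
    return rerank(query, chunks)


print(open_search("highest mountain")[0])
```

Deduplicating pages across query variants matters because rephrasings often return the same documents.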

Open Reasoning Agent: This agent receives the user’s query and uses the base LLM and various tools (including the Open Search Tool) to formulate a final answer. Sentient provides two distinct agent architectures within ODS:

ODS-v1: This version employs a ReAct agent framework combined with Chain-of-Thought (CoT) reasoning. ReAct agents interleave reasoning steps (“thoughts”) with actions (like using the search tool) and observations (the outputs those tools return). ODS-v1 uses ReAct iteratively to arrive at an answer. If the ReAct agent struggles (as determined by a separate judge model), it falls back to CoT Self-Consistency, which samples several CoT responses from the model and picks the answer that appears most often.
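The ODS-v1 control flow (try ReAct, let a judge decide, otherwise fall back to self-consistency) reduces to a small amount of glue code. In this sketch the ReAct run, the judge and the CoT sampler are hypothetical callables standing in for LLM calls; only the majority vote is implemented concretely.

```python
from collections import Counter
from typing import Callable, Optional


def self_consistency(sample_cot: Callable[[], str], n: int = 5) -> str:
    """Sample n chain-of-thought answers and return the most frequent one."""
    answers = [sample_cot() for _ in range(n)]
    return Counter(answers).most_common(1)[0][0]


def answer_with_fallback(
    react: Callable[[], Optional[str]],
    judge: Callable[[str], bool],
    sample_cot: Callable[[], str],
    n: int = 5,
) -> str:
    """Run the ReAct agent; if it fails or the judge rejects it, use self-consistency."""
    result = react()
    if result is not None and judge(result):
        return result
    return self_consistency(sample_cot, n)


# Hypothetical stubs: the ReAct run gives up, and five CoT samples disagree.
samples = iter(["Paris", "Lyon", "Paris", "Paris", "Marseille"])
final = answer_with_fallback(
    react=lambda: None,
    judge=lambda ans: len(ans) > 0,
    sample_cot=lambda: next(samples),
)
print(final)  # Paris
```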

ODS-v2: This version leverages Chain-of-Code (CoC) and a CodeAct agent, implemented using the Hugging Face SmolAgents library. CoC uses the LLM’s ability to generate and execute code snippets to solve problems, while CodeAct uses code generation for planning actions. ODS-v2 can orchestrate multiple tools and agents, allowing it to tackle more complex tasks that may require sophisticated planning and potentially multiple search iterations.
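The core CodeAct idea, in which the model's actions are Python snippets that get executed and whose output feeds the next step, can be shown with a scripted stand-in for the LLM. This is not the SmolAgents API: `run_code_agent`, `scripted_llm`, `web_search` and `final_answer` are hypothetical names, and `exec` runs here without the sandboxing a production agent would need.

```python
import contextlib
import io
from typing import Callable


def web_search(query: str) -> str:
    """Hypothetical search tool returning a canned string."""
    return f"[stub result for '{query}']"


def scripted_llm(observations: list[str]) -> str:
    """Stand-in for the base LLM: emits one Python action per step."""
    if not observations:
        return 'obs = web_search("tallest building")\nprint(obs)'
    return 'final_answer("Summary: " + obs)'


def run_code_agent(
    llm: Callable[[list[str]], str],
    tools: dict[str, Callable[..., str]],
    max_steps: int = 5,
) -> str:
    """Execute each code action in a persistent namespace; stdout becomes the
    next observation, and calling final_answer ends the loop."""
    result: dict[str, str] = {}
    namespace: dict = dict(tools)
    namespace["final_answer"] = lambda value: result.setdefault("answer", str(value))
    observations: list[str] = []
    for _ in range(max_steps):
        code = llm(observations)
        buffer = io.StringIO()
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)  # unsandboxed: acceptable for a toy, unsafe in production
        if "answer" in result:
            return result["answer"]
        observations.append(buffer.getvalue())
    raise RuntimeError("no final answer within max_steps")


print(run_code_agent(scripted_llm, {"web_search": web_search}))
```

Because the namespace persists across steps, the second action can reuse the variable `obs` created by the first, which is one reason code actions suit multi-step plans.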

“While tools like ChatGPT or Grok offer ‘deep research’ via conversational agents, ODS operates at a different layer—more akin to the infrastructure behind Perplexity AI—providing the underlying architecture that powers intelligent retrieval, not just summaries,” Tyagi said.

Performance and practical results

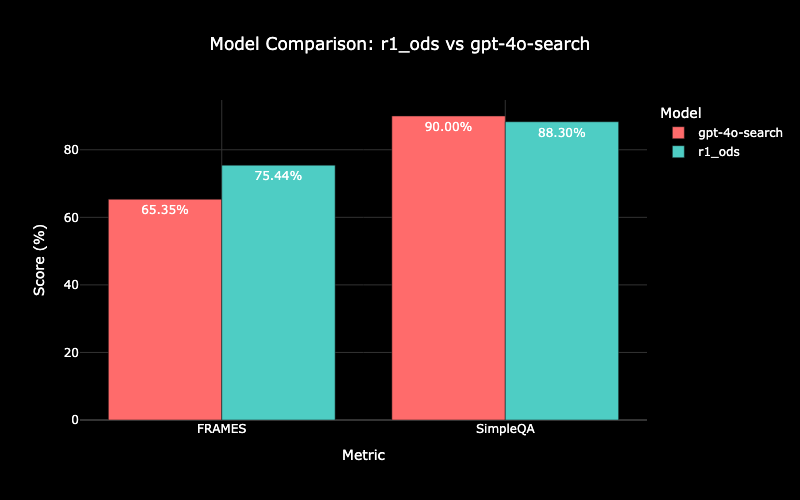

Sentient evaluated ODS by pairing it with the open-source DeepSeek-R1 model and testing it against popular closed-source competitors like Perplexity AI and OpenAI’s GPT-4o Search Preview, as well as standalone LLMs like GPT-4o and Llama-3.1-70B. They used the FRAMES and SimpleQA question-answering benchmarks, adapting them to evaluate the accuracy of search-enabled AI systems.

The results demonstrate ODS’s competitiveness. Both ODS-v1 and ODS-v2, when combined with DeepSeek-R1, outperformed Perplexity’s flagship products. Notably, ODS-v2 paired with DeepSeek-R1 surpassed the GPT-4o Search Preview on the complex FRAMES benchmark and nearly matched it on SimpleQA.

An interesting observation was the framework’s efficiency. The reasoning agents in both ODS versions learned to use the search tool judiciously, often deciding whether an additional search was necessary based on the quality of the initial results. For instance, ODS-v2 used fewer web searches on the simpler SimpleQA tasks compared to the more complex, multi-hop queries in FRAMES, optimizing resource consumption.
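The behavior of searching again only when the first results look weak can be approximated with a loop. In ODS the reasoning agent makes this decision itself; here a numeric relevance threshold is an assumed stand-in, and `fake_search` returns made-up scores purely to exercise the logic.

```python
from typing import Callable

Result = tuple[str, float]


def adaptive_search(
    query: str,
    search: Callable[[str], list[Result]],
    refine: Callable[[str], str],
    threshold: float = 0.7,
    max_searches: int = 3,
) -> tuple[list[Result], int]:
    """Search once; search again with a refined query only while the best
    relevance score stays below threshold. Returns (results, searches used)."""
    results: list[Result] = []
    searches = 0
    current = query
    while searches < max_searches:
        results = search(current)
        searches += 1
        best = max((score for _, score in results), default=0.0)
        if best >= threshold:
            break
        current = refine(current)
    return results, searches


def fake_search(q: str) -> list[Result]:
    """Made-up scores: 'capital' queries look easy; others improve per refinement."""
    score = 0.9 if "capital" in q else 0.3 + 0.25 * q.count("+")
    return [(f"doc for {q}", score)]


def refine(q: str) -> str:
    """Hypothetical refinement step; an agent would rewrite the query with the LLM."""
    return q + " +detail"


print(adaptive_search("capital of France", fake_search, refine)[1])  # 1
print(adaptive_search("who founded the lab behind X", fake_search, refine)[1])  # 3
```

The `max_searches` cap keeps a hard query from looping indefinitely when results never clear the threshold.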

Implications for the enterprise

For enterprises seeking powerful AI reasoning capabilities grounded in real-time information, ODS presents a promising solution that offers a transparent, customizable, and high-performing alternative to proprietary AI search systems. The ability to plug in preferred open-source LLMs and tools gives organizations greater control over their AI stack and helps them avoid vendor lock-in.

“ODS was built with modularity in mind,” Tyagi said. “It selects which tools to use dynamically, based on descriptions provided in the prompt. This means it can interact with unfamiliar tools fluently—as long as they’re well-described—without requiring prior exposure.”
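Description-driven tool selection roughly means rendering each tool's name and description into the prompt, then dispatching on whichever tool name the model replies with. The `Tool` class, the `'tool_name: input'` reply format and both example tools are assumptions for illustration, not ODS's actual prompt format.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str
    run: Callable[[str], str]


def build_prompt(question: str, tools: list[Tool]) -> str:
    """Render every tool's name and description so the model can pick one."""
    listing = "\n".join(f"- {t.name}: {t.description}" for t in tools)
    return (
        f"You can call these tools:\n{listing}\n"
        "Reply with 'tool_name: input'.\n"
        f"Question: {question}"
    )


def dispatch(reply: str, tools: list[Tool]) -> str:
    """Parse a 'tool_name: input' reply and run the matching tool."""
    name, _, arg = reply.partition(":")
    registry = {t.name: t for t in tools}
    tool = registry.get(name.strip())
    if tool is None:
        raise KeyError(f"unknown tool: {name.strip()}")
    return tool.run(arg.strip())


tools = [
    Tool("web_search", "Search the web and return text snippets.",
         lambda q: f"results for {q}"),
    Tool("adder", "Add integers separated by '+', e.g. '2 + 3'.",
         lambda expr: str(sum(int(x) for x in expr.split("+")))),
]

print(build_prompt("What is 2 + 3?", tools))
# A model reading the descriptions might reply like this (hypothetical reply):
print(dispatch("adder: 2 + 3", tools))  # 5
```

Every registered tool adds a line to the prompt, which is one plausible reason an oversized toolset can degrade performance.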

However, he acknowledged that ODS performance can degrade when the toolset becomes bloated, “so careful design matters.”

Sentient has released the code for ODS on GitHub.

“Initially, the strength of Perplexity and ChatGPT was their advanced technology, but with ODS, we’ve leveled this technological playing field,” Tyagi said. “We now aim to surpass their capabilities through our ‘open inputs and open outputs’ strategy, enabling users to seamlessly integrate custom agents into Sentient Chat.”
