The shift to ‘energy sovereignty’

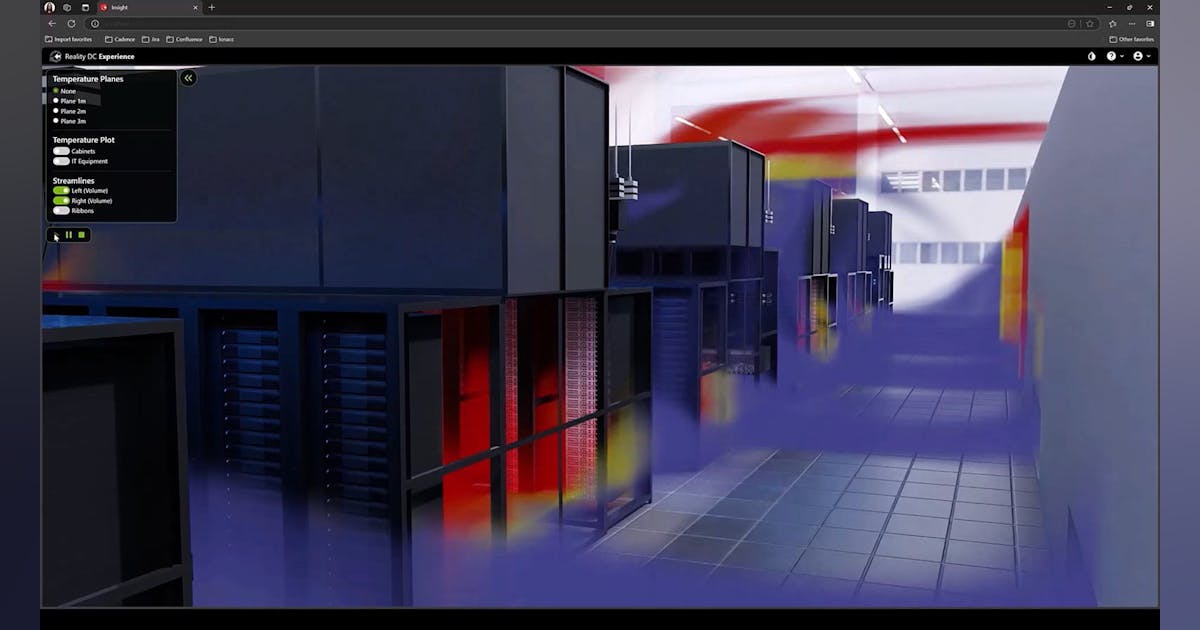

Analysts say the move reflects a fundamental shift in data center strategy, from “fiber-first” to “power-first” site selection.

“Historically, data centers were built near internet exchange points and urban centers to minimize latency,” said Ashish Banerjee, senior principal analyst at Gartner. “However, as AI training requirements reach the gigawatt scale, OpenAI is signaling that they will prioritize regions with ‘energy sovereignty’, places where they can build proprietary generation and transmission, rather than fighting for scraps on an overtaxed public grid.”

For network architecture, this means a massive expansion of the “middle mile.” With these behemoth data centers sited in energy-rich but remote locations, the industry will have to invest heavily in long-haul, high-capacity dark fiber to connect these “power islands” back to the edge.

“We should expect a bifurcated network: a massive, centralized core for ‘cold’ model training located in the wilderness, and a highly distributed edge for ‘hot’ real-time inference located near the users,” Banerjee added.

Manish Rawat, a semiconductor analyst at TechInsights, also noted that the benefits may come at the cost of greater architectural complexity.

“On the network side, this pushes architectures toward fewer mega-hubs and more regionally distributed inference and training clusters, connected via high-capacity backbone links,” Rawat said. “The trade-off is higher upfront capex but greater control over scalability timelines, reducing dependence on slow-moving utility upgrades.”