In April, Paul Graham, the founder of the tech startup accelerator Y Combinator, sent a tweet in response to former YC president and current OpenAI CEO Sam Altman. Altman had just bid a public goodbye to GPT-4 on X, and Graham had a follow-up question.

“If you had [GPT-4’s model weights ] etched on a piece of metal in the most compressed form,” Graham wrote, referring to the values that determine the model’s behavior. “how big would the piece of metal have to be? This is a mostly serious question. These models are history, and by default digital data evaporates.”

There is no question that OpenAI pulled off something historic with its release of ChatGPT 3.5 in 2022. It set in motion an AI arms race that has already changed the world in a number of ways and seems poised to have an even greater long-term effect than the short-term disruptions to things like education and employment that we are already beginning to see. How that turns out for humanity is something we are still reckoning with and may be for quite some time. But a pair of recent books both attempt to get their arms around it with accounts of what two leading technology journalists saw at the OpenAI revolution.

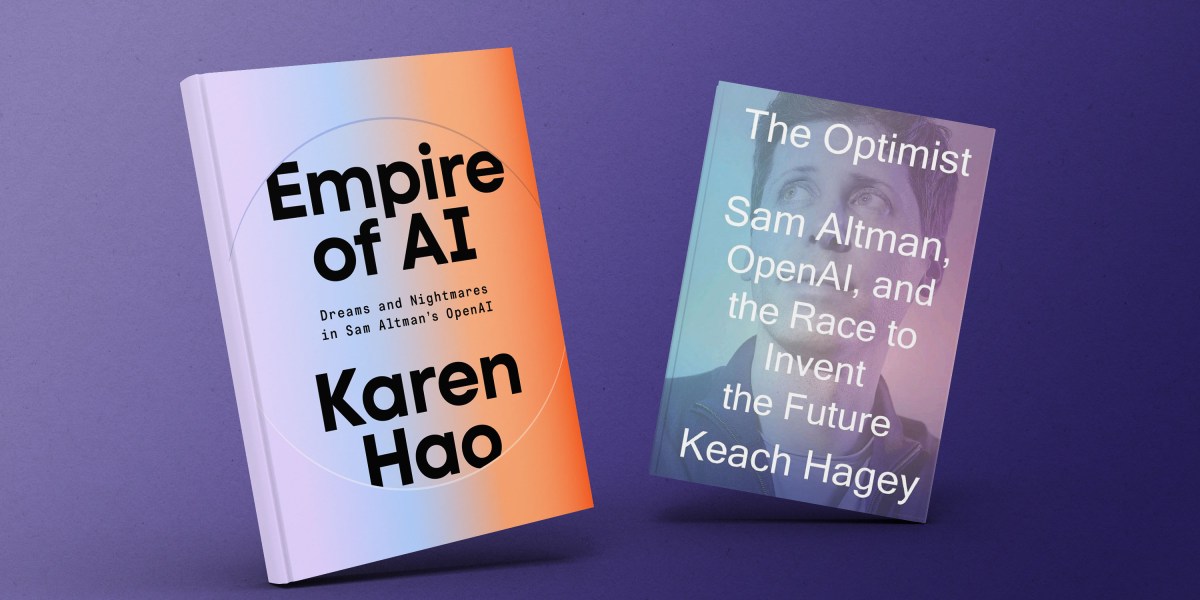

In Empire of AI: Dreams and Nightmares in Sam Altman’s OpenAI, Karen Hao of the Atlantic tells the story of the company’s rise to power and its far-reaching impact all over the world. Meanwhile, The Optimist: Sam Altman, OpenAI, and the Race to Invent the Future, by the Wall Street Journal’s Keach Hagey, homes in more on Altman’s personal life, from his childhood through the present day, in order to tell the story of OpenAI. Both paint complex pictures and show Altman in particular as a brilliantly effective yet deeply flawed creature of Silicon Valley—someone capable of always getting what he wants, but often by manipulating others.

Hao, who was formerly a reporter with MIT Technology Review, began reporting on OpenAI while at this publication and remains an occasional contributor. One chapter of her book grew directly out of that reporting. And in fact, as Hao says in the acknowledgments of Empire of AI, some of her reporting for MIT Technology Review, a series on AI colonialism, “laid the groundwork for the thesis and, ultimately, the title of this book.” So you can take this as a kind of disclaimer that we are predisposed to look favorably on Hao’s work.

With that said, Empire of AI is a powerful work, bristling not only with great reporting but also with big ideas. This comes across in service to two main themes.

The first is simple: It is the story of ambition overriding ethics. The history of OpenAI as Hao tells it (and as Hagey does too) is very much a tale of a company that was founded on the idealistic desire to create a safety-focused artificial general intelligence but instead became more interested in winning. This is a story we’ve seen many times before in Big Tech. See Theranos, which was going to make diagnostics easier, or Uber, which was founded to break the cartel of “Big Taxi.” But the closest analogue might be Google, which went from “Don’t be evil” to (at least in the eyes of the courts) illegal monopolist. For that matter, consider how Google went from holding off on releasing its language model as a consumer product due to an abundance of caution, to rushing a chatbot out the door to catch up with and beat OpenAI. In Silicon Valley, no matter what one’s original intent, it always comes back to winning.

The second theme is more complex and forms the book’s thesis about what Hao calls AI colonialism. The idea is that the large AI companies act like traditional empires, siphoning wealth from the bottom rungs of society in the forms of labor, creative works, raw materials, and the like to fuel their ambition and enrich those at the top of the ladder. “I’ve found only one metaphor that encapsulates the nature of what these AI power players are: empires,” she writes.

“During the long era of European colonialism, empires seized and extracted resources that were not their own and exploited the labor of the people they subjugated to mine, cultivate, and refine those resources for the empires’ enrichment.” She goes on to chronicle her own growing disillusionment with the industry. “With increasing clarity,” she writes, “I realized that the very revolution promising to bring a better future was instead, for people on the margins of society, reviving the darkest remnants of the past.”

To document this, Hao steps away from her desk and goes out into the world to see the effects of this empire as it sprawls across the planet. She travels to Colombia to meet with data labelers tasked with teaching AI what various images show, one of whom she describes sprinting back to her apartment for the chance to make a few dollars. She documents how workers in Kenya who performed data-labeling content moderation for OpenAI came away traumatized by seeing so much disturbing material. In Chile she documents how the industry extracts precious resources—water, power, copper, lithium—to build out data centers.

She lands on the ways people are pushing back against the empire of AI across the world. Hao draws lessons from New Zealand, where Maori people are attempting to save their language using a small language model of their own making. Trained on volunteers’ voice recordings and running on just two graphics processing units, or GPUs, rather than the thousands employed by the likes of OpenAI, it’s meant to benefit the community, not exploit it.

Hao writes that she is not against AI. Rather: “What I reject is the dangerous notion that broad benefit from AI can only be derived from—indeed will ever emerge from—a vision of the technology that requires the complete capitulation of our privacy, our agency, and our worth, including the value of our labor and art, toward an ultimately imperial centralization project … [The New Zealand model] shows us another way. It imagines how AI could be exactly the opposite. Models can be small and task-specific, their training data contained and knowable, ridding the incentives for widespread exploitative and psychologically harmful labor practices and the all-consuming extractivism of producing and running massive supercomputers.”

Hagey’s book is more squarely focused on Altman’s ambition, which she traces back to his childhood. Yet interestingly, she also zeroes in on the OpenAI CEO’s attempt to create an empire. Indeed, “Altman’s departure from YC had not slowed his civilization-building ambitions,” Hagey writes. She goes on to chronicle how Altman, who had previously mulled a run for governor of California, set up experiments with income distribution via Tools for Humanity, the parent company of Worldcoin. Hagey quotes Altman saying of it, “I thought it would be interesting to see … just how far technology could accomplish some of the goals that used to be done by nation-states.”

Overall, The Optimist is the more straightforward business biography of the two. Hagey has packed it full with scoops and insights and behind-the-scenes intrigue. It is immensely readable as a result, especially in the second half ,when OpenAI really takes over the story. Hagey also seems to have been given far more access to Altman and his inner circles, personal and professional, than Hao did, and that allows for a fuller telling of the CEO’s story in places. For example, both writers cover the tragic story of Altman’s sister Annie, her estrangement from the family, and her accusations in particular about suffering sexual abuse at the hands of Sam (something he and the rest of the Altman family vehemently deny). Hagey’s telling provides a more nuanced picture of the situation, with more insight into family dynamics.

Hagey concludes by describing Altman’s reckoning with his role in the long arc of human history and what it will mean to create a “superintelligence.” His place in that sweep is something that clearly has consumed the CEO’s thoughts. When Paul Graham asked about preserving GPT-4, for example, Altman had a response at the ready. He replied that the company had already considered this, and that the sheet of metal would need to be 100 meters square.