
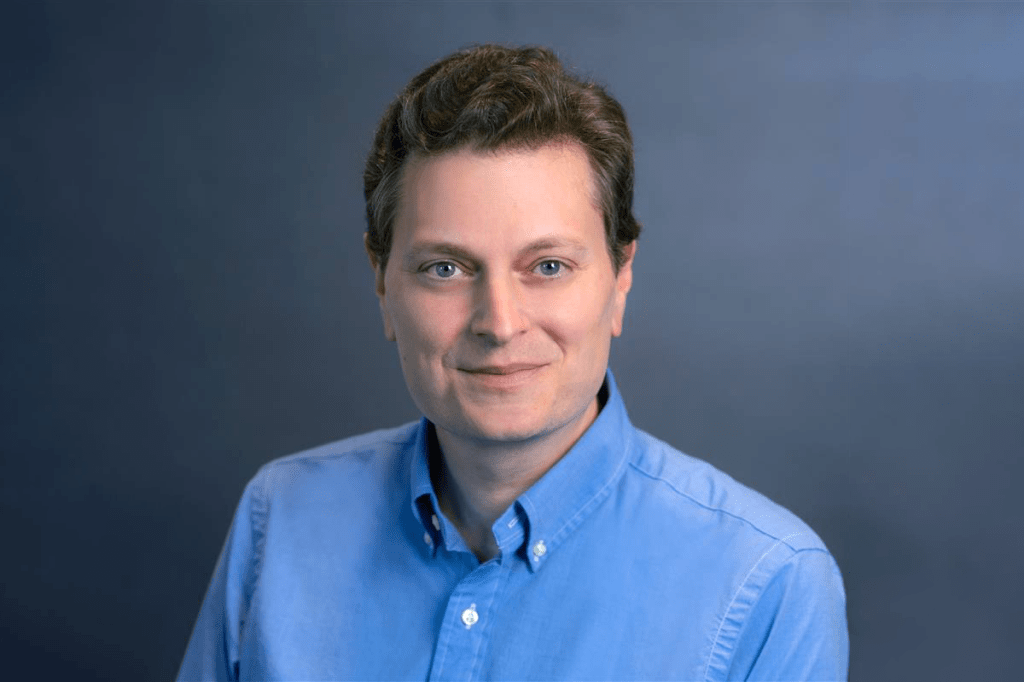

At VentureBeat’s Transform 2025 conference, Olivier Godement, Head of Product for OpenAI’s API platform, provided a behind-the-scenes look at how enterprise teams are adopting and deploying AI agents at scale.

In a 20-minute panel discussion I hosted exclusively with Godement, the former Stripe researcher and current OpenAI API boss unpacked OpenAI’s latest developer tools, the Responses API and the Agents SDK, while highlighting real-world patterns, security considerations, and return-on-investment examples from early adopters like Stripe and Box.

For enterprise leaders unable to attend the session live, here are the eight most important takeaways:

Agents Are Rapidly Moving From Prototype to Production

According to Godement, 2025 marks a real shift in how AI is being deployed at scale. With over a million monthly active developers now using OpenAI’s API platform globally, and token usage up 700% year over year, AI is moving beyond experimentation.

“It’s been five years since we launched essentially GPT-3… and man, the past five years has been pretty wild.”

Godement emphasized that current demand isn’t just about chatbots anymore. “AI use cases are moving from simple Q&A to actually use cases where the application, the agent, can do stuff for you.”

This shift prompted OpenAI to launch two major developer-facing tools in March: the Responses API and the Agents SDK.

When to Use Single Agents vs. Sub-Agent Architectures

A major theme was architectural choice. Godement noted that single-agent loops, which encapsulate full tool access and context in one model, are conceptually elegant but often impractical at scale.

“Building accurate and reliable single agents is hard. Like, it’s really hard.”

As complexity increases—more tools, more possible user inputs, more logic—teams often move toward modular architectures with specialized sub-agents.

“A practice which has emerged is to essentially break down the agents into multiple sub-agents… You would do separation of concerns like in software.”

These sub-agents function like roles in a small team: a triage agent classifies intent, tier-one agents handle routine issues, and others escalate or resolve edge cases.
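To make the triage pattern concrete, here is a minimal Python sketch of separation of concerns between sub-agents. It is illustrative only: the agent functions, the keyword-based `classify_intent` rule, and the canned replies are hypothetical stand-ins for what would, in practice, be separate model calls with their own prompts and tools (OpenAI’s Agents SDK, for instance, expresses this kind of delegation as handoffs between agents).

```python
from typing import Callable

# Hypothetical tier-one sub-agents. In production each would wrap its own
# model call, prompt, and tool set; plain functions keep the routing visible.
def billing_agent(message: str) -> str:
    return "tier-1 billing: resolved invoice question"

def tech_support_agent(message: str) -> str:
    return "tier-1 tech: sent reset instructions"

def escalation_agent(message: str) -> str:
    return "escalated to human specialist"

# Registry of intents the triage agent knows how to hand off.
ROUTES: dict[str, Callable[[str], str]] = {
    "billing": billing_agent,
    "technical": tech_support_agent,
}

def classify_intent(message: str) -> str:
    """Keyword rule standing in for a model-based intent classifier."""
    text = message.lower()
    if any(word in text for word in ("invoice", "refund", "charge")):
        return "billing"
    if any(word in text for word in ("password", "login", "error")):
        return "technical"
    return "unknown"

def triage(message: str) -> str:
    """Classify the request, then hand it to the matching sub-agent.

    Anything the triage step cannot place goes to the escalation agent.
    """
    intent = classify_intent(message)
    handler = ROUTES.get(intent, escalation_agent)
    return handler(message)
```

Keeping each role in its own function means a new intent can be supported by registering another handler in `ROUTES`, without touching the triage logic itself.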

Why the Responses API Is a Step Change

Godement positioned the Responses API as a foundational evolution in developer tooling. Previously, developers manually orchestrated sequences of model calls. Now, that orchestration is handled internally.

“The Responses API is probably the biggest new layer of abstraction we introduced since pretty much GPT-3.”

It allows developers to express intent, not just configure model flows. “You care about returning a really good response to the customer… the Response API essentially handles that loop.”

It also includes built-in capabilities for knowledge retrieval, web search, and function calling—tools that enterprises need for real-world agent workflows.
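For readers who have not written one, the sketch below shows the kind of hand-rolled orchestration loop Godement says developers previously managed themselves: call the model, run any tool it requests, feed the result back, and repeat until it produces a final answer. Everything here is hypothetical and self-contained. `fake_model` is a stub in place of a real model call, `lookup_order` is an invented tool, and the message format is illustrative, not OpenAI’s wire format.

```python
import json
from typing import Any

# Hypothetical tool the model may request; a real one might query an order database.
def lookup_order(order_id: str) -> dict[str, Any]:
    return {"order_id": order_id, "status": "shipped"}

TOOLS = {"lookup_order": lookup_order}

def fake_model(messages: list[dict[str, Any]]) -> dict[str, Any]:
    """Stub model: requests one tool call, then answers from the tool output."""
    last = messages[-1]
    if last["role"] == "user":
        return {"type": "tool_call", "name": "lookup_order",
                "arguments": json.dumps({"order_id": "A123"})}
    if last["role"] == "tool":
        result = json.loads(last["content"])
        return {"type": "final",
                "content": f"Order {result['order_id']} has {result['status']}."}
    raise ValueError("unexpected conversation state")

def run_agent_loop(user_input: str, max_steps: int = 5) -> str:
    """Manual orchestration: alternate model calls and tool calls until done."""
    messages: list[dict[str, Any]] = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = fake_model(messages)
        if reply["type"] == "final":
            return reply["content"]
        # Execute the requested tool and append its output for the next model call.
        tool = TOOLS[reply["name"]]
        output = tool(**json.loads(reply["arguments"]))
        messages.append({"role": "assistant", "content": None, "tool_call": reply})
        messages.append({"role": "tool", "content": json.dumps(output)})
    raise RuntimeError("agent did not finish within max_steps")
```

The `max_steps` cap and the bookkeeping of the `messages` list are exactly the plumbing a higher-level API can absorb, leaving the developer to state what a good response looks like.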

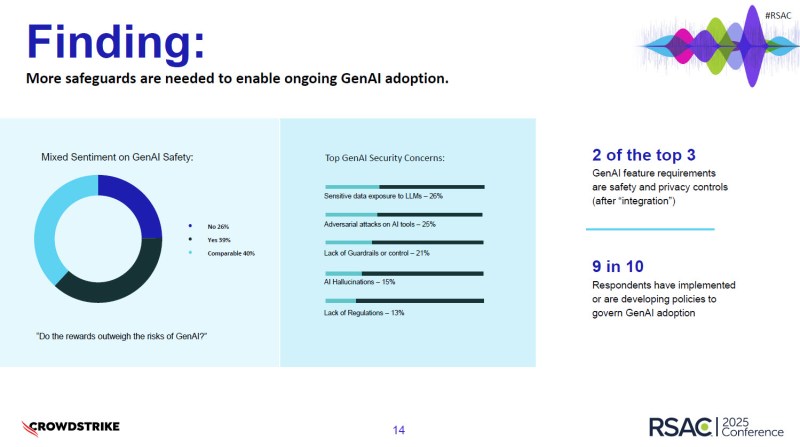

Observability and Security Are Built In

Security and compliance were top of mind. Godement cited key guardrails that make OpenAI’s stack viable for regulated sectors like finance and healthcare:

- Policy-based refusals

- SOC-2 logging

- Data residency support

Evaluation is where Godement sees the biggest gap between demo and production.

“My hot take is that model evaluation is probably the biggest bottleneck to massive AI adoption.”

OpenAI now includes tracing and eval tools with the API stack to help teams define what success looks like and track how agents perform over time.

“Unless you invest in evaluation… it’s really hard to build that trust, that confidence that the model is being accurate, reliable.”
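Godement did not walk through a specific evaluation method, but the basic idea of defining success up front can be sketched in a few lines of Python. In this hypothetical example, `EVAL_CASES` pairs inputs with the labels a team would accept as correct, `toy_intent_agent` stands in for a real agent, and `evaluate` reports a pass rate plus the failing cases. Re-running the same cases after each prompt or model change is one simple way to track performance over time.

```python
from typing import Callable

# Hypothetical labeled cases: (input, expected outcome).
EVAL_CASES: list[tuple[str, str]] = [
    ("Refund my duplicate charge", "billing"),
    ("I can't log in", "technical"),
    ("What's your office address?", "unknown"),
]

def toy_intent_agent(message: str) -> str:
    """Stand-in agent; a real one would call a model."""
    text = message.lower()
    if "refund" in text or "charge" in text:
        return "billing"
    if "log in" in text or "password" in text:
        return "technical"
    return "unknown"

def evaluate(
    agent: Callable[[str], str], cases: list[tuple[str, str]]
) -> tuple[float, list[tuple[str, str, str]]]:
    """Return the pass rate and a list of (input, expected, actual) failures."""
    failures = []
    for prompt, expected in cases:
        actual = agent(prompt)
        if actual != expected:
            failures.append((prompt, expected, actual))
    score = (len(cases) - len(failures)) / len(cases)
    return score, failures
```

For example, `evaluate(toy_intent_agent, EVAL_CASES)` returns a score of `1.0` with no failures, while an agent that always answers `"unknown"` passes only one of the three cases.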

Early ROI Is Visible in Specific Functions

Some enterprise use cases are already delivering measurable gains. Godement shared examples from:

- Stripe, which uses agents to accelerate invoice handling, reporting “35% faster invoice resolution”

- Box, which launched knowledge assistants that enable “zero-touch ticket triage”

Other high-value use cases include customer support (including voice), internal governance, and knowledge assistants for navigating dense documentation.

What It Takes to Launch in Production

Godement emphasized the human factor in successful deployments.

“There is a small fraction of very high-end people who, whenever they see a problem and see a technology, they run at it.”

These internal champions don’t always come from engineering. What unites them is persistence.

“Their first reaction is, OK, how can I make it work?”

OpenAI sees many initial deployments driven by this group — people who pushed early ChatGPT use in the enterprise and are now experimenting with full agent systems.

He also pointed out a gap many overlook: domain expertise. “The knowledge in an enterprise… does not lie with engineers. It lies with the ops teams.”

Making agent-building tools accessible to non-developers is a challenge OpenAI aims to address.

What’s Next for Enterprise Agents

Godement offered a glimpse into the roadmap. OpenAI is actively working on:

- Multimodal agents that can interact via text, voice, images, and structured data

- Long-term memory for retaining knowledge across sessions

- Cross-cloud orchestration to support complex, distributed IT environments

These aren’t radical changes, but iterative layers that expand what’s already possible. “Once we have models that can think not only for a few seconds but for minutes, for hours… that’s going to enable some pretty mind-blowing use cases.”

Final Word: Reasoning Models Are Underhyped

Godement closed the session by reaffirming his belief that reasoning-capable models—those that can reflect before responding—will be the true enablers of long-term transformation.

“I still have conviction that we are pretty much at the GPT-2 or GPT-3 level of maturity of those models….We are still scratching the surface on what reasoning models can do.”

For enterprise decision makers, the message is clear: the infrastructure for agentic automation is here. What matters now is building a focused use case, empowering cross-functional teams, and being ready to iterate. The next phase of value creation lies not in novel demos but in durable systems, shaped by real-world needs and the operational discipline to make them reliable.
