The obvious answer would be Nvidia’s new GB200 systems, essentially one giant 72-GPU server. But those cost millions, face extreme supply shortages, and aren’t available everywhere, the researchers noted. Meanwhile, H100 and H200 systems are plentiful and relatively cheap.

The catch: running large models across multiple older systems has traditionally meant brutal performance penalties. “There are no viable cross-provider solutions for LLM inference,” the research team wrote, noting that existing libraries either lack AWS support entirely or suffer severe performance degradation on Amazon’s hardware.

TransferEngine aims to change that. “TransferEngine enables portable point-to-point communication for modern LLM architectures, avoiding vendor lock-in while complementing collective libraries for cloud-native deployments,” the researchers wrote.

How TransferEngine works

TransferEngine acts as a universal translator for GPU-to-GPU communication, according to the paper. It identifies the core functionality that different networking hardware has in common and exposes it through a single interface, so the same code runs on each.
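To make the "common interface" idea concrete, here is a minimal Python sketch. It is not TransferEngine's actual API: the names `Transport`, `InMemoryTransport`, and `send_blocks` are hypothetical, and the backend simply records payloads in memory instead of driving a network card. The point is only that calling code depends on the shared interface, not on the hardware underneath.

```python
from abc import ABC, abstractmethod


class Transport(ABC):
    """Hypothetical interface: the one operation every backend must offer."""

    @abstractmethod
    def write(self, peer: str, payload: bytes) -> None:
        """Deliver payload to the named peer."""


class InMemoryTransport(Transport):
    """Illustrative stand-in backend; a real one would talk to a NIC."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.delivered: dict[str, list[bytes]] = {}

    def write(self, peer: str, payload: bytes) -> None:
        # Record the payload per peer instead of sending it over a network.
        self.delivered.setdefault(peer, []).append(payload)


def send_blocks(transport: Transport, peer: str, blocks: list[bytes]) -> int:
    """Caller code sees only Transport, so any backend can be swapped in."""
    for block in blocks:
        transport.write(peer, block)
    return len(blocks)
```

Under these assumptions, one backend object could stand in for ConnectX-7 and another for EFA, and `send_blocks` would run unchanged against either.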

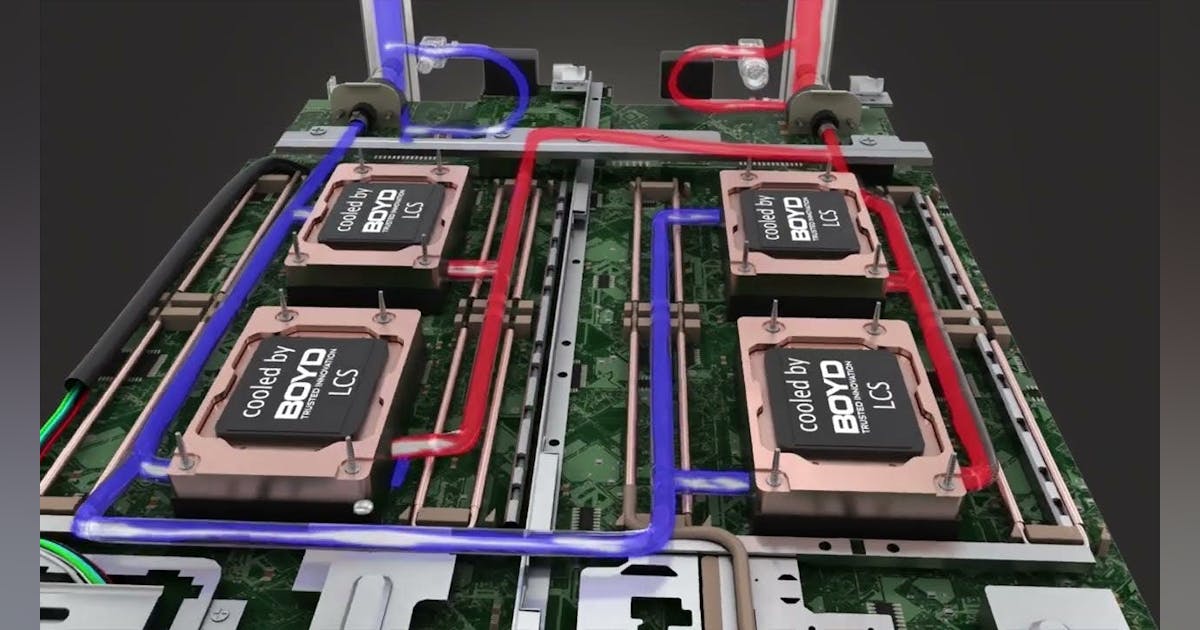

TransferEngine uses RDMA (Remote Direct Memory Access), a technology that lets machines move data directly between GPUs over the network without involving the main processor. Think of it as a dedicated express lane between chips.

Perplexity’s implementation achieved 400 gigabits per second throughput on both Nvidia ConnectX-7 and AWS EFA, matching existing single-platform solutions. TransferEngine also supports using multiple network cards per GPU, aggregating bandwidth for even faster communication.
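The bandwidth aggregation mentioned above can be pictured as splitting one transfer into chunks and sending each chunk over a different network card. The sketch below is an illustration under stated assumptions, not the paper's method: `stripe` and `aggregate_gbps` are hypothetical helpers, the even split is one simple policy among many, and the NIC counts and speeds in any call are illustrative inputs.

```python
def stripe(payload: bytes, num_nics: int) -> list[bytes]:
    """Split payload into num_nics contiguous chunks of near-equal size."""
    if num_nics < 1:
        raise ValueError("num_nics must be at least 1")
    base, extra = divmod(len(payload), num_nics)
    chunks = []
    offset = 0
    for i in range(num_nics):
        # The first `extra` chunks carry one additional byte each.
        size = base + (1 if i < extra else 0)
        chunks.append(payload[offset:offset + size])
        offset += size
    return chunks


def aggregate_gbps(per_nic_gbps: list[float]) -> float:
    """Ideal combined throughput if every NIC runs at full speed in parallel."""
    return sum(per_nic_gbps)
```

For example, four hypothetical 100 Gbps cards would have an ideal combined rate of 400 Gbps under this model; real throughput depends on how evenly the work is spread and on overheads the sketch ignores.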