The AI revolution is charging ahead—but powering it shouldn’t cost us the planet. That tension lies at the heart of Vaire Computing’s bold proposition: rethinking the very logic that underpins silicon to make chips radically more energy efficient.

Speaking on the Data Center Frontier Show podcast, Vaire CEO Rodolfo Rossini laid out a compelling case for why the next era of compute won’t just be about scaling transistors, but about reinventing the way they work.

“Moore’s Law is coming to an end, at least for classical CMOS,” Rossini said. “There are a number of potential architectures out there—quantum and photonics are the most well known. Our bet is that the future will look a lot like existing CMOS, but the logic will look very, very, very different.”

That bet is reversible computing—a largely untapped architecture that promises major gains in energy efficiency by recovering energy lost during computation.

A Forgotten Frontier

Unlike conventional chips that discard energy with each logic operation, reversible chips can theoretically recycle that energy. The concept, Rossini explained, isn’t new—but it’s long been overlooked.

“The tech is really old. I mean really old,” Rossini said. “The seeds of this technology were actually at the very beginning of the industrial revolution.”

The theoretical underpinnings of reversible computing stretch back well over a century, to the thermodynamics of 19th-century engineers such as Sadi Carnot, and were extended by later insights from John von Neumann. A pivotal 1961 paper formally connected reversibility to energy efficiency in computing. But for decades, progress toward real hardware stalled.

“Nothing really happened until a team of MIT students built the first chip in the 1990s,” Rossini noted. “But they were trying to build a CPU, which is a world of pain. There’s a reason why I don’t think there’s been a startup trying to build CPUs for a very, very long time.”

AI, the Great Enabler

The rise of AI may have unlocked reversible computing’s real-world potential. Rossini described how the shift from CPUs to GPUs and specialized AI accelerators created an opening for new logic architectures to gain traction.

“It’s still general-purpose enough to run a model that can do a lot of different things,” he said, “but the underlying architecture is much simpler.”

Rossini founded Vaire after a personal epiphany. “I saw that the future of AI was going to be brilliant—everything was going to be AI. But at the same time, we were going to basically boil the oceans to power our data centers.”

So he started looking for alternatives. One obscure MIT project—and a conspicuous lack of competition—led him to reversible computing.

“Nobody was working on this, which is usually peculiar,” he said. “Dozens of quantum computing companies, hundreds of photonic companies… and this area was a bit orphan.”

From Theory to Silicon

Today, Vaire has completed a tape-out of its first test chip. It’s not commercial yet, but it proves the design works in silicon.

“We proved the theory. Great. Now we proved the practice. And we are on to proving the commercial viability,” Rossini said.

And while he’s quick to acknowledge the difficulty of building chips from scratch, Rossini is bullish on the comparative simplicity of their approach.

“I’m not saying it’s super simple,” he said. “But it’s not the order of magnitude of building a quantum computer. It’s basically CMOS.”

Vaire has also secured foundational patents in a space that remains curiously underexplored.

“We found all the basic patents and everything in a space where no one was working,” Rossini said.

Product, Not IP

Unlike some chip startups focused on licensing intellectual property, Vaire is playing to win with full-stack product development.

“Right now we’re not really planning to license. We really want to build product,” Rossini emphasized. “It’s very important today, especially from the point of view of the customer. It’s not just the hardware—it’s the hardware and software.”

Rossini points to Nvidia’s CUDA ecosystem as the gold standard for integrated hardware/software development.

“The reason why Nvidia is so great is because they spent a decade perfecting their CUDA stack,” he said. “You can’t really think of a chip company being purely a hardware company anymore. Better hardware is the ticket to the ball—and the software is how you get to dance.”

It’s a fitting metaphor for a company aiming to rewrite the playbook on compute logic.

The Long Game: Reimagining Chips Without Breaking the System

In an industry where even incremental change can take years to implement, Vaire Computing is taking a pragmatic approach to a deeply ambitious goal: reimagining chip architecture through reversible computing — but without forcing the rest of the computing stack to start over.

“We call it the Near-Zero Energy Chip,” said Rossini. “And by that we mean a chip that operates at the lowest possible energy point compared to classical chips—one that dissipates the least amount of energy, and where you can reuse the software and the manufacturing supply chain.”

That last point is crucial. Vaire isn’t trying to uproot the hyperscale data center ecosystem — it’s aiming to integrate into it. The company’s XPU architecture is designed to deliver breakthrough efficiency while remaining compatible with existing tooling, manufacturing processes, and software paradigms.

Build the Future—But Don’t Break the Present

Rossini acknowledged that other new compute architectures, from FET variants to exotic materials, often fall short not because of technical limitations, but because they require sweeping changes in manufacturing or software support.

“You might have the best product in the world. It doesn’t matter,” Rossini said. “If it requires a change in manufacturing, you have to spend a decade convincing a leading foundry to adopt it—and that’s a killer.”

Instead, Vaire is working within the existing CMOS ecosystem—what Rossini calls “the 800-pound gorilla in chip manufacturing”—and limiting how many pieces they move on the board at once.

“We could, in theory, achieve much better performance if we changed everything: software stack, manufacturing method, and computer architecture,” he said. “But the more you change, the more problems you create for the customer. Suddenly they need to support a new architecture, test it, and port applications. That’s a huge opportunity cost.”

One Vaire customer, Rossini noted, is locked in a tight competitive race. If they spent even six months porting software to a novel architecture, “they basically lost the race.”

So Vaire’s first objective is to prove that its chip can meet or exceed current expectations while fitting into existing systems as seamlessly as possible.

A Roadmap, Not a Revolution

The company’s ambitions stretch over the next 10 to 15 years—but Rossini was quick to clarify that this isn’t a long wait for a product.

“I don’t mean we ship a product in 10 years. We want to ship a product in three,” he said. “But we think we’ll have multiple iterations of products before we reach the true end of CMOS.”

He likened the strategy to Apple’s evolution of the iPhone: from the original model to the iPhone 16, progress was steady, deliberate, and transformative without ever being jarring.

“There isn’t really a quantum jump from one version to another,” Rossini said. “But there’s constant improvement. You ship a product every year or 18 months, and customers keep getting better and better performance.”

Software Matters—But So Does Timing

Asked whether Vaire is building a parallel software stack, Rossini responded with a nuanced yes.

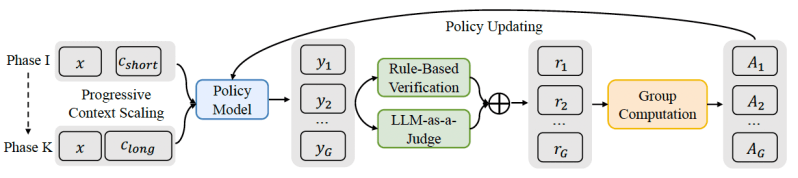

“The greatest thing is that creating a software stack in 2025 is not the same thing as doing it in 2006,” he said. “There’s a very clear dominant open-source ecosystem that we have to support. That means PyTorch, possibly TensorFlow. But we don’t have to support everything.”

Vaire is focusing first on inference workloads rather than training, an important decision that narrows the company’s engineering scope and could shorten its path to market.

“Training is a really complicated beast,” Rossini admitted. “Inference is where we want to play.”

Even more interesting is Vaire’s counterintuitive take on software efficiency. The company is betting that even if the software isn’t fully optimized at launch, the chip’s energy efficiency and performance gains will be so significant that users will adopt it anyway.

“Basically, the idea is that the car is inefficient, but it’s so comfortable and beautiful that—even if the software isn’t perfect—it’s still a net gain,” Rossini said. “And over time, you refine the software, you push the limits of the hardware.”

Reversible, Relatable, Ready for Reality: Disruptive—but Not Destructive

As Vaire moves from test chip to commercial product, Rossini’s team is walking a tightrope: introducing genuinely novel compute logic in a way that doesn’t break developer workflows, supply chains, or hyperscaler procurement models.

“Reversible computing is disrupting enough,” said Rossini. “We don’t want to break everything at the same time.”

In an AI-powered world where compute demand is exploding and energy is the limiting factor, that kind of surgical disruption may be exactly what the industry needs.

So what do you call a chip that’s not quite a CPU, not exactly a GPU, and embodies a new compute architecture without breaking the software world? For now, it’s an “XPU,” says Rossini. “It’s CPU-esque, GPU-esque… but we’re not building a graphics pipeline.”

Getting that XPU into data centers, though, is not about reinventing the wheel (or the hyperscale rack). Rossini’s playbook draws on his experience working with the Department of Defense, “probably the most complex supply chain in the world.” You don’t sell directly to the big players; instead, you embed into the existing machinery. “You partner with Tier 2 and Tier 3 suppliers,” he explains. “They already know the power budgets, size, space, design constraints… so we co-design with them to get into the customer’s hands.”

You can’t stop the train of computing, he adds. “We still use COBOL and Fortran in production. You can’t say, ‘Hey, let’s stop everything for a better mousetrap.’” But you can jump onboard, just in the right railcar. “We’re injecting ourselves into existing supply chains,” he says. “Maybe we’re selling IP, maybe chips—but always in the path of upgrade, not disruption for disruption’s sake.”

Less Heat, More Compute

One of the central advantages of reversible computing is thermal efficiency. Traditional chips discard charge with every gate switch. That’s tolerable when you’ve got a handful of transistors, but across the billions of transistors in today’s ultra-dense chips, it adds up fast. “If you have low-leakage nodes,” Rossini notes, “that discarded charge is actually the majority of the energy dissipated.”

Reversible chips operate a little slower, but they recover that charge, making them ideal for multicore environments. “That’s why Nvidia chips are slower than Intel’s, but use tons of cores,” he adds. “Same idea—slow down a little, parallelize a lot.”
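The charge-recovery trade-off can be made concrete with a standard first-order circuit model, sketched below in Python. It treats a gate output as a load capacitance C charged through an effective resistance R. Abrupt switching, as in conventional CMOS, dissipates roughly ½CV² per charging event no matter how slowly the chip runs; ramping the supply over a time T much longer than RC (adiabatic switching) dissipates only about (RC/T)·CV². This is a textbook approximation that ignores leakage and clock-generation losses, not a description of Vaire’s circuits, and the component values are hypothetical.

```python
"""First-order comparison of conventional vs. adiabatic (charge-recovering) switching.

Assumptions (illustrative only, not Vaire's design or numbers):
- a gate output is modeled as a load capacitance C driven through an
  effective resistance R;
- conventional CMOS charges C abruptly from a fixed supply;
- adiabatic logic ramps the supply linearly over a time T much larger than RC;
- leakage, clock/resonator losses, and other overheads are ignored.
"""


def conventional_energy(c_farads: float, v_volts: float) -> float:
    """Heat dissipated when C is charged abruptly to V: (1/2) * C * V^2.

    The result does not depend on R: half the energy drawn from the supply
    is stored on C, and the other half is lost in the resistance.
    """
    return 0.5 * c_farads * v_volts ** 2


def adiabatic_energy(c_farads: float, v_volts: float, r_ohms: float, t_ramp_s: float) -> float:
    """Approximate heat dissipated when C is charged by a linear ramp of duration T.

    Valid for T >> RC: E ~= (RC / T) * C * V^2. A slower ramp (larger T)
    cuts the loss roughly in proportion.
    """
    if t_ramp_s <= 0:
        raise ValueError("ramp time must be positive")
    return (r_ohms * c_farads / t_ramp_s) * c_farads * v_volts ** 2


if __name__ == "__main__":
    # Hypothetical values chosen only to keep the arithmetic easy to follow.
    C = 1e-15  # 1 fF load
    V = 1.0    # 1 V swing
    R = 10e3   # 10 kOhm effective resistance, so RC = 10 ps
    for T in (100e-12, 1e-9, 10e-9):  # ramps of 10x, 100x and 1000x RC
        e_conv = conventional_energy(C, V)
        e_adia = adiabatic_energy(C, V, R, T)
        print(f"T = {T * 1e12:>6.0f} ps  conventional = {e_conv:.2e} J  "
              f"adiabatic ~ {e_adia:.2e} J  ratio = {e_conv / e_adia:.0f}x")
```

With these illustrative values RC is 10 ps, so the ratio works out to T/(2RC): about 5x, 50x and 500x as the ramp lengthens. The energy per operation falls only because each operation takes longer, which is why the approach pairs naturally with wide parallelism.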

The result is a system that’s more thermally efficient and more sustainable in the long run. “We’ve figured out how to make a reversible system talk to classical systems—without them even knowing it’s a different architecture. It’s completely alien under the hood, but seamless on the outside.”

And while ultra-low power use cases are on the roadmap, the short-term problem is more about power envelopes. “Customers already have power coming into the building,” he says. “What they don’t have is headroom. They want more compute without increasing draw. That’s the opportunity.”

Modular Visions and the Edge

When asked whether this technology lends itself to edge inference, Rossini steers the conversation away from edge computing and toward modularity in the data center. “We’re talking about data centers here,” he says. “The market is clear. The price per chip is higher. And the pain points (power, cooling) are urgent.”

But he sees that modular vision evolving. “If you don’t need massive centralized cooling, maybe you don’t need massive centralized data centers either,” he muses. “We could be heading toward a new generation of modular units, kind of like the old Sun Microsystems container idea.”

That’s down the line, though. Edge devices still face huge cost constraints and BOM sensitivities. “Consumer electronics can’t just absorb premium chip prices—even if the chip is magic.”

A New Path, Rooted in Reversibility

Zooming out, Rossini sees this as a foundational transition in computing. “In 15 years, every chip will be reversible,” he predicts. “Not all made by us, of course, but that’s the direction. CMOS is bound by thermal limits. Photonics will help, but computing with light still needs breakthroughs. Quantum? It’s still niche. But here’s the thing: quantum computers are also reversible. They have to be.”

That’s not a coincidence. It’s a trend line. “Reversible is the way forward,” says Rossini.

To be clear, Rossini’s company isn’t in the quantum business. “We have nothing to do with quantum,” he says. “They use reversibility for coherence. We do it for heat.” But both point to the same deeper implication: Moore’s Law has run its course. Classical computing gave us an extraordinary 50-year run, but scaling it further demands a new direction. For Rossini and his team, that direction is the mirror image of today’s irreversible logic: reversible logic.

That’s not the mainstream view in the chip world, of course. “This is very much not the orthodoxy,” he admits. But rather than chasing academic acceptance, Vaire went all-in from day one. “We didn’t want to write papers. We did publish, but that’s not the goal,” he says. “We raised money, built a team, and spent three years in stealth. Because once you put silicon in a customer’s hands, the argument ends.”

Pacing Progress: Balancing Ambition and Real-World Delivery

So when will that first chip hit real workloads? The company’s current thinking pegs 2027 as the start of commercial availability, but it’s a moving target. “We’re in that tension of ‘How cool can this car be?’ versus ‘Can we just ship the damn motorcycle?’” Rossini jokes.

As Vaire works closely with early customers, new ideas and possibilities keep pushing the roadmap out. “We’re wrestling with scope. People see what we’re building and say, ‘Can it do this? Can it do that?’ And suddenly the product gets bigger and more ambitious.”

Still, Vaire has a plan. The company intends to tape out at least one new chip per year leading up to 2027, each tackling a specific, foundational building block: resonators, adiabatic switching, reversible clocking, and other techniques that make this novel architecture viable in silicon. “Every year, we take on a hard problem we think we can solve on paper. Then we build it,” he explains. “If it works, great. If it doesn’t, we go back to the drawing board.”
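Resonators belong on that list because adiabatic logic commonly draws its slow, ramped “power-clock” from a resonant circuit, which passes energy back and forth with the logic instead of dumping it every cycle. How much of that energy actually comes back is set by the resonator’s quality factor Q, defined as Q = 2π × (energy stored) / (energy lost per cycle). The Python sketch below is a generic illustration of that definition with hypothetical Q values, not a description of anything Vaire has disclosed.

```python
import math


def energy_lost_per_cycle(stored_energy_j: float, q_factor: float) -> float:
    """Energy a resonator dissipates in one oscillation cycle.

    Rearranges the definition Q = 2*pi * E_stored / E_lost_per_cycle.
    """
    if q_factor <= 0:
        raise ValueError("Q must be positive")
    return 2 * math.pi * stored_energy_j / q_factor


def recovered_fraction(q_factor: float) -> float:
    """Fraction of the stored energy that survives one cycle and can be reused.

    Only meaningful for Q > 2*pi; useful resonators sit well above that.
    """
    if q_factor <= 2 * math.pi:
        raise ValueError("Q must exceed 2*pi for this estimate to make sense")
    return 1 - 2 * math.pi / q_factor


if __name__ == "__main__":
    # Hypothetical quality factors, chosen only for illustration.
    for q in (10, 100, 1000):
        print(f"Q = {q:>4}: ~{recovered_fraction(q):.1%} of stored energy recovered per cycle")
```

Going from Q = 10 to Q = 1000 moves per-cycle recovery from roughly 37% to above 99%. Whatever is not recovered has to be replenished by the driver, on top of the logic’s own switching losses, which is one reason resonator design counts as a hard problem in its own right.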

So far, they’re on track. And that steady drumbeat is what gave the team the confidence to finally come out of stealth. “We didn’t start talking until we were sure we wouldn’t fall flat on our faces.”

From Moonshot to Minimum Viable Product

When DCF asked Rossini whether Vaire Computing’s technology could be considered a moonshot, the CEO didn’t hesitate: “Yes, it absolutely is,” he affirmed.

This candid acknowledgment speaks volumes about Vaire’s approach. The company is attempting nothing less than the reinvention of chip logic itself, aiming to improve the energy efficiency and scalability of computing beyond what today’s scaling roadmaps can deliver. While the challenge is daunting, it’s a calculated moonshot: one that seeks a game-changing result without requiring a complete overhaul of the ecosystem that currently supports data centers, software, and hardware.

Why a moonshot? Reversible computing, the idea Vaire is pushing forward, is uncharted territory for most of the chip industry. It holds the promise of dramatically reduced energy consumption, which could let computing keep scaling past the thermal limits now bringing classical CMOS scaling, and Moore’s Law with it, to an end. Building such an architecture is no small feat, however. It involves deep technical challenges, from recovering charge at the gate level to making a fundamentally different architecture interoperate with classical systems. It’s a high-risk, high-reward effort, and success could redefine how we think about the energy demands of computing.

For now, Vaire is tackling the challenge with incremental steps, working closely with customers and partners to ensure that their innovations are practical and scalable. But the long-term goal remains clear: to reshape the computing landscape by delivering chips that push beyond the boundaries of today’s energy constraints.

And if they succeed, Vaire Computing could very well light the way for the next major leap in data center technology, marking the beginning of a new era in energy-efficient computing.

While the long-term vision is big (“Every chip will be reversible,” Rossini repeats), it’s grounded in pragmatism. The Vaire team isn’t trying to boil the ocean. “We try to scope problems the customer has right now,” he says. “Maybe our tech can solve something today: in a year, not five.”

Along the way, they may find simpler, more narrowly scoped chips that solve very specific problems: side quests that still push the architecture forward, even if they’re not the ultimate XPU. “There’s a whole range of complexity in chip design,” Rossini notes. “We always try to build the least complex product that gets us to market fastest.”

The Beginning of Something Big

Reversible computing might not be orthodox, but it’s starting to sound inevitable. If heat is the ultimate barrier to scale—and it increasingly is—then it’s time to rethink what chips can do, and how they do it. For Vaire, that rethink has already started.

“We’re not here to stop the train,” Rossini says. “We’re jumping on board, and building the engine for what comes next.”