Overcoming the Barriers to Quantum Adoption

Despite the promise of quantum computing, widespread deployment faces multiple hurdles:

- High Capital Costs: Quantum computing infrastructure requires substantial investment, and the return on that investment remains uncertain. The partnership will explore cost-sharing strategies to mitigate this risk.

- Undefined Revenue Models: Business frameworks for quantum services, including pricing structures and access models, remain in development.

- Hardware Limitations: Current quantum processors still struggle with high error rates and limited scalability. Overcoming these limits will require advances in error correction and hybrid quantum-classical computing approaches.

- Software Maturity: Algorithms that exploit quantum computing’s advantages remain an active area of research, particularly for real-world AI and optimization problems.

SoftBank’s strategy is to leverage its extensive telecom infrastructure and AI expertise to create real-world testing environments for quantum applications. By integrating quantum processors into existing data center operations, SoftBank aims to position itself at the forefront of the quantum-AI revolution.

A Broader Play in Advanced Computing

SoftBank’s quantum initiative follows a series of high-profile moves into next-generation computing infrastructure. The company has been investing heavily in AI data centers, in line with its “Beyond Carrier” strategy of expanding beyond telecommunications. Recent efforts include developing large-scale AI models tailored to Japan and AI-RAN, which applies AI-driven optimization to radio access networks.

SoftBank has also explored data center expansion opportunities outside Japan to support AI, cloud computing, and now quantum applications. The company’s long-term vision suggests that quantum data centers could eventually support AI-driven workloads at scale, potentially offering performance benefits on certain problems that classical supercomputers cannot match.

The Road Ahead

SoftBank and Quantinuum’s collaboration signals growing momentum for quantum computing in enterprise settings. While quantum remains a long-term bet, integrating QPUs into data center infrastructure represents a forward-looking approach that could redefine high-performance computing in the years to come.

With the global demand for AI and high-performance computing on the rise, SoftBank’s commitment to quantum technology underscores its ambition to shape the future of computing. The partnership with Quantinuum could be “another first step” in making quantum data centers a reality, positioning SoftBank as a leader in the next phase of data center evolution.

Xanadu Unveils Aurora: A Scalable, Networked Approach to Quantum Data Centers

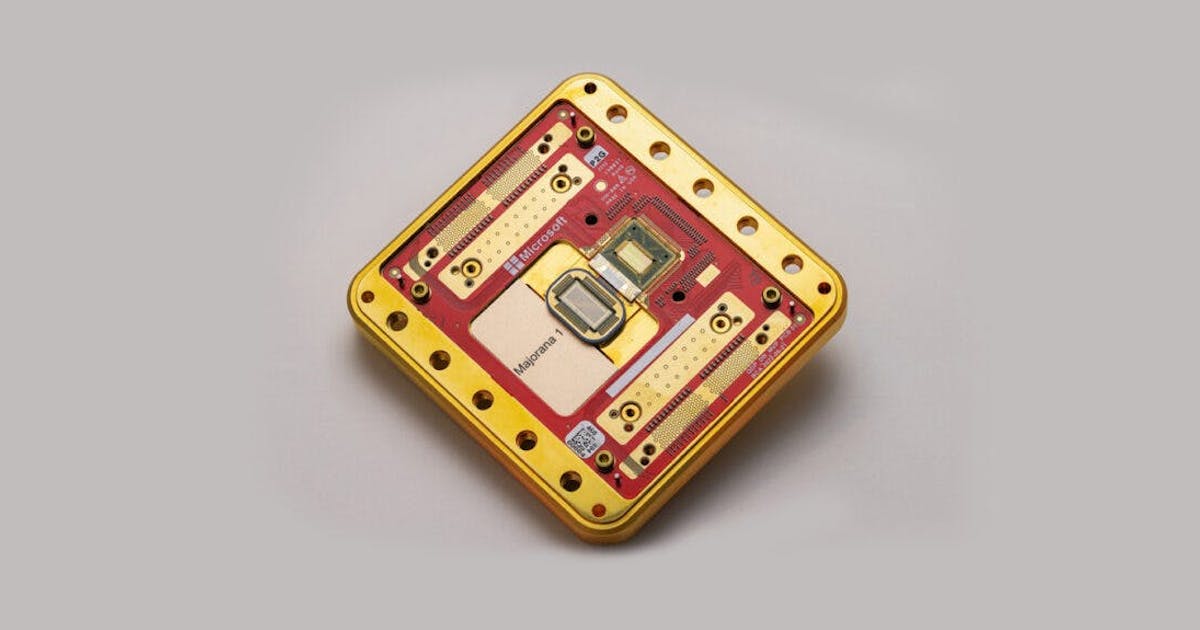

In a milestone for quantum computing, Xanadu has introduced Aurora, which the company describes as the first modular, networked quantum computer designed for large-scale deployment. The system advances the vision of quantum data centers by using photonic technology to tackle one of the industry’s key challenges: scalability.

Aurora consists of four independent quantum server racks, interconnected by 13 kilometers of optical fiber and using 35 photonic chips. Because it operates at room temperature, the system avoids the extreme cooling demands of many other quantum platforms, which could significantly simplify future data center integration. More importantly, Xanadu says the architecture could in principle scale to thousands of racks and millions of qubits, an unprecedented leap toward utility-scale quantum computing.

A New Model for Quantum Scalability

Historically, quantum computing has been constrained both by qubit fidelity and by the difficulty of scaling beyond laboratory prototypes. Xanadu’s photonic approach tackles scaling with a modular, networked system that can be expanded using commercially available fabrication techniques.

“Aurora demonstrates that scalability—the biggest challenge in quantum computing—is now within reach,” said Christian Weedbrook, CEO of Xanadu. “With this architecture, we could, in principle, scale up to millions of qubits. Now, our focus turns to performance improvements, particularly in error correction and fault tolerance.”

The system builds on Xanadu’s earlier Borealis and X8 systems, integrating error-corrected quantum logic gates and real-time error mitigation strategies. By distributing quantum computations across interconnected modules, Aurora offers a viable blueprint for the first true quantum data centers, a shift that could redefine enterprise computing in the years ahead.

The Path to Utility-Scale Quantum Computing

While Aurora’s architecture provides a roadmap to large-scale deployment, further refinements are needed. Optical loss remains a key hurdle, and Xanadu is now focusing on optimizing chip design and improving fabrication techniques in partnership with foundries. These improvements will be critical to achieving fault tolerance, a necessary step toward practical quantum applications.

For data center operators and cloud providers exploring quantum computing’s role in future infrastructure, Xanadu’s approach signals a potential turning point. The combination of room-temperature operation, scalable modularity, and networked computing could position photonics as a leading architecture for quantum-enabled data centers.

As the race toward fault-tolerant quantum computing accelerates, Aurora marks a significant step forward in bringing quantum systems out of the lab and into real-world enterprise environments.