At Supercomputing 2024 (SC24) in Atlanta, two announcements might have set a new course for the future of AI data centers.

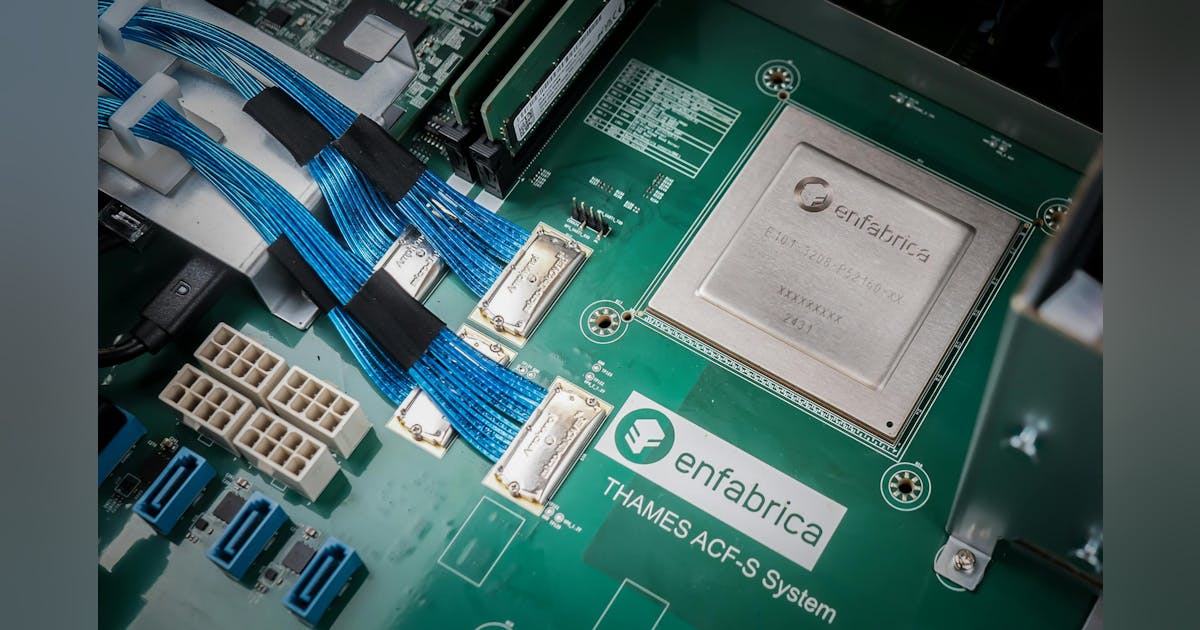

Enfabrica Corporation, a pioneer in high-performance networking silicon, revealed the general availability of its 3.2 Terabit/sec (Tbps) Accelerated Compute Fabric (ACF) SuperNIC chip, which promises advancements in GPU networking capabilities.

Meanwhile, Point2 Technology, known for its energy-efficient interconnect solutions, introduced its P1B121 UltraWire Smart Retimer SoC, designed to address the increasing energy demands of AI-driven hyperscale data centers.

With AI, Connectivity Is Crucial

With AI supercomputers typically being composed of thousands, sometimes millions, of interconnected processing units any technologies that impact those interconnections can have a significant impact.

High-speed, low-latency internal networks, such as InfiniBand or high-performance Ethernet (currently 400/800 Gbe), are critical to facilitating this communication. Without such connectivity, the synchronization of tasks across processing units suffers, resulting in inefficiencies and reduced computational performance.

In AI research and development, many supercomputers are part of global collaborations. Shared datasets, federated AI model training, and distributed simulations necessitate secure and ultra-reliable network connectivity across international boundaries.

Any interruptions or inefficiencies in these connections can disrupt the research process, slowing down potential breakthroughs due to connectivity issues.

Speed, Speed, and More Speed

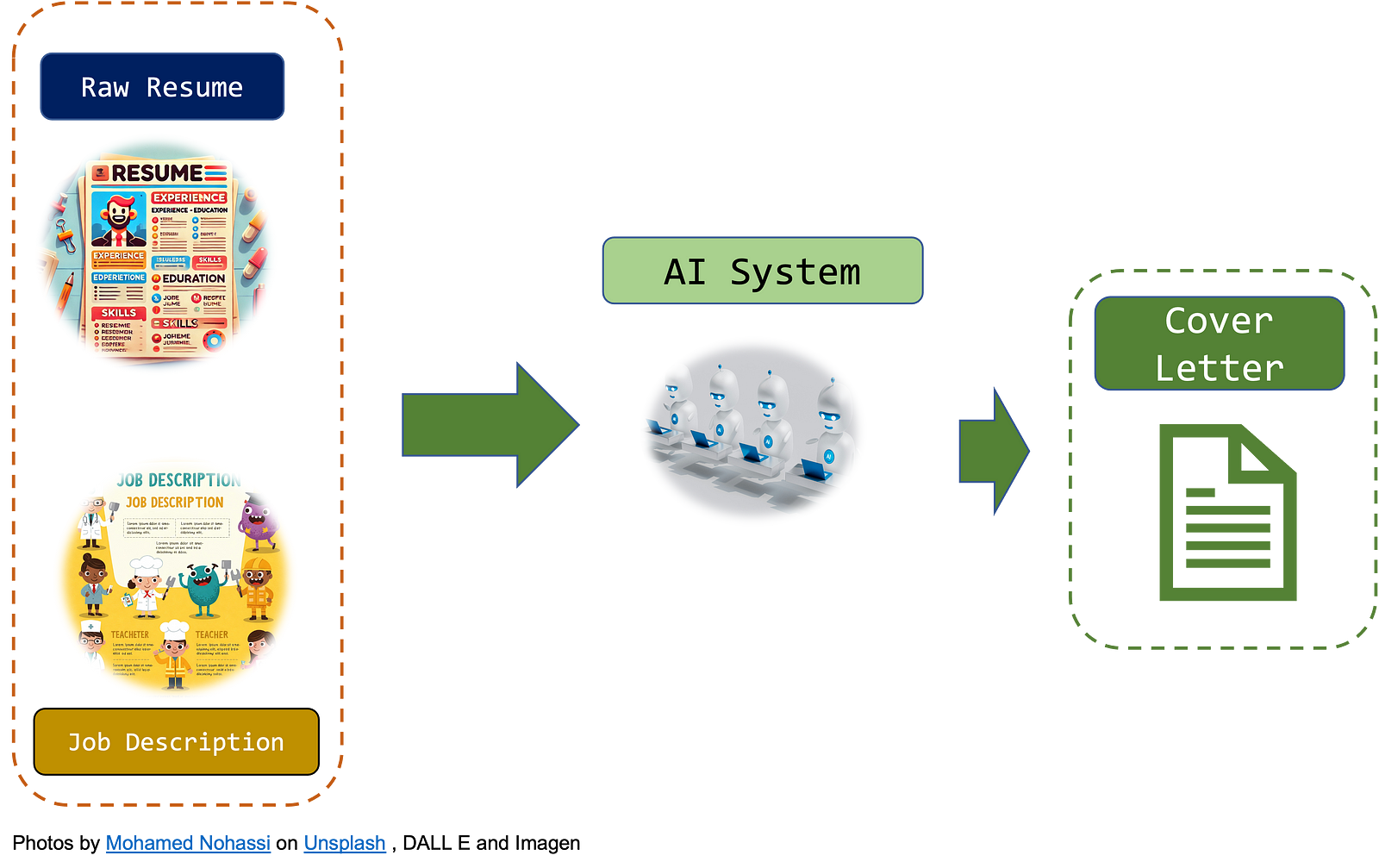

Enfabrica’s 3.2 Tbps ACF SuperNIC looks to accelerate AI data center technology, delivering four times the bandwidth compared to the current standard of GPU-attached network interface card.

Built from the ground up to cater to the needs of large-scale AI models, such as training, inference, and retrieval-augmented generation (RAG) workloads. Enfabrica identifies 3 key features that make their NIC a strong contender as an AI connectivity choice:

- Bandwidth and scalability

- Each ACF-S chip includes 32 network ports and 160 PCIe lanes, enabling GPU clusters of over 500,000 units.

- The solution supports 800, 400, and 100 Gigabit Ethernet interfaces, offering unprecedented scale-out throughput.

- Software defined networking adds resiliency and flexibility

- With Resilient Message Multipathing (RMM) technology, the minimizes job stalls due to network failures, boosting GPU utilization without altering existing AI software stacks.

- The chip’s Software-Defined RDMA Networking enhances flexibility, allowing operators to customize transport layers for optimized cloud-scale networks.

- Simplifies operation

- By integrating zero-copy data transfers and optimized memory management, to enhance floating-point operations per second (FLOPs) utilization, critical for large-scale AI clusters.

Enfabrica CEO Rochan Sankar described the launch as a pivotal moment: