Constructing new underground transmission lines costs, on average, around 4.5 times as much as building overhead lines, according to a report.

According to research from the Institution of Engineering and Technology (IET), it costs around £1,190 to transmit 1MW of power over 1km through an overhead line, compared with around £5,330 per MW per km for underground cables.
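The headline "around 4.5 times" figure is consistent with dividing the two per-unit costs. A minimal Python sketch of that arithmetic, using only the figures quoted above (the constant and function names are illustrative, not from the report):

```python
# Per-unit transmission costs quoted from the IET report, in GBP per MW per km.
OVERHEAD_GBP_PER_MW_KM = 1_190
UNDERGROUND_GBP_PER_MW_KM = 5_330


def cost_multiple(alternative: float, baseline: float) -> float:
    """Return how many times the baseline cost the alternative costs."""
    return alternative / baseline


ratio = cost_multiple(UNDERGROUND_GBP_PER_MW_KM, OVERHEAD_GBP_PER_MW_KM)
print(f"Underground vs overhead: {ratio:.2f}x")
```

It prints a multiple of about 4.48, which rounds to the report's figure of around 4.5.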

The report gave the example of a 15km-long, 5GW overhead line, which it estimated would cost around £40m. This compares with around £330m for an equivalent underground cable, or £820m for one laid in a new tunnel.

It also said that an offshore high-voltage direct current (HVDC) point-to-point cable is around five times more expensive than an overhead line, while an offshore HVDC network connecting multiple sites to the onshore grid is around 11 times more expensive.

Professor Keith Bell, chairman of the project board for the IET Transmission Technologies report, said: "As an essential part of the country's aim to reach net zero, the UK is decarbonising its production of electricity and electrifying the use of energy for heating, transport and industry.

“Access to a cleaner, more affordable, secure supply of energy requires the biggest programme of electricity transmission development since the 1960s.”

Mixed approach

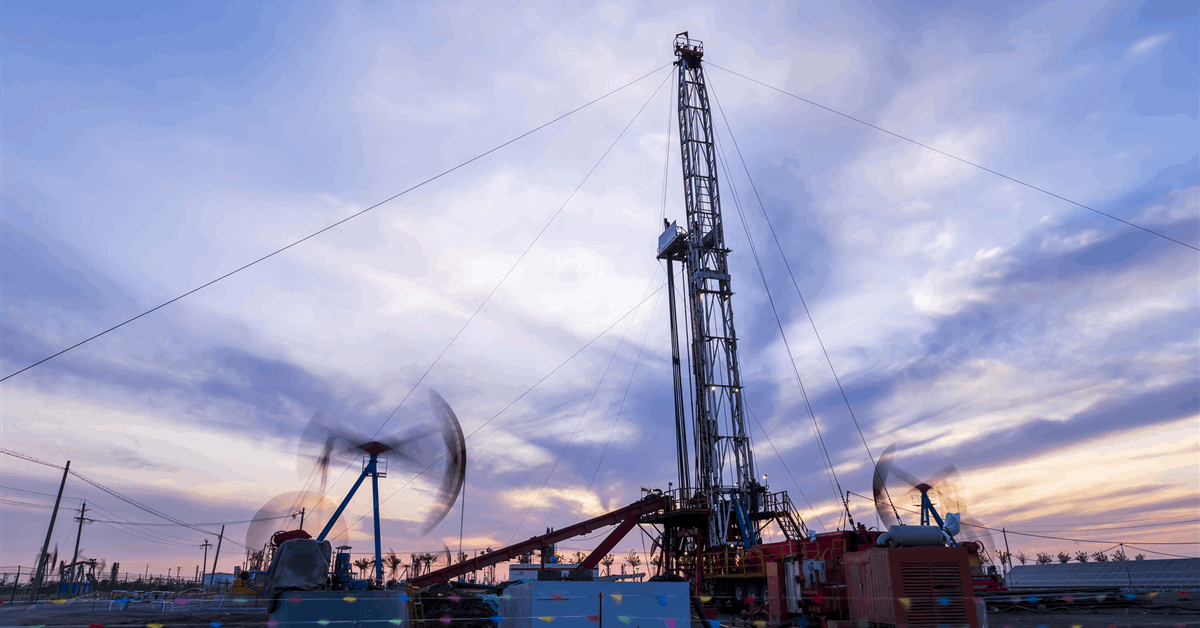

Overhead transmission lines have been controversial because of their visual impact on surrounding landscapes and concerns that they lower nearby property prices.

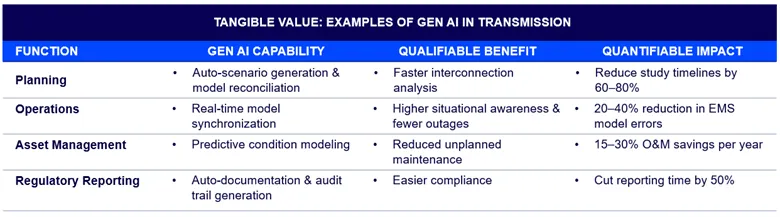

The IET’s report compared a range of electricity transmission technologies by costs, environmental impacts, carbon intensity, technology readiness, and delivery time.

The group added that each technology should be judged on its merits in each specific context, taking into account environmental impact, engineering challenges and local impacts in addition to cost.

For example, underground cables have less visual impact than overhead lines, but they are viable only in certain terrain and cost much more, while long high-voltage AC cables also present electrical engineering challenges.

Subsea solutions still require onshore infrastructure to carry power from coastal landing points to areas of demand, and they affect marine environments.

© DC Thomson

West Aberdeenshire and Kincardine MP and acting shadow energy secretary Andrew Bowie was critical of relying solely on overhead transmission lines, saying: "The overall strategy should be a mix of above ground, underground and marine transmission. There are places where it just doesn't make sense and that was my approach during my time as a government minister."

He added: “Brute forcing an overground-only approach is the wrong thing to do, and the Conservatives will fight that every step of the way.”

Re-wiring the UK grid

The report aims to inform the UK government’s plans to re-wire the country as part of the largest grid upgrade since the 1960s.

With the UK looking to expand its offshore wind capacity, the grid will need a similar boost to handle the additional power. New connections will be needed to carry power generated offshore in regions such as Scotland down to population centres such as the south of England.

© Erikka Askeland/DCT Media

Commenting on the report, energy minister Michael Shanks said: "This latest report shows that pylons are the best option for billpayers – as cables underground cost significantly more to install and maintain.

“At the same time, we want to ensure those hosting this infrastructure benefit, including by offering households near new pylons £2,500 off their energy bills over 10 years.”

The report also warned of supply chain bottlenecks, especially in cable manufacturing, which could affect delivery times and prices and make long-term relationships with suppliers necessary.