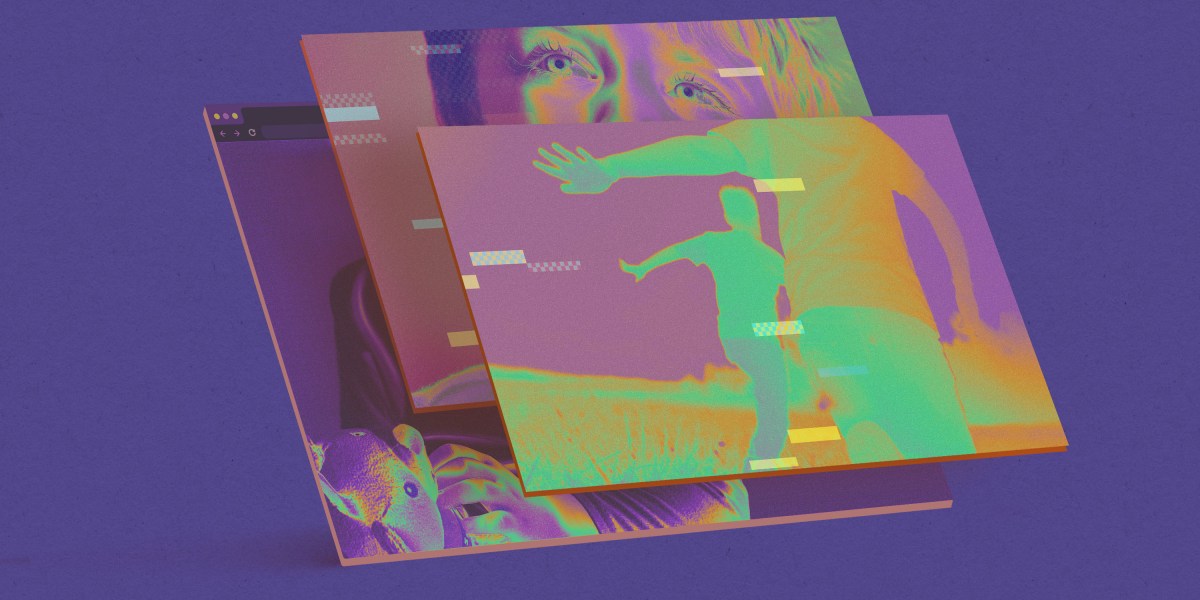

Generative AI has caused the production of child sexual abuse images to skyrocket. Now the leading investigator of child exploitation in the US is experimenting with using AI to distinguish AI-generated images from material depicting real victims, according to a new government filing.

The Department of Homeland Security’s Cyber Crimes Center, which investigates child exploitation across international borders, has awarded a $150,000 contract to San Francisco–based Hive AI for its software, which can identify whether a piece of content was AI-generated.

The filing, posted on September 19, is heavily redacted. Hive cofounder and CEO Kevin Guo told MIT Technology Review that he could not discuss the details of the contract but confirmed that it involves the use of the company’s AI detection algorithms for child sexual abuse material (CSAM).

The filing cites data from the National Center for Missing and Exploited Children, which reported a 1,325% increase in incidents involving generative AI in 2024. “The sheer volume of digital content circulating online necessitates the use of automated tools to process and analyze data efficiently,” the filing reads.

The first priority of child exploitation investigators is to find and stop any abuse currently happening, but the flood of AI-generated CSAM has made it difficult for investigators to know whether images depict a real victim currently at risk. A tool that could successfully flag real victims would be a massive help when they try to prioritize cases.

Identifying AI-generated images “ensures that investigative resources are focused on cases involving real victims, maximizing the program’s impact and safeguarding vulnerable individuals,” the filing reads.

Hive AI offers AI tools that create videos and images, as well as a range of content moderation tools that can flag violence, spam, and sexual material and even identify celebrities. In December, MIT Technology Review reported that the company was selling its deepfake-detection technology to the US military.

For detecting CSAM, Hive offers a tool created with Thorn, a child safety nonprofit, which companies can integrate into their platforms. This tool uses a “hashing” system, which assigns unique IDs to content known by investigators to be CSAM, and blocks that material from being uploaded. This tool, and others like it, have become a standard line of defense for tech companies.
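The hash-matching idea described above can be illustrated with a minimal sketch. It is not Hive's or Thorn's implementation, and the names `content_id` and `HashBlocklist` are hypothetical. It assumes a plain SHA-256 digest of the file bytes as the "unique ID"; production systems typically rely on perceptual hashes that still match after resizing or re-encoding, which an exact cryptographic hash does not.

```python
import hashlib


def content_id(data: bytes) -> str:
    """Derive an ID from raw file bytes (assumption: exact SHA-256 digest)."""
    return hashlib.sha256(data).hexdigest()


class HashBlocklist:
    """Hypothetical blocklist: stores IDs of previously flagged content."""

    def __init__(self, known_ids):
        self._known = set(known_ids)

    def is_blocked(self, data: bytes) -> bool:
        # An upload is rejected only if its ID is already on the list.
        return content_id(data) in self._known


# Placeholder bytes stand in for a file that investigators have already flagged.
blocklist = HashBlocklist([content_id(b"previously-flagged-file")])

print(blocklist.is_blocked(b"previously-flagged-file"))  # same bytes, same ID
print(blocklist.is_blocked(b"never-seen-before-file"))   # no matching ID
```

Because the lookup only matches IDs already on the list, a scheme like this recognizes known material but says nothing about newly created content, including whether it was generated by AI.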

But these tools simply identify a piece of content as CSAM; they don’t detect whether it was generated by AI. Hive has created a separate tool that determines whether images in general were AI-generated. Though it is not trained specifically to work on CSAM, according to Guo, it doesn’t need to be.

“There’s some underlying combination of pixels in this image that we can identify” as AI-generated, he says. “It can be generalizable.”

This tool, Guo says, is what the Cyber Crimes Center will be using to evaluate CSAM. He adds that Hive benchmarks its detection tools for each specific use case its customers have in mind.

The National Center for Missing and Exploited Children, which participates in efforts to stop the spread of CSAM, did not respond to requests for comment on the effectiveness of such detection models in time for publication.

In its filing, the government justifies awarding the contract to Hive without a competitive bidding process. Though parts of this justification are redacted, it primarily references two points also found in a Hive presentation slide deck. One involves a 2024 study from the University of Chicago, which found that Hive’s AI detection tool outranked four other detectors in identifying AI-generated art. The other is Hive’s contract with the Pentagon for identifying deepfakes. The trial will last three months.