MIT Technology Review’s What’s Next series looks across industries, trends, and technologies to give you a first look at the future.

In the early 2020s, a little-known aquaculture company in Portland, Maine, snagged more than $50 million by pitching a plan to harness nature to fight back against climate change. The company, Running Tide, said it could sink enough kelp to the seafloor to sequester a billion tons of carbon dioxide by this year, according to one of its early customers.

Instead, the business shut down its operations last summer, marking the biggest bust to date in the nascent carbon removal sector.

Its demise was the most obvious sign of growing troubles and dimming expectations for a space that has spawned hundreds of startups over the last few years. A handful of other companies have shuttered, downsized, or pivoted in recent months as well. Venture investments have flagged. And the collective industry hasn’t made a whole lot more progress toward that billion-ton benchmark.

The hype phase is over and the sector is sliding into the turbulent business trough that follows, warns Robert Höglund, cofounder of CDR.fyi, a public-benefit corporation that provides data and analysis on the carbon removal industry.

“We’re past the peak of expectations,” he says. “And with that, we could see a lot of companies go out of business, which is natural for any industry.”

The open question is: If the carbon removal sector is heading into a painful if inevitable clearing-out cycle, where will it go from there?

The odd quirk of carbon removal is that it never made a lot of sense as a business proposition: It’s an atmospheric cleanup job, necessary for the collective societal good of curbing climate change. But it doesn’t produce a service or product that any individual or organization strictly needs—or is especially eager to pay for.

To date, a number of businesses have voluntarily agreed to buy tons of carbon dioxide that companies intend to eventually suck out of the air. But whether they’re motivated by sincere climate concerns or pressures from investors, employees, or customers, corporate do-goodism will only scale any industry so far.

Most observers argue that whether carbon removal continues to bobble along or transforms into something big enough to make a dent in climate change will depend largely on whether governments around the world decide to pay for a whole, whole lot of it—or force polluters to.

“Private-sector purchases will never get us there,” says Erin Burns, executive director of Carbon180, a nonprofit that advocates for the removal and reuse of carbon dioxide. “We need policy; it has to be policy.”

What’s the problem?

The carbon removal sector began to scale up in the early part of this decade, as increasingly grave climate studies revealed the need to dramatically cut emissions and suck down vast amounts of carbon dioxide to keep global warming in check.

Specifically, nations may have to continually remove as much as 11 billion tons of carbon dioxide per year by around midcentury to have a solid chance of keeping the planet from warming past 2 °C over preindustrial levels, according to a UN climate panel report in 2022.

A number of startups sprang up to begin developing the technology and building the infrastructure that would be needed, trying out a variety of approaches like sinking seaweed or building carbon-dioxide-sucking factories.

And they soon attracted customers. Companies including Stripe, Google, Shopify, Microsoft, and others began agreeing to pre-purchase tons of carbon removal, hoping to stand up the nascent industry and help offset their own climate emissions. Venture investments also flooded into the space, peaking in 2023 at nearly $1 billion, according to data provided by PitchBook.

From early on, players in the emerging sector sought to draw a sharp distinction between conventional carbon offset projects, which studies have shown frequently exaggerate climate benefits, and “durable” carbon removal that could be relied upon to suck down and store away the greenhouse gas for decades to centuries. There’s certainly a big difference in the price: While buying carbon offsets through projects that promise to preserve forests or plant trees might cost a few dollars per ton, a ton of carbon removal can run hundreds to thousands of dollars, depending on the approach.

That high price, however, brings big challenges. Removing 10 billion tons of carbon dioxide a year at, say, $300 a ton adds up to a global price tag of $3 trillion—a year.
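
That back-of-envelope math can be checked in a few lines. The sketch below is only illustrative: the function name is made up for this example, and the $100 and $1,000 price points are assumed bookends for the "hundreds to thousands of dollars" range, not figures from any source.

```python
def annual_removal_cost(tons_per_year: float, dollars_per_ton: float) -> float:
    """Total yearly bill for removing a given tonnage at a flat price per ton."""
    return tons_per_year * dollars_per_ton


TONS_PER_YEAR = 10e9  # 10 billion tons a year, the round figure used above

# $300 is the example price from the text; $100 and $1,000 are assumed bookends.
for price in (100, 300, 1000):
    cost = annual_removal_cost(TONS_PER_YEAR, price)
    print(f"${price:,} a ton -> ${cost / 1e12:.0f} trillion a year")
```

Because the cost scales linearly with price, every $100 shaved off the per-ton cost would trim roughly $1 trillion from the annual bill at this volume.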

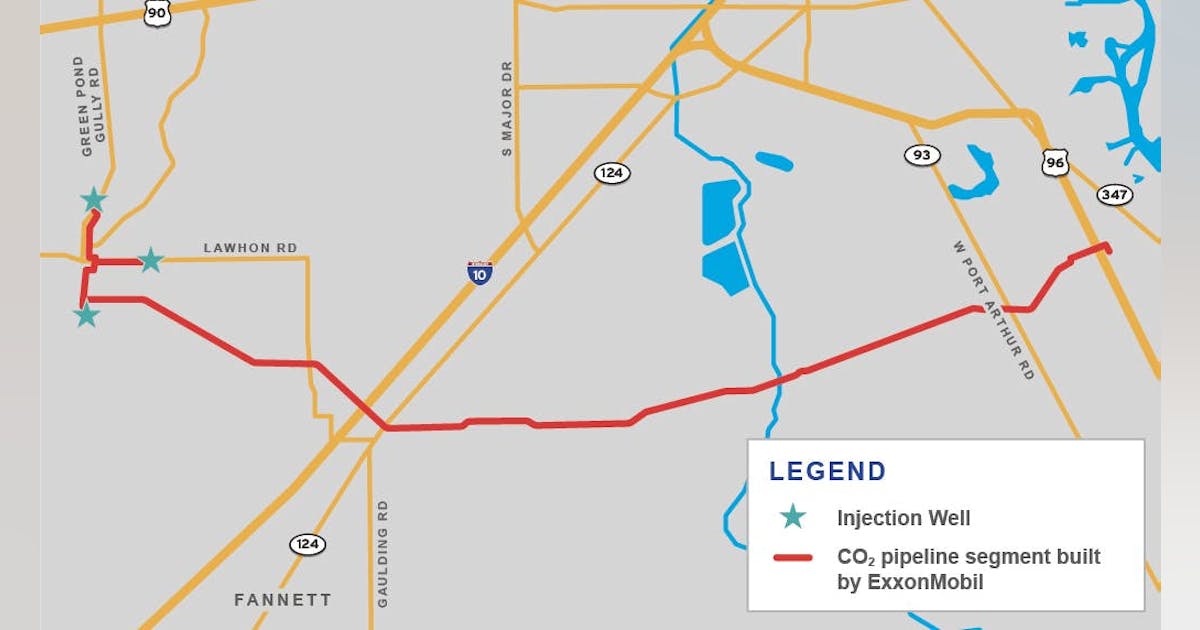

Which brings us back to the fundamental question: Who should or would foot the bill to develop and operate all the factories, pipelines, and wells needed to capture, move, and bury billions upon billions of tons of carbon dioxide?

The state of the market

The market is still growing, as companies voluntarily purchase tons of carbon removal to make strides toward their climate goals. In fact, sales reached an all-time high in the second quarter of this year, mostly thanks to several massive purchases by Microsoft.

But industry sources fear that demand isn’t growing fast enough to support a significant share of the startups that have formed or even the projects being built, undermining the momentum required to scale the sector up to the size needed by midcentury.

To date, all those hundreds of companies that have spun up in recent years have disclosed deals to sell some 38 million tons of carbon dioxide pulled from the air, according to CDR.fyi. That’s roughly the amount the US pumps out in energy-related emissions every three days.

And they’ve only delivered around 940,000 tons of carbon removal. The US emits that much carbon dioxide in less than two hours. (Not every transaction is publicly announced or revealed to CDR.fyi, so the actual figures could run a bit higher.)
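
To see how those comparisons work out, here is a rough sketch. It assumes US energy-related emissions of about 4.8 billion tons of carbon dioxide a year; that figure is an approximation supplied for this example and does not come from CDR.fyi, and the function names are hypothetical.

```python
# ASSUMPTION: approximate annual US energy-related CO2 emissions, in tons.
US_ENERGY_CO2_TONS_PER_YEAR = 4.8e9

CONTRACTED_TONS = 38e6    # tons sold in disclosed deals, per the text
DELIVERED_TONS = 940_000  # tons actually delivered, per the text


def days_of_us_emissions(tons: float) -> float:
    """How many days of US energy-related emissions a tonnage represents."""
    return tons / (US_ENERGY_CO2_TONS_PER_YEAR / 365)


def hours_of_us_emissions(tons: float) -> float:
    """The same comparison expressed in hours."""
    return days_of_us_emissions(tons) * 24


print(f"Contracted: about {days_of_us_emissions(CONTRACTED_TONS):.1f} days of US emissions")
print(f"Delivered: about {hours_of_us_emissions(DELIVERED_TONS):.1f} hours of US emissions")
print(f"Delivered share of contracted tons: {DELIVERED_TONS / CONTRACTED_TONS:.1%}")
```

Under that assumption, the contracted total lands near three days of emissions and the delivered total under two hours, in line with the comparisons above.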

Another concern is that the same handful of big players continue to account for the vast majority of the overall purchases, leaving the health and direction of the market dependent on their whims and fortunes.

Most glaringly, Microsoft has agreed to buy 80% of all the carbon removal purchased to date, according to CDR.fyi. The second-biggest buyer is Frontier, a coalition of companies that includes Google, Meta, Stripe, and Shopify, which has committed to spend $1 billion.

If you strip out those two buyers, the market shrinks from 16 million tons under contract during the first half of this year to just 1.2 million, according to data provided to MIT Technology Review by CDR.fyi.
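
Put as a share, that concentration is stark. The short sketch below uses only the two tonnage figures cited above; the function name is invented for illustration.

```python
def share_from_top_buyers(total_tons: float, tons_without_top_buyers: float) -> float:
    """Fraction of contracted tons attributable to the buyers that were stripped out."""
    return (total_tons - tons_without_top_buyers) / total_tons


TOTAL_TONS_H1 = 16e6        # tons under contract in the first half of the year
WITHOUT_TOP_TWO_TONS = 1.2e6  # what remains without Microsoft and Frontier

share = share_from_top_buyers(TOTAL_TONS_H1, WITHOUT_TOP_TWO_TONS)
print(f"Microsoft and Frontier share of first-half tons: {share:.1%}")
```

By this measure, the two buyers account for more than nine of every 10 tons contracted in the period.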

Signs of trouble

Meanwhile, the investor appetite for carbon removal is cooling. For the 12-month period ending in the second quarter of 2025, venture capital investments in the sector fell more than 13% from the same period last year, according to data provided by PitchBook. That tightening funding will make it harder and harder for companies that aren’t bringing in revenue to stay afloat.

Companies that have already shut down also include the carbon removal marketplace Nori, the direct-air-capture company Noya, and Alkali Earth, which was attempting to use industrial by-products to tie up carbon dioxide.

Still other businesses are struggling. Climeworks, one of the first companies to build direct-air-capture (DAC) factories, announced it was laying off 10% of its staff in May, as it grapples with challenges on several fronts.

The company’s plans to collaborate on the development of a major facility in the US have been at least delayed as the Trump administration has held back tens of millions of dollars in funding granted in 2023 under the Department of Energy’s Regional Direct Air Capture Hubs program. It now appears the government could terminate the funding altogether, along with perhaps tens of billions of dollars’ worth of additional grants previously awarded for a variety of other US carbon removal and climate tech projects.

“Market rumors have surfaced, and Climeworks is prepared for all scenarios,” Christoph Gebald, one of the company’s co-CEOs, said in a previous statement to MIT Technology Review. “The need for DAC is growing as the world falls short of its climate goals and we’re working to achieve the gigaton capacity that will be needed.”

But purchases from direct-air-capture projects fell nearly 16% last year and account for just 8% of all carbon removal transactions to date. Buyers are increasingly looking to categories that promise to deliver tons faster and for less money, notably including burying biochar or installing carbon capture equipment on bioenergy plants. (That last method of carbon removal is known as BECCS, short for bioenergy with carbon capture and storage.)

CDR.fyi recently described the climate for direct air capture in grim terms: “The sector has grown rapidly, but the honeymoon is over: Investment and sales are falling, while deployments are delayed across almost every company.”

“Most DAC companies,” the organization added, “will fold or be acquired.”

What’s next?

In the end, most observers believe carbon removal isn’t really going to take off unless governments bring their resources and regulations to bear. That could mean making direct purchases, subsidizing these sectors, or getting polluters to pay the costs to do so—for instance, by folding carbon removal into market-based emissions reductions mechanisms like cap-and-trade systems.

More government support does appear to be on the way. Notably, the European Commission recently proposed allowing “domestic carbon removal” within its EU Emissions Trading System after 2030, integrating the sector into one of the largest cap-and-trade programs. The system forces power plants and other polluters in member countries to increasingly cut their emissions or pay for them over time, as the cap on pollution tightens and the price on carbon rises.

That could create incentives for more European companies to pay direct-air-capture or bioenergy facilities to draw down carbon dioxide as a means of helping them meet their climate obligations.

There are also indications that the International Civil Aviation Organization, a UN organization that establishes standards for the aviation industry, is considering incorporating carbon removal into its market-based mechanism for reducing the sector’s emissions. That might take several forms, including allowing airlines to purchase carbon removal to offset their use of traditional jet fuel or requiring the use of carbon dioxide obtained through direct air capture in some share of sustainable aviation fuels.

Meanwhile, Canada has committed to spend $10 million on carbon removal and is developing a protocol to allow direct air capture in its national offsets program. And Japan will begin accepting several categories of carbon removal in its emissions trading system.

Despite the Trump administration’s efforts to claw back funding for the development of carbon-sucking projects, the US does continue to subsidize storage of carbon dioxide, whether it comes from power plants, ethanol refineries, direct-air-capture plants, or other facilities. The so-called 45Q tax credit, which is worth up to $180 a ton, was among the few forms of government support for climate-tech-related sectors that survived in the 2025 budget reconciliation bill. In fact, the subsidies for putting carbon dioxide to other uses increased.

Even in the current US political climate, Burns is hopeful that local or federal legislators will continue to enact policies that support specific categories of carbon removal in the regions where they make the most sense, because the projects can provide economic growth and jobs as well as climate benefits.

“I actually think there are lots of models for what carbon removal policy can look like that aren’t just things like tax incentives,” she says. “And I think that this particular political moment gives us the opportunity in a unique way to start to look at what those regionally specific and pathway specific policies look like.”

The dangers ahead

But even if more nations do provide the money or enact the laws necessary to drive the business of durable carbon removal forward, there are mounting concerns that a sector conceived as an alternative to dubious offset markets could increasingly come to replicate their problems.

Various incentives are pulling in that direction.

Financial pressures are building on suppliers to deliver tons of carbon removal. Corporate buyers are looking for the fastest and most affordable way of hitting their climate goals. And the organizations that set standards and accredit carbon removal projects often earn more money as the volume of purchases rises, creating clear conflicts of interest.

Some of the same carbon registries that have long signed off on carbon offset projects, including Verra and Gold Standard, have begun creating standards or issuing credits for various forms of carbon removal.

“Reliable assurance that a project’s declared ton of carbon savings equates to a real ton of emissions removed, reduced, or avoided is crucial,” Cynthia Giles, a senior EPA advisor under President Biden, and Cary Coglianese, a law professor at the University of Pennsylvania, wrote in a recent editorial in Science. “Yet extensive research from many contexts shows that auditors selected and paid by audited organizations often produce results skewed toward those entities’ interests.”

Noah McQueen, the director of science and innovation at Carbon180, has stressed that the industry must strive to counter the mounting credibility risks, noting in a recent LinkedIn post: “Growth matters, but growth without integrity isn’t growth at all.”

In an interview, McQueen said that heading off the problem will require developing and enforcing standards to truly ensure that carbon removal projects deliver the climate benefits promised. McQueen added that to gain trust, the industry needs to earn buy-in from the communities in which these projects are built and avoid the environmental and health impacts that power plants and heavy industry have historically inflicted on disadvantaged communities.

Getting it right will require governments to take a larger role in the sector than just subsidizing it, argues David Ho, a professor at the University of Hawaiʻi at Mānoa who focuses on ocean-based carbon removal.

He says there should be a massive, multinational research drive to determine the most effective ways of mopping up the atmosphere with minimal environmental or social harm, likening it to a Manhattan Project (minus the whole nuclear bomb bit).

“If we’re serious about doing this, then let’s make it a government effort,” he says, “so that you can try out all the things, determine what works and what doesn’t, and you don’t have to please your VCs or concentrate on developing [intellectual property] so you can sell yourself to a fossil-fuel company.”

Ho adds that there’s a moral imperative for the world’s historically biggest climate polluters to build and pay for the carbon-sucking and storage infrastructure required to draw down billions of tons of greenhouse gas. That’s because the world’s poorest, hottest nations, which have contributed the least to climate change, will nevertheless face the greatest dangers from intensifying heat waves, droughts, famines, and sea-level rise.

“It should be seen as waste management for the waste we’re going to dump on the Global South,” he says, “because they’re the people who will suffer the most from climate change.”