
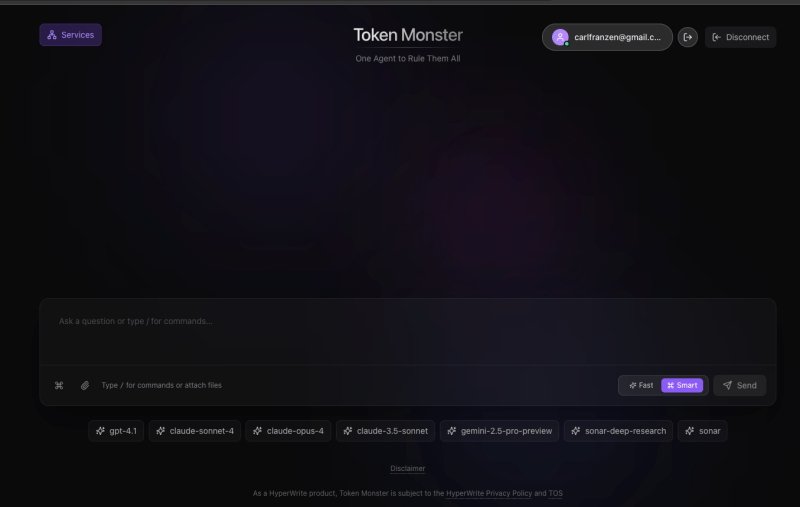

Token Monster, a new AI chatbot platform, has launched its alpha preview, aiming to change how users interact with large language models (LLMs).

Developed by Matt Shumer, co-founder and CEO of OthersideAI and its hit AI writing assistant Hyperwrite AI, Token Monster’s key selling point is its ability to route user prompts to the best available LLMs for the task at hand, delivering enhanced outputs by leveraging the strengths of multiple models.

Seven major LLMs are currently available through Token Monster. Once a user types into the prompt entry box, Token Monster uses pre-prompts, developed through iteration by Shumer himself, to automatically analyze the input, decide which combination of available models and linked tools is best suited to answer it, and then provide a combined response that draws on the strengths of those models. The available LLMs include:

- Anthropic Claude 3.5 Sonnet

- Anthropic Claude 3.5 Opus

- OpenAI GPT-4.1

- OpenAI GPT-4o

- Perplexity AI PPLX (for research)

- OpenAI o3 (for reasoning)

- Google Gemini 2.5 Pro

Unlike other chatbot platforms, Token Monster automatically identifies which LLM is best for a specific task, as well as which LLM-connected tools (such as web search or coding environments) would be helpful, and then orchestrates a multi-model workflow.

“We’re just building the connectors to everything and then a system that decides what to use when,” said Shumer.

For instance, it might use Claude for creativity, o3 for reasoning, and PPLX for research, among others. This approach eliminates the need for users to manually choose the right model for each prompt, simplifying the process for anyone who wants high-quality, tailored results.
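To make the routing idea concrete, here is a minimal sketch in Python. It is not Token Monster's implementation: the real system relies on Shumer's pre-prompts, which have not been published, whereas this toy version simply matches keywords. The function name `route_prompt`, the keyword lists, and the GPT-4o fallback are all assumptions made for illustration.

```python
# Hypothetical keyword-based router. Token Monster's actual routing is
# driven by LLM pre-prompts that are not public; this is only a toy stand-in.
ROUTES = {
    "research": ("Perplexity PPLX", ("latest", "source", "cite", "news")),
    "reasoning": ("OpenAI o3", ("prove", "calculate", "why", "step by step")),
    "creativity": ("Anthropic Claude", ("story", "poem", "slogan", "write")),
}
DEFAULT_MODEL = "OpenAI GPT-4o"  # assumed fallback, not confirmed by the article


def route_prompt(prompt: str) -> list[str]:
    """Return every model whose keywords appear in the prompt."""
    text = prompt.lower()
    chosen = [
        model
        for model, keywords in ROUTES.values()
        if any(keyword in text for keyword in keywords)
    ]
    # Fall back to a general-purpose model when nothing matches.
    return chosen or [DEFAULT_MODEL]
```

A prompt that touches several categories returns several models, which mirrors the "combination of models" behavior described above; a prompt that matches nothing falls through to the default.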

Feature highlights

The alpha preview, which is currently free to sign up for at tokenmonster.ai, allows users to upload a range of file types, including Excel, PowerPoint, and Docs.

It also includes features such as webpage extraction, persistent conversation sessions, and a “FAST mode” that auto-routes to the best model without user input.

At the heart of Token Monster is OpenRouter, a third-party service that acts as a gateway to multiple LLMs, and in which Shumer has invested a small sum, by his own admission.

This architecture lets Token Monster tap into a range of models from different providers without having to build separate integrations for each one.
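The appeal of a gateway is easier to see in code. The sketch below builds, but does not send, requests to OpenRouter's OpenAI-compatible chat completions endpoint; switching providers only changes the `model` string. The helper name `build_request` and the example model identifiers are illustrative choices, and nothing here is taken from Token Monster's own code.

```python
import json

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(model: str, prompt: str, api_key: str) -> dict:
    """Build (but do not send) one chat completion request.

    `build_request` is a hypothetical helper for illustration only.
    """
    return {
        "url": OPENROUTER_URL,
        "headers": {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        "body": json.dumps({
            "model": model,  # the only field that changes between providers
            "messages": [{"role": "user", "content": prompt}],
        }),
    }


# Same endpoint and headers, different provider behind the model string.
claude_req = build_request("anthropic/claude-3.5-sonnet", "Hi", "YOUR_KEY")
gpt_req = build_request("openai/gpt-4o", "Hi", "YOUR_KEY")
```

Either dictionary could be handed to any HTTP client; the point is that one integration covers every model the gateway exposes.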

Pricing and availability

As of right now, Token Monster does not charge a flat monthly fee.

Instead, users only pay for the tokens they consume through OpenRouter, making it flexible for varying levels of usage.

According to Shumer, this model was inspired by Cline, a tool that enables high-spending users to access unlimited AI power, allowing them to achieve better outputs by simply using more compute resources.
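The pay-per-token arithmetic is simple enough to sketch. The prices below are placeholders chosen for round numbers, not real OpenRouter or Token Monster rates, and the article does not say whether any markup is applied on top. The model names and the helpers `call_cost` and `session_cost` are likewise hypothetical.

```python
# Placeholder prices in US dollars per one million tokens.
# These are NOT real rates; they exist only to show the arithmetic.
EXAMPLE_PRICES = {
    "model-a": {"input": 2.00, "output": 8.00},
    "model-b": {"input": 0.50, "output": 1.50},
}


def call_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in dollars of a single model call under the example prices."""
    price = EXAMPLE_PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


def session_cost(calls: list[tuple[str, int, int]]) -> float:
    """Sum the cost of every (model, input_tokens, output_tokens) call."""
    return sum(call_cost(model, i, o) for model, i, o in calls)
```

Because a multi-model workflow makes several calls per prompt, the session total grows with each step, which is why heavier usage translates directly into a larger bill rather than hitting a flat-fee ceiling.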

Multi-step workflows produce richer LLM responses

Token Monster’s AI workflows extend beyond simple prompt routing.

In one example, the chatbot might start with a research phase using web search APIs, pass that data to o3 for identifying information gaps, then create an outline with Gemini 2.5 Pro, draft text with Claude Opus, and refine it with Claude 3.5 Sonnet.

This multi-step orchestration is designed to provide richer, more complete answers than a single LLM might be able to generate alone.
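The example workflow above amounts to a chain in which each step's output becomes the next step's input. The following sketch shows that shape only; `fake_call` is a stand-in that labels each step instead of calling a real model, and the `PIPELINE` list and `run_pipeline` function are assumptions for illustration rather than Token Monster's code.

```python
from typing import Callable

# (step name, model label) pairs in the order the article describes.
PIPELINE = [
    ("research", "web search"),
    ("find gaps", "o3"),
    ("outline", "Gemini 2.5 Pro"),
    ("draft", "Claude Opus"),
    ("refine", "Claude 3.5 Sonnet"),
]


def fake_call(model: str, step: str, context: str) -> str:
    """Stand-in for a real model call: records which model handled the step."""
    return f"{context} -> {step} [{model}]"


def run_pipeline(
    prompt: str,
    call: Callable[[str, str, str], str] = fake_call,
) -> str:
    """Feed each step's output into the next step, in order."""
    context = prompt
    for step, model in PIPELINE:
        context = call(model, step, context)
    return context
```

Swapping `fake_call` for a function that actually queries a model would turn the trace into a real draft, but the control flow would stay the same.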

The platform also includes the ability to save sessions, with data securely stored using the open source online database service Supabase. This ensures that users can return to ongoing projects without losing their work, while still giving them control over what data is saved and what is ephemeral.

A non-traditional CEO

In a notable experiment, Token Monster’s leadership has been handed over to Anthropic’s Claude model.

Shumer announced that he is committed to following every decision made by “CEO Claude,” calling it a test to see whether an AI can manage a business effectively.

“Either we’ve revolutionized management forever or made a huge mistake,” he wrote on X.

Emerging from the Reflection 70B controversy

Token Monster’s launch comes less than a year after Shumer faced controversy over his launch and ultimate retraction of Reflection 70B, a fine-tuned version of Meta’s Llama 3.1. The model was initially touted as the most performant open source model in the world, but it quickly drew criticism and accusations of fraud after third-party researchers were unable to reproduce its stated performance on benchmark tests.

Shumer apologized and said the issues were born out of mistakes made due to speed. The episode underscored the challenges and risks of rapid AI development and the importance of transparency in model releases.

MCP integrations coming next

Shumer said his team on Token Monster is also exploring new capabilities, such as integrating with Model Context Protocol (MCP) servers that allow websites and companies to have LLMs make use of their knowledge, tools, and products to achieve higher-order tasks than just text or image generation.

This would enable Token Monster to connect with a user’s internal data and services, opening possibilities for it to handle tasks like managing customer support tickets or interfacing with other business systems.

Shumer emphasized that Token Monster is still very much in its early stages. While it already supports a suite of powerful features, the platform remains an alpha product and is expected to see rapid iterations and updates as more users provide feedback. “We’re going to keep iterating and adding things,” he said.

A promising experiment

For users who want to take advantage of the combined power of multiple LLMs without the hassle of model switching, Token Monster could be an appealing choice. It’s designed to work for people who don’t want to spend hours tweaking prompts or testing different models themselves, instead letting the system’s automated routing and multi-step workflows handle the complexity.

As Token Monster’s capabilities grow, it will be interesting to see how users and businesses adopt it — and how its experiment with AI-led management pans out. For now, it’s a promising addition to the rapidly expanding landscape of AI chatbots and digital assistants.
