
Until a few days ago, only the nerdiest of nerds (I say this as one) had ever heard of DeepSeek, a Chinese AI subsidiary of the equally evocatively named High-Flyer Capital Management, a quantitative analysis (or quant) firm that launched in 2015.

Yet within the last few days, it’s been arguably the most discussed company in Silicon Valley. That’s largely thanks to the release of DeepSeek R1, a new large language model that performs “reasoning” similar to OpenAI’s current best-available model o1 — taking multiple seconds or minutes to answer hard questions and solve complex problems as it reflects on its own analysis in a step-by-step, or “chain of thought” fashion.

Not only that, but DeepSeek R1 scored as high as or higher than OpenAI’s o1 on a variety of third-party benchmarks (tests that measure AI performance at answering questions on various subject matter), and it was reportedly trained at a fraction of the cost (around $5 million) and with far fewer graphics processing units (GPUs), which are subject to a strict export embargo imposed by the U.S., OpenAI’s home turf.

But unlike o1, which is available only to paying ChatGPT subscribers of the Plus tier ($20 per month) and more expensive tiers (such as Pro at $200 per month), DeepSeek R1 was released as a fully open source model, which also explains why it has quickly rocketed up the charts of AI code sharing community Hugging Face’s most downloaded and active models.

Also, because it is fully open source, people have already fine-tuned and trained many variations of the model for task-specific purposes, such as making it small enough to run on a mobile device or combining it with other open source models. And for developers, DeepSeek’s API costs are more than 90% cheaper than those of the equivalent o1 model from OpenAI.

Most impressively of all, you don’t even need to be a software engineer to use it: DeepSeek offers a free website and mobile app, available even to U.S. users, with an R1-powered chatbot interface very similar to OpenAI’s ChatGPT. And once again, DeepSeek undercut, or “mogged,” OpenAI by connecting this powerful reasoning model to web search, something OpenAI hasn’t yet done (web search is currently available only with its less powerful GPT family of models).

An open and shut irony

There’s a pretty delicious, or maybe disconcerting, irony to this given OpenAI’s founding goal of democratizing AI for the masses. As NVIDIA Senior Research Manager Jim Fan put it on X: “We are living in a timeline where a non-US company is keeping the original mission of OpenAI alive – truly open, frontier research that empowers all. It makes no sense. The most entertaining outcome is the most likely.”

Or as X user @SuspendedRobot put it, referencing reports that DeepSeek appears to have been trained on question-answer outputs and other data generated by ChatGPT: “OpenAI stole from the whole internet to make itself richer, DeepSeek stole from them and give it back to the masses for free I think there is a certain british folktale about this”

But Fan isn’t the only one to sit up and take note of DeepSeek’s success. The open source availability of DeepSeek R1, its high performance, and the fact that it seemingly “came out of nowhere” to challenge the former leader of generative AI have sent shockwaves throughout Silicon Valley and far beyond, based on my conversations with, and reading of, various engineers, thinkers, and leaders. If not “everyone” is freaking out about it, as my hyperbolic headline suggests, it’s certainly the talk of the town in tech and business circles.

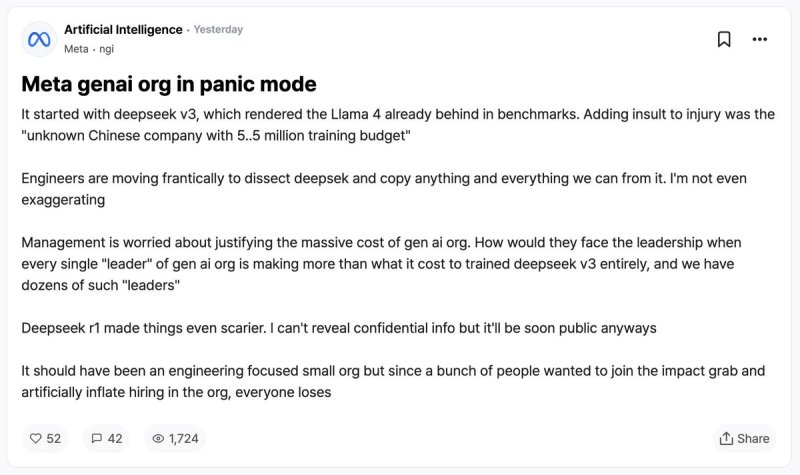

A message posted to Blind, the app for sharing anonymous gossip in Silicon Valley, has been making the rounds suggesting Meta is in crisis over the success of DeepSeek because of how quickly it surpassed Meta’s own efforts to be the king of open source AI with its Llama models.

‘This changes the whole game’

X user @tphuang wrote compellingly: “DeepSeek has commoditized AI outside of very top-end. Lightbulb moment for me in 1st photo. R1 is so much cheaper than US labor cost that many jobs will get automated away over next 5 yrs,” later noting why DeepSeek’s R1 is more enticing to users than even OpenAI’s o1:

“3 huge issues w/ o1:

1) too slow

2) too expensive

3) lack of control for end user/reliance on OpenAI

R1 solves all of them. A company can buy their own Nvidia GPUs, run these models. Don’t have to worry about additional costs or slow/unresponsive OpenAI servers”

@tphuang also posed a compelling analogy as a question: “Will DeepSeek be to LLM what Android became to OS world?”

Web entrepreneur Arnaud Bertrand didn’t mince words about the startling implications of DeepSeek’s success, either, writing on X: “There’s no overstating how profoundly this changes the whole game. And not only with regards to AI, it’s also a massive indictment of the US’s misguided attempt to stop China’s technological development, without which Deepseek may not have been possible (as the saying goes, necessity is the mother of inventions).”

The censorship issue

However, others have sounded cautionary notes on DeepSeek’s rapid rise, arguing that as a startup operated out of China, it is necessarily subject to that country’s laws and content censorship requirements.

Indeed, when I used DeepSeek’s iOS app here in the U.S., I found it would not answer questions about Tiananmen Square, the site of the 1989 pro-democracy student protests and uprising and of the Chinese military’s subsequent violent crackdown, which killed at least 200 people and possibly thousands, earning the event the nickname “Tiananmen Square Massacre” in Western media outlets.

Ben Hylak, a former Apple human interface designer and co-founder of AI product analytics platform Dawn, posted on X how asking about this subject caused DeepSeek R1 to enter a circuitous loop.

As a member of the press myself, I of course take freedom of speech and expression extremely seriously; it is one of the most fundamental causes I champion.

Yet I would be remiss not to note that OpenAI’s models and products, including ChatGPT, also refuse to answer a whole range of questions, even about innocuous content, especially anything pertaining to human sexuality and erotic/adult, NSFW subject matter.

It’s not an apples-to-apples comparison, of course. And there will be some for whom the resistance to relying on foreign technology makes them skeptical of DeepSeek’s ultimate value and utility. But there’s no denying its performance and low cost.

And at a time when 16.5% of all goods imported into the U.S. come from China, it’s hard for me to caution against using DeepSeek R1 on the basis of censorship concerns or security risks, especially when the model code is freely available to download, take offline, use on-device in secure environments, and fine-tune at will.

I do detect, however, a certain existential anxiety about the “fall of the West” and the “rise of China” motivating some of the animated discussion around DeepSeek. Others have already connected it to how U.S. users flocked to the app Xiaohongshu (aka “Little Red Book”) when TikTok was briefly banned in this country, only to be amazed at the quality of life in China depicted in the videos shared there. DeepSeek R1’s arrival occurs in this narrative context, one in which China appears (and by many metrics clearly is) ascendant while the U.S. appears (and by many metrics also is) in decline.

The first but hardly the last Chinese AI model to shake the world

It also won’t be the last Chinese AI model to threaten the dominance of Silicon Valley giants, even as they, like OpenAI, raise more money than ever in pursuit of artificial general intelligence (AGI): programs that outperform humans at most economically valuable work.

Just yesterday, another Chinese model from TikTok parent company ByteDance, called Doubao-1.5-pro, was released with performance matching OpenAI’s non-reasoning GPT-4o model on third-party benchmarks, but again at 1/50th the cost.

Chinese models have gotten so good, so fast, even those outside the tech industry are taking note: The Economist magazine just ran a piece on DeepSeek’s success and that of other Chinese AI efforts, and political commentator Matt Bruenig posted on X that: “I have been extensively using Gemini, ChatGPT, and Claude for NLRB document summary for nearly a year. Deepseek is better than all of them at it. The chatbot version of it is free. Price to use it’s API is 99.5% below the price of OpenAI’s API. [shrug emoji]”

How does OpenAI respond?

Little wonder, then, that OpenAI co-founder and CEO Sam Altman said today that the company was bringing its yet-to-be-released second reasoning model family, o3, to ChatGPT, even for free users. OpenAI still appears to be carving its own path with more proprietary and advanced models, setting the industry standard.

But the question becomes: with DeepSeek, ByteDance, and other Chinese AI companies nipping at its heels, how long can OpenAI remain in the lead at making and releasing new cutting-edge AI models? And if and when it falls, how hard and how fast will its decline be?

OpenAI does have a historical precedent going for it, though. If DeepSeek and other Chinese AI models do indeed become to LLMs what Google’s open source Android became to mobile, taking the lion’s share of the market for a while, you only have to look at how Apple’s iPhone, with its locked-down, proprietary, all-in-house approach, managed to carve off the high end of the market and steadily expand downward from there, especially in the U.S., to the point that it now owns nearly 60% of the domestic smartphone market.

Still, for all those spending big bucks to use AI models from leading labs, DeepSeek shows the same capabilities may be available for much cheaper and with much greater control. And in an enterprise setting, that may be enough to win the ballgame.
