From Silicon to Stargate: Aligning with OpenAI, Oracle, and the Future of AI Infrastructure

The Ampere acquisition doesn’t stand alone. It is the latest and perhaps most strategic move in a broader chess game SoftBank is playing across the AI and data infrastructure landscape. To understand its full impact, the deal must be seen in context with SoftBank’s recent alignment with two other heavyweight players: OpenAI and Oracle.

Earlier this year, OpenAI unveiled plans for its Stargate project, a massive, multi-billion-dollar supercomputing campus set to come online by 2028. Stargate is expected to be one of the largest AI infrastructure builds in history, and Oracle will be the primary cloud provider for the project. Behind the scenes, SoftBank is playing a key financial and strategic role, helping OpenAI secure capital and compute resources for the long-term training and deployment of advanced AI models.

Oracle, in turn, is both an investor in Ampere and a major customer—one of the first hyperscale operators to go all-in on Ampere’s Arm-based CPUs for powering cloud services. With SoftBank now controlling Ampere outright, it gains a stronger seat at the table with both Oracle and OpenAI—positioning itself as an essential enabler of the AI supply chain from silicon to software.

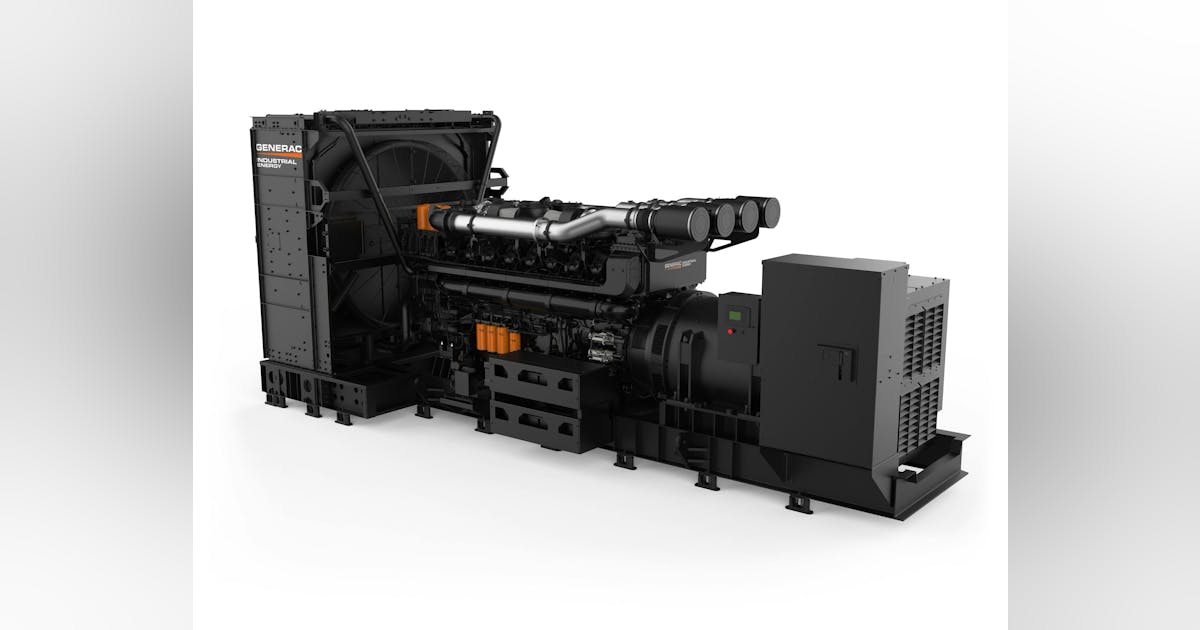

The Ampere deal gives SoftBank direct access to a custom silicon pipeline purpose-built for the kind of high-efficiency, high-throughput compute that AI inference and model serving demand at scale. Combine this with SoftBank’s ownership of Arm, the bedrock of energy-efficient chip design, and its portfolio now spans everything from the instruction set to the cloud instance.

More importantly, it gives SoftBank leverage. In a world where NVIDIA dominates AI training workloads, there’s growing appetite for alternatives in inference, especially at scale, where power, cost, and flexibility become deciding factors. Ampere’s CPU roadmap, combined with AI acceleration technology from Graphcore (which SoftBank acquired in 2024) and Arm’s global design ecosystem, offers a compelling vision for an open, sustainable AI infrastructure stack, not just for SoftBank’s own ambitions but also for partners like OpenAI and Oracle, which are building the next layer of the internet.

In short, SoftBank isn’t just assembling parts. It’s building a vertically integrated platform for AI infrastructure, one that spans chip design, data center hardware, cloud partnerships, and capital investment. The Ampere acquisition solidifies that platform and, in the long run, may prove to be its most pivotal piece yet.