A full day’s work for Dora Manriquez, who drives for Uber and Lyft in the San Francisco Bay Area, includes waiting in her car for a two-digit number to appear. The apps keep sending her rides that are too cheap to pay for her time—$4 or $7 for a trip across San Francisco, $16 for a trip from the airport for which the customer is charged $100. But Manriquez can’t wait too long to accept a ride, because her acceptance rate contributes to her driving score for both companies, which can then affect the benefits and discounts she has access to.

The systems are black boxes, and Manriquez can’t know for sure which data points affect the offers she receives or how. But what she does know is that she’s driven for ride-share companies for the last nine years, and this year, having found herself unable to score enough better-paying rides, she has to file for bankruptcy.

Every action Manriquez takes—or doesn’t take—is logged by the apps she must use to work for these companies. (An Uber spokesperson told MIT Technology Review that acceptance rates don’t affect drivers’ fares. Lyft did not return a request for comment on the record.) But app-based employers aren’t the only ones keeping a very close eye on workers today.

A study conducted in 2021, when the covid-19 pandemic had greatly increased the number of people working from home, revealed that almost 80% of companies surveyed were monitoring their remote or hybrid workers. A New York Times investigation in 2022 found that eight of the 10 largest private companies in the US track individual worker productivity metrics, many in real time. Specialized software can now measure and log workers’ online activities, physical location, and even behaviors like which keys they tap and what tone they use in their written communications—and many workers aren’t even aware that this is happening.

What’s more, required work apps on personal devices may have access to more than just work—and as many of us know from our private lives, most technology can become surveillance technology if the wrong people have access to the data. While there are some laws in this area, those that protect privacy for workers are fewer and patchier than those applying to consumers. Meanwhile, the global market for employee monitoring software is forecast to reach $4.5 billion by 2026, with North America claiming the dominant share.

Working today—whether in an office, a warehouse, or your car—can mean constant electronic surveillance with little transparency, and potentially with livelihood-ending consequences if your productivity flags. What matters even more than the effects of this ubiquitous monitoring on privacy may be how all that data is shifting the relationships between workers and managers, companies and their workforce. Managers and management consultants are using worker data, individually and in the aggregate, to create black-box algorithms that determine hiring and firing, promotion and “deactivation.” And this is laying the groundwork for the automation of tasks and even whole categories of labor on an endless escalator to optimized productivity. Some human workers are already struggling to keep up with robotic ideals.

We are in the midst of a shift in work and workplace relationships as significant as the Second Industrial Revolution of the late 19th and early 20th centuries. And new policies and protections may be necessary to correct the balance of power.

Data as power

Data has been part of the story of paid work and power since the late 19th century, when manufacturing was booming in the US and a rise in immigration meant cheap and plentiful labor. The mechanical engineer Frederick Winslow Taylor, who would become one of the first management consultants, created a strategy called “scientific management” to optimize production by tracking and setting standards for worker performance.

Soon after, Henry Ford broke down the auto manufacturing process into mechanized steps to minimize the role of individual skill and maximize the number of cars that could be produced each day. But the transformation of workers into numbers has a longer history. Some researchers see a direct line between Taylor’s and Ford’s unrelenting focus on efficiency and the dehumanizing labor optimization practices carried out on slave-owning plantations.

As manufacturers adopted Taylorism and its successors, time was replaced by productivity as the measure of work, and the power divide between owners and workers in the United States widened. But other developments soon helped rebalance the scales. In 1914, Section 6 of the Clayton Act established the federal legal right for workers to unionize and stated that “the labor of a human being is not a commodity.” In the years that followed, union membership grew, and the 40-hour work week and the minimum wage were written into US law. Though the nature of work had changed with revolutions in technology and management strategy, new frameworks and guardrails emerged to meet that change.

More than a hundred years after Taylor published his seminal book, The Principles of Scientific Management, “efficiency” is still a business buzzword, and technological developments, including new uses of data, have brought work to another turning point. But the federal minimum wage and other worker protections haven’t kept up, leaving the power divide even starker. In 2023, CEO pay was 290 times average worker pay, a disparity that’s increased more than 1,000% since 1978. Data may play the same kind of intermediary role in the boss-worker relationship that it has since the turn of the 20th century, but the scale has exploded. And the stakes can be a matter of physical health.

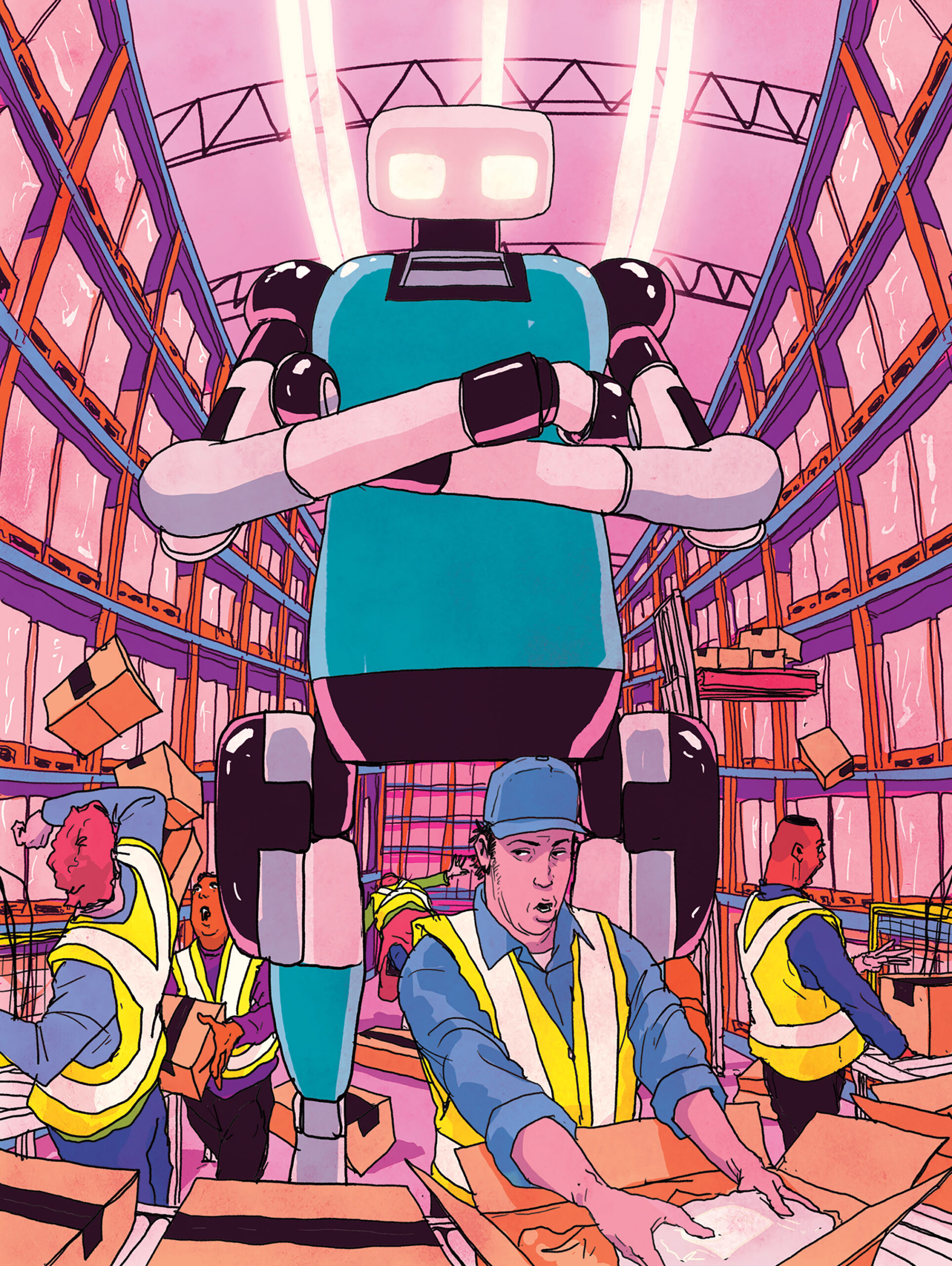

In 2024, a report from a Senate committee led by Bernie Sanders, based on an 18-month investigation of Amazon’s warehouse practices, found that the company had been setting the pace of work in those facilities with black-box algorithms, presumably calibrated with data collected by monitoring employees. (In California, because of a 2021 law, Amazon is required to at least reveal the quotas and standards workers are expected to comply with; elsewhere the bar can remain a mystery to the very people struggling to meet it.) The report also found that in each of the previous seven years, Amazon workers had been almost twice as likely to be injured as other warehouse workers, with injuries ranging from concussions to torn rotator cuffs to long-term back pain.

The Sanders report found that between 2020 and 2022, two internal Amazon teams tasked with evaluating warehouse safety recommended reducing the required pace of work and giving workers more time off. Another found that letting robots set the pace for human labor was correlated with subsequent injuries. The company rejected all the recommendations for technical or productivity reasons. But the report goes on to reveal that in 2022, another team at Amazon, called Core AI, also evaluated warehouse safety and concluded that unrealistic pacing wasn’t the reason all those workers were getting hurt on the job. Core AI said that the cause, instead, was workers’ “frailty” and “intrinsic likelihood of injury.” The issue was the limitations of the human bodies the company was measuring, not the pressures it was subjecting those bodies to. Amazon stood by this reasoning during the congressional investigation.

Amazon spokesperson Maureen Lynch Vogel told MIT Technology Review that the Sanders report is “wrong on the facts” and that the company continues to reduce incident rates for accidents. “The facts are,” she said, “our expectations for our employees are safe and reasonable—and that was validated both by a judge in Washington after a thorough hearing and by the state’s Board of Industrial Insurance Appeals.”

Yet this line of thinking is hardly unique to Amazon, although the company could be seen as a pioneer in the datafication of work. (An investigation found that over one year between 2017 and 2018, the company fired hundreds of workers at a single facility—by means of automatically generated letters—for not meeting productivity quotas.) An AI startup called Artisan recently placed a series of billboards and bus signs in the Bay Area touting the benefits of its automated sales agents, which it calls “Artisans,” over human workers. “Artisans won’t complain about work-life balance,” one said. “Artisans won’t come into work hungover,” claimed another. “Stop hiring humans,” one hammered home.

The startup’s leadership took to the company blog to say that the marketing campaign was intentionally provocative and that Artisan believes in the potential of human labor. But the company also asserted that using one of its AI agents costs 96% less than hiring a human to do the same job. The campaign hit a nerve: When data is king, humans—whether warehouse laborers or knowledge workers—may not be able to outperform machines.

AI management and managing AI

Companies that use electronic employee monitoring report that they are most often looking to the technologies not only to increase productivity but also to manage risk. And software like Teramind offers tools and analysis to help with both priorities. While Teramind, a globally distributed company, keeps its list of over 10,000 client companies private, it provides resources for the financial, health-care, and customer service industries, among others—some of which have strict compliance requirements that can be tricky to keep on top of. The platform allows clients to set data-driven standards for productivity, establish thresholds for alerts about toxic communication tone or language, create tracking systems for sensitive file sharing, and more.

Electronic monitoring and management are also changing existing job functions in real time. Teramind’s clients must figure out who at their company will handle and make decisions around employee data. Depending on the type of company and its needs, Osipova says, that could be HR, IT, the executive team, or another group entirely—and the definitions of those roles will change with these new responsibilities.

Workers’ tasks, too, can shift with updated technology, sometimes without warning. In 2020, when a major hospital network piloted using robots to clean rooms and deliver food to patients, Criscitiello heard from SEIU-UHW members that they were confused about how to work alongside them. Workers certainly hadn’t received any training for that. “It’s not ‘We’re being replaced by robots,’” says Criscitiello. “It’s ‘Am I going to be responsible if somebody has a medical event because the wrong tray was delivered? I’m supervising the robot—it’s on my floor.’”

Nurses are also seeing their jobs expand to include technology management. Carmen Comsti of National Nurses United, the largest nurses’ union in the country, says that while management isn’t explicitly saying nurses will be disciplined for errors that occur as algorithmic tools like AI transcription systems or patient triaging mechanisms are integrated into their workflows, that’s functionally how it works. “If a monitor goes off and the nurse follows the algorithm and it’s incorrect, the nurse is going to get blamed for it,” Comsti says. Nurses and their unions don’t have access to the inner workings of the algorithms, so it’s impossible to say what data these or other tools have been trained on, or whether the data on how nurses work today will be used to train future algorithmic tools. What it means to be a worker, manager, or even colleague is on shifting ground, and frontline workers don’t have insight into which way it’ll move next.

The state of the law and the path to protection

Today, there isn’t much regulation on how companies can gather and use workers’ data. While the General Data Protection Regulation (GDPR) offers some worker protections in Europe, no US federal laws consistently shield workers’ privacy from electronic monitoring or establish firm guardrails for the implementation of algorithm-driven management strategies that draw on the resulting data. (The Electronic Communications Privacy Act allows employers to monitor employees if there are legitimate business reasons and if the employee has already given consent through a contract; tracking productivity can qualify as a legitimate business reason.)

But in late 2024, the Consumer Financial Protection Bureau did issue guidance warning companies using algorithmic scores or surveillance-based reports that they must follow the Fair Credit Reporting Act—which previously applied only to consumers—by getting workers’ consent and offering transparency into what data was being collected and how it would be used. And the Biden administration’s Blueprint for an AI Bill of Rights had suggested that the enumerated rights should apply in employment contexts. But none of these are laws.

So far, binding regulation is being introduced state by state. In 2023, the California Consumer Privacy Act (CCPA) was officially extended to include workers and not just consumers in its protections, even though workers had been specifically excluded when the act was first passed. That means California workers now have the right to know what data is being collected about them and for what purpose, and they can ask to correct or delete that data. Other states are working on their own measures. But with any law or guidance, whether at the federal or state level, the reality comes down to enforcement. Criscitiello says SEIU is testing out the new CCPA protections.

“It’s too early to tell, but my conclusion so far is that the onus is on the workers,” she says. “Unions are trying to fill this function, but there’s no organic way for a frontline worker to know how to opt out [of data collection], or how to request data about what’s being collected by their employer. There’s an education gap about that.” And while CCPA covers the privacy aspect of electronic monitoring, it says nothing about how employers can use any collected data for management purposes.

The push for new protections and guardrails is coming in large part from organized labor. Unions like National Nurses United and SEIU are working with legislators to create policies on workers’ rights in the face of algorithmic management. And advocacy groups for app-based workers have been pushing for new minimum pay rates and against wage theft—and winning. There are other successes to be counted already, too. One has to do with electronic visit verification (EVV), a system that records information about in-home visits by health-care providers. The 21st Century Cures Act, signed into law in 2016, required all states to set up such systems for Medicaid-funded home health care. The intent was to create accountability and transparency to better serve patients, but some health-care workers in California were concerned that the monitoring would be invasive and disruptive for them and the people in their care.

Brandi Wolf, the statewide policy and research director for SEIU’s long-term-care workers, says that in collaboration with disability rights and patient advocacy groups, the union was able to get language into legislation passed in the 2017–2018 term that would take effect the next fiscal year. It indicated to the federal government that California would be complying with the requirement, but that EVV would serve mainly a timekeeping function, not a management or disciplinary one.

Today advocates say that individual efforts to push back against or evade electronic monitoring are not enough; the technology is too widespread and the stakes too high. The power imbalances and lack of transparency affect workers across industries and sectors—from contract drivers to unionized hospital staff to well-compensated knowledge workers. What’s at issue, says Minsu Longiaru, a senior staff attorney at PowerSwitch Action, a network of grassroots labor organizations, is our country’s “moral economy of work”—that is, an economy based on human values and not just capital. Longiaru believes there’s an urgent need for a wave of socially protective policies on the scale of those that emerged out of the labor movement in the early 20th century. “We’re at a crucial moment right now where as a society, we need to draw red lines in the sand where we can clearly say just because we can do something technological doesn’t mean that we should do it,” she says.

Like so many technological advances that have come before, electronic monitoring and the algorithmic uses of the resulting data are not changing the way we work on their own. The people in power are flipping those switches. And shifting the balance back toward workers may be the key to protecting their dignity and agency as the technology speeds ahead. “When we talk about these data issues, we’re not just talking about technology,” says Longiaru. “We spend most of our lives in the workplace. This is about our human rights.”

Rebecca Ackermann is a writer, designer, and artist based in San Francisco.