Twenty-five years ago, a data center boom helped fuel a race to build gas-fired power plants, with the energy secretary, utilities, politicians and experts warning of blackouts and economic stagnation if the country didn’t meet surging demand for electricity.

By 2001, however, the dot-com bubble had burst, the economy was in recession and the huge demand increase never materialized. Efficiency and productivity improved rapidly, and demand remained more or less flat for the next two decades, leaving many utilities with excess capacity and ratepayers footing the bill.

Some analysts and industry sources see parallels between then and now. Once again, headlines are warning of imminent energy shortfalls due largely to the power needs of artificial intelligence. Leading figures in government and industry are promoting more firm generation, and particularly gas, as a matter of economic and national security.

“Can the same thing happen? Definitely,” said Eugene Kim, Wood Mackenzie’s Americas Gas Research team director. “The utilities and anyone planning for power demand is forecasting unprecedented and, in some cases, even exponential growth. Whether that materializes or not – huge degree of uncertainty.”

Gas investment reaches new heights

Investment firms, utilities, tech giants, energy companies and others are pouring billions into acquiring existing gas plants or developing new ones to serve data centers. Gas power merger and acquisition valuations have doubled since 2024, reaching up to $1.93 million/MW in some markets, according to energy analytics firm Enverus.

While there are echoes of the turn of the millennium today, there are a few important differences.

The first is that the U.S. is producing and consuming more gas than ever before — driven largely by the rise of fracking — with production concentrated in Texas and Louisiana to the south, and Pennsylvania and West Virginia farther north.

Secondly, as the U.S. became a gas-producing powerhouse, the gas and electric power sectors have become much more interdependent. In 2000, the electric power sector accounted for about 22% of U.S. gas consumption, while gas accounted for around 16% of electricity produced, according to the U.S. Energy Information Administration.

By 2023, the electric power sector accounted for about 40% of total U.S. natural gas consumption, and gas accounted for about 42% to 43% of utility-scale electricity generation, making it the single largest fuel source, the EIA says. Over the years, the role of gas has grown, largely displacing coal as the latter became uneconomical.

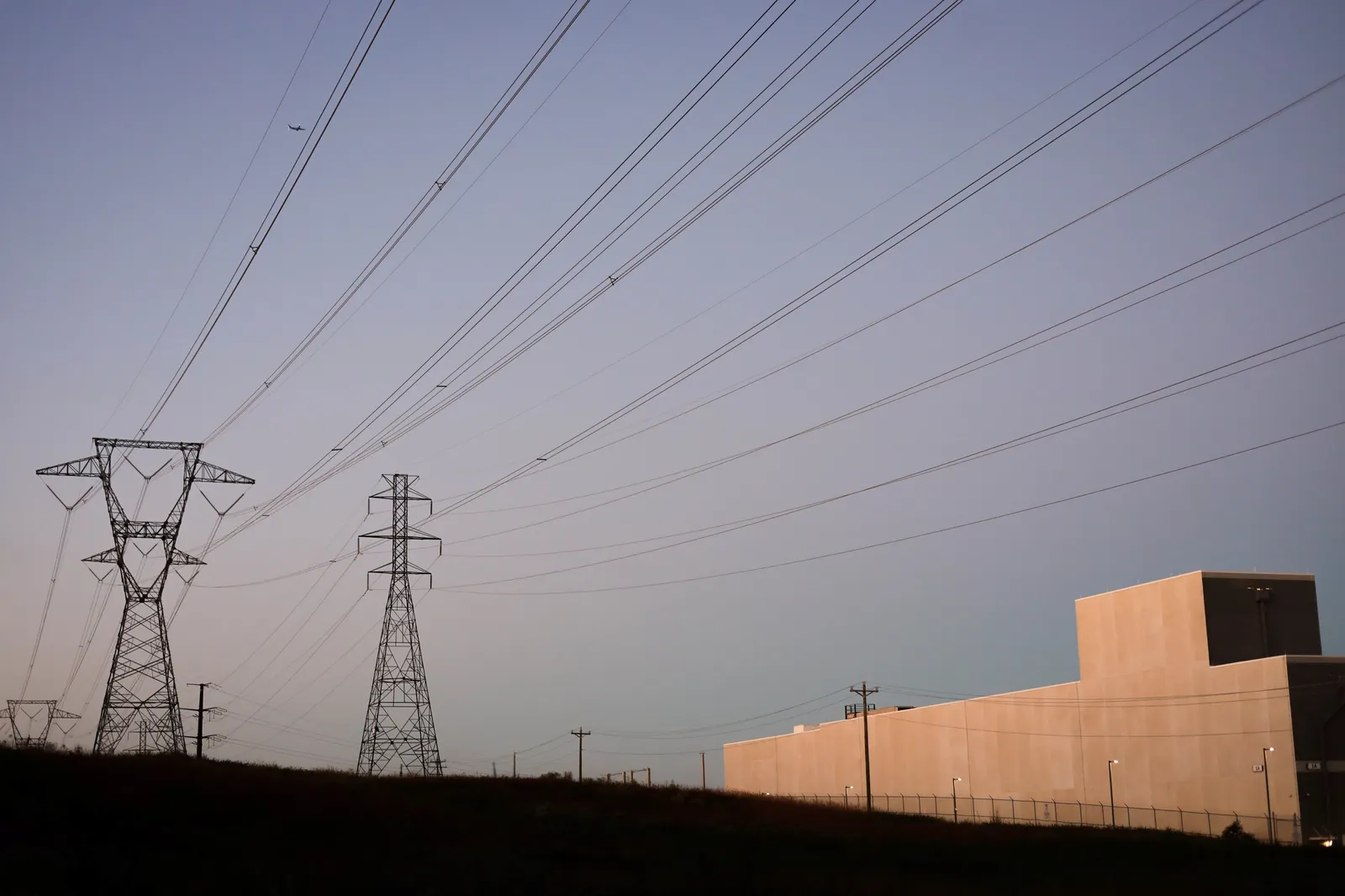

DataBank’s IAD4 data center under construction in Ashburn, Virginia.

Diana DiGangi/Utility Dive

The third key difference is the rise of renewables and storage at scale. While gas is the dominant fuel for U.S. power generation, it makes up a small fraction of the new generation coming online this year, and energy storage is meeting a small but growing share of daily peak demand after the sun goes down. Utility-scale wind and solar account for 83% of FERC’s “high probability” additions through July 2028, while gas makes up about 16%.

There is some evidence that this could change.

The outlook for renewables has dimmed since President Donald Trump took office this year and largely made good on his promises to strip wind and solar of tax credits, permits and other government support while throwing his weight behind fossil fuels.

Scott Wilmot, an energy analyst at Enverus, said that before the One Big Beautiful Bill Act passed in July, he would have said the quality and size of the renewable project pipeline were more than enough to meet projected energy demand. Now, he’s not so sure.

“The reality is the [levelized cost of electricity] for these renewable projects has gone up without those tax credits,” he said. “A lot of folks who are developing these projects are probably reconsidering a lot of their pipeline.”

PJM Interconnection, the nation’s largest grid operator, is fast-tracking 11.8 GW of generation, mostly gas. Gas also dominated MISO’s fast-track interconnection review, accounting for 19 GW of 26 GW. In ERCOT, gas rose from 6.8% of the interconnection queue in August 2024 to 9.1% as of August 2025.

At the same time, major gas pipeline expansions are planned or proposed.

Amy Andryszak, president and CEO of the Interstate Natural Gas Association of America, said existing pipelines are running at capacity and that her organization’s members are reporting a “record number” of inquiries about new pipelines from potential customers.

“They indicate they have not seen this level of interest in building since around 2010 when the fracking boom drove interest in pipeline development,” Andryszak said in an emailed statement. “Many members are already announcing new or revived pipeline projects, and we expect many more will file for certificates at [the Federal Energy Regulatory Commission] over the next 18 months.”

FERC is expected to issue guidance soon on colocation rules, which could make it easier to route gas directly to data centers and other large loads.

The largest gas-fired power plant in the country would be a 4.5-GW project that Knighthead Capital Management is developing at the former Homer City coal plant in Pennsylvania as part of a 3,200-acre data center campus.

A Google data center in Ashburn, Virginia, located down the street from the Potomac Energy Center, a 774-MW natural gas plant.

Diana DiGangi/Utility Dive

Some developers say that even without data centers, electricity demand is rising, and that gas, which is firm, dispatchable and proven, will be an important part of the energy mix for decades to come, especially as more coal generation retires.

Bilal Khan is an executive at Blackstone who oversaw the firm’s recent acquisition of Hill Top Energy Center, a 620-MW natural gas plant in Pennsylvania, for about $1,600/kW. Pennsylvania is part of PJM, which has been setting record capacity auction prices, making it an attractive market for generators. The grid operator has attributed those prices to supply and demand conditions, but its independent market monitor says data centers are the “primary reason” for the surge.
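To make the per-kilowatt deal figures in this story more concrete, here is a rough Python sketch that converts them into totals. It assumes the roughly $1,600/kW price applies to Hill Top's full 620-MW nameplate capacity and ignores debt, fees and other deal terms, so the output is a back-of-the-envelope approximation, not a reported purchase price. The helper names are illustrative.

```python
def implied_value_usd(capacity_mw: float, price_per_kw: float) -> float:
    """Approximate total value: capacity converted from MW to kW, times $/kW.

    Assumption: the price applies uniformly to the full nameplate capacity.
    """
    return capacity_mw * 1_000 * price_per_kw


def per_mw_to_per_kw(price_per_mw: float) -> float:
    """Convert a $/MW valuation into the equivalent $/kW."""
    return price_per_mw / 1_000


# Hill Top Energy Center: 620 MW at about $1,600/kW.
hill_top = implied_value_usd(620, 1_600)
print(f"Hill Top implied value: ${hill_top / 1e9:.2f} billion")

# Enverus top-end M&A valuation of $1.93 million/MW, expressed per kW.
top_market = per_mw_to_per_kw(1.93e6)
print(f"Enverus top-end valuation: ${top_market:,.0f}/kW")
```

On these assumptions the Hill Top price works out to roughly $1 billion, and Enverus's top-end valuation of $1.93 million/MW is equivalent to $1,930/kW, above the roughly $1,600/kW paid for Hill Top.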

Khan said that even putting aside data centers, he’s bullish on natural gas thanks to the other factors driving increased power demand, including manufacturing and electrification.

“We still need new power supply that’s reliable, consistent and affordable,” Khan said. “This moment is unique and in contrast to the last approximately 20 years when power demand was flat.”

A risky investment

Khan acknowledged, however, that new natural gas plants face significant obstacles. The backlog for new turbines, for example, can stretch up to seven years amid global competition for turbines and other components such as transformers.

“There’s an equipment shortage, there’s a labor shortage and it’s more challenging to obtain all necessary permits,” Khan said.

And the cost of deploying new gas generation is rising fast.

According to Enverus, capital costs for new natural gas power plants now average $2,200/kW to $3,000/kW. An analysis by GridLab, which adds a levelized $1,500/kW for 20 years of fuel and $500/kW for pipeline infrastructure, puts the all-in cost of new gas generation at $4,000/kW to $4,500/kW.

Kevin Smith, CEO of Arevon Energy, spent years working on natural gas projects alongside nuclear and renewables before transitioning to focus on renewables exclusively. He suggested there was a ceiling to how much gas could be built to meet near-term demand given the prices, pipeline constraints and interconnection queues.

“Tens of gigawatts of new generation from natural gas is likely at least five years away, if not more,” Smith said.

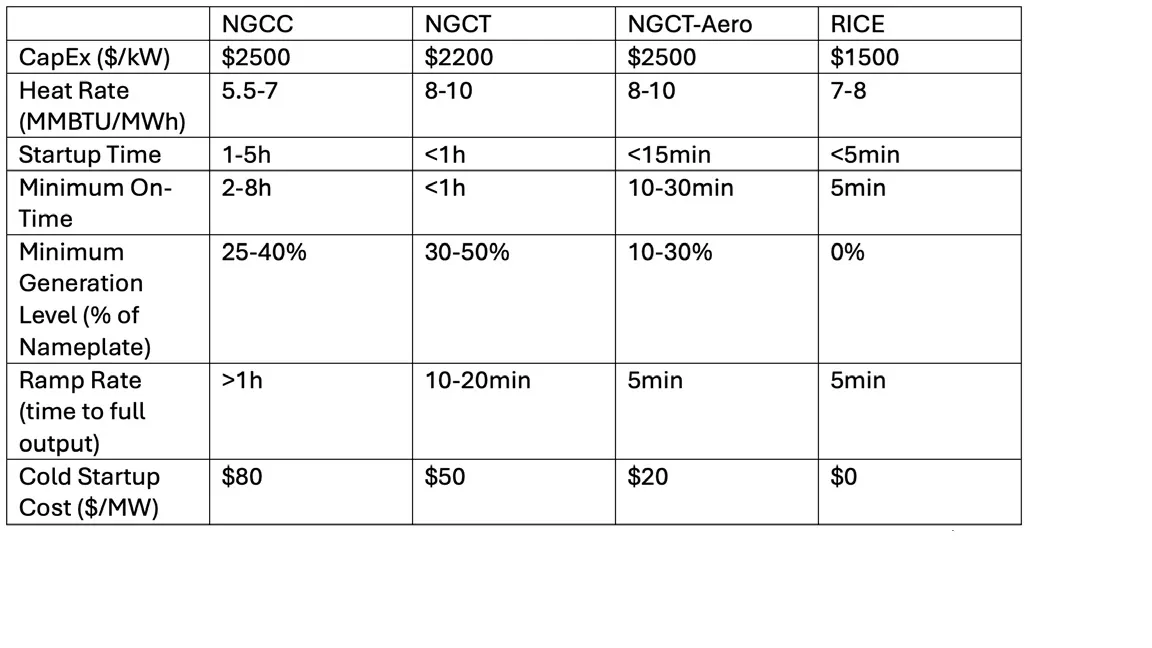

Changing market dynamics also mean new gas-fired generators face a narrower set of conditions under which they are economical to run, according to a recent report on thermal generation from Ascend Analytics.

Their competitiveness depends on the type of gas generation technology, and technologies differ in efficiency, availability, ramp rate, cold-start cost and other factors. But overall, Ascend said, the value of gas assets will become increasingly concentrated in “narrow, infrequent windows of time,” increasing the importance of availability, secure fuel supply and strategically scheduled preventative maintenance.

Permission granted by Ascend Analytics

“In the face of rising electricity demand and declining capacity accreditation for renewables and storage, the need for dispatchable, long-duration capacity resources will persist. To meet this need, new gas capacity will be built,” it concluded. “However, investments in new gas should be made cautiously, prudently, and strategically. Thermal generation will remain a risky investment, with stranded asset risks not going away.”

Have utilities learned their lesson?

Against a backdrop of rising residential power bills, utilities and regulators say they are taking precautions to protect ratepayers from the possibility of a bubble.

NRG Energy’s vice president of regulatory affairs, Travis Kavulla, said past experience has shaped the industry’s current approach, in which competitive generators bear the risk of load forecasts, at least in deregulated markets.

“All of that has ramifications for the extent to which people are comfortable rapidly building out generation,” he said during a recent panel discussion hosted by the Heritage Foundation. “That’s why it’s so important for long-term offtake agreements to be formalized in this industry.”

Some regulators and utilities are moving to place more of the risk on hyperscalers by creating new rate classes for large loads. At least 30 states have proposed or approved large-load tariffs over the past several years to manage growth and protect existing ratepayers, according to a database from the North Carolina Clean Energy Technology Center and the Smart Electric Power Alliance.

The Public Utilities Commission of Ohio recently approved AEP Ohio’s plan to make data centers pay for at least 85% of the energy they request, even if they use less, to cover the cost of infrastructure. The plan also requires data centers to show they are financially viable and to pay an exit fee if their project is canceled or they can’t meet their obligations.
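A simplified way to see how a minimum-payment floor like this shifts risk onto the customer: the data center is billed for whichever is greater, its actual use or 85% of what it requested. The Python sketch below assumes exactly that rule and nothing else; the function name and example figures are hypothetical, and the approved AEP Ohio tariff includes further terms, such as the exit fee, that are not modeled here.

```python
# Floor from the AEP Ohio plan: pay for at least 85% of what is requested.
MIN_SHARE = 0.85


def billable_mw(requested_mw: float, used_mw: float, min_share: float = MIN_SHARE) -> float:
    """Capacity a large-load customer is billed for under a minimum-payment floor.

    Simplified, hypothetical rule: bill the greater of actual use or
    min_share times the requested amount. Rates, exit fees and other
    tariff terms are deliberately left out.
    """
    return max(used_mw, min_share * requested_mw)


# Hypothetical data center that requests 100 MW, at three usage levels.
for used in (40, 85, 95):
    print(f"uses {used} MW -> billed for {billable_mw(100, used)} MW")
```

Under this simplified rule, a hypothetical customer that requests 100 MW but uses only 40 MW is still billed for 85 MW, while one using 95 MW is billed for its actual use.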

Since then, the utility has said its data center pipeline shrank by half, a positive development according to Wilmot of Enverus.

“We just can’t have absolutely unmitigated data center demand growth,” he said. “Otherwise, things are going to be very challenging for the ratepayer.”

Dominion Energy, which serves the area around Ashburn, Virginia (home to the highest concentration of data centers in the world), has proposed a similar tariff. Under its proposal, large-load customers would be required to make a 14-year commitment to pay for the power they request regardless of how much they actually use.

Aaron Ruby, a Dominion spokesperson, said the utility is focused on making sure residential customers aren’t subsidizing the cost of infrastructure needed by data center customers, and that it has yet to encounter delays as it pursues 5.9 GW of new gas generation.

He suggested, however, that there was little danger of overbuilding. In the last 20 years, he could recall only one instance of Dominion developing infrastructure for a data center that fell through.

“Within a year or two, another data center customer emerged and fully utilized the infrastructure,” he said.

Others expressed skepticism.

Abe Silverman, an assistant research scholar with Johns Hopkins University’s Ralph O’Connor Sustainable Energy Institute, said it was still too early to know what effect AI will have on power consumption.

“This is a baby industry, and we are building and paying for infrastructure today for data centers that don’t yet exist,” he said. “We are making an investment to build out that grid infrastructure based on these very frothy assumptions.”

Kim, the Wood Mackenzie gas researcher, suggested that the power industry has matured since the last bubble burst.

Back then, the three dominant gas turbine manufacturers (GE, whose power business is now GE Vernova; Mitsubishi; and Siemens) were “burned significantly,” he said. “The utilities were burned significantly by overbuilding, and now they’re more cautious.”

Kim predicted gas generation will likely continue to increase due to coal retirements and growing demand for electricity, with or without data centers. Advanced manufacturing facilities require more energy. Summers are getting hotter as the climate changes, increasing demand for cooling, and larger renewable portfolios will require dispatchable generation that cannot currently be replaced by storage, he added.

But an economic slump, technological innovation, improved efficiency and any number of unforeseen factors could impact what is still a highly speculative growth scenario, he said.

“Nevertheless, as pipelines could take two years to build, four years to build, you then have to start building those pipelines if you’re going to build that power plant and support it,” Kim said. “Whether those power plants or the gas pipeline go underutilized because that boom didn’t realize is yet to be determined.”