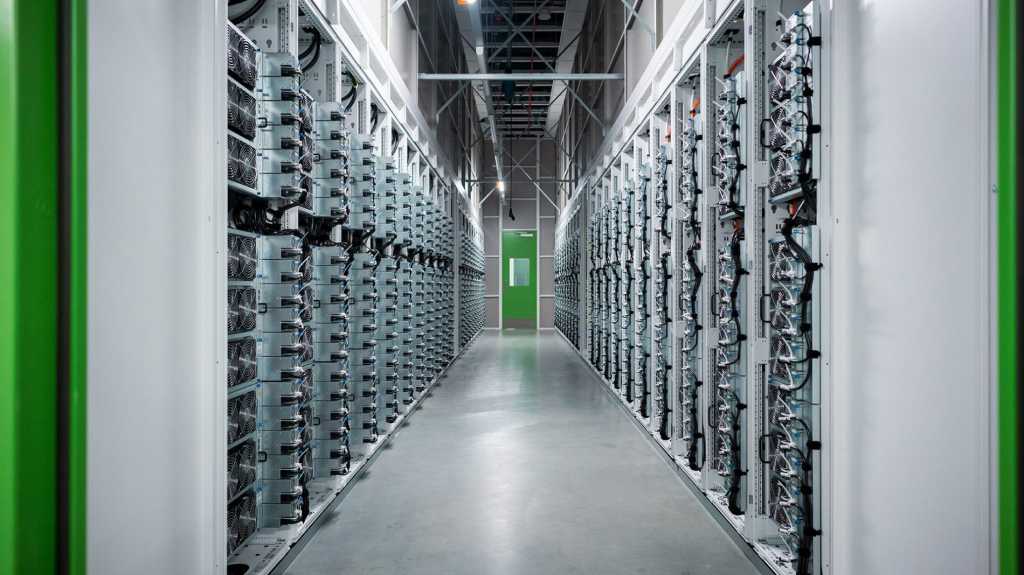

“The AWS AI Factory seeks to resolve the tension between cloud-native innovation velocity and sovereign control. Historically, these objectives lived in opposition. CIOs faced an unsustainable dilemma: choose between on-premises security or public cloud cost and speed benefits,” he said. “This is arguably AWS’s most significant move in the sovereign AI landscape.”

On-premises GPUs are already a thing

AI Factories isn’t the first attempt to put cloud-managed AI accelerators in customers’ data centers. Oracle introduced Nvidia processors to its Cloud@Customer managed on-premises offering in March, while Microsoft announced last month that it will add Nvidia processors to its Azure Local service. Google Distributed Cloud also includes a GPU offering, and AWS itself already offers lower-powered Nvidia processors in AWS Outposts.

AWS’s AI Factories is also likely to square off against a range of similar products, such as Nvidia’s AI Factory, Dell’s AI Factory stack, and HPE’s Private Cloud for AI, each tightly coupled with Nvidia GPUs, networking, or software, and all vying to become the default on-premises AI platform.

But, said Sopko, AWS will have an advantage over rivals due to its hardware-software integration and operational maturity: “The secret sauce is the software, not the infrastructure,” he said.

Omdia principal analyst Alexander Harrowell expects AWS’s AI Factories to combine the on-premises control of Outposts with the flexibility, and the ability to run a wider variety of services, offered by AWS Local Zones, which place small data centers close to large population centers to reduce service latency.

Sopko cautioned that enterprises are likely to face high commitment costs, drawing a parallel with Oracle’s OCI Dedicated Region, one of its Cloud@Customer offerings.