
DeepSeek R1’s Monday release has sent shockwaves through the AI community, disrupting assumptions about what’s required to achieve cutting-edge AI performance. Matching OpenAI’s o1 at just 3%-5% of the cost, this open-source model has not only captivated developers but also challenged enterprises to rethink their AI strategies.

The model has rocketed to become the top-trending model on Hugging Face (109,000 downloads, as of this writing), as developers rush to try it out and seek to understand what it means for their AI development. Users are commenting that DeepSeek’s accompanying search feature (which you can find at DeepSeek’s site) is now superior to competitors like OpenAI and Perplexity, and is rivaled only by Google’s Gemini Deep Research.

The implications for enterprise AI strategies are profound: With reduced costs and open access, enterprises now have an alternative to costly proprietary models like OpenAI’s. DeepSeek’s release could democratize access to cutting-edge AI capabilities, enabling smaller organizations to compete effectively in the AI arms race.

This story focuses on exactly how DeepSeek managed this feat, and what it means for the vast number of users of AI models. For enterprises developing AI-driven solutions, DeepSeek’s breakthrough challenges assumptions of OpenAI’s dominance and offers a blueprint for cost-efficient innovation. How DeepSeek did what it did is the most instructive part of the story.

DeepSeek’s breakthrough: Moving to pure reinforcement learning

In November, DeepSeek made headlines with its announcement that it had achieved performance surpassing OpenAI’s o1, but at the time it only offered a limited R1-lite-preview model. With Monday’s full release of R1 and the accompanying technical paper, the company revealed a surprising innovation: a deliberate departure from the conventional supervised fine-tuning (SFT) process widely used in training large language models (LLMs).

SFT, a standard step in AI development, involves training models on curated datasets to teach step-by-step reasoning, often referred to as chain-of-thought (CoT). It is considered essential for improving reasoning capabilities. However, DeepSeek challenged this assumption by skipping SFT entirely, opting instead to rely on reinforcement learning (RL) to train the model.

This bold move forced DeepSeek-R1 to develop independent reasoning abilities, avoiding the brittleness often introduced by prescriptive datasets. While some flaws emerged – leading the team to reintroduce a limited amount of SFT during the final stages of building the model – the results confirmed the fundamental breakthrough: reinforcement learning alone could drive substantial performance gains.

The company got much of the way using open source – a conventional and unsurprising approach

First, some background on how DeepSeek got to where it did. DeepSeek, a 2023 spin-off from Chinese hedge fund High-Flyer Quant, began by developing AI models for its proprietary chatbot before releasing them for public use. Little is known about the company’s exact approach, but it quickly open-sourced its models, and it’s extremely likely that the company built upon open projects produced by Meta, such as the Llama model and the ML library PyTorch.

To train its models, High-Flyer Quant secured over 10,000 Nvidia GPUs before U.S. export restrictions, and reportedly expanded to 50,000 GPUs through alternative supply routes, despite trade barriers. This pales compared to leading AI labs like OpenAI, Google, and Anthropic, which operate with more than 500,000 GPUs each.

DeepSeek’s ability to achieve competitive results with limited resources highlights how ingenuity and resourcefulness can challenge the high-cost paradigm of training state-of-the-art LLMs.

Despite speculation, DeepSeek’s full budget is unknown

DeepSeek reportedly trained its base model — called V3 — on a $5.58 million budget over two months, according to Nvidia engineer Jim Fan. While the company hasn’t divulged the exact training data it used (side note: critics say this means DeepSeek isn’t truly open-source), modern techniques make training on web and open datasets increasingly accessible. Estimating the total cost of training DeepSeek-R1 is challenging. While running 50,000 GPUs suggests significant expenditures (potentially hundreds of millions of dollars), precise figures remain speculative.
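For readers who want to see where a figure like $5.58 million can come from, here is a rough back-of-the-envelope sketch. The inputs are assumptions taken from DeepSeek’s own V3 technical report rather than from the reporting above: roughly 2.788 million H800 GPU-hours, an assumed rental price of $2 per GPU-hour, and a 2,048-GPU cluster. It covers only the reported V3 training run, not research, failed runs, hardware purchases or the later R1 work.

```python
# Back-of-the-envelope estimate of the reported V3 training cost.
# All three inputs are assumptions drawn from DeepSeek's V3 technical report,
# not figures stated in this article.
GPU_HOURS = 2_788_000        # assumed total H800 GPU-hours for the V3 run
PRICE_PER_GPU_HOUR = 2.00    # assumed rental price in US dollars
CLUSTER_SIZE = 2_048         # assumed number of GPUs used in parallel


def training_cost(gpu_hours: float, price_per_hour: float) -> float:
    """Cost of a run priced purely as rented GPU time."""
    return gpu_hours * price_per_hour


def wall_clock_days(gpu_hours: float, cluster_size: int) -> float:
    """Days needed if every GPU in the cluster runs the whole time."""
    return gpu_hours / cluster_size / 24


cost = training_cost(GPU_HOURS, PRICE_PER_GPU_HOUR)
days = wall_clock_days(GPU_HOURS, CLUSTER_SIZE)

print(f"Estimated cost: ${cost / 1e6:.2f} million")
print(f"Estimated duration: {days:.0f} days")
```

Under these assumptions the arithmetic lines up with the reported budget and the two-month timeframe, which is also why the number says little about DeepSeek’s total spending.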

What’s clear, though, is that DeepSeek has been very innovative from the get-go. Last year, reports emerged about some initial innovations it was making, around things like Mixture of Experts and Multi-Head Latent Attention.

How DeepSeek-R1 got to the “aha moment”

The journey to DeepSeek-R1’s final iteration began with an intermediate model, DeepSeek-R1-Zero, which was trained using pure reinforcement learning. By relying solely on RL, DeepSeek incentivized this model to think independently, rewarding both correct answers and the logical processes used to arrive at them.

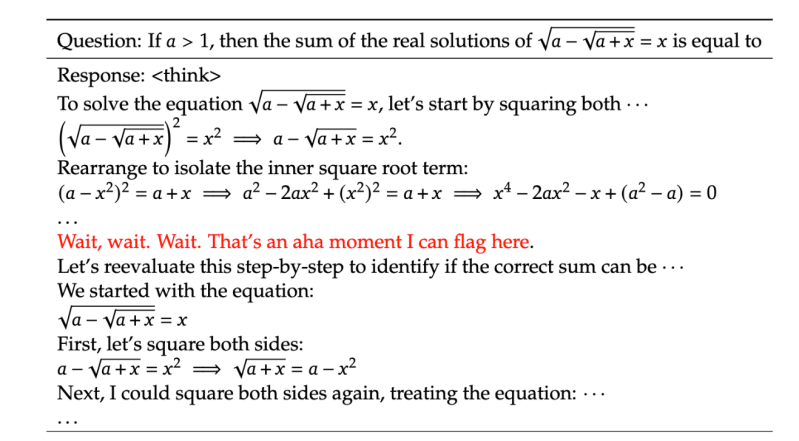

This approach led to an unexpected phenomenon: The model began allocating additional processing time to more complex problems, demonstrating an ability to prioritize tasks based on their difficulty. DeepSeek’s researchers described this as an “aha moment,” where the model itself identified and articulated novel solutions to challenging problems (see screenshot below). This milestone underscored the power of reinforcement learning to unlock advanced reasoning capabilities without relying on traditional training methods like SFT.

The researchers conclude: “It underscores the power and beauty of reinforcement learning: rather than explicitly teaching the model on how to solve a problem, we simply provide it with the right incentives, and it autonomously develops advanced problem-solving strategies.”
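To make the idea of “providing the right incentives” more concrete, here is a minimal sketch of a rule-based reward of the kind an RL setup like this could use. It is an illustration only, not DeepSeek’s code: the `<think>`/`<answer>` tag layout, the exact-match answer check, the function names and the `format_weight` value are all assumptions made for this example, and the reward for the “logical process” is modeled here as nothing more than a check that the output follows the expected reasoning format.

```python
import re

# Assumed output layout for this sketch: reasoning inside <think> tags,
# followed by the final answer inside <answer> tags.
COMPLETION_PATTERN = re.compile(
    r"^<think>(.+?)</think>\s*<answer>(.+?)</answer>$", re.DOTALL
)


def format_reward(completion: str) -> float:
    """Return 1.0 if the completion follows the assumed tag layout, else 0.0."""
    return 1.0 if COMPLETION_PATTERN.match(completion.strip()) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """Return 1.0 if the tagged answer exactly matches the reference, else 0.0."""
    match = COMPLETION_PATTERN.match(completion.strip())
    if match is None:
        return 0.0
    return 1.0 if match.group(2).strip() == reference.strip() else 0.0


def total_reward(completion: str, reference: str, format_weight: float = 0.5) -> float:
    """Combine both signals; the 0.5 weight is an arbitrary illustrative choice."""
    return accuracy_reward(completion, reference) + format_weight * format_reward(completion)


if __name__ == "__main__":
    sample = "<think>2 + 2 equals 4</think><answer>4</answer>"
    print(total_reward(sample, "4"))
```

The point of the sketch is that nothing in the reward tells the model how to reason. It only scores the outcome and the shape of the response, and the RL process is left to discover strategies that earn a higher score.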

More than RL

The model did, however, need more than RL alone. The paper explains that although RL produced unexpected and powerful reasoning behaviors, the intermediate DeepSeek-R1-Zero model faced some challenges, including poor readability and language mixing (starting in Chinese and switching over to English, for example). To address these, the team created a new model, which would become the final DeepSeek-R1. This model, again based on the V3 base model, was first given a limited dose of SFT on a “small amount of long CoT data,” which the paper calls cold-start data. It was then put through the same reinforcement learning process as R1-Zero. Finally, the paper describes how R1 went through some final rounds of fine-tuning.
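The two training recipes described above can be laid out side by side as a small data structure. This is only a summary sketch of the stages as this article describes them: the `Stage` class, the stage names and the `methods` helper are hypothetical, and the final stage is labeled simply “fine-tuning” because its details are not spelled out here.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Stage:
    name: str    # hypothetical label for the stage
    method: str  # "SFT", "RL" or "fine-tuning", as described in the article
    note: str


# R1-Zero: pure reinforcement learning on top of the V3 base model.
R1_ZERO: List[Stage] = [
    Stage("reasoning RL", "RL", "no SFT; trained with RL only"),
]

# R1: cold-start SFT, then the same RL process, then final fine-tuning.
R1: List[Stage] = [
    Stage("cold start", "SFT", "small amount of long CoT data"),
    Stage("reasoning RL", "RL", "same RL process as R1-Zero"),
    Stage("final rounds", "fine-tuning", "details are in the paper"),
]


def methods(pipeline: List[Stage]) -> List[str]:
    """List the training method used at each stage, in order."""
    return [stage.method for stage in pipeline]


print("R1-Zero:", " -> ".join(methods(R1_ZERO)))
print("R1:     ", " -> ".join(methods(R1)))
```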

The ramifications

One question is why there has been so much surprise at the release. It’s not as though open source models are new. They have strong logic and momentum behind them. Their lack of cost and their malleability are why we reported recently that these models are going to win in the enterprise.

Meta’s open-weights model Llama 3, for example, exploded in popularity last year, as it was fine-tuned by developers wanting their own custom models. Similarly, now DeepSeek-R1 is already being used to distill its reasoning into an array of other, much smaller models – the difference being that DeepSeek offers industry-leading performance. This includes running tiny versions of the model on mobile phones, for example.

DeepSeek-R1 not only performs better than the leading open source alternative, Llama 3; it also shows the entire chain of thought behind its answers transparently. Meta’s Llama hasn’t been instructed to do this by default; it takes aggressive prompting to get Llama to do so.

The transparency has also given a PR black eye to OpenAI, which has so far hidden its chains of thought from users, citing competitive reasons and a desire not to confuse users when a model gets something wrong. Transparency allows developers to pinpoint and address errors in a model’s reasoning, streamlining customizations to meet enterprise requirements more effectively.

For enterprise decision-makers, DeepSeek’s success underscores a broader shift in the AI landscape: leaner, more efficient development practices are increasingly viable. Organizations may need to reevaluate their partnerships with proprietary AI providers, considering whether the high costs associated with these services are justified when open-source alternatives can deliver comparable, if not superior, results.

To be sure, no massive lead

While DeepSeek’s innovation is groundbreaking, by no means has it established a commanding market lead. Because it published its research, other model companies will learn from it and adapt. Meta and Mistral, the French open source model company, may be a beat behind, but it will probably only be a few months before they catch up. As Meta’s chief AI scientist Yann LeCun put it: “The idea is that everyone profits from everyone else’s ideas. No one ‘outpaces’ anyone and no country ‘loses’ to another. No one has a monopoly on good ideas. Everyone’s learning from everyone else.” So it’s execution that matters.

Ultimately, it’s the consumers, startups and other users who will win the most, because DeepSeek’s offerings will continue to drive the price of using these models toward zero (aside from the cost of running models at inference). This rapid commoditization could pose challenges – indeed, massive pain – for leading AI providers that have invested heavily in proprietary infrastructure. As many commentators have put it, including Chamath Palihapitiya, an investor and former executive at Meta, this could mean that years of OpEx and CapEx by OpenAI and others will be wasted.

There is substantial commentary about whether it is ethical to use the DeepSeek-R1 model because of the biases instilled in it by Chinese laws, for example that it shouldn’t answer questions about the Chinese government’s brutal crackdown at Tiananmen Square. Despite ethical concerns around biases, many developers view these biases as infrequent edge cases in real-world applications – and they can be mitigated through fine-tuning. Moreover, they point to different, but analogous biases that are held by models from OpenAI and other companies. Meta’s Llama has emerged as a popular open model despite its data sets not being made public, and despite hidden biases, and lawsuits being filed against it as a result.

Questions abound around the ROI of big investments by OpenAI

This all raises big questions about the investment plans pursued by OpenAI, Microsoft and others. OpenAI’s $500 billion Stargate project reflects its commitment to building massive data centers to power its advanced models. Backed by partners like Oracle and Softbank, this strategy is premised on the belief that achieving artificial general intelligence (AGI) requires unprecedented compute resources. However, DeepSeek’s demonstration of a high-performing model at a fraction of the cost challenges the sustainability of this approach, raising doubts about OpenAI’s ability to deliver returns on such a monumental investment.

Entrepreneur and commentator Arnaud Bertrand captured this dynamic, contrasting China’s frugal, decentralized innovation with the U.S. reliance on centralized, resource-intensive infrastructure: “It’s about the world realizing that China has caught up, and in some areas overtaken, the U.S. in tech and innovation, despite efforts to prevent just that.” Indeed, yesterday another Chinese company, ByteDance, announced Doubao-1.5-pro, which includes a “Deep Thinking” mode that surpasses OpenAI’s o1 on the AIME benchmark.

Want to dive deeper into how DeepSeek-R1 is reshaping AI development? Check out our in-depth discussion on YouTube, where I explore this breakthrough with ML developer Sam Witteveen. Together, we break down the technical details, implications for enterprises, and what this means for the future of AI.
