
Prompt AI, a smart home and visual intelligence research and technology company, has launched Seemour, a visual intelligence platform for the home.

Created by leading scientists and pioneers of computer vision, Seemour is designed to understand, describe, and act on what it sees in real time when connected to a home camera.

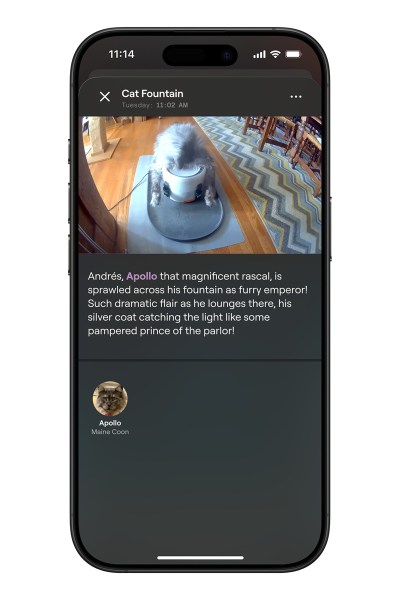

Seemour can summarize moments or hours of video footage, learn the names of family, friends, and pets, and even tell you which delivery service is at your door. It is as if your home could talk, helping you feel safer and more connected to what’s happening around you, said Tete Xiao, CEO of Prompt AI, in an interview with GamesBeat.

Xiao started the company with fellow doctoral candidates and a professor from the University of California at Berkeley. (Go Bears.) They began thinking about the combination of home cameras, which proliferated during the pandemic, and the explosion of AI, and they founded the company in late 2023.

“We started the company to focus on visual intelligence, with streaming cameras as our first product,” Xiao said. “Seemour works with videos from streaming cameras so users don’t actually have to upload [and edit] these videos. They just need to have cameras already installed in their houses, which a lot of them do. And then whenever these cameras have some things going on, Seemour will actually leverage the video streams and understand this information for them.”

How to use Seemour

You can download Seemour today for free from the App Store or visit Seemour.ai for more information.

Seemour’s visual intelligence can reduce unnecessary camera notifications by as much as 70%. It harnesses the power of large vision-language models and machine learning to summarize multiple on-camera events into a single, easy-to-understand update.
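To make the idea of folding several camera events into one update concrete, here is a minimal Python sketch. It is not Prompt AI's method: the article says Seemour relies on large vision-language models, while this illustration only groups events that occur close together in time and joins their text descriptions. The names `CameraEvent`, `batch_events`, `summarize`, and the 60-second gap are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CameraEvent:
    timestamp: float   # seconds since the start of the day (assumed unit)
    description: str   # short text label for what the camera saw


def batch_events(events, max_gap_seconds=60.0):
    """Group events that occur within max_gap_seconds of the previous event."""
    batches = []
    for event in sorted(events, key=lambda e: e.timestamp):
        if batches and event.timestamp - batches[-1][-1].timestamp <= max_gap_seconds:
            batches[-1].append(event)   # close in time: same batch
        else:
            batches.append([event])     # too far apart: start a new batch
    return batches


def summarize(batch):
    """Stand-in for a real summarizer: join the descriptions into one update."""
    return "; ".join(e.description for e in batch)


events = [
    CameraEvent(0.0, "delivery van arrives"),
    CameraEvent(20.0, "person walks to door"),
    CameraEvent(45.0, "package left on porch"),
    CameraEvent(600.0, "cat crosses driveway"),
]
updates = [summarize(b) for b in batch_events(events)]
print(len(events), "events ->", len(updates), "updates")
```

In this toy input, four raw events collapse into two updates. A real system would replace `summarize` with a model that writes a natural-language description.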

This intelligence also allows Seemour to review hours of footage and understand what’s happening.

In an upcoming update, users will be able to ask Seemour questions, saving time and gaining insight.

“Imagine a future where you can ask your home what happened today or inform your roommate that you’ve stepped out to go to the grocery store when they open the fridge,” said Xiao. “That future is closer than you think, and we’re excited to bring it to you.”

Seemour is an app that works with the feeds from home security cameras, and the company is working to support more types of cameras so it can leverage what is already in homes. The first adopters will be those who already use digital home security cameras.

The consumer reaction to tests has been good.

“When they first try Seemour, they’re very excited to actually see how it fundamentally changes their relationships with the cameras. You can be proactive, because Seemour delivers a contact notification within tens of seconds so you can get pretty much what’s going on in real time. You don’t even need to watch videos. You can just get every information.”

The types of cameras range from outdoor-facing cameras, like front-door cameras, to petcams or baby cams inside the house.

“I can actually put the camera next to my cat’s food bowl. I don’t have the time to watch the videos, but now Seemour can watch the videos for me. We can actually tell them what the cat is doing and also piece together what the cat’s day is like.”

If you’re concerned about housemates spying on each other, that’s probably a discussion to have before installing cameras in the first place. As for the privacy of the content, the company said the tech is built with privacy and security at its core.

“Any data we store is protected using industry-leading security protocols, ensuring that your information remains private and under your control. We also use industry-leading authentication services and Google Cloud Platform, which is recognized for its industry-leading security and reliability,” the company said. “And we don’t stop there—unlike other platforms that quietly absorb user data, Seemour keeps you in control. Your data stays yours, and we never use it to train models without your explicit permission. Our goal isn’t just to secure your information—but to ensure you own it.”

Key features include:

● Intelligent Video Summaries. Get easy-to-read summaries of significant events captured by your cameras, so you save time and hassle and never miss a moment.

● Personalized Notifications. Seemour learns and remembers familiar people, allowing you to label them for more personalized notifications.

● Specific Pet Notifications. Teach Seemour the names of all your dogs and/or cats, and Seemour can tell you which pet got into the trash.

● 70% Fewer Alerts. Stay informed without overload. Receive notifications only for the moments that matter, from familiar people and pets to potential intrusions.

● Suspicious Behavior Alerts. Seemour can instantly alert you if it sees unusual or suspicious activity, making your home safer and more secure.

● Delivery Service Announcements. Get instant notifications for who’s at your door, whether it’s FedEx, Amazon, or the mailman.

● Wildlife Detection. Capture and enjoy the beauty of nature with alerts for wildlife like birds, deer, or bears spotted on your property—helping you stay aware and connected to the world outside.

● Seamless Integration. See your camera feeds in one platform, with more integrations coming soon.

● Ask Seemour (coming soon). No need to review hours of footage just to know when something happened. Save time and gain insights by asking Seemour questions like, ‘Where did I leave my keys?’ or, ‘When did my daughter leave home today?’ Seemour reviews your camera footage, offering answers and highlights using naturally spoken language.

● Invisibility (coming soon). Seemour can remove individuals from video footage to protect their privacy.

● Custom Visual Alerts (coming soon). Get alerts when Seemour sees specific things like Fido digging in your backyard, even if it’s never seen that before.

Origins

Prompt AI is a pioneering visual intelligence research and technology company based in San Francisco that aims to create human-centered, innovative, and useful products for everyone.

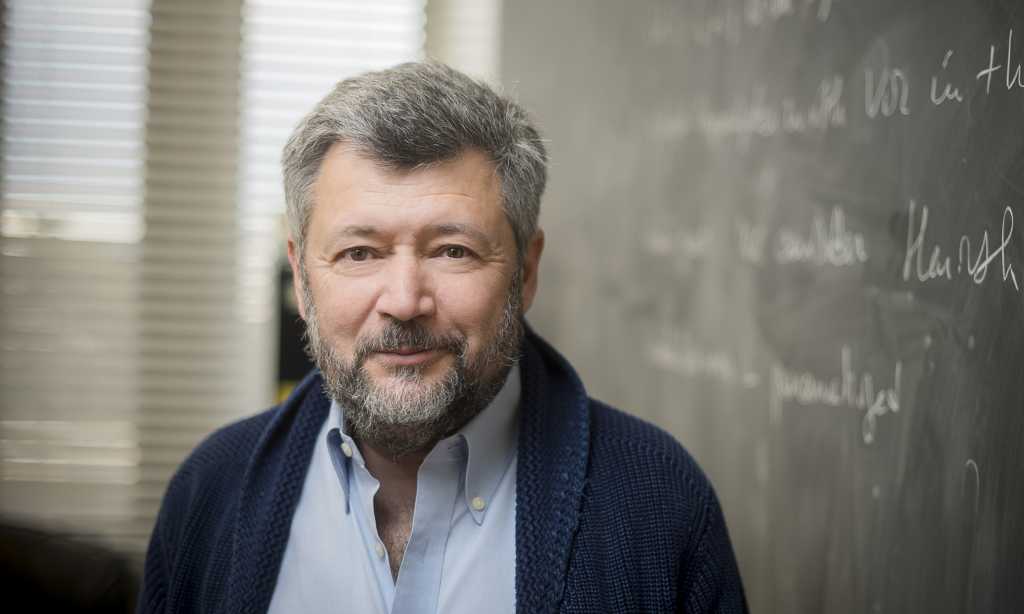

It was cofounded by Xiao and Seth Park, both of whom hold Ph.D.s in computer science from UC Berkeley, and Trevor Darrell, a founder and co-leader of the Berkeley Artificial Intelligence Research (BAIR) lab, who is renowned for his contributions to computer vision and AI research.

Darrell has worked with computer vision for a long time. Xiao has worked in the field for nearly a decade.

“One of the things that I think really fascinating about this technology is the bigger picture vision that it has for all AI. So now what we’re doing is to sort of understand every single video. But the technology can be much more than just that. We’re working on the feature to allow people to ask questions like, ‘How many times have my candy today?’” Xiao said.

The company is working on how to create a summary of a day’s worth of videos from a petcam or something similar. That’s not available yet. Typical videos will be around 30 seconds or longer. One of the goals is to reduce the number of notifications you get in a day from the cameras.

With the market for cameras getting commoditized, better quality cameras with better sensors are available now. Many cameras record in 1080p and some in 2K, while some have night vision. The AI system can pick out a given person or pet, and you can label that person or pet so it is identified in future videos. Gen AI becomes useful in classifying events and describing them.

The idea is to find information on your behalf and summarize it or organize it for you. If your cat is on a counter, maybe Seemour could play a pre-recorded message from you to tell the cat to get off the counter. If a package is delivered by mistake, you could refuse it at the door.

There are big potential competitors such as Amazon and Apple, which have their own smart hubs for the home, but they haven’t entered this space yet. Xiao said his team understands computer vision well and is thinking about agentic features for its visual intelligence products in the future.

“Something we strive for is our reasoning capacity,” Xiao said. “So if three people go into a house and two come out, common sense says that there is still one person there. It’s basic reasoning. I think the technology is ready for that.”
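The arithmetic behind Xiao's example can be written down in a few lines of Python. This is only an illustrative sketch of the counting step, assuming some upstream vision system has already turned the video into a list of "enter" and "exit" crossings. The function name `people_inside` and the event labels are hypothetical and not part of Seemour.

```python
def people_inside(crossings):
    """Return how many people remain inside after a sequence of door crossings.

    crossings: iterable of "enter" / "exit" strings (assumed labels).
    """
    count = 0
    for direction in crossings:
        if direction == "enter":
            count += 1
        elif direction == "exit":
            # Never drop below zero, in case an entry was missed by the camera.
            count = max(0, count - 1)
        else:
            raise ValueError(f"unknown crossing: {direction!r}")
    return count


# Three people go in and two come out, so one person is still inside.
remaining = people_inside(["enter", "enter", "enter", "exit", "exit"])
print(remaining)  # 1
```

The hard part in practice is producing reliable crossings from video. The counting itself is simple bookkeeping.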

The company has raised $6 million in a seed round led by AIX Ventures and Abstract Ventures, with participation from several angel investors. The team has 11 people.
