Humans have always migrated, not only across physical landscapes, but through ways of working and thinking. Every major technological revolution has demanded some kind of migration: From field to factory, from muscle to machine, from analog habits to digital reflexes. These shifts did not simply change what we did for work; they reshaped how we defined ourselves and what we believed made us valuable.

One vivid example of technological displacement comes from the early 20th century. In 1890, more than 13,000 companies in the U.S. built horse-drawn carriages. By 1920, fewer than 100 remained. In the span of a single generation, an entire industry collapsed. As Microsoft’s blog The Day the Horse Lost Its Job recounts, this was not just about transportation; it was about the displacement of millions of workers, the demise of trades, the reorientation of city life and the mass enablement of continental mobility. Technological progress, when it comes, does not ask for permission.

Today, as AI grows more capable, we are entering a time of cognitive migration when humans must move again. This time, however, the displacement is less physical and more mental: Away from tasks machines are rapidly mastering, and toward domains where human creativity, ethical judgment and emotional insight remain essential.

From the Industrial Revolution to the digital office, history is full of migrations triggered by machinery. Each required new skills, new institutions and new narratives about what it means to contribute. Each created new winners and left others behind.

The framing shift: IBM’s “Cognitive Era”

In October 2015 at a Gartner industry conference, IBM CEO Ginni Rometty publicly declared the beginning of what the company called the Cognitive Era. It was more than a clever marketing campaign; it was a redefinition of strategic direction and, arguably, a signal flare to the rest of the tech industry that a new phase of computing had arrived.

Where previous decades had been shaped by programmable systems based on rules written by human software engineers, the Cognitive Era would be defined by systems that could learn, adapt and improve over time. These systems, powered by machine learning (ML) and natural language processing (NLP), would not be explicitly told what to do. They would infer, synthesize and interact.

At the center of this vision was IBM’s Watson, which had already made headlines in 2011 for defeating human champions on Jeopardy! But the real promise of Watson was not about winning quiz shows. Instead, it was helping doctors sort through thousands of clinical trials to suggest treatments, or to assist lawyers analyzing vast corpuses of case law. IBM pitched Watson not as a replacement for experts, but as an amplifier of human intelligence, the first cognitive co-pilot.

This framing change was significant. Unlike earlier tech eras that emphasized automation and efficiency, the Cognitive Era emphasized partnership. IBM spoke of “augmented intelligence” rather than “artificial intelligence,” positioning these new systems as collaborators, not competitors.

But implicit in this vision was something deeper: A recognition that cognitive labor, long the hallmark of the white-collar professional class, was no longer safe from automation. Just as the steam engine displaced physical labor, cognitive computing would begin to encroach on domains once thought exclusively human: language, diagnosis and judgment.

IBM’s declaration was both optimistic and sobering. It imagined a future where humans could do ever more with the help of machines. It also hinted at a future where value would need to migrate once again, this time into domains where machines still struggled — such as meaning-making, emotional resonance and ethical reasoning.

The declaration of a Cognitive Era was seen as significant at the time, yet few then realized its long-term implications. It was, in essence, the formal announcement of the next great migration; one not of bodies, but of minds. It signaled a shift in terrain, and a new journey that would test not just our skills, but our identity.

The first great migration: From field to factory

To understand the great cognitive migration now underway and how it is qualitatively unique in human history, we must first briefly consider the migrations that came before it. From the rise of factories in the Industrial Revolution to the digitization of the modern workplace, every major innovation has demanded a shift in skills, institutions and our assumptions about what it means to contribute.

The Industrial Revolution, beginning in the late 18th century, marked the first great migration of human labor on a mass scale into entirely new ways of working. Steam power, mechanization and the rise of factory systems pulled millions of people from rural agrarian life into crowded, industrializing cities. What had once been local, seasonal and physical labor became regimented, specialized and disciplined, with productivity as the driving force.

This transition did not just change where people worked; it changed who they were. The village blacksmith or cobbler moved to new roles and became cogs in a vast industrial machine. Time clocks, shift work and the logic of efficiency began to redefine human contribution. Entire generations had to learn new skills, embrace new routines and accept new hierarchies. It was not just labor that migrated, it was identity.

Just as importantly, institutions had to migrate too. Public education systems expanded to produce a literate industrial workforce. Governments adapted labor laws to new economic conditions. Unions emerged. Cities grew rapidly, often without infrastructure to match. It was messy, uneven and traumatic. It also marked the beginning of a modern world shaped by — and increasingly for — machines.

This migration created a repeated pattern: Modern technology displaces, and people and society need to adapt. This adaptation could happen gradually — or sometimes violently — until eventually, a new equilibrium emerged. But every wave has asked more of us. The Industrial Revolution required our bodies. The next would require our minds.

If the Industrial Revolution demanded our bodies, the Digital Revolution demanded new minds. Beginning in the mid-20th century and accelerating through the 1980s and ’90s, computing technologies transformed human work once again. This time, repetitive mechanical tasks were increasingly replaced with information processing and symbolic manipulation.

In what is sometimes called the Information Age, clerks became data analysts and designers became digital architects. Administrators, engineers and even artists began working with pixels and code instead of paper and pen. Work moved from the factory floor to the office tower, and eventually to the screen in our pocket. Knowledge work became not just dominant, but aspirational. The computer and the spreadsheet became the picks and shovels of a new economic order.

I saw this first-hand early in my career when working as a software engineer at Hewlett Packard. Several newly-minted MBA graduates arrived with HP-branded Vectra PCs and Lotus 1-2-3 spreadsheet software. It was seemingly at that moment when data analysts began proffering cost-benefit analyses, transforming enterprise operational efficiency.

This migration was less visibly traumatic than the one from farm to factory, but no less significant. It redefined productivity in cognitive terms: memory, organization, abstraction. It also brought new forms of inequality between those who could master digital systems and those who were left behind. And, once again, institutions scrambled to keep pace. Schools retooled for “21st-century skills.” Companies reorganized information flows using techniques like “business process reengineering.” Identity shifted again too, this time from laborer to knowledge worker.

Now, midway through the third decade of the 21st century, even knowledge work is becoming automated, and white-collar workers can feel the climate shifting. The next migration has already begun.

The most profound migration yet

We have migrated our labor across fields, factories and fiber optics. Each time, we have adapted. This has often been uneven and sometimes painful, but we have transitioned to a new normalcy, a new equilibrium. However, the cognitive migration now underway is unlike those before it. It does not just change how we work; it challenges what we have long believed makes us irreplaceable: Our rational mind.

As AI grows more capable, we must shift once more. Not toward harder skills, but toward deeper ones that remain human strengths, including creativity, ethics, empathy, meaning and even spirituality. This is the most profound migration yet because this time, it is not just about surviving the shift. It is about discovering who we are beyond what we produce and understanding the true nature of our value.

Accelerating change, compressed adaptation

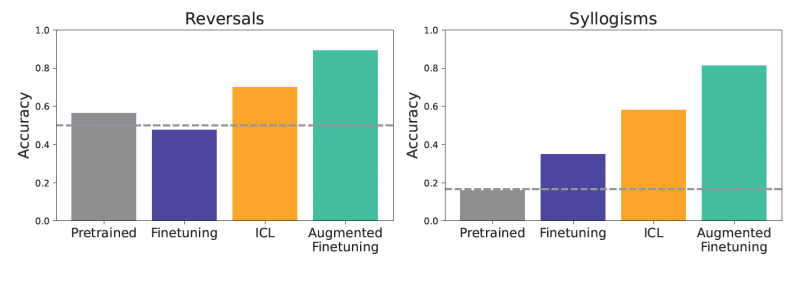

The timeline for each technological migration has also accelerated dramatically. The Industrial Revolution unfolded over a century, allowing generational adaptation. The Digital Revolution compressed that timeline into a few decades. Some workers began their careers with paper files and retired managing cloud databases. Now, the next migration is occurring in mere years. For example, large language models (LLMs) went from academic projects to workplace tools in less than five years.

William Bridges noted in the 2003 revision of “Managing Transitions”: “It is the acceleration of the pace of change in the past several decades that we are having trouble assimilating and that throws us into transition.” The pace of change is far faster now than it was in 2003, which makes this even more urgent.

This acceleration is reflected not only in AI software but also in the underlying hardware. In the Digital Revolution, the predominant computing element was the CPU that executed instructions serially based on rules coded explicitly by a software engineer. Now, the dominant computing element is the GPU, which executes instructions in parallel and runs models that learn from data rather than from explicitly coded rules. The parallel execution of tasks provides an implicit acceleration of computing. It is no coincidence that Nvidia, the leading developer of GPUs, refers to this as “accelerated computing.”

The existential migration

Transitions that once evolved across generations are now occurring within a single career, or even a single decade. This particular shift demands not just new skills, but a fundamental reassessment of what makes us human. Unlike previous technological shifts, we cannot simply learn new tools or adopt new routines. We must migrate to terrain where our uniquely human qualities of creativity, ethical judgment and meaning-making become our defining strengths. The challenge before us is not merely technological adaptation but existential redefinition.

As AI systems master what we once thought of as uniquely human tasks, we find ourselves on an accelerated journey to discover what truly lies beyond automation: The essence of being human in an age where intelligence alone is no longer our exclusive domain.
