A year ago, capacity prices spiked in the PJM Interconnection. Last month they hit another record high of nearly $330/MW-day.

Companies including Exelon, FirstEnergy and PPL Corp., which have utilities in states that bar them from owning generation, have been pressing for state legislation that would lift that restriction. They contend that the jump in PJM capacity prices is increasing customer bills but failing to spur independent power producers to build power plants.

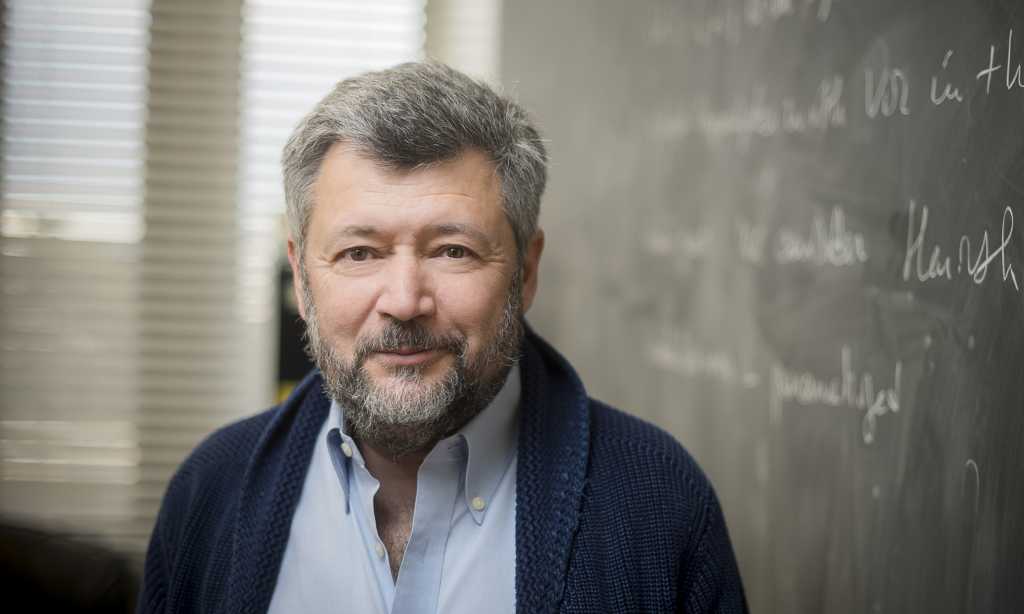

To get a nonutility perspective, on Monday Utility Dive talked with Todd Snitchler, president and CEO of the Electric Power Supply Association, a trade group for independent power producers, about how power providers are moving to bring electricity supplies to PJM, what’s behind rising electric bills and the challenges all companies face in building power plants.

EPSA members own and operate about 175,000 MW of capacity in U.S. regions with access to competitive wholesale electricity markets. Members include Invenergy, LS Power, NRG Energy, Talen Energy, Tenaska and Vistra.

This interview has been edited for length and clarity.

UTILITY DIVE: Have we seen a response by IPPs to increased capacity prices in PJM?

TODD SNITCHLER: I think you are seeing the IPP, or competitive generator, sector respond to price signals, but not exclusively to that.

You saw a significant response to the Reliability Resource Initiative process at PJM, which tried to accelerate projects to the front of the line that were ready to go or could be constructed and operational by 2030. Ninety-four projects were submitted and 51 selected. It was close to 10,000 MW that is supposed to come online by 2030.

And that was just a part of how independent producers tried to respond, before the second price signal was even sent in the July auction, which I think we would all agree continued to send the signal for new investment.

One of the challenges that we’re seeing is this compressed auction schedule. There hasn’t been an auction that’s been on time in several years now. So you’ve got these six-month increments, which really make it difficult for generators or investors to make a decision that says, “Okay, let’s move this project from development into ‘we’re going to move forward’ in that short of a time horizon.”

Normally, these auctions are spaced a year apart — it gives people an opportunity to respond.

Even so, you’re seeing people make affirmative decisions about investments and delay retirements, and projects that were not moving forward are now back on the drawing board. You see things like the Crane Clean Energy Center, formerly Three Mile Island … you are seeing that come back. So I think there are all kinds of indicators that show that the market is responding to the signals that are being sent.

Would it be helpful if we could move faster? Sure, it would be. Is that always possible? Honestly, no. We’ve got issues outside of queue reform, which everyone likes to blame, and which I think is kind of behind us, if you look at the statistics.

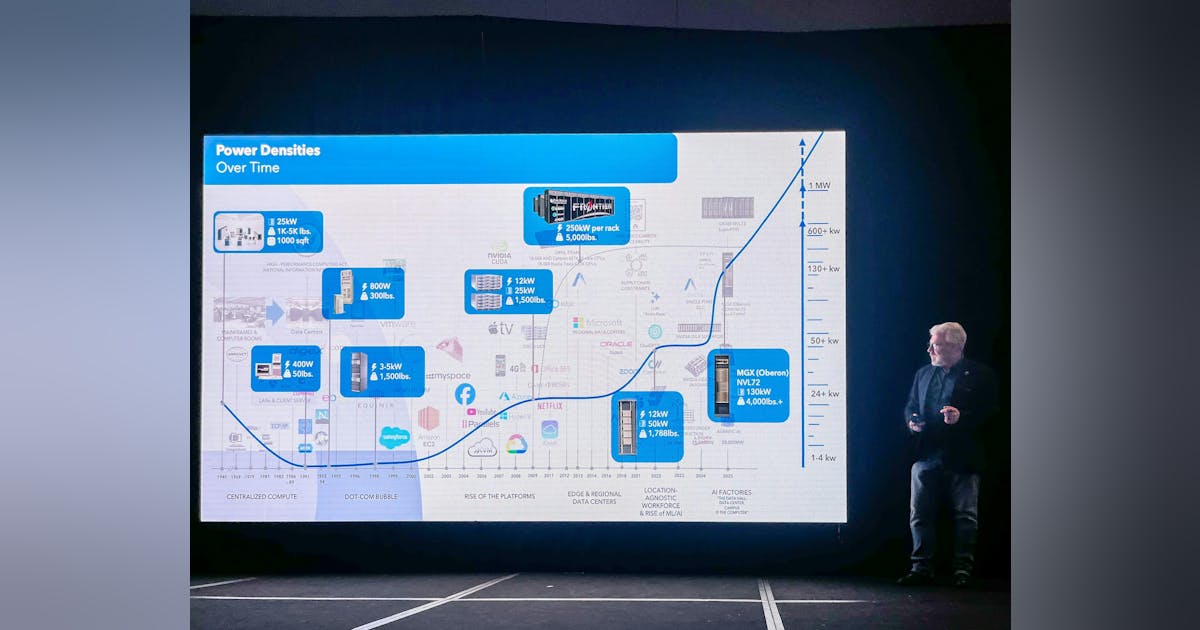

But there are supply chain issues that affect everybody, whether you’re a vertically integrated utility, or you’re a competitive power generator, or you’re a rural co-op or public power. We’re all subject to the same issues with regard to supply chain.

And there’s a workforce issue that we’re going to have to address because, assuming we all agree … that we’re going to need to build a whole lot of new megawatts in the coming near-term time horizon, everybody’s competing for the workforce that’s skilled and able to construct those facilities.

I hear some of our utility critics say, “Well, if you just let us do it, we’d have this problem solved.” Well, I’d like to know how you’re able to skip ahead and have your equipment, whether it’s switchgears or transformers or turbines. We’re all competing for the same equipment on the same time horizon, so it’s a little bit difficult to understand how they could do it so much faster, so much cheaper, when that’s historically never been the case.

If somebody were to order a turbine today, how long would it take to get it?

It varies, but conventional wisdom right now is it’s probably five years, maybe six. We’ve seen some discussion about how we are going to address additional manufacturing capability, and I think we’ll see that. But you don’t build a new factory overnight, and you certainly don’t turn out these pieces of equipment in a week or two.

What about getting fuel supply for gas-fired power plants? I’ve heard pipelines are very tight. Storage is tight.

It varies by region. So if you’re New England, you’ve got a different set of issues than if you’re in central Pennsylvania or Ohio, where you’re sitting on top of the gas, where it’s being produced, and it’s much easier to move around.

We are going to need to see some additional pipeline capacity that’s going to take gas from where it’s being produced into other parts of the country where they are bottlenecked, whether that’s the Southeast or the Northeast.

What about the cost of building a new gas-fired power plant? I’m seeing roughly $2,200 to $2,500 per kilowatt.

That range sounds pretty close to what we have heard, which is much more than it was three or five years ago. From our members’ perspective, that’s a cost that they bear; it’s not anything that’s put on the backs of ratepayers. In a vertically integrated environment, in contrast, that $2.2 billion to $2.5 billion for 1,000 megawatts has to be paid by someone, and that’s the captive utility customer with a non-bypassable charge on the bill. And so that’s a direct pass-through to customers, plus the rate of return that the utility gets, whereas if our members build it, they’ve got to make sure that they build it as inexpensively but as reliably as they can so they can operate, and that has the effect of driving down costs for consumers.
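
As a rough check on the arithmetic in that answer, the per-kilowatt range from the question can be scaled to a 1,000 MW plant. This is only an illustrative sketch: the function name `plant_cost_usd` is hypothetical, and it assumes the quoted $/kW figure applies uniformly across the whole plant, ignoring financing, interconnection and other project costs.

```python
KW_PER_MW = 1_000  # 1 megawatt = 1,000 kilowatts


def plant_cost_usd(cost_per_kw: float, capacity_mw: float) -> float:
    """Scale a $/kW construction cost to a total plant cost in dollars.

    Hypothetical helper for illustration; assumes the per-kW cost
    applies evenly to every kilowatt of capacity.
    """
    return cost_per_kw * capacity_mw * KW_PER_MW


# Range cited in the interview question: $2,200 to $2,500 per kW
low = plant_cost_usd(2_200, 1_000)
high = plant_cost_usd(2_500, 1_000)

print(f"${low / 1e9:.1f} billion to ${high / 1e9:.1f} billion")
```

Under those assumptions the result matches the $2.2 billion to $2.5 billion figure Snitchler cites for 1,000 megawatts.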

How are your members navigating this new import tariff landscape?

We have some members that are in the process of trying to get equipment into the United States and are not sure what the price will be when it hits the shore, based on the tariff rate with the countries that it may be coming from. For one member, the price was potentially 40% higher. So if you’ve got a $100 million piece of equipment, and the price is either $100 million or $140 million, that’s meaningful dollars. That is an area where our members are hoping that we can get some greater degree of certainty, because if we’re going to achieve the administration’s objective of beating China at AI, we’re going to need more energy, and that energy is going to be produced with the same turbines that potentially are exposed to some of the tariff-related issues. There is some disruptive effect to that, but I think the administration is aware of it.

How is EPSA thinking about the issue of affordability?

We are one of the best ways for people to mitigate those costs by avoiding non-bypassable charges and letting us be the ones who put the risk on shareholders and investors for the generation portion of your bill.

Transmission and distribution charges are up substantially over the last 10 years, 15 years, and those costs are real and have translated into increased costs on consumers’ bills. So I find it a little disingenuous for some of the utilities that have had the transmission and distribution charges go up substantially over that period of time to say that this one capacity auction and now a second capacity auction is what’s driving up customer bills, and that’s why they should be allowed back in the [generation] game, because if you look at it in raw data numbers, the facts just don’t support their thesis.

In fact, the generation portion is flat or declining, and it has been that way as a result of fuel switching, improved efficiency and new resources that came onto the system. But the most recent auction results are, I think, a useful tool for those people who are trying to push responsibility for price increases off of their portion of the bill and onto someone else.

Do you have concerns about politicians feeling the pressure around affordability and asking themselves if restructuring is getting us more needed generation?

We’re always mindful of the need for people to do something. Sometimes that means doing something that’s not necessarily good policy. We’re trying to educate policymakers and legislators about what markets are actually doing, what they have actually delivered.

I’m still hard-pressed to find an example where a vertically integrated utility has delivered a new generating project on time and under budget and had any accountability in the way that wholesale power generators do.

Some people have been pointing to the consolidation in the power sector as a sign that the markets aren’t working. In PJM, people are preferring to just buy existing gas-fired power plants. What’s behind the consolidation?

Our members all have different perspectives that they are operating under. Some are typically more operator-driven and others are more development-driven. Those that are in the development space are working on a number of projects in PJM as we speak, so I would urge a little caution from those who are suggesting that there’s no new development in gas.

Some companies are trying to acquire resources, to build their portfolio, because those entities have a different investment thesis, which is, “We’re operators, not developers.”