NVIDIA’s AI Factory: Engineering the Future of Compute

NVIDIA’s keynote illuminated the invisible but indispensable heart of the AI revolution: the AI factory. This factory blends hardware and software innovation to achieve performance breakthroughs that transcend traditional limits. Technologies such as disaggregated rack-scale GPUs and the novel 4-bit floating point numerical representation move beyond incremental improvements; they redefine what is achievable in energy efficiency and cost-effectiveness.

The software ecosystem NVIDIA fosters, including open-source frameworks like Dynamo, enables unprecedented flexibility in managing inference workloads across thousands of GPUs. This adaptability is crucial given the diverse, dynamic demands of modern AI, where workloads can fluctuate dramatically in scale and complexity. Repeated leaps in benchmark performance, often quadrupling what the hardware delivers through software alone, reinforce NVIDIA’s accelerated innovation cycle.

NVIDIA’s framing of AI factories as both technology platforms and business enablers highlights an important shift. The value lies not merely in processing raw data but in generating economic value by optimizing speed, reducing costs, and creating new AI services. This paradigm is central to understanding how the new industrial revolution will operate: through highly efficient AI factories that unite production and innovation.

AWS and the Cloud’s Role in Democratizing AI Power

Amazon Web Services (AWS) represents a key pillar in making AI capabilities accessible across the innovation spectrum. AWS’ focus on security and fault tolerance reflects maturity in cloud design, ensuring trust and resilience are priorities alongside raw compute power. The evolution towards AI agents capable of specification-driven operations signifies a move beyond traditional computing paradigms towards contextual, autonomous AI services embedded deeply in workflows.

AWS’s launch of EC2 P6-B200 instances with next-generation Blackwell GPUs, together with its specialized Trainium chips, reflects a continuing drive to optimize AI training and inference at scale, with a cited out-of-the-box improvement of an 85% reduction in training time. The P6E-B200 offering extends this GPU lineup further. These advances shorten training, improve performance per watt, and enable diverse customers, from startups to enterprises, to benefit from world-class AI infrastructure without massive capital expenditures.

AWS shared the example of Perplexity, which achieved a 40% reduction in AI model training time using AWS SageMaker HyperPod. The example encapsulates the tangible business benefits of cloud innovations. By managing complexity behind the scenes and helping customers automate key training steps, AWS lowers barriers, speeds time to market, and broadens AI’s impact across industries.

Breaking Barriers Beyond Compute

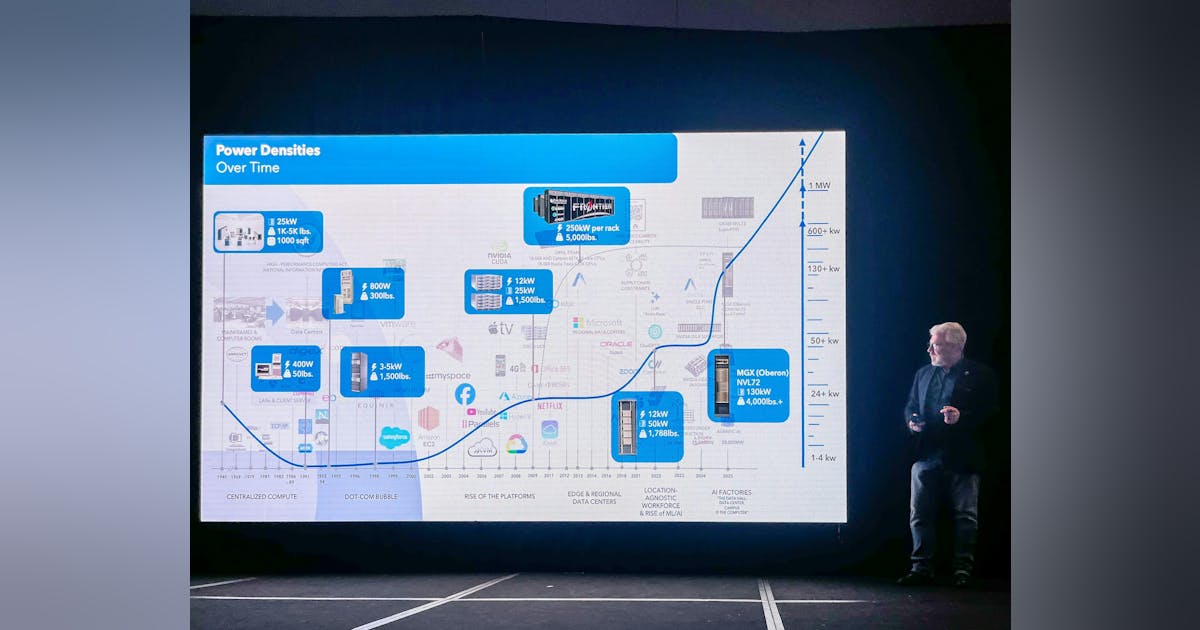

These key players are paving a path for the entire industry, one in which innovation in AI infrastructure goes far beyond faster chips. A major theme at the summit was breakthroughs in memory technology and chip design that address longstanding bottlenecks. Kove’s work on memory virtualization represents a leap towards breaking free from the physical confines of traditional DRAM, enabling far larger and more flexible AI models than were previously possible.

Meanwhile, Siemens demonstrated how AI-driven electronic design automation is redefining semiconductor and system design processes. The semiconductor market, projected to reach a trillion dollars by 2030, will be shaped by how quickly chip manufacturers can harness AI to accelerate design checks, optimize components, and keep pace with ever-increasing model complexity.

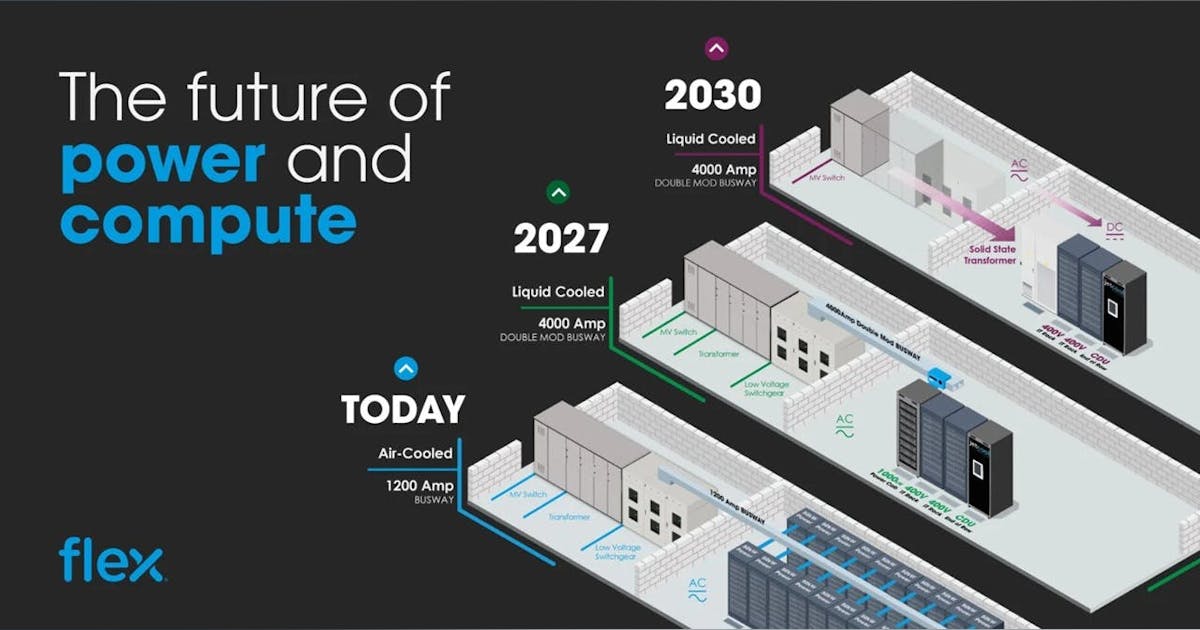

Together, these advances suggest a future where AI hardware infrastructure is not merely bigger but also smarter and more adaptable. This is critical as data centers evolve into living ecosystems that continuously reconfigure to meet changing workload demands and sustainability goals.

Neoclouds and Ecosystem Synergies: Accelerating Growth Through Collaboration

The rise of neocloud providers signals an AI infrastructure market that is still maturing and diversifying. These nimble, specialized operators complement hyperscalers by serving niche enterprise segments and experimenting with innovative business and financing models. Their agility accelerates infrastructure availability and expands overall market capacity, helping ease supply bottlenecks.

However, as we’ve covered in previous articles, this market will shake out over the next few years, and many neoclouds will not survive the capital demands and customer acquisition hurdles of a CapEx-heavy, speed-to-ROI business. Financing remains a critical frontier, with neoclouds relying on risk-sharing mechanisms such as leased real estate and hardware, parent guarantees, and collateralized GPU investments to compete. Companies like CoreWeave have demonstrated how aggressive financing and strategic acquisitions can rapidly transform a market profile with new capital and scale.

This ecosystem synergy fosters competition that drives down costs, accelerates delivery timelines, and spurs innovation. The industry is transitioning from one dominated by a few hyperscalers to a vibrant landscape where varied players co-create solutions, amplifying AI’s growth potential.