Join our daily and weekly newsletters for the latest updates and exclusive content on industry-leading AI coverage. Learn More

CISOs know precisely where their AI nightmare unfolds fastest. It’s inference, the vulnerable stage where live models meet real-world data, leaving enterprises exposed to prompt injection, data leaks, and model jailbreaks.

Databricks Ventures and Noma Security are confronting these inference-stage threats head-on. Backed by a fresh $32 million Series A round led by Ballistic Ventures and Glilot Capital, with strong support from Databricks Ventures, the partnership aims to address the critical security gaps that have hindered enterprise AI deployments.

“The number one reason enterprises hesitate to deploy AI at scale fully is security,” said Niv Braun, CEO of Noma Security, in an exclusive interview with VentureBeat. “With Databricks, we’re embedding real-time threat analytics, advanced inference-layer protections, and proactive AI red teaming directly into enterprise workflows. Our joint approach enables organizations to accelerate their AI ambitions safely and confidently finally,” Braun said.

Securing AI inference demands real-time analytics and runtime defense, Gartner finds

Traditional cybersecurity prioritizes perimeter defenses, leaving AI inference vulnerabilities dangerously overlooked. Andrew Ferguson, Vice President at Databricks Ventures, highlighted this critical security gap in an exclusive interview with VentureBeat, emphasizing customer urgency regarding inference-layer security. “Our customers clearly indicated that securing AI inference in real-time is crucial, and Noma uniquely delivers that capability,” Ferguson said. “Noma directly addresses the inference security gap with continuous monitoring and precise runtime controls.”

Braun expanded on this critical need. “We built our runtime protection specifically for increasingly complex AI interactions,” Braun explained. “Real-time threat analytics at the inference stage ensure enterprises maintain robust runtime defenses, minimizing unauthorized data exposure and adversarial model manipulation.”

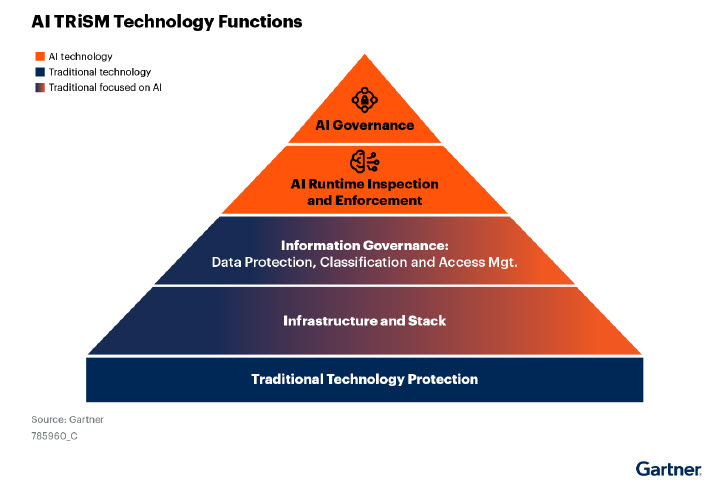

Gartner’s recent analysis confirms that enterprise demand for advanced AI Trust, Risk, and Security Management (TRiSM) capabilities is surging. Gartner predicts that through 2026, over 80% of unauthorized AI incidents will result from internal misuse rather than external threats, reinforcing the urgency for integrated governance and real-time AI security.

Gartner’s AI TRiSM framework illustrates comprehensive security layers essential for managing enterprise AI risk effectively. (Source: Gartner)

Noma’s proactive red teaming aims to ensure AI integrity from the outset

Noma’s proactive red teaming approach is strategically central to identifying vulnerabilities long before AI models reach production, Braun told VentureBeat. By simulating sophisticated adversarial attacks during pre-production testing, Noma exposes and addresses risks early, significantly enhancing the robustness of runtime protection.

During his interview with VentureBeat, Braun elaborated on the strategic value of proactive red teaming: “Red teaming is essential. We proactively uncover vulnerabilities pre-production, ensuring AI integrity from day one.”

“Reducing time to production without compromising security requires avoiding over-engineering. We design testing methodologies that directly inform runtime protections, helping enterprises move securely and efficiently from testing to deployment”, Braun advised.

Braun elaborated further on the complexity of modern AI interactions and the depth required in proactive red teaming methods. He stressed that this process must evolve alongside increasingly sophisticated AI models, particularly those of the generative type: “Our runtime protection was specifically built to handle increasingly complex AI interactions,” Braun explained. “Each detector we employ integrates multiple security layers, including advanced NLP models and language-modeling capabilities, ensuring we provide comprehensive security at every inference step.”

The red team exercises not only validate the models but also strengthen enterprise confidence in deploying advanced AI systems safely at scale, directly aligning with the expectations of leading enterprise Chief Information Security Officers (CISOs).

How Databricks and Noma Block Critical AI Inference Threats

Securing AI inference from emerging threats has become a top priority for CISOs as enterprises scale their AI model pipelines. “The number one reason enterprises hesitate to deploy AI at scale fully is security,” emphasized Braun. Ferguson echoed this urgency, noting, “Our customers have clearly indicated securing AI inference in real-time is critical, and Noma uniquely delivers on that need.”

Together, Databricks and Noma offer integrated, real-time protection against sophisticated threats, including prompt injection, data leaks, and model jailbreaks, while aligning closely with standards such as Databricks’ DASF 2.0 and OWASP guidelines for robust governance and compliance.

The table below summarizes key AI inference threats and how the Databricks-Noma partnership mitigates them:

| Threat Vector | Description | Potential Impact | Noma-Databricks Mitigation |

| Prompt Injection | Malicious inputs are overriding model instructions. | Unauthorized data exposure and harmful content generation. | Prompt scanning with multilayered detectors (Noma); Input validation via DASF 2.0 (Databricks). |

| Sensitive Data Leakage | Accidental exposure of confidential data. | Compliance breaches, loss of intellectual property. | Real-time sensitive data detection and masking (Noma); Unity Catalog governance and encryption (Databricks). |

| Model Jailbreaking | Bypassing embedded safety mechanisms in AI models. | Generation of inappropriate or malicious outputs. | Runtime jailbreak detection and enforcement (Noma); MLflow model governance (Databricks). |

| Agent Tool Exploitation | Misuse of integrated AI agent functionalities. | Unauthorized system access and privilege escalation. | Real-time monitoring of agent interactions (Noma); Controlled deployment environments (Databricks). |

| Agent Memory Poisoning | Injection of false data into persistent agent memory. | Compromised decision-making, misinformation. | AI-SPM integrity checks and memory security (Noma); Delta Lake data versioning (Databricks). |

| Indirect Prompt Injection | Embedding malicious instructions in trusted inputs. | Agent hijacking, unauthorized task execution. | Real-time input scanning for malicious patterns (Noma); Secure data ingestion pipelines (Databricks). |

How Databricks Lakehouse architecture supports AI governance and security

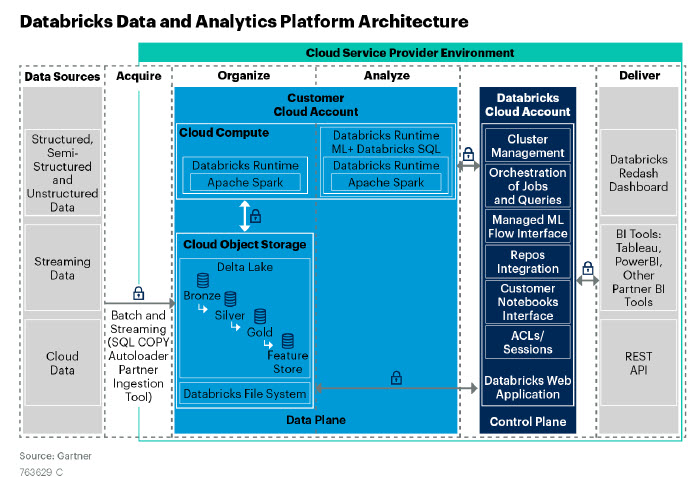

Databricks’ Lakehouse architecture combines the structured governance capabilities of traditional data warehouses with the scalability of data lakes, centralizing analytics, machine learning, and AI workloads within a single, governed environment.

By embedding governance directly into the data lifecycle, Lakehouse architecture addresses compliance and security risks, particularly during the inference and runtime stages, aligning closely with industry frameworks such as OWASP and MITRE ATLAS.

During our interview, Braun highlighted the platform’s alignment with the stringent regulatory demands he’s seeing in sales cycles and with existing customers. “We automatically map our security controls onto widely adopted frameworks like OWASP and MITRE ATLAS. This allows our customers to confidently comply with critical regulations such as the EU AI Act and ISO 42001. Governance isn’t just about checking boxes. It’s about embedding transparency and compliance directly into operational workflows”.

Databricks Lakehouse integrates governance and analytics to securely manage AI workloads. (Source: Gartner)

How Databricks and Noma plan to secure enterprise AI at scale

Enterprise AI adoption is accelerating, but as deployments expand, so do security risks, especially at the model inference stage.

The partnership between Databricks and Noma Security addresses this directly by providing integrated governance and real-time threat detection, with a focus on securing AI workflows from development through production.

Ferguson explained the rationale behind this combined approach clearly: “Enterprise AI requires comprehensive security at every stage, especially at runtime. Our partnership with Noma integrates proactive threat analytics directly into AI operations, giving enterprises the security coverage they need to scale their AI deployments confidently”.

Daily insights on business use cases with VB Daily

If you want to impress your boss, VB Daily has you covered. We give you the inside scoop on what companies are doing with generative AI, from regulatory shifts to practical deployments, so you can share insights for maximum ROI.

Read our Privacy Policy

Thanks for subscribing. Check out more VB newsletters here.

An error occured.