Google Unveils Groundbreaking AI Partnership with PJM and Tapestry to Reinvent the U.S. Power Grid

In a move that underscores the growing intersection between digital infrastructure and energy resilience, Google has announced a major new initiative to modernize the U.S. electric grid using artificial intelligence. The company is partnering with PJM Interconnection—the largest grid operator in North America—and Tapestry, an Alphabet moonshot backed by Google Cloud and DeepMind, to develop AI tools aimed at transforming how new power sources are brought online.

The initiative, detailed in a blog post by Alphabet and Google President Ruth Porat, represents one of Google’s most ambitious energy collaborations to date. It seeks to address mounting challenges facing grid operators, particularly the explosive backlog of energy generation projects that await interconnection in a power system unprepared for 21st-century demands.

“This is our biggest step yet to use AI for building a stronger, more resilient electricity system,” Porat wrote.

Tapping AI to Tackle an Interconnection Crisis

The timing is critical: the U.S. grid is at a historic inflection point. According to the Lawrence Berkeley National Laboratory, nearly 2,600 gigawatts (GW) of generation and storage projects were waiting in interconnection queues at the end of 2023, more than double the total installed capacity of the entire U.S. grid.

Meanwhile, the Federal Energy Regulatory Commission (FERC) has revised its five-year demand forecast, now projecting U.S. peak load to rise by 128 GW before 2030—more than triple the previous estimate.

Grid operators like PJM are straining to process a surge in interconnection requests, which have skyrocketed from a few dozen to thousands annually. This wave of applications has exposed the limits of legacy systems and planning tools. Enter AI.

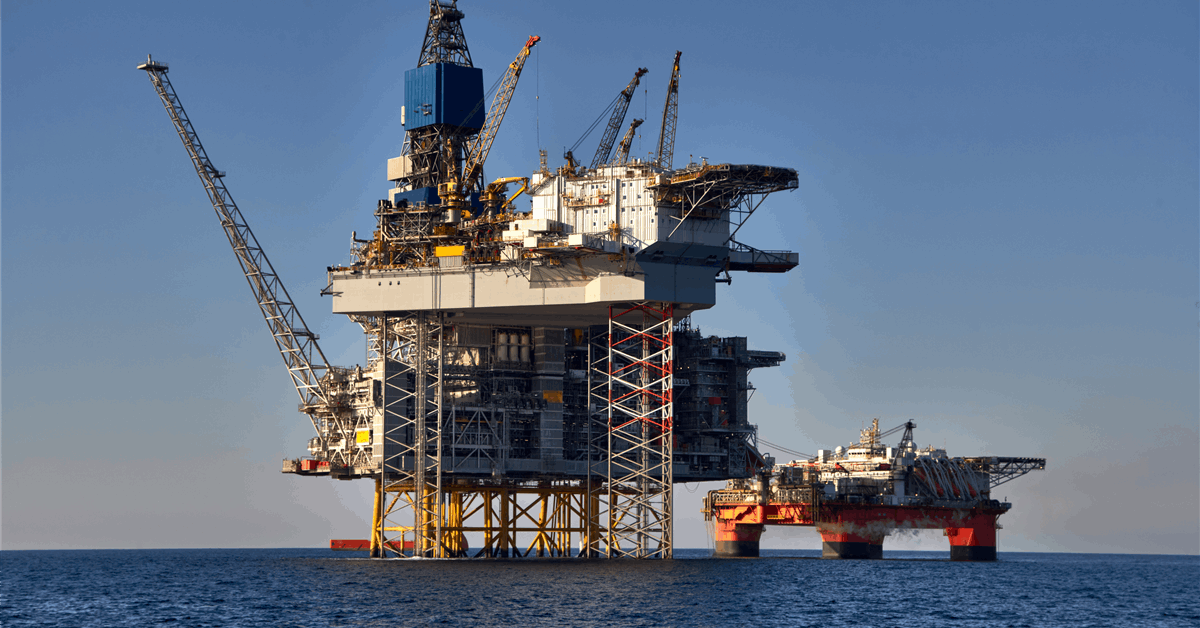

Tapestry’s role is to develop and deploy AI models that can intelligently manage and streamline the complex process of interconnecting power sources—renewables, storage, and conventional generation—across PJM’s vast network, which spans 13 states and the District of Columbia, serving 67 million people.

A Unified, AI-Powered Grid Management Platform

The partnership’s multi-year roadmap aims to cut the interconnection approval process from years to months. Key pillars of the effort include:

- Accelerating capacity additions: By automating time-intensive verification and modeling processes, AI tools from Tapestry will help PJM quickly assess and approve new energy projects. This could significantly reduce the development cycle for grid-connected power, addressing bottlenecks that have plagued renewable developers in particular.

- Driving cost-effective grid expansion: Tapestry will integrate disparate databases and modeling tools into a single secure platform. The goal is to create a unified model of PJM’s network where grid planners and developers can collaborate seamlessly, boosting transparency and planning agility.

- Integrating diverse energy resources: With variable renewables such as solar and wind comprising a large share of PJM’s queue, Tapestry’s AI solutions aim to enable more precise modeling and faster incorporation of these intermittent resources into the grid mix.

Strategic Implications for Data Centers and the AI Economy

For the data center industry—especially as AI workloads dramatically reshape infrastructure demand—Google’s announcement is more than a technical achievement. It’s a signal that the rules of engagement for grid interaction are changing. As hyperscalers seek not only to power their operations sustainably but also to help shape the energy systems around them, partnerships like this one may become a template.

Google is also backing complementary technologies such as advanced nuclear and enhanced geothermal, with the long-term goal of unlocking new, firm capacity. These efforts align with the industry’s growing push for direct grid participation and innovative procurement strategies to manage skyrocketing power needs.

As Porat noted, “Creative solutions from across the private and public sectors are crucial to ensure the U.S. has the energy capacity, affordability, and reliability needed to capitalize on the opportunity for growth.”

Backing PJM’s Long-Term Planning Reforms

This collaboration arrives at a critical juncture for PJM, which is already deep into a multi-year effort to reform its planning and interconnection processes. The AI-powered partnership with Google and Tapestry is designed to complement and accelerate this work—especially as PJM processes the final 67 GW of projects remaining in its interconnection transition phase, part of a broader 200 GW backlog.

“Innovation will be critical to meeting the demands on the future grid, and we’re leveraging some of the world’s best capabilities with these cutting-edge tools,” said Aftab Khan, Executive Vice President of Operations, Planning & Security at PJM. “PJM is committed to bringing new generation onto the system as quickly and reliably as possible.”

PJM plans to launch a new cycle-based process for interconnection applications in early 2026, and the AI partnership is expected to play a foundational role in that effort. As part of its broader grid modernization push, PJM has also rolled out the Reliability Resource Initiative, aimed at expediting selected projects within its current queue.

Tapestry General Manager Page Crahan described the effort as one that “will enable PJM to make faster decisions with greater confidence, making more energy capacity available to interconnect in shorter time frames.” For Google, this isn’t just about grid optimization; it’s a strategic necessity for a digital economy whose energy appetite is growing rapidly.

“This initiative brings together our most advanced technologies to help solve one of the greatest challenges of the AI era—evolving our electricity systems to meet this moment,” said Amanda Peterson Corio, Head of Data Center Energy for Google.

Looking Ahead: A Blueprint for Grid Innovation

Google’s collaboration with PJM and Tapestry represents more than a software upgrade. It’s an architectural rethinking of how intelligence and infrastructure must co-evolve. At the heart of this shift is the belief that AI isn’t just a driver of data center demand—it may also be the key to making that demand sustainable.

By aligning cutting-edge AI innovation with PJM’s operational depth and Tapestry’s moonshot ambition, this partnership lays the groundwork for something much larger: a national model for grid modernization. It represents a fusion of deep tech, institutional coordination, and real-world urgency—the very factors that will define the power landscape of the AI era.

For the data center industry and beyond, it’s a clear signal that the future grid won’t just be bigger; it will need to be smarter, faster, and more adaptive to the surging complexity of energy demand. For data center operators, energy developers, and policymakers, the initiative offers a compelling glimpse of what that grid could look like, with AI at the helm.

Hyperscalers’ Growing Role in Grid Modernization: Expanding AI-Driven Initiatives

As Google embarks on its collaboration to modernize the U.S. power grid through AI, other hyperscalers are following suit with initiatives aimed at the challenges of an increasingly strained energy infrastructure. The intersection of AI, data centers, and energy resilience is rapidly emerging as a central focus for major players like Microsoft, Amazon, and Meta, which are aligning their strategies to accelerate grid modernization and optimization.

Microsoft’s AI-Powered Grid Optimization

Microsoft is another hyperscaler at the forefront of AI applications in grid management. The company has been exploring the potential of AI for grid optimization as part of its broader commitment to sustainability and energy efficiency.

In partnership with the Bonneville Power Administration (BPA) and other utility providers in the Pacific Northwest, Microsoft is leveraging AI to forecast and balance electricity demand across the region. The initiative, known as the “Grid Optimization Project,” aims to reduce energy waste and enhance grid reliability by predicting shifts in energy consumption with unprecedented accuracy.

By applying machine learning algorithms to real-time grid data, Microsoft’s AI tools can anticipate fluctuations in renewable energy generation, such as solar and wind, and adjust load distribution accordingly. The goal is to integrate renewable energy more seamlessly into the grid while maintaining stability and avoiding blackouts.

In addition, Microsoft has committed to providing its AI solutions to help utilities across the U.S. improve grid flexibility and resilience, positioning itself as a key player in transforming the power sector through digital infrastructure.

Amazon’s Renewable Integration and Demand Response

Amazon has also recognized the critical role AI will play in the future of grid modernization. Through the Amazon Web Services (AWS) platform, the company is actively developing AI models to enhance renewable energy integration and optimize energy consumption for its massive network of data centers.

As part of its commitment to reaching net-zero carbon emissions by 2040, Amazon is using AI to balance energy use and improve grid demand response, particularly in areas where renewable energy penetration is high and intermittency poses challenges.

One of Amazon’s standout efforts is its partnership with the California Independent System Operator (CAISO) to develop an AI-based energy management platform that predicts and mitigates the risks associated with renewable energy volatility. The system not only helps Amazon data centers adjust their energy usage during periods of low supply but also assists CAISO in managing grid congestion by offering real-time insights into demand patterns.

Amazon’s continued innovation in AI-driven energy solutions reflects the company’s broader strategy to decarbonize its operations while ensuring the reliability and efficiency of the power systems it relies on.

Meta’s Strategic Energy Investments and AI Integration

Meta is similarly exploring AI applications in grid management, but with a focus on accelerating the transition to renewable energy sources for its data centers. As part of its strategy to reach 100% renewable energy for global operations by 2030, Meta has invested in AI technologies designed to optimize energy procurement and minimize carbon emissions.

Through its partnerships with several utility providers, Meta is using AI to predict energy demand and automate the process of sourcing clean energy at the lowest cost. Meta’s AI system integrates data from smart grids and renewable energy sources to create an efficient energy portfolio, enabling the company to adjust its data center energy usage in real time.

The company is also investigating AI’s potential in demand-side management, which allows energy consumers to influence grid stability and optimize usage based on fluctuating supply. With its AI-powered solutions, Meta aims to demonstrate how large-scale energy consumption can be made more adaptable to the changing dynamics of the grid.

Strategic Implications for the Data Center Industry

For the data center industry, these AI-driven initiatives represent a new paradigm in grid interaction and energy management. As hyperscalers increasingly integrate AI into their operations, they are positioning themselves not only as innovators in energy optimization but also as active contributors to broader grid modernization efforts.

By creating smarter, more adaptive energy ecosystems, hyperscalers are paving the way for a more resilient grid capable of meeting the surging demand for energy from digital infrastructure. The growing role of hyperscalers in grid modernization also highlights the broader trend of digital infrastructure and energy systems co-evolving.

As AI continues to drive advancements in both data center operations and energy grid management, these companies are well-positioned to influence the future of power distribution and generation. The efforts made by Google, Microsoft, Amazon, and Meta underscore a pivotal shift: AI is not only a tool for powering the digital economy but also a critical enabler of sustainable and resilient energy systems.

In this context, collaborations like Google’s with PJM and Tapestry are more than just technical partnerships—they signal a new approach to energy management in the AI era. For the data center industry and the grid operators that serve it, this intersection of digital and energy infrastructure is likely to define the future of how power is distributed, optimized, and consumed at scale.