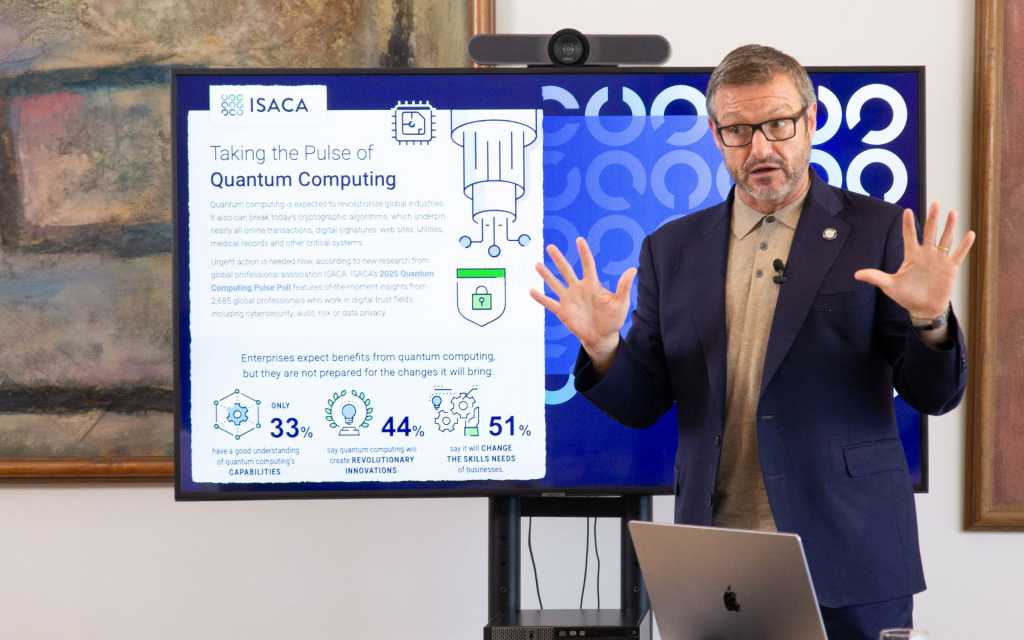

Gallego says the challenge is not theoretical but practical, adding, “We are already seeing clear warning signs.” He warns of “the so-called ‘harvest now, decrypt later’ attacks, which consist of intercepting encrypted data today to decrypt it in the future with quantum technology. This is not science fiction, but a concrete threat that requires immediate responses.”

Prepare now for Q-day

European organizations are not prepared to deal with this type of threat, Gallego says: “Only 4% of European organizations have a formal quantum threat strategy. And just 2% of the professionals surveyed feel genuinely familiar with these technologies. That gap between risk awareness and actual action is worrying. Preparedness cannot be optional; it must be a strategic priority.”

Gallego believes that “there is a significant lack of quantum literacy.” In his view, quantum computing breaks the technological mold and forces us to rethink how we manage privacy, identity, and data integrity.

“Some organizations believe this is a problem of the future, but the truth is that preparation must start today. Because when ‘Q-day’ comes—that moment when a quantum computer is able to break today’s encryption—it will be too late to react. What we don’t encrypt securely today will be vulnerable tomorrow,” he continues. “There are already standards developed by organizations such as NIST, and it is essential to start integrating them.”

The first step, he says, is for organizations to train their professionals in quantum fundamentals, in the new encryption algorithms, and in how to adapt their infrastructure to this new paradigm. The second is to identify which sensitive data is protected by algorithms that could soon become obsolete. The third, according to Gallego, is to begin the transition to post-quantum cryptography as soon as possible.

“Finally, I strongly believe in public-private collaboration. Real innovation happens when the state, business, and academia are rowing in the same direction. The Spanish Government’s Quantum Strategy, with more than 800 million euros of investment, is a big step in that direction,” he adds. “The ‘Q-day’ will come, we don’t know if in five, 10, or 15 years, but it is an inevitable horizon. We cannot afford a scenario in which all of our confidential information is massively exposed. Encryption-based security is non-negotiable.”