OpenAI has also expanded Stargate internationally, with plans for a UAE data center announced during Trump’s recent Gulf tour. The Abu Dhabi facility is planned as a 10-square-mile campus with 5 gigawatts of power.

Gogia said OpenAI’s selection of Oracle “is not just about raw compute, but about access to geographically distributed, enterprise-grade infrastructure that complements its ambition to serve diverse regulatory environments and availability zones.”

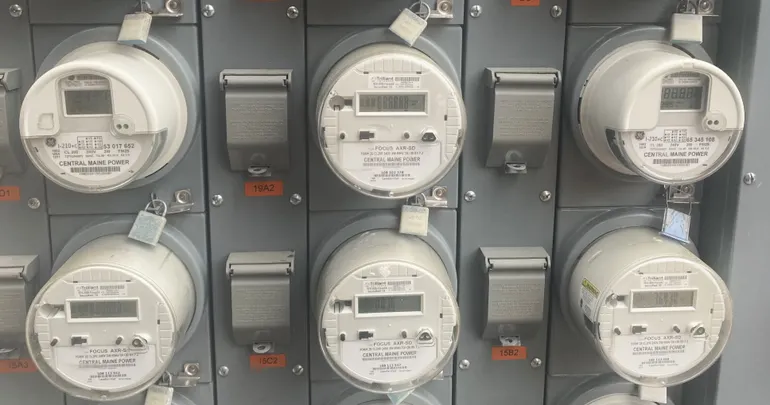

Power demands create infrastructure dilemma

The facility’s power requirements raise serious questions about AI’s sustainability. Gogia noted that the 1.2-gigawatt demand, which he described as “on par with a nuclear facility,” highlights “the energy unsustainability of today’s hyperscale AI ambitions.”

Shah warned that the power envelope keeps expanding. “As AI scales up and so does the necessary compute infrastructure needs exponentially, the power envelope is also consistently rising,” he said. “The key question is how much is enough? Today it’s 1.2GW, tomorrow it would need even more.”

This escalating demand could burden Texas’s infrastructure, potentially requiring billions in new power grid investments that “will eventually put burden on the tax-paying residents,” Shah noted. Alternatively, projects like Stargate may need to “build their own separate scalable power plant.”

What this means for enterprises

The scale of these facilities explains why many organizations are leasing AI computing capacity rather than building their own. The capital requirements and operational complexity exceed what most enterprises can handle independently.