There are 300 jobs at risk in the UK and Ireland as SSE and its renewables business launch a consultation on redundancies.

Unite the Union claims that more than 150 of the jobs at risk come from the “extremely profitable” SSE Renewables, which operates the Viking onshore wind farm.

Among the jobs reportedly at risk are critical support staff for control rooms and those working in maintenance.

Simon Coop, national officer for the union, said: “Staffing levels have been a major issue for Unite before these redundancies were announced, and this will make the situation much worse as our members working in the renewables space are already overstretched and being asked to work more and more hours.

“Their voices must be heard, and we will ensure that this happens.

“Unite is calling on SSE to reconsider its decision.”

Additionally, the union claims that workers have “complained about already being overworked due to there not being enough staff and have been unable to take proper breaks or time off”.

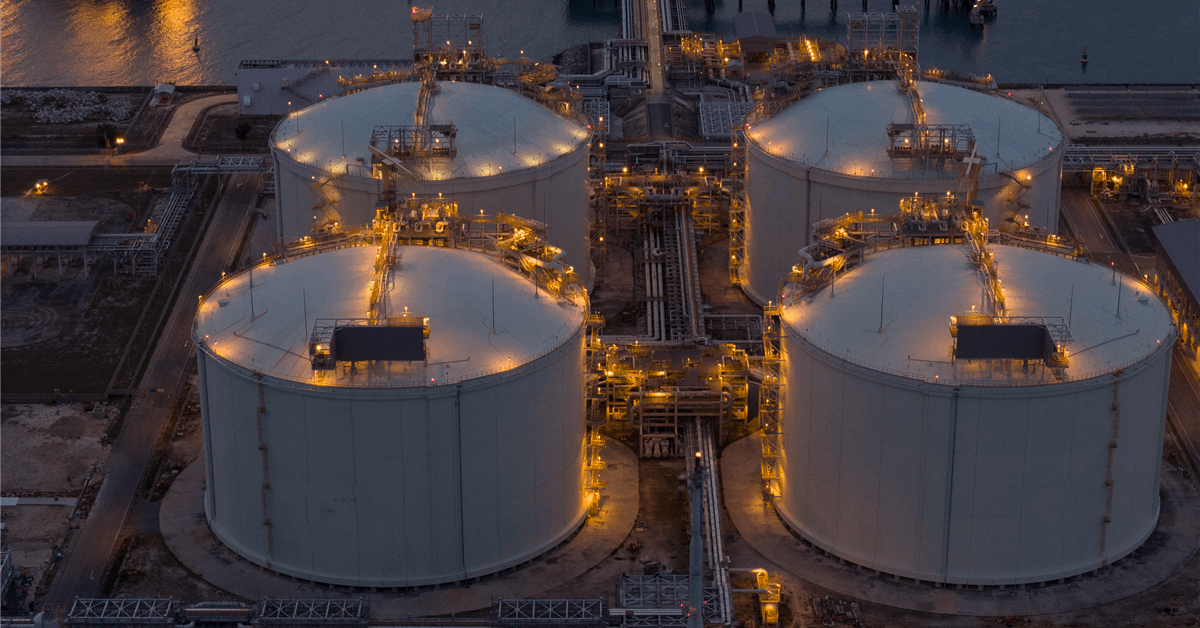

SSE’s renewables output increased by around 17% year-on-year in 2024. Generation output from SSE Renewables increased 26% in the first nine months to the end of December, compared to the same period in the previous year.

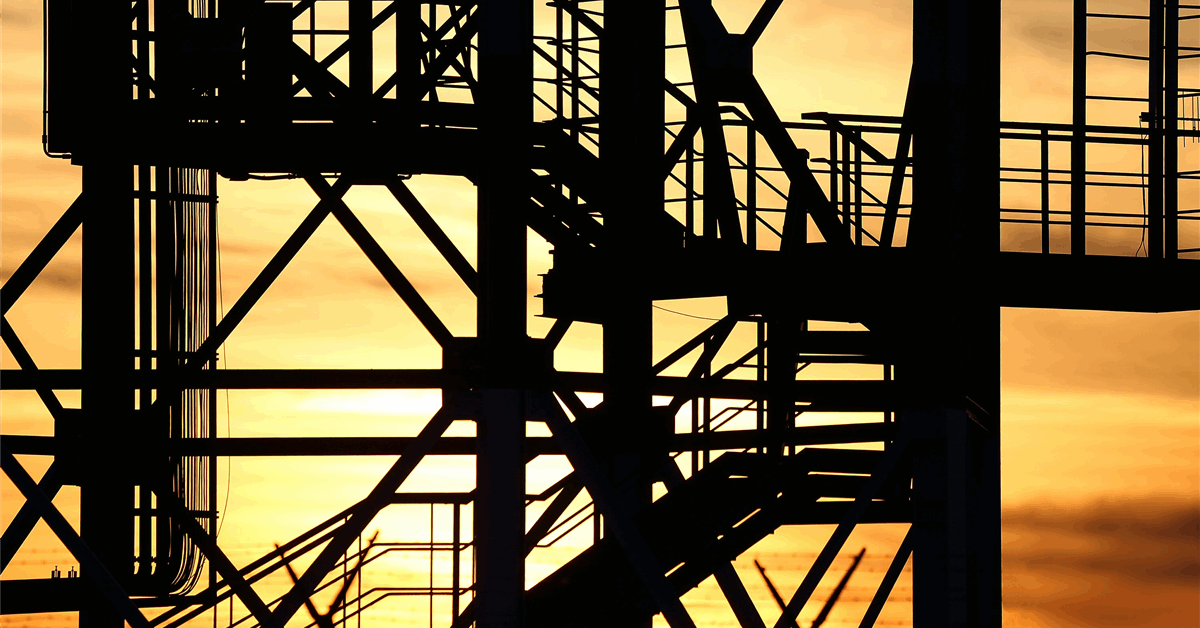

The business expects the first stage of its 3.6GW Dogger Bank wind development to come online this year.

The project is set to be the world’s largest fixed-bottom offshore wind farm.

SSE has a 40% stake in the project, alongside Equinor (OSL: EQNR) with 40% and Eni (IT: ENI) with 20%.

However, its Berwick Bank wind farm is still awaiting approval by the Scottish Government and missed out on the opportunity to take part in last year’s government funding round as a result.

Once operational, Berwick Bank will also be one of the largest projects of its type on the planet.

Sharon Graham, general secretary for the union, said: “SSE’s renewables operation is already extremely profitable and set to become even more so as the demand for renewables increases.

“The threat of job losses is a cynical attempt driven to further boost the company’s profits and not in the interests of workers or consumers.

“Unite will not stand by and watch these workers lose their jobs while shareholders and bosses profit. They have the full support of the union throughout this consultation process.”

The firm is set to release its full-year results to the end of March 2024 on 21 May this year.

Adjusted operating profit for SSE Renewables increased by 287% to £335.6m from £86.8m in the first half of the year, the firm reported in November.

During the first half of last year, SSE Renewables delivered its onshore Viking wind farm in Shetland, and its Seagreen offshore wind farm achieved commercial operations, adding to the business’s profits.

An SSE spokesperson responded to Unite’s comments: “After a period of sustained growth, we’re undertaking an efficiency review to ensure we continue to operate in the most efficient and effective way possible into the future.

“We have informed colleagues that this will unfortunately lead to reduced headcount in some parts of our business.

“We understand this process will be difficult for our teams, and we’ll be consulting trade unions and keeping colleagues informed throughout.”