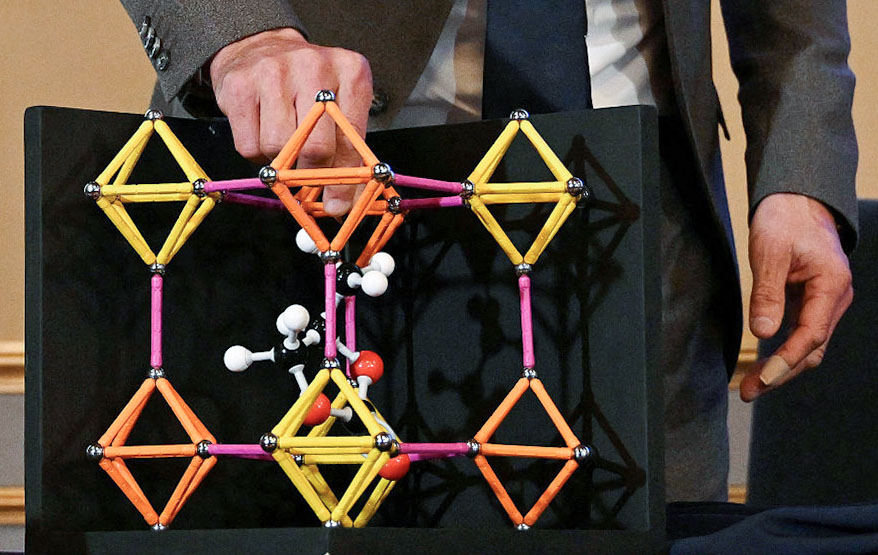

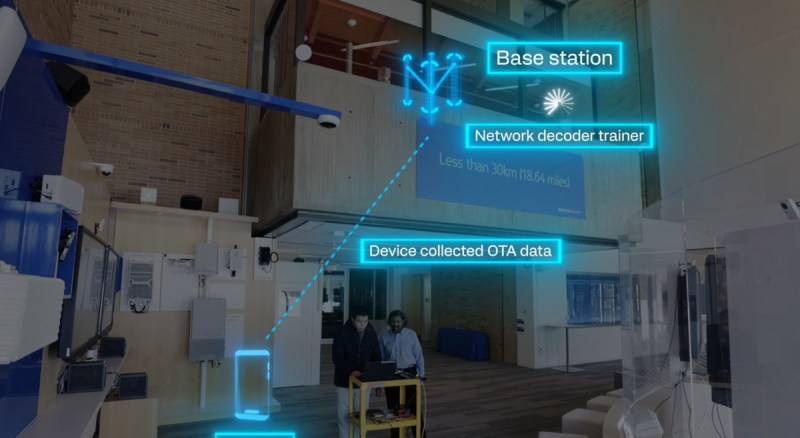

Qualcomm and Nokia Bell Labs showed how AI models from multiple vendors can interoperate in wireless networks.

Carl Nuzman, Bell Labs Fellow at Nokia Bell Labs, and Rachel Wang, principal engineer at Qualcomm, said in a blog post that they demonstrated the flexibility of sequential learning, which can facilitate network decoder-first or device encoder-first training.

They said Qualcomm and Nokia Bell Labs are continuing to work together to demonstrate the value of interoperable, multi-vendor AI in wireless networks. At Mobile World Congress 2024, they first demonstrated over-the-air interoperability of AI-enhanced channel state feedback encoder and decoder models.

These were running in reference mobile devices with Qualcomm’s 5G modem-RF system and a Nokia prototype base station, respectively. These interoperable models were developed by the two companies using a new technique referred to as sequential learning. Now they’re back at MWC 2025 with more.

Channel state feedback helps the network figure out the best way to send data to your device. As wireless conditions change, so does the optimal direction a transmission takes from the network to the device. Qualcomm and Nokia were able to make the network smarter and more efficient by generating precise beams with AI.

With sequential learning, multiple companies can co-design interoperable AI models without needing to share proprietary details of their implementations. Instead, a training dataset of model input/output pairs is shared from one company to the other.
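The idea can be illustrated with a toy sketch. Everything in it is an assumption made for illustration, not the companies' actual models: the "encoder" is a hypothetical 3-bit quantizer of a 2-D channel direction, and the "decoder" is a simple lookup table learned from the shared pairs. The point it shows is that the second vendor only ever sees input/output pairs, never the encoder's internals.

```python
import math
import random
from collections import defaultdict


def vendor_a_encoder(h):
    """Hypothetical proprietary encoder: quantize the direction of a 2-D
    channel vector into one of 8 angular sectors (a 3-bit feedback code)."""
    angle = math.atan2(h[1], h[0]) % (2 * math.pi)
    return int(angle / (2 * math.pi / 8)) % 8


def make_shared_dataset(n, rng):
    """Vendor A runs its encoder on sample channels and shares only the
    (input, output) pairs, not the encoder itself."""
    pairs = []
    for _ in range(n):
        h = (rng.gauss(0, 1), rng.gauss(0, 1))
        pairs.append((h, vendor_a_encoder(h)))
    return pairs


def train_vendor_b_decoder(pairs):
    """Vendor B fits a decoder from the pairs alone: for each code, store the
    average unit-norm direction of the inputs that produced that code."""
    sums = defaultdict(lambda: [0.0, 0.0])
    for h, code in pairs:
        norm = math.hypot(h[0], h[1])
        sums[code][0] += h[0] / norm
        sums[code][1] += h[1] / norm
    table = {}
    for code, (sx, sy) in sums.items():
        norm = math.hypot(sx, sy)
        table[code] = (sx / norm, sy / norm)
    return table


def cosine(u, v):
    """Cosine similarity between two 2-D vectors."""
    return (u[0] * v[0] + u[1] * v[1]) / (math.hypot(*u) * math.hypot(*v))


rng = random.Random(0)  # fixed seed so the sketch is repeatable
decoder_table = train_vendor_b_decoder(make_shared_dataset(2000, rng))

# A new channel is encoded on the device and decoded at the network side.
test_channel = (0.6, 0.8)
code = vendor_a_encoder(test_channel)
beam = decoder_table[code]
print(code, cosine(test_channel, beam))
```

A real system would use neural networks on measured channel data, but the division of knowledge is the same: the dataset is the only interface between the two designs.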

Building on this proof-of-concept, the companies have since continued working together to demonstrate the value, flexibility and scalability of interoperable AI for channel state feedback.

Wireless AI robustness in different physical environments

As AI technologies are deployed in real-world networks, it is important to ensure that models work robustly in diverse environments. Training datasets should be sufficiently diverse for AI models to learn effectively; however, it is unrealistic for them to cover all possible scenarios.

Thus, it is critical for AI models to generalize their training to handle new situations. In the collaboration, the firms studied three very different cell sites: an outdoor suburban location and two different indoor environments.

In the first scenario, they compared the performance of a common AI model trained with diverse datasets with hyper-local models that are trained at specific locations. They found the common AI model can work in different environments with performance comparable to that of the hyper-local models.

The companies later adapted the common model to include data from Indoor Site 2 (the Adapted Common model). Then they measured the user data throughput at four different locations inside Indoor Site 2. The common model came within 1% of the performance of the Adapted Common model in all cases, showing the robustness of the general common model to new scenarios.

AI-enhanced channel state feedback allows the network to transmit in a more precise beam pattern, improving the received signal strength, reducing interference, and ultimately providing higher data throughput. The companies measured this improvement by logging the data throughput experienced with AI-based feedback and with grid-of-beam-based feedback (3GPP Type I) as the mobile user moved between various locations in the cell.

Use of the AI feedback yielded higher throughput, with per-location throughput gains ranging from 15% to 95%. The throughput gains that will be observed in commercial systems under AI-enhanced CSF will depend on many factors. However, the results of this proof-of-concept, together with numerous simulation studies, suggest that the throughput with AI enhancements will be consistently higher than that achieved with legacy approaches.
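For readers who want the arithmetic behind a per-location gain figure, here is a minimal sketch. The location names and throughput values are hypothetical, chosen only so the results land on the two ends of the reported range; they are not measurements from the demonstration.

```python
def throughput_gain_percent(ai_mbps, legacy_mbps):
    """Relative gain of AI-based feedback over the legacy baseline, in percent."""
    if legacy_mbps <= 0:
        raise ValueError("legacy throughput must be positive")
    return 100.0 * (ai_mbps - legacy_mbps) / legacy_mbps


# Hypothetical (location, legacy Mbps, AI Mbps) readings, for illustration only.
measurements = [
    ("location A", 100.0, 115.0),
    ("location B", 80.0, 156.0),
]

gains = {
    name: throughput_gain_percent(ai, legacy)
    for name, legacy, ai in measurements
}

for name, gain in gains.items():
    print(f"{name}: {gain:.0f}% gain")
```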

Sequential learning can be carried out in two ways, either device encoder-first or network decoder-first, which have different implications for deployment and standardization. To support 3GPP’s increasing interest in the decoder-first approach, this year the companies replaced their original encoder-first demonstrations with decoder-first model training.

With the encoder-first approach demonstrated at MWC 2024, Qualcomm designed an encoder model, generated a training dataset of input/output pairs, and then shared the dataset with Nokia, which subsequently designed an interoperable decoder.

This year, with the decoder-first approach, Nokia designed a decoder model and generated and shared a training dataset of decoder input/output pairs for Qualcomm Technologies to use in designing an interoperable encoder. The companies found that models designed with either approach performed equally well, within a few percentage points.
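The decoder-first direction can be sketched the same way, again as a toy under stated assumptions rather than the companies' method. Here the hypothetical network-side decoder maps each of 8 feedback codes to a beam direction, and the device vendor builds an encoder purely from the shared (code, beam) pairs by picking the code whose decoded beam best matches the channel. All function names and the 8-beam layout are invented for illustration.

```python
import math

NUM_CODES = 8  # assumed size of the feedback codebook


def vendor_b_decoder(code):
    """Hypothetical proprietary decoder: map a feedback code to a unit-norm
    2-D beam direction at the center of one of 8 angular sectors."""
    angle = (code + 0.5) * 2 * math.pi / NUM_CODES
    return (math.cos(angle), math.sin(angle))


# Vendor B shares only decoder input/output pairs, not the decoder itself.
shared_pairs = [(code, vendor_b_decoder(code)) for code in range(NUM_CODES)]


def build_vendor_a_encoder(pairs):
    """Vendor A derives an encoder from the pairs: for a channel h, return the
    code whose decoded beam has the largest inner product with h."""
    def encoder(h):
        best_code, _ = max(
            pairs, key=lambda pair: h[0] * pair[1][0] + h[1] * pair[1][1]
        )
        return best_code
    return encoder


encoder = build_vendor_a_encoder(shared_pairs)

channel = (0.6, 0.8)
code = encoder(channel)
beam = dict(shared_pairs)[code]
# Cosine of the angle between the channel and the beam the network would use.
alignment = (channel[0] * beam[0] + channel[1] * beam[1]) / math.hypot(*channel)
print(code, round(alignment, 3))
```

In both directions the first mover's model stays private, and the second vendor works only from the pairs it receives.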

Bottom line

The prototype that Qualcomm Technologies and Nokia Bell Labs have jointly demonstrated represents a key step in moving AI-enhanced communication from concept to reality. The results show that the user experience can be significantly improved, in a robust way, via multiple learning modalities. As we learn to design interoperable, multi-vendor AI systems, we can start to realize enhanced capacity, improved reliability, and reduced energy consumption.
