A range of developments, primarily geopolitical in nature, have transformed this outlook. Now, sovereignty is just as much about the growing sense that operational, political, and even technological independence is essential, especially for EU-based enterprises.

SAP has embraced this concern. “The digital resilience of Europe depends on sovereignty that is secure, scalable and future-ready,” said Martin Merz, president, SAP Sovereign Cloud. “SAP’s full-stack sovereign cloud offering delivers exactly that, giving customers the freedom to choose their deployment model while helping ensure compliance up to the highest standards.”

This reflects the company’s commitment to supporting the EU’s “digital autonomy,” he said. The company has made digital sovereignty a strategic priority, and will invest €20 billion ($23.3 billion) to develop new digital sovereignty products for the EU as well as for other territories.

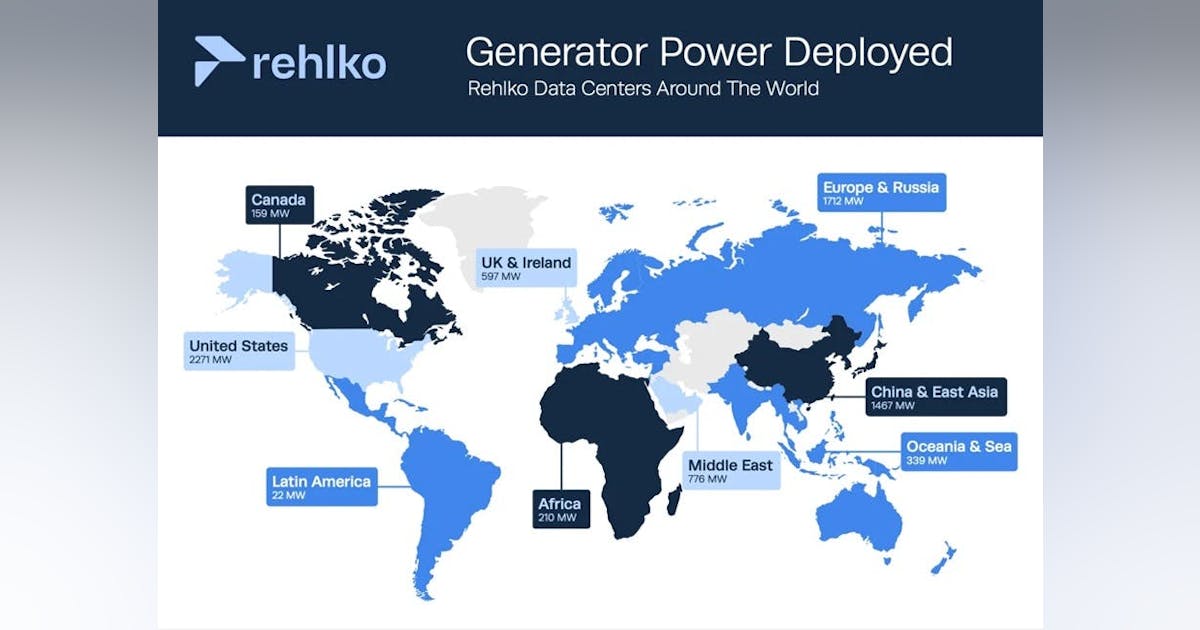

A decade ago, cloud services promoted the notion of a single global infrastructure market. Now it looks just as likely that global cloud infrastructure will be balkanized into geographical domains.

“For decades, enterprises have handed over too much power to their cloud providers – power over infrastructure, power over availability, and most importantly, power over their own data,” commented Garima Kapoor, co-founder and co-CEO of US AI object storage company MinIO.

“CIOs are realizing that outsourcing control to a public cloud provider is no longer an option. The concept of sovereignty is evolving. It’s no longer [viewed] just as a means of maintaining compliance with data regulations but is now viewed as a strategic and architectural imperative for enterprises that want to own their digital destiny,” she said.