
If you hadn’t heard, there’s a new AI star in town: DeepSeek. The subsidiary of Hangzhou, China-based quantitative analysis (quant) firm High-Flyer Capital Management has sent shockwaves throughout Silicon Valley and the wider world with its release earlier this week of DeepSeek R1, a new open source large reasoning model that rivals OpenAI’s most powerful available model, o1, on many benchmarks. It does so at a fraction of the cost, both for users accessing it and for DeepSeek itself in training it.

The advent of DeepSeek R1 has already reshuffled a consistently topsy-turvy, fast-moving, intensely competitive market for new AI models. In previous months, OpenAI jockeyed with Anthropic and Google for the most powerful proprietary models, while Meta Platforms often came in with “close enough” open source rivals. The difference this time is that the company behind the hot model is based in China, the geopolitical “frenemy” of the U.S., whose tech sector was widely viewed, until this moment, as inferior to Silicon Valley’s.

As such, it has caused no shortage of hand-wringing and existential angst among U.S. and Western bloc techies, who are suddenly doubting OpenAI and the general big tech strategy of throwing more money and more compute (graphics processing units, or GPUs, chips originally designed for gaming that are now typically used to train AI models) at the problem of building ever more powerful models.

Yet some Western tech leaders have had a largely positive public response to DeepSeek’s rapid ascent.

Marc Andreessen, a co-inventor of the pioneering Mosaic web browser, co-founder of the Netscape browser company and current general partner at the famed Andreessen Horowitz (a16z) venture capital firm, posted on X today: “Deepseek R1 is one of the most amazing and impressive breakthroughs I’ve ever seen — and as open source, a profound gift to the world [robot emoji, salute emoji].”

Yann LeCun, the Chief AI Scientist for Meta’s Fundamental AI Research (FAIR) division, posted on his LinkedIn account:

“To people who see the performance of DeepSeek and think:

‘China is surpassing the US in AI.’

You are reading this wrong.

The correct reading is:

‘Open source models are surpassing proprietary ones.’

DeepSeek has profited from open research and open source (e.g. PyTorch and Llama from Meta)

They came up with new ideas and built them on top of other people’s work.

Because their work is published and open source, everyone can profit from it.

That is the power of open research and open source.”

And even Mark “Zuck” Zuckerberg, Meta’s founder and CEO, appeared to counter the rise of DeepSeek with his own post on Facebook, promising that a new version of Meta’s open source AI model family, Llama, would be “the leading state of the art model” when it is released sometime this year. As he put it:

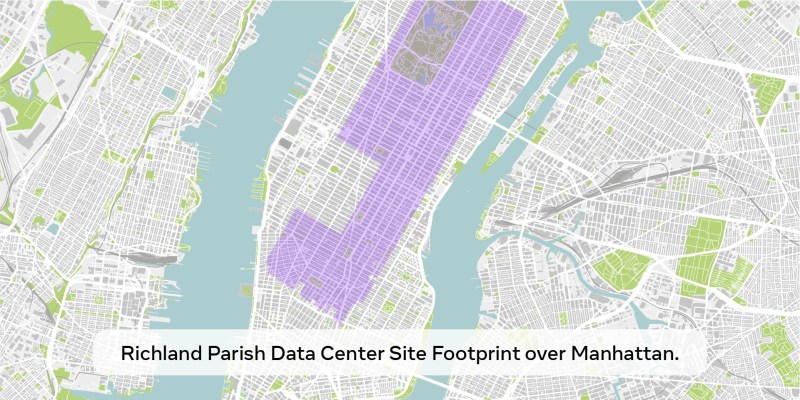

“This will be a defining year for AI. In 2025, I expect Meta AI will be the leading assistant serving more than 1 billion people, Llama 4 will become the leading state of the art model, and we’ll build an AI engineer that will start contributing increasing amounts of code to our R&D efforts. To power this, Meta is building a 2GW+ datacenter that is so large it would cover a significant part of Manhattan. We’ll bring online ~1GW of compute in ’25 and we’ll end the year with more than 1.3 million GPUs. We’re planning to invest $60-65B in capex this year while also growing our AI teams significantly, and we have the capital to continue investing in the years ahead. This is a massive effort, and over the coming years it will drive our core products and business, unlock historic innovation, and extend American technology leadership. Let’s go build!”

He even shared a graphic showing the planned 2-gigawatt data center mentioned in his post overlaid on a map of Manhattan.

Evidently, even as he espouses a commitment to open source AI, Zuck is not convinced that DeepSeek’s approach of optimizing for efficiency while using far fewer GPUs than major labs is the right one for Meta, or for the future of AI.

But with U.S. companies raising and spending record sums on new AI infrastructure that, as many experts have noted, depreciates rapidly (due to advances in hardware, chips, and software), the question remains which vision will win out in the end, and which company will become the world’s dominant AI provider. Or perhaps the market will always be split among many models, each with a smaller share. Stay tuned, because this competition is getting closer and fiercer than ever.
