Infrastructure and Supply Chain Race

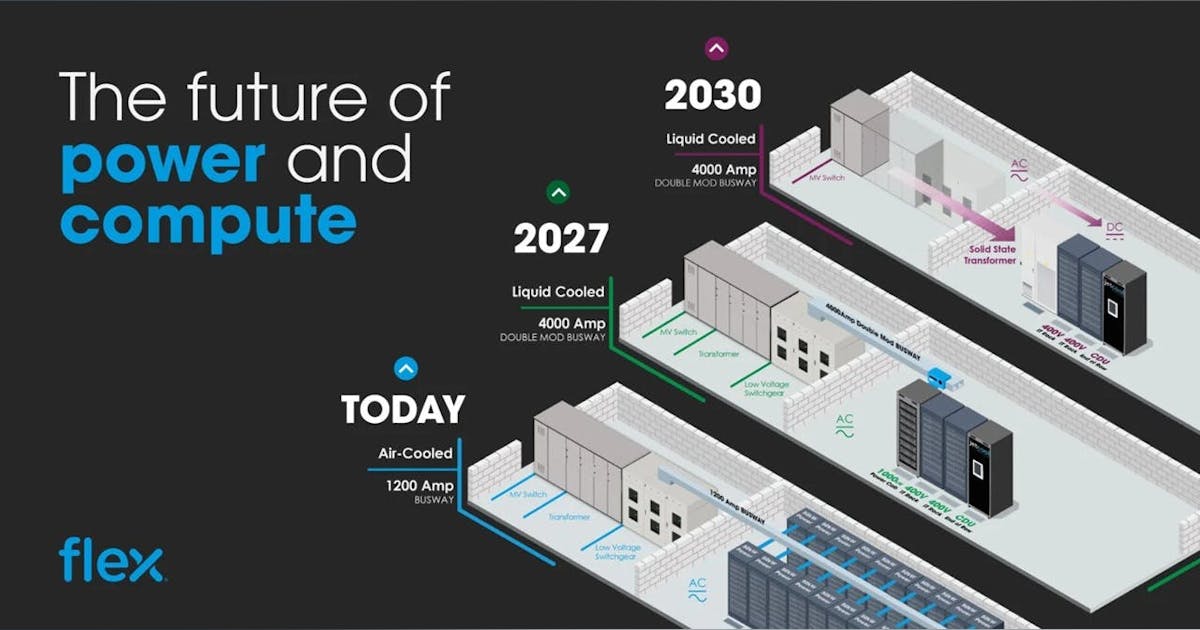

Cloud competition is increasingly defined by the ability to secure power, land, and chips, three resources that dictate project timelines and customer onboarding. Neoclouds and hyperscalers face a common set of constraints: local utility availability, substation interconnection bottlenecks, and fierce competition for high-density GPU inventory. Power is the gating factor for expansion, often a more severe constraint than chip shortages. Facilities are increasingly sited based on access to dedicated, reliable megawatt-scale electricity rather than traditional latency zones or network proximity.

AI growth forecasts point to four key ceilings: electrical capacity, chip procurement cycles, the latency wall between computation and data, and scalable data throughput for model training. With hyperscaler and neocloud deployments now competing for every available GPU from manufacturers, deployment agility has become a prime differentiator. Neoclouds distinguish themselves by orchestrating microgrid agreements, securing direct-source utility contracts, and compressing build-to-operational timelines. The ability to convert a bare site into a functional data hall on a shortened timeline gives neoclouds a material edge over traditional hyperscale deployments, which require broader campus and network-level integration cycles.

The aftereffects of COVID-era supply chain disruptions linger, with legacy operators struggling to source critical electrical components, switchgear, and transformers, sometimes waiting more than a year for equipment. As a result, neocloud providers have moved aggressively into site selection strategies, regional partnerships, and infrastructure stack integration to hedge risk and shorten delivery cycles. Microgrid solutions and island modes for power supply are increasingly used to ensure uninterrupted access to electricity during ramp-up periods and supply chain outages, fundamentally rebalancing the competitive dynamics of AI infrastructure deployment.

Creditworthiness, Capital, and Risk Management

Securing capital remains a decisive factor for the growth and sustainability of neoclouds. Project finance for campus-scale deployments hinges on demonstrable creditworthiness; lenders demand clear evidence of repayment capacity and tangible asset backing before underwriting multi-million or billion-dollar expansions. For neoclouds, which lack the diversified revenue streams of hyperscalers, creative financial engineering has become a necessity. Typical solutions include collateralizing GPU fleets, securing parent or investor guarantees, and offering equity partnerships, all designed to reassure institutional lenders or debt markets about the durability of their business model.

Risk management frameworks in the neocloud sector differ substantially from those of hyperscalers. Many neoclouds employ balance sheet leasing for real estate and hardware, using security deposits or collateral structures to mitigate capital exposure on short- and long-term projects. Larger project sponsors, especially those with global footprints, spread risk via diversified customer portfolios spanning regions or sectors. Assets such as high-demand GPU clusters are increasingly deployed as instruments to secure financing, with some contracts allowing for the pledge or resale of compute capacity in the event of default. Hyperscalers, by contrast, leverage their credit grades and liquidity to access lower-cost debt and finance expansion through cash flow from broader technology businesses.11

The past year has seen a marked shift in debt pricing as banks and institutional investors have become more comfortable with the neocloud model, moving away from aggressive, venture-backed financing toward large syndicated facilities typical of hyperscaler transactions. CoreWeave’s evolution is representative: the company advanced from small, risk-tolerant capital structures to closing multibillion-dollar secured debt facilities, a transformation that has boosted its market cap to $50 billion and positioned it as a creditworthy global player in the field.12 Traditional banks, previously wary of AI-specialized cloud operations, have tightened spreads and offered rates closer to those granted to hyperscalers, restructuring the competitive cost landscape for all providers.13

Business Models and Risk Sharing

Neocloud providers have adopted multi-pronged risk management and investment strategies to achieve rapid scale and operational resilience. Leasing models, both for real estate and hardware, are foundational. By avoiding full upfront capital expenditure and leveraging balance sheet leasing, neoclouds can quickly establish new data halls or expand into high-density regions without locking in long-term asset risk.14 Hardware assets, particularly GPU clusters, are often financed with security deposits or structured collateral, providing lenders with additional safeguards while allowing for scalable asset turnover as market conditions evolve.15

Strategic risk sharing extends into investment stack layers and joint venture programs. Partnerships like Microsoft’s recent $10 billion development agreement with CoreWeave illustrate how hyperscalers hedge risk and access next-generation GPU capacity by integrating neocloud infrastructure into their service cycle. Similar co-development ventures between Oracle and OpenAI, or CoreWeave and Crusoe, have enabled shared infrastructure buildouts and capital flexibility. These models span from campus-wide property leases to modular GPU financing, with institutional partners contributing capital and operational expertise to manage project scale and market volatility.16

Operational risk management further benefits from geographic and portfolio diversification. Flagship campuses, like CoreWeave’s Pennsylvania project, are structured with extensive CapEx sharing and risk-mitigating contracts, targeting stable, long-term returns and enterprise anchor tenants.14 Smaller, rapid-deployment data halls utilize colocation and asset-light models, allowing for flexible tenancy and dynamic resource allocation as projects scale up or pivot. As these partnerships mature, there is a shift from shell programs and basic capital sharing to full development control, such as CoreWeave’s acquisition and full operational management of new campuses, blending short-term flexibility with long-term infrastructure ownership.

Technical Architecture and Design Strategies

Neoclouds distinguish themselves through architectural strategies tailored to AI’s intense computational demands. Unlike hyperscalers, whose legacy infrastructure largely evolved from CPU-first cloud models, neoclouds build their platforms on GPU-native frameworks from inception. This includes optimized full-stack AI toolchains that integrate seamlessly with the latest deep learning frameworks and provide developer environments engineered for rapid iteration and deployment.5, 6

In terms of hardware design, neoclouds frequently adopt Nvidia reference architectures, copying tested GPU module layouts and airflow management schemes for simplicity and scalability in GPU-as-a-Service. Conversely, hyperscalers invest heavily in custom stack integration, meshing proprietary interconnect fabrics and specialized cooling solutions customized to their diverse workload and SKU demands.6

Further, neoclouds benefit from rapid modification and upgrade cycles and can retrofit or replace hardware without legacy system constraints. Legacy hyperscale data centers must balance the accelerated introduction of new GPU nodes with the maintenance of broad-purpose CPU infrastructure and diverse tenant requirements, which slows overall infrastructure evolution. Neoclouds’ focused AI workload profile allows for consistent optimization of workload density and power utilization as fresh hardware iterations roll out.

Extended lead times for critical components such as circuit boards, cooling systems, and specialized chips remain a challenge across operators. Neocloud providers adopt long-term procurement programs and aggressive CapEx strategies to hedge these risks, typically partnering closely with chip manufacturers and specialized suppliers to lock in volumes and pricing well in advance.2

The Economic Sprint and Performance Edge

The financial stakes in accelerating AI infrastructure deployment are monumental. Every month shaved off the deployment cycle for 100 MW of GPU infrastructure translates to over $100 million in incremental value realized through earlier model training, faster product iterations, and quicker time-to-market.17 This equation makes speed to deployment the primary competitive metric for neoclouds and hyperscalers alike.
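The arithmetic behind that figure can be sketched in a few lines. This is a rough illustration only: it assumes the value of acceleration scales linearly with capacity and with months saved, which the cited figure does not state, and it treats the quoted "over $100 million" as a floor of about $1 million per MW-month. The function name and the constant are hypothetical, introduced here for illustration.

```python
# Assumed rate derived from the text: at least $100M per month per 100 MW,
# i.e. roughly $1M per MW-month. Linear scaling is an assumption for
# illustration, not a sourced claim.
ASSUMED_VALUE_PER_MW_MONTH_USD = 100_000_000 / 100


def acceleration_value_usd(
    capacity_mw: float,
    months_saved: float,
    value_per_mw_month_usd: float = ASSUMED_VALUE_PER_MW_MONTH_USD,
) -> float:
    """Estimate the incremental value of bringing capacity online earlier."""
    if capacity_mw < 0 or months_saved < 0:
        raise ValueError("capacity_mw and months_saved must be non-negative")
    return capacity_mw * months_saved * value_per_mw_month_usd


if __name__ == "__main__":
    # Hypothetical scenarios: (capacity in MW, months shaved off the schedule)
    for mw, months in [(100, 1), (100, 3), (250, 2)]:
        value = acceleration_value_usd(mw, months)
        print(f"{mw} MW, {months} month(s) earlier: ${value:,.0f}")
```

Under these assumptions, a 100 MW build delivered three months early would be worth on the order of $300 million. Real projects are unlikely to scale this cleanly, so the output is best read as an order-of-magnitude guide.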

Neoclouds leverage their lean business models and specialized architectures, supported by distributed micro data centers and GPU-optimized stacks, to aggressively compress build times, sometimes bringing new capacity online in weeks rather than quarters or years.18 This rapid delivery creates a differentiated value proposition for enterprises requiring immediate compute, as well as hyperscalers hedging supply risk by integrating external GPU pools through partnerships and contractual arrangements.

The concept of a network collective presents a strategic advantage here: neoclouds, hyperscalers, and AI service providers interconnect their capacities and platforms, facilitating workload migration and elasticity with minimal added latency.19 This ecosystem enhances each operator’s performance while collectively shifting competitive dynamics in favor of agility and specialized service delivery. As a result, neocloud players such as CoreWeave and Crusoe gain not only speed but also strategic partnerships that reinforce their market position and contribute to the broader acceleration of AI innovation cycles.

Strategic Implications and Future Outlook

The question of whether neoclouds represent a transitional phase or a sustainable challenge to hyperscaler dominance remains nuanced, pointing to a mixed economy in cloud infrastructure.

Hyperscalers currently maintain control over most commodity layers, including large-scale compute, storage, and global networking, which remain essential substrates for digital services. However, neoclouds excel at delivering specialized developer experiences and directly monetizing AI workloads, enabling faster experimentation and iteration cycles. This specialization allows neoclouds to focus intensely on GPU-native environments, providing performance and cost advantages for specific AI-centric use cases that hyperscalers struggle to serve efficiently due to their broad, generalized infrastructures.

The market is witnessing accelerating convergence between these two models. Multi-cloud strategies increasingly blend neocloud speed and flexibility with hyperscaler reliability and scale, offering enterprises hybrid deployment options to optimize cost, performance, and regulatory compliance. Recent hyperscaler investment and acquisition trends reflect a recognition that integrating neocloud capabilities into hyperscale platforms strengthens their competitive position while hedging supply risks.

Looking ahead, regulatory frameworks and capital allocation decisions will shape the evolution of this ecosystem. Neoclouds face challenges including dependence on hyperscaler substrates, margin pressure from competition, and the need to scale profitably beyond early adoption phases. Meanwhile, hyperscalers must balance innovation with legacy system complexity and increasingly vigilant antitrust environments. Enterprise partnerships, compliance demands, and supply chain resilience will dictate investment priorities and consolidation patterns in the coming five years.

Neoclouds embody disruption and evolution. Their focused specialization and agile risk models have unsettled the status quo, compelling hyperscalers to adapt through strategic collaboration and product portfolio adjustment. The future landscape will likely feature a poly-cloud ecosystem blending hyperscaler scale with neocloud specialization, delivering mixed economies of scale and innovation aligned to the growing AI-driven demands of enterprise customers.20, 21

This evolving balance may redefine enterprise value extraction in the AI era, highlighting speed, flexibility, and nuance over pure scale. Enterprises and investors alike will need to track these dynamics closely to navigate a cloud future that is far less monolithic and much more specialized than the hyperscaler era alone suggested.