Many edge devices can periodically send summarized or selected inference output back to a central system for model retraining or refinement. That feedback loop helps the model improve over time while still keeping most decisions local. To run efficiently on constrained edge hardware, the AI model is often optimized beforehand with techniques such as quantization (which reduces numerical precision), pruning (which removes redundant parameters), or knowledge distillation (which trains a smaller model to mimic a larger one). These optimizations reduce the model’s memory, compute, and power demands so it can run more easily on an edge device.
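To make quantization and pruning more concrete, here is a minimal sketch in plain Python. It assumes a model's weights can be treated as a flat list of floats, and it uses symmetric per-tensor int8 quantization and magnitude-based pruning as illustrative choices. The function names and sample weights are hypothetical; production toolchains apply these ideas per layer and with calibration data.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in quantized]


def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:n_prune])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


# Hypothetical weights, for illustration only.
weights = [0.82, -0.03, 0.41, -1.27, 0.005, 0.66]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

pruned = prune_by_magnitude(weights, sparsity=0.5)

print("int8 values:", quantized)
print("max round-trip error:", max_error)
print("pruned weights:", pruned)
```

Each weight now fits in one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale factor. Pruning trades accuracy for sparsity in a similar way, which is why both are usually followed by an accuracy check before deployment.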

What technologies make edge AI possible?

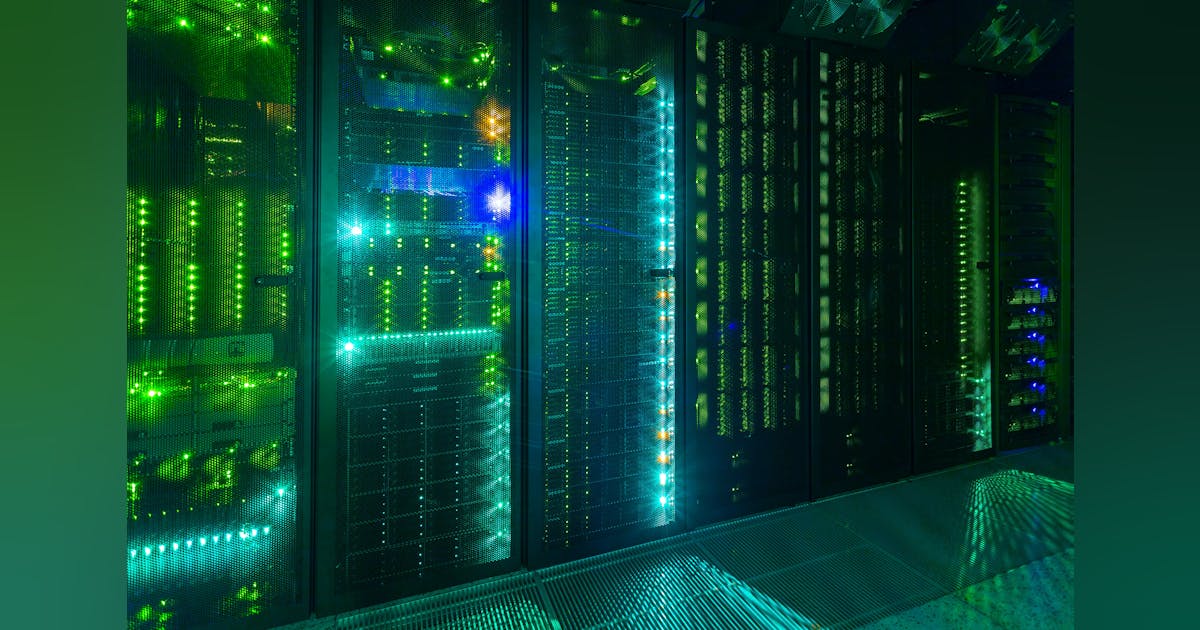

The concept of the “edge” always assumes that edge devices are less computationally powerful than data centers and cloud platforms. While that remains true, overall improvements in computational hardware have made today’s edge devices much more capable than those designed just a few years ago. In fact, a whole host of technological developments have come together to make edge AI a reality.

Specialized hardware acceleration. Edge devices now ship with dedicated AI accelerators (NPUs, TPUs, GPU cores) and system-on-chip units tailored for on-device inference. For example, companies like Arm have integrated AI-acceleration libraries into standard frameworks so models can run efficiently on Arm-based CPUs.

Connectivity and data architecture. Edge AI often depends on reliable, low-latency links (e.g., 5G, Wi-Fi 6, LPWAN) and architectures that move compute closer to data. Combining edge nodes, gateways, and local servers means less reliance on distant clouds. And technologies like Kubernetes can provide a consistent management plane from the data center to remote locations.

Deployment, orchestration, and model lifecycle tooling. Edge AI deployments must support model-update delivery, device and fleet monitoring, versioning, rollback, and secure inference, especially when orchestrated across hundreds or thousands of locations. VMware, for instance, offers traffic management capabilities to support AI workloads.
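As a rough illustration of versioning and rollback across a fleet, the sketch below installs a new model version on each device and reverts any device that fails a health check. Everything here is hypothetical: the `EdgeDevice` class, the `roll_out` function, the device names, and the health check are stand-ins, not the API of any particular orchestration product.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeDevice:
    device_id: str
    active_version: str
    history: list = field(default_factory=list)  # previously active versions

    def install(self, version):
        """Activate a new model version, remembering the old one."""
        self.history.append(self.active_version)
        self.active_version = version

    def rollback(self):
        """Return to the most recent previous version, if there is one."""
        if self.history:
            self.active_version = self.history.pop()


def roll_out(devices, version, health_check):
    """Install `version` on every device; roll back those that fail the check."""
    failed = []
    for device in devices:
        device.install(version)
        if not health_check(device):
            device.rollback()
            failed.append(device.device_id)
    return failed


# Hypothetical fleet and health check, for illustration only.
fleet = [EdgeDevice(f"cam-{i:02d}", "v1.0") for i in range(1, 4)]


def passes_health_check(device):
    # Stand-in for a real post-update validation (e.g. a test inference).
    return device.device_id != "cam-02"


failed_devices = roll_out(fleet, "v1.1", passes_health_check)
versions = {d.device_id: d.active_version for d in fleet}

print("rolled back:", failed_devices)
print("active versions:", versions)
```

A real rollout would typically be staged (a small canary group first) and would report results to a central monitoring system, but the core bookkeeping is the same: every device records which version it runs and what it can fall back to.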

Local data processing and privacy-sensitive architecture. Edge AI relies on local data collection and inference so that sensitive data doesn’t always travel to the cloud. That capability has been boosted by advances in hardware (secure enclaves, trusted execution environments) and software (privacy-preserving ML, on-device inference libraries), making it possible to run models offline. This makes edge deployment viable in regulated industries and low-connectivity environments.