
It’s 2:13 a.m. on a Sunday and the SOC teams’ worst nightmares are about to come true.

Attackers on the other side of the planet are launching a full-scale attack on the company’s infrastructure. Thanks to multiple unpatched endpoints that haven’t seen an update since 2022, they blew through its perimeter in less than a minute.

Attackers with the skills of a nation-state team are after Active Directory to lock down the entire network while creating new admin-level privileges that will lock out any attempt to shut them down. Meanwhile, other members of the attack team are unleashing legions of bots designed to harvest gigabytes of customer, employee and financial data through an API that was never disabled after the last major product release.

In the SOC, alerts start lighting up consoles like the latest Grand Theft Auto on a Nintendo Switch. SOC analysts, trying to sleep off another six-day week during which many clocked nearly 70 hours, are getting pinged on their cell phones.

The CISO gets a call around 2:35 a.m. from the company’s MDR provider saying there’s a large-scale breach going down. “It’s not our disgruntled accounting team, is it? The guy who tried an ‘Office Space’ isn’t at it again, is he?” the CISO asks, half-awake. The MDR team lead says no, this is inbound from Asia, and it’s big.

Cybersecurity’s coming storm: gen AI, insider threats, and rising CISO burnout

Generative AI is creating a digital diaspora of techniques, technologies and tradecraft that everyone, from rogue attackers to nation-state cyber armies trained in the art of cyberwar, is adopting. Insider threats are growing, too, accelerated by job insecurity and rising inflation. All these challenges and more fall on the shoulders of the CISO, so it’s no wonder more of them are dealing with burnout.

AI’s meteoric rise for adversarial and legitimate use is at the center of it all. Getting the most significant benefit from AI to improve cybersecurity while reducing risk is what boards of directors are pushing CISOs to achieve.

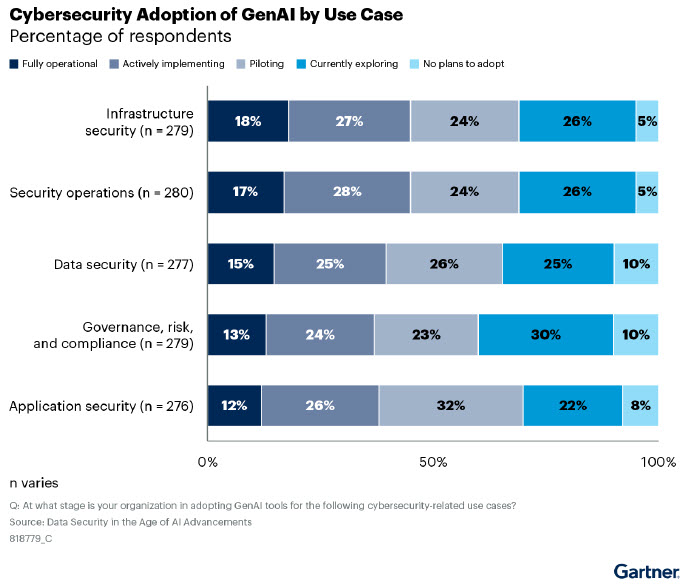

That’s not an easy task, as AI security is evolving very quickly. In Gartner’s latest Dataview on security and risk management, the analyst firm addressed how leaders are responding to gen AI. They found that 56% of organizations are already deploying gen AI solutions, yet 40% of security leaders admit significant gaps in their ability to effectively manage AI risks.

Gen AI is being deployed most in infrastructure security, where 18% of enterprises are fully operational and 27% are actively implementing gen AI-based systems today. Second is security operations, where 17% of enterprises have gen AI-based systems fully in use. Data security is the third most popular use case, with 15% of enterprises using gen AI-based systems to protect cloud, hybrid and on-premises data storage systems and data lakes.

Insider threats demand a gen AI-first response

Gen AI has completely reordered the internal threatscape of every business today, making insider threats more autonomous, insidious and challenging to identify. Shadow AI is the threat vector no CISO imagined would exist five years ago, and now it’s one of the most porous threat surfaces.

“I see this every week,” Vineet Arora, CTO at WinWire, recently told VentureBeat. “Departments jump on unsanctioned AI solutions because the immediate benefits are too tempting to ignore.” Arora is quick to point out that employees aren’t intentionally malicious. “It’s crucial for organizations to define strategies with robust security while enabling employees to use AI technologies effectively,” Arora explains. “Total bans often drive AI use underground, which only magnifies the risks.”

“We see 50 new AI apps a day, and we’ve already cataloged over 12,000,” said Itamar Golan, CEO and co-founder of Prompt Security, during a recent interview with VentureBeat. “Around 40% of these default to training on any data you feed them, meaning your intellectual property can become part of their models.”

Traditional rule-based detection models are no longer sufficient. Leading security teams are shifting toward gen AI-driven behavioral analytics, which establish dynamic baselines of employee activity, identify anomalies against those baselines in real time and help contain risks and potential threats.
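To make the idea of a dynamic baseline concrete, here is a minimal Python sketch. It is not how any vendor named in this article works and it involves no gen AI; it only illustrates the underlying principle with a simple rolling z-score. The class name `BehaviorBaseline`, the metric (megabytes downloaded per day) and the threshold values are all assumptions chosen for illustration.

```python
from collections import defaultdict, deque
from statistics import mean, stdev


class BehaviorBaseline:
    """Hypothetical per-user rolling baseline over the last `window` normal observations."""

    def __init__(self, window=30, threshold=3.0, min_history=5):
        self.threshold = threshold      # z-score above which a value is flagged
        self.min_history = min_history  # observations needed before flagging starts
        self.history = defaultdict(lambda: deque(maxlen=window))

    def observe(self, user, value):
        """Return True if `value` deviates sharply from the user's own baseline.

        Values judged normal are added to the baseline; flagged values are
        left out so a single spike does not shift what counts as normal.
        """
        past = self.history[user]
        anomalous = False
        if len(past) >= self.min_history:
            mu = mean(past)
            sigma = stdev(past)
            if sigma == 0:
                # Perfectly flat history: any change at all is a deviation.
                anomalous = value != mu
            else:
                anomalous = abs(value - mu) / sigma > self.threshold
        if not anomalous:
            past.append(value)
        return anomalous


if __name__ == "__main__":
    baseline = BehaviorBaseline(window=10)
    # Assumed metric: megabytes downloaded per day by one employee.
    for mb in [100, 110, 95, 105, 98, 102]:
        baseline.observe("alice", mb)
    print(baseline.observe("alice", 5000))  # a sudden bulk download
```

Because the baseline is kept per user and updated continuously, the same 5,000 MB download could be routine for a data engineer and a red flag for someone in accounting, which is the distinction static rules tend to miss.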

Vendors, including Prompt Security, Proofpoint Insider Threat Management, and Varonis, are rapidly innovating with next-generation AI-powered detection engines that correlate file, cloud, endpoint and identity telemetry in real time. Microsoft Purview Insider Risk Management is also embedding next-generation AI models to autonomously identify high-risk behaviors across hybrid workforces.

Conclusion – Part 1

SOC teams are in a race against time, especially if their systems aren’t integrated with each other and the more than 10,000 alerts a day they generate aren’t syncing up. An attack from the other side of the planet at 2:13 a.m. is going to be a challenge to contain with legacy systems. With adversaries relentlessly fine-tuning their tradecraft with gen AI, more businesses need to step up and be smarter about getting more value out of their existing systems.

Push cybersecurity vendors to deliver the maximum value from the systems already installed in the SOC. Get integration right and avoid having to swivel chairs across the SOC floor to check alert integrity from one system to the next. Be able to tell when an intrusion isn’t a false alarm. Attackers are showing a remarkable ability to reinvent themselves on the fly. It’s time more SOCs and the companies relying on them did the same.
