
Agentic AI is the latest big trend in generative AI, but what comes after that?

While full artificial general intelligence (AGI) is likely still some time in the future, there might well be an intermediate step with an approach known as ambient agents.

LangChain, the agentic AI pioneer, introduced the term “ambient agents” on January 14. The technology that LangChain develops includes its eponymous open source LangChain framework that enables organizations to chain different large language models (LLMs) together to get a result. LangChain Inc. raised $24 million in funding in February 2024. The company also has a series of commercial products including LangSmith for LLM Ops.

With a traditional AI interface, users typically interact with an LLM via text prompts to initiate an action. Agentic AI generally refers to LLM-powered systems that take actions on the user’s behalf. The concept of ambient agents takes that paradigm a step further.

What are ambient agents?

Ambient agents are AI systems that run in the background, continuously monitoring event streams and acting when triggered, according to pre-set instructions and user intent.
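As a rough illustration of that definition, here is a minimal sketch of a background loop that watches an event stream and acts only when a pre-set instruction is triggered. Everything in it is an assumption made for illustration: the `Event` and `Rule` types, the `run_ambient_agent` function and the sample events are hypothetical, and the simple predicates stand in for the LLM judgment a real ambient agent would use. It is not LangChain code.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Event:
    source: str   # hypothetical labels such as "email" or "social"
    payload: str


@dataclass
class Rule:
    # A pre-set instruction: when `matches` is true, run `action`.
    matches: Callable[[Event], bool]
    action: Callable[[Event], str]


def run_ambient_agent(events: Iterable[Event], rules: list[Rule]) -> list[str]:
    """Watch an event stream and act only when a rule is triggered."""
    log = []
    for event in events:      # a real deployment would read an unbounded stream
        for rule in rules:
            if rule.matches(event):
                log.append(rule.action(event))
                break         # first matching rule wins
    return log


rules = [
    Rule(
        matches=lambda e: e.source == "email" and "invoice" in e.payload.lower(),
        action=lambda e: f"flag for review: {e.payload}",
    ),
]
stream = [
    Event("email", "Invoice #12 attached"),
    Event("social", "New follower"),
    Event("email", "Lunch on Friday?"),
]
actions = run_ambient_agent(stream, rules)
print(actions)
```

Only the first event triggers an action here; the other two pass through without the user being involved.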

While the term “ambient agents” is new, the concept of ambient intelligence, where AI is always listening, is not. Amazon refers to its Alexa personal assistant technology as enabling ambient intelligence.

The goal of ambient agents is to automate repetitive tasks and scale the user’s capabilities by having multiple agents running persistently, rather than the human user having to call them up and interact with each one, one-on-one. This allows the user to focus on higher-level tasks while the agents handle routine work.

To help prove out and advance the concept of ambient agents, LangChain has developed two initial use cases, one that monitors email and another for social media, to help users manage and respond when needed.

“I think agents in general are powerful and exciting and cool,” Harrison Chase, cofounder and CEO of LangChain, told VentureBeat. “Ambient agents are way more powerful if there’s a bunch of them doing things in the background, you can just scale yourself way more.”

The tech leverages many open-source solutions, and LangChain has not yet indicated how much it will charge for use of any new tools.

How ambient agents work to improve AI usability

Like many great technology innovations, the original motivation for ambient agents wasn’t to create a new paradigm, but rather to solve a real problem.

For Chase, the problem is one that is all too familiar for many of us: email inbox overload. Chase began his journey to create ambient agents to solve email challenges. Six months ago he started building an ambient agent for his own email.

Chase explained that the email assistant categorizes his emails, handling the triage process automatically. He no longer has to manually sort through his inbox, as the agent takes care of it. Through his own use of the agent inbox over an extended period, Chase was able to refine and improve its capabilities. He noted that it started off imperfect, but by using it regularly and addressing the pain points, he was able to enhance the agent’s performance.

To be clear, the email assistant isn’t some kind of simplistic rules-based system for sorting email. It’s a system that actually understands his email and helps him to decide how to manage it.

The ambient agent architecture for the email assistant use case

The architecture of Chase’s email assistant is quite complex, involving multiple components and language models.

“It starts off with a triage step that’s kind of like an LLM and a pretty complicated prompt and some few-shot examples which are retrieved semantically from a vector database,” Chase explained. “Then, if it’s determined that it should try to respond, it goes to a drafting agent.”
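To make the idea of semantically retrieved few-shot examples concrete, the sketch below picks the stored examples most similar to an incoming email and places them in a triage prompt. It is a toy under stated assumptions: the word-count `embed` function stands in for a learned embedding model, the in-memory `EXAMPLES` list stands in for a vector database, and the labels `respond`, `ignore` and `notify` are hypothetical. None of this is taken from Chase's actual assistant.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical labeled examples standing in for a vector database.
EXAMPLES = [
    ("Can we schedule a meeting next week?", "respond"),
    ("Your weekly newsletter digest is here", "ignore"),
    ("Security alert: new login to your account", "notify"),
]


def retrieve_few_shot(email: str, k: int = 2) -> list[tuple[str, str]]:
    """Return the k stored examples most similar to the incoming email."""
    query = embed(email)
    ranked = sorted(EXAMPLES, key=lambda ex: cosine(query, embed(ex[0])), reverse=True)
    return ranked[:k]


def build_triage_prompt(email: str) -> str:
    """Assemble a prompt that shows the retrieved examples before the new email."""
    shots = "\n".join(
        f"Email: {text}\nLabel: {label}" for text, label in retrieve_few_shot(email)
    )
    return (
        "Classify the email as respond, ignore, or notify.\n"
        f"{shots}\nEmail: {email}\nLabel:"
    )


top = retrieve_few_shot("Could we schedule a meeting on Tuesday?", k=1)
print(top)
print(build_triage_prompt("Could we schedule a meeting on Tuesday?"))
```

The prompt string would then be sent to whichever LLM performs the triage step; that call is left out because the article does not specify it.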

Chase further explained that the drafting agent has access to additional tools, including a sub-agent specifically for interacting with the calendar:

“There’s an agent that I have specifically for interacting with the calendar, because actually LLMs kind of suck at dates,” Chase said. “So I had to have a dedicated agent just to interact with the calendar.”

After the draft response is generated, Chase said there’s an additional LLM call that rewrites the response to ensure the correct tone and formatting.

“I found that having the LLM try to call all these tools and construct an email and then also write in the correct tone was really tricky, so I have a step explicitly for tone,” Chase said.
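The flow Chase describes (triage, then a drafting agent with a calendar sub-agent, then a separate tone pass) can be sketched as a chain of small functions. This is only an outline under assumptions: every function below is a hypothetical stub with hard-coded behavior where a real system would call an LLM or a calendar API, and the names are invented for illustration.

```python
from typing import Callable, Optional


def triage(email: str) -> bool:
    """Stub for the triage LLM: decide whether the email deserves a reply."""
    return "?" in email


def calendar_agent(request: str) -> str:
    """Stub for the dedicated calendar sub-agent (hard-coded placeholder)."""
    return "Tuesday 10:00"


def draft_reply(email: str, tools: dict[str, Callable[[str], str]]) -> str:
    """Stub for the drafting agent, which may call tools such as the calendar."""
    if "meet" in email.lower():
        slot = tools["calendar"](email)
        return f"i am free {slot}"
    return "thanks, got it"


def rewrite_tone(draft: str) -> str:
    """Stub for the final LLM call that fixes tone and formatting."""
    return f"Hi,\n\n{draft[0].upper()}{draft[1:]}.\n\nBest regards"


def handle_email(email: str) -> Optional[str]:
    """Run the full pipeline; return None when triage decides not to respond."""
    if not triage(email):
        return None
    draft = draft_reply(email, {"calendar": calendar_agent})
    return rewrite_tone(draft)


reply = handle_email("Can we meet next week?")
skipped = handle_email("FYI: the build passed.")
print(reply)
print(skipped)
```

Keeping tone as its own step mirrors the point in Chase's quote: the drafting stage only has to get the content and tool calls right, and a later pass handles style.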

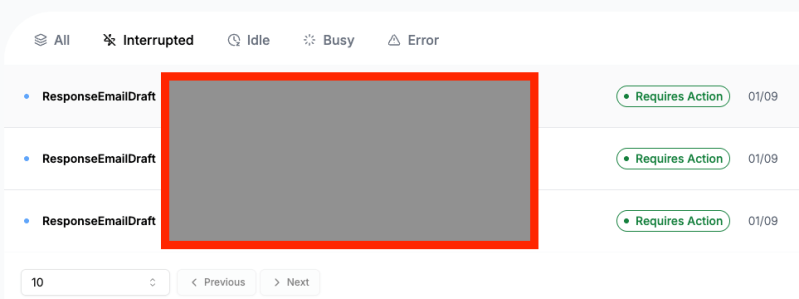

The agent inbox as a way to control and monitor agents

A key part of the ambient agent experience is having control and visibility into what the agents are doing.

Chase noted that in an initial implementation, he just had agents message via Slack, but that quickly became unwieldy.

Instead, LangChain designed a new user interface, the agent inbox, specifically for interacting with ambient agents.

The system displays all open lines of communication between users and agents and makes it easy to track outstanding actions.
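One way to picture such an inbox is as a list of proposals from agents, each waiting for a human decision. The sketch below is a hypothetical data structure, not the agent inbox LangChain built: the `AgentInbox`, `InboxItem` and `Status` names and their methods are assumptions made purely for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class InboxItem:
    agent: str       # which agent raised the item
    proposal: str    # what the agent wants to do
    status: Status = Status.PENDING


@dataclass
class AgentInbox:
    items: list[InboxItem] = field(default_factory=list)

    def submit(self, agent: str, proposal: str) -> int:
        """An agent files a proposal; the returned index identifies it."""
        self.items.append(InboxItem(agent, proposal))
        return len(self.items) - 1

    def resolve(self, item_id: int, approve: bool) -> None:
        """The human confirms or rejects a proposed action."""
        self.items[item_id].status = Status.APPROVED if approve else Status.REJECTED

    def outstanding(self) -> list[InboxItem]:
        """Everything still waiting on the user."""
        return [item for item in self.items if item.status is Status.PENDING]


inbox = AgentInbox()
first = inbox.submit("email", "Send draft reply")
inbox.submit("social", "Post thread")
inbox.resolve(first, approve=True)
pending = [item.agent for item in inbox.outstanding()]
print(pending)
```

After the email proposal is approved, only the social media item remains outstanding, which is the kind of at-a-glance view the inbox is meant to provide.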

How to build an ambient agent

LangChain is first and foremost a tool for developers, and it will now also help them build and deploy ambient agents.

Any developer can use the open-source LangChain technology to build an ambient agent, though additional tools can simplify the process. Chase explained that the agent inbox he built is in some respects a view on top of the LangGraph platform. LangGraph is an open-source framework for building agents that provides the infrastructure for operating long-running background jobs.

On top of that, LangChain is using its commercial LangSmith platform, which provides observability and evaluation for agents. This helps developers put agents into production with the necessary monitoring and evaluation tools to ensure they are performing as expected.

Ambient agents: A step toward using generalized intelligence

Chase is optimistic that the concept of ambient agents will catch on with developers in the coming months and years.

Ambient agents bring the prospect of even more autonomy to AI, enabling it to monitor an event stream and take intelligent actions. Chase still expects that there will be a need for keeping humans in the loop as part of the ambient agent experience. But humans need only confirm and validate actions, rather than figure out what needs to be done.

“I think it’s a step towards harnessing and using more generalized intelligence,” Chase said.

Chase noted that it’s more likely that true AGI will come from improvements in reasoning models. That said, making better use of models is where the concept of ambient agents will bring value.

“There’s still a lot of work to be done to make use of the models, even after they become really intelligent,” Chase said. “I think the ambient agent style of interfacing with them will absolutely be an unlock for using this general form of intelligence.”

An open-source version of the email assistant is currently available. LangChain is releasing a new social media ambient agent today, and will make an open-source version of the agent inbox available on Thursday, January 16.
