BP Plc’s “fundamental reset” on Wednesday is the most highly anticipated strategy shift for an oil major in several years.

For the first time since taking the reins, Chief Executive Officer Murray Auchincloss will lay out his new vision, with the stakes high after Elliott Investment Management bought up about 5% of the company in order to push for big changes.

The activist investor will decide its next move based on how the CEO’s presentation goes, and here are five things to watch:

New Narrative

Auchincloss took charge of BP a year ago pledging an “unchanged direction of travel” from his predecessor, who had been shrinking oil and gas production and expanding clean energy. That language has now emphatically changed, with a promise to forge a “new direction” that’s “NOT business as usual.”

The CEO has already taken some decisions that indicate how things will change, such as spinning off BP’s offshore wind business and stopping some biofuels and hydrogen projects. Clearer rhetoric on the company’s new priorities will be just as important as the numbers in Wednesday’s “make-or-break” strategy update, HSBC Holdings Plc analyst Kim Fustier said in a research note.

“How BP frames the shift and its openness in admitting its past mistakes are equally important,” Fustier said. She will be looking for the company to retire favorite phrases of former CEO Bernard Looney, such as “reimagining energy” and “transition growth engines.”

Balance Sheet

For some close observers, including UBS Group AG analyst Josh Stone, the minimum threshold Auchincloss must cross on Wednesday is a clear plan to strengthen BP’s balance sheet.

The company has had greater leverage than its peers for many years, but the gap has widened since Looney’s 2020 shift away from oil and gas. This is important because BP, alone among its peers, is seen as not having strong enough finances to maintain the pace of its share buybacks this year.

Analysts expect lower annual capital investment than the $14 billion to $18 billion range previously guided by BP, with less spending on renewables and more on oil and gas. The company could also raise cash to boost returns to investors by divesting some parts of its sprawling business.

Asset Sales

Some shareholders have told Bloomberg they expect BP to announce significant asset sales. The company has been weighing the divestment of its automotive lubricants business Castrol, which is valued at around $10 billion, Bloomberg News reported.

Several analysts have also gamed out the possibility that BP could sell its marketing and convenience division, or list its US shale oil and gas unit, BPX. The latter would mirror a model the company has already used successfully with its Norwegian joint venture Aker BP ASA, said RBC analyst Biraj Borkhataria.

Asset sales can help to control net debt, but wouldn’t help BP achieve some of its other potential goals, said Fustier.

Oil Production

BP is expected to scrap the ambition, created by Looney and continued by Auchincloss, of reducing 2030 oil and gas output by 25% from 2019 levels. Fresh production targets could instead focus on maintaining or even expanding production, according to some shareholders and analysts.

The company has recently been focused on a return to its roots in Middle East oil, specifically Iraq’s Kirkuk field. It has also emphasized the growth potential of its assets in the Gulf of Mexico, although there are questions about whether such assets could deliver a swift production turnaround.

Ultimately, “we think BP needs to show a path to sustaining its production, with potential for growth into the 2030s, in order to be comparable with its global peers,” Borkhataria said in a research note.

Renewable Power

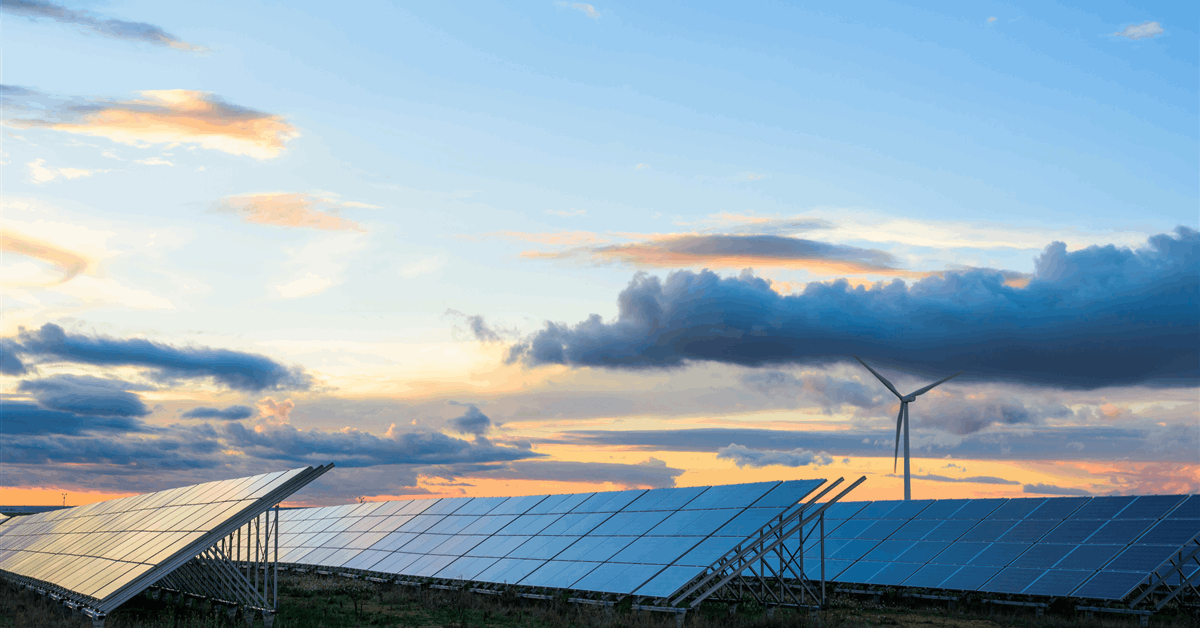

The offshore wind spin-off is seen as a model for how BP could proceed with other renewable energy projects in its portfolio, such as its solar and battery storage arm Lightsource BP. By parking these businesses in joint ventures, the company would retain some exposure to clean energy while removing some of the spending burden from its balance sheet.

Speaking at International Energy Week in London on Tuesday, Gordon Birrell, BP’s executive vice president of production and operations, said wind power, solar power, and carbon capture and storage are key to the company’s “green electrons” value chain. The US-based biogas producer Archaea also still “fits nicely” in the business, he said.

Analysts and investors will be watching closely for BP’s plans for its globe-spanning electric vehicle charging business. It currently has big growth ambitions, especially in the US with the integration of the plug-in points at TravelCenters of America, which the company purchased for $1.3 billion in 2023.

Such plans may not sit comfortably alongside a broader retreat from renewable power, something Elliott is pushing the company to do.

Bloomberg