Colossal BioSciences has raised $200 million in a new round of funding to bring back extinct species like the woolly mammoth.

Dallas- and Boston-based Colossal is making strides toward "de-extinction," or bringing back extinct species such as the woolly mammoth, the thylacine and the dodo.

I would be remiss if I did not mention that this is the plot of Michael Crichton's novel Jurassic Park, in which scientists use DNA found in mosquitoes preserved in amber to bring back Tyrannosaurus rex and other dinosaurs. I mean, what could go wrong when science fiction becomes reality? Kidding aside, this is pretty amazing work, and I'm not surprised to see game dev Richard Garriott among the investors.

The round was led by TWG Global, a diversified holding company with operating businesses and investments in technology/AI, financial services, private lending, and sports and media, which is jointly led by Mark Walter and Thomas Tull.

Since launching in September 2021, Colossal has raised $435 million in total funding, and this latest round values the company at $10.2 billion. Colossal will use the new capital to continue advancing its genetic engineering technologies while developing new software, wetware and hardware solutions with applications beyond de-extinction, including species preservation and human healthcare.

"Our recent successes in creating the technologies necessary for our end-to-end de-extinction toolkit have been met with enthusiasm by the investor community. TWG Global and our other partners have been bullish in their desire to help us scale as quickly and efficiently as possible," said Colossal CEO Ben Lamm in a statement. "This funding will grow our team, support new technology development, expand our de-extinction species list, while continuing to allow us to carry forth our mission to make extinction a thing of the past."

Colossal employs over 170 scientists and partners with labs in Boston, Dallas, and Melbourne, Australia. In addition, Colossal sponsors over 40 full-time postdoctoral scholars and research programs in 16 partner labs at some of the most prestigious universities around the globe.

Colossal’s scientific advisory board has grown to include over 95 of the top scientists working in genomics, ancient DNA, ecology, conservation, developmental biology, and paleontology. Together, these teams are tackling some of the hardest problems in biology, including mapping genotypes to traits and behaviors, understanding developmental pathways to phenotypes like craniofacial shape, tusk formation, and coat color patterning, and developing new tools for multiplex and large-insert genome engineering.

“Colossal is the leading company working at the intersection of AI, computational biology and genetic engineering for both de-extinction and species preservation,” said Mark Walter, CEO of TWG Global, in a statement. “Colossal has assembled a world-class team that has already driven, in a short period of time, significant technology innovations and impact in advancing conservation, which is a core value of TWG Global. We are thrilled to support Colossal as it accelerates and scales its mission to combat the animal extinction crisis.”

“Colossal is a revolutionary genetics company making science fiction into science fact. We are creating the technology to build de-extinction science and scale conservation biology particularly for endangered and at-risk species. I could not be more appreciative of the investor support for this important mission,” said George Church, Colossal cofounder and a professor of genetics at Harvard Medical School and professor of Health Sciences and Technology at Harvard and the Massachusetts Institute of Technology (MIT).

In October 2024, Colossal launched the Colossal Foundation, a sister 501(c)(3) organization focused on overseeing the deployment and application of Colossal-developed science and technology innovations. The foundation currently supports 48 conservation partners and their initiatives around the world.

Its partners include Re:wild, Save The Elephants, Biorescue, Birdlife International, Conservation Nation, Sezarc, Mauritian Wildlife Foundation, Aussie Ark, International Elephant Foundation, and Saving Animals From Extinction. The Colossal Foundation is currently focused on supporting two kinds of conservation partners: those developing new technologies that can be applied to conservation, and those that stand to benefit from the development and deployment of new genetic rescue and de-extinction technologies to help combat the biodiversity extinction crisis.

Tracking Progress on Colossal’s De-Extinction Projects

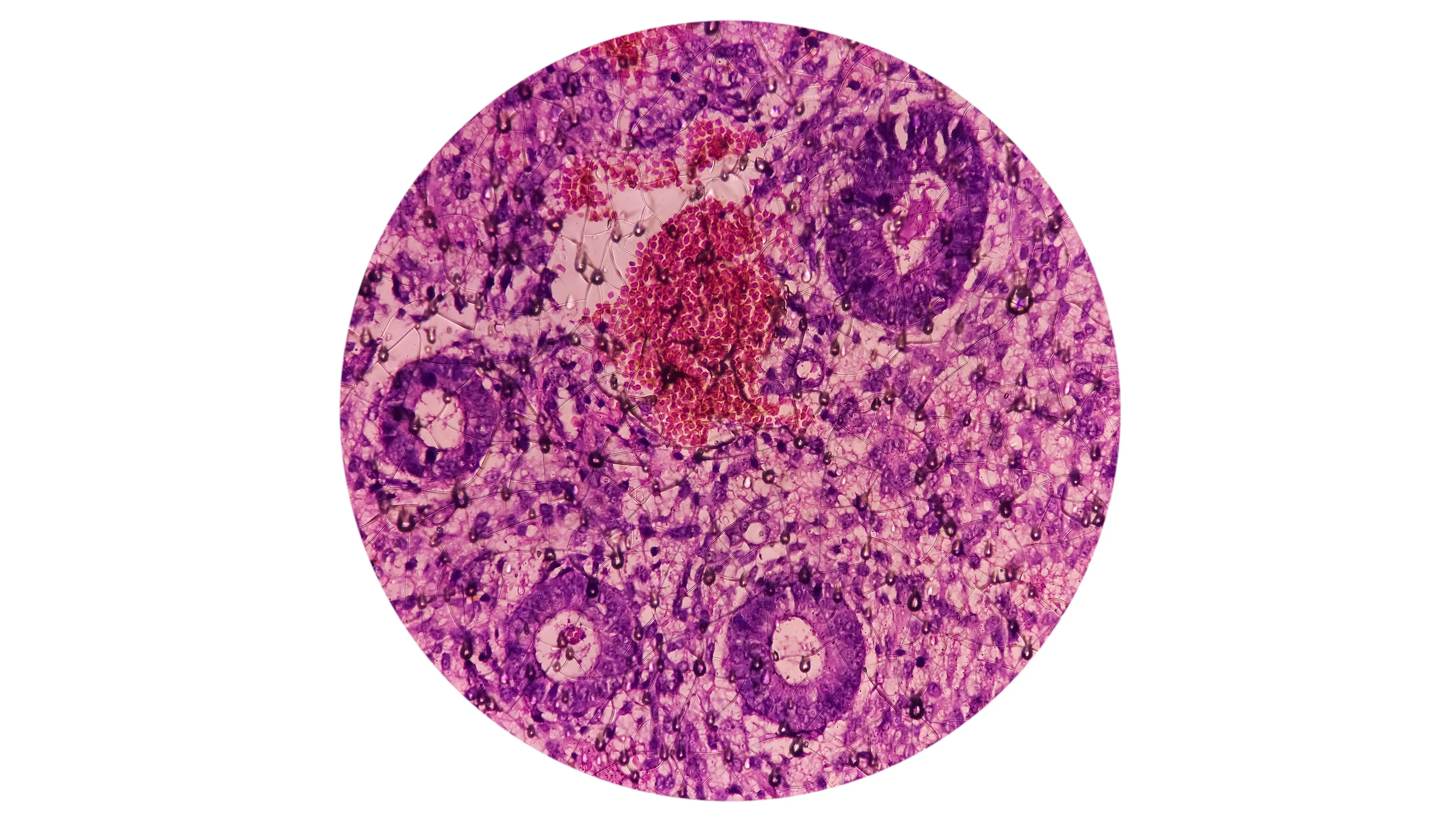

The first step in every de-extinction project is to recover and analyze preserved genetic material and use that data to identify each species' core genomic components. In addition to recruiting Beth Shapiro, a global leader in ancient DNA research, as its chief science officer, Colossal has assembled a team of Ph.D.-level ancient DNA experts among its scientific advisors, including Love Dalen, Andrew Pask, Tom Gilbert, Michael Hofreiter, Hendrik Poinar, Erez Lieberman Aiden, and Matthew Wooller.

With this team, Colossal continues to push advances in ancient DNA through support for academic labs and its own internal research. All three core species (mammoth, thylacine, and dodo) have already benefited from this pooled expertise. For example, Colossal now has the most contiguous and complete ancient genomes to date for each of these three species; these genomes are the blueprints from which the species' core traits will be engineered.

The path from ancient genome to living species requires a systems model approach to innovation across computational biology, cellular engineering, genetic engineering, embryology, and animal husbandry, with each step along the de-extinction pipeline refined and tuned in parallel. To date, Colossal's scientists have achieved significant breakthroughs at each step for each of the three flagship species.

Over the last three years, the woolly mammoth project, Colossal's first announced major project, has generated new genomic resources, made breakthroughs in cell biology and genome engineering, and explored the ecological impact of de-extinction, with implications for mammoths, elephants, and species across the vertebrate tree of life.

Woolly Mammoth De-extinction Project Progress

The mammoth team has generated chromosome-scale reference genomes for the African elephant, Asian elephant, and rock hyrax, all of which have been released on the National Center for Biotechnology Information database. It has also generated the first de novo assembled mammoth genome, that is, a genome built using only the ancient DNA reads rather than by mapping them to a reference genome. This assembly identified several genetic loci that are missing from reference-guided assemblies.

The team has also acquired and aligned more than 60 ancient genomes for the woolly mammoth and Columbian mammoth in collaboration with key scientific advisors Love Dalen and Tom van der Valk. Combined with more than 30 genomes from extant elephants, including Asian, African, and Bornean elephants, this data has dramatically increased the accuracy of mammoth-specific variant calling.

In addition, the team has derived, characterized, and biobanked more than 10 primary cell lines from tissue acquired from Asian elephants, rock hyraxes, and aardvarks for use in the company's conservation and de-extinction pipelines, and it became the first to derive pluripotent stem cells for Asian elephants. These cells are essential for in vitro embryogenesis and gametogenesis. The team has made numerous other advances as well.

"These mammoth milestones mark a pivotal step forward for de-extinction technologies," said Love Dalen, professor at the Centre for Palaeogenetics at Stockholm University and a key advisor to the mammoth project, in a statement. "The dedication of the team at Colossal to precision and scientific rigor is truly inspiring, and I have no doubt they will be successful in resurrecting core mammoth traits."

Thylacine De-extinction Project Progress

The Colossal thylacine team recently announced progress on several work streams critical to the de-extinction of the thylacine.

Since the team's inception two years ago, its Australia- and Texas-based members have generated the highest-quality ancient genome to date for the thylacine. The genome is 99.9% complete and was built using ancient long reads and ancient RNA, a world first and once thought to be an impossible goal, creating the genomic blueprint for thylacine de-extinction.

They have generated ancient genomes for 11 individual thylacines, which helps distinguish fixed variants from population-level variation in thylacines before extinction and enables more accurate prediction of de-extinction targets.

The team has also assembled telomere-to-telomere genome sequences for all dasyurid species, the evolutionary cousins of thylacines. These sequences improve Colossal's understanding of thylacine evolution, underpin its thylacine engineering efforts, and aid in the conservation of threatened marsupial species. The team made numerous other advances as well.

“These milestones put us ahead of schedule on many of the critical technologies needed to underpin de-extinction efforts. At the same time, it creates major advances in genomics, stem cell generation and engineering, and marsupial reproductive technologies that are paving the way for the de-extinction of the thylacine and is revolutionizing conservation science for marsupials. Colossal’s work demonstrates that with innovation and perseverance, we can offer groundbreaking solutions to safeguard biodiversity— and the team is already doing this in many visionary ways,” said Andrew Pask, Ph.D., in a statement.

Dodo De-extinction Project Progress

The Colossal Avian Genomics Group is currently focused on the dodo project, as well as on building a suite of avian genome-engineering tools distinct from those used in some of the company's mammalian projects. The dodo-specific team's progress includes generating complete, high-coverage genomes for the dodo, its extinct sister species the solitaire, and the critically endangered manumea (also known as the "tooth-billed pigeon" and "little dodo").

The team also generated and published a chromosome-scale assembly of the Nicobar pigeon (the dodo's closest living relative) and developed a population-scale data set of Nicobar pigeon genomes for computationally identifying dodo-specific traits.

In addition, the team developed a machine learning approach to identify genes associated with craniofacial shape in birds, providing gene-editing targets for resurrecting the dodo's unique bill morphology. It also processed more than 10,000 eggs and optimized culture conditions for growing primordial germ cells (PGCs) for four bird species. The team made a number of other strides as well.

“As we advance our understanding of avian genomics and developmental biology, we’re seeing remarkable progress in the tools and techniques needed to restore lost bird species,” said Colossal’s chief science officer Beth Shapiro, in a statement. “The unique challenges of avian reproduction require bespoke approaches to genetic engineering, for example, and our dodo team has had impressive success translating tools developed for chickens to tools that have even greater success in pigeons. While work remains, the pace of discovery within our dodo team has exceeded expectations.”

Colossal’s Support of Global Conservation and De-Extinction Efforts

By 2050, over 50% of the world's animal species are projected to be extinct. Currently, around 27,000 species go extinct each year, compared with a natural rate of 10 to 100 species per year. Over the past 50 years (1970–2020), the average size of monitored wildlife populations has shrunk by 73%.

This extinction crisis will have cascading negative impacts on human health and wellbeing, including reduced access to drinkable water, increased land desertification and greater food insecurity. While current conservation efforts are essential to protecting species, new technologies and techniques are needed that can scale with the speed at which humanity is changing the planet and destroying ecosystems.

Colossal was created to respond to this crisis. Its growing de-extinction and species preservation toolkit of software, wetware and hardware solutions provides new, scalable approaches to this systems-level, existential biodiversity threat.

“The technological advances we’re seeing in genetic engineering and synthetic biology are rapidly transforming our understanding of what’s possible in species restoration,” said Shapiro. “While the path to de-extinction is complex, each step forward brings us closer to understanding how we might responsibly reintroduce traits from lost species. The real promise lies not just in the technology, but also in how we might apply these tools to protect and restore endangered species and ecosystems.”

The breakthroughs in Colossal’s core projects create a ripple effect across species conservation. Each Colossal core species is tied to conservation efforts that support other endangered and at-risk species in the respective animal’s family group.

The company’s work toward mammoth restoration has simultaneously advanced reproductive and genetic technologies that can help preserve endangered elephant species, while the dodo program is pioneering avian genetic tools that will benefit threatened bird species worldwide. Through the Colossal Foundation and its partnerships with leading conservation organizations, Colossal is transforming these scientific advances into practical solutions that can help protect and restore vulnerable species across multiple taxonomic families.

Key initiatives include $7.5 million in new donations from Colossal to fund ancient DNA research across a diverse selection of species; the development of a gene-engineering solution that gives Australia's endangered northern quoll resistance to cane toad toxin; and a partnership with the international conservation organization Re:wild on a suite of initiatives to preserve the world's most threatened species.

As part of that partnership, Colossal and Re:wild have a joint 10-year conservation strategy to save some of the world's most threatened species by combining Colossal's genetic technologies with Re:wild's experience and partnerships in species conservation worldwide. Several other efforts are under way as well.

“Colossal is advancing the development of genetic technologies for conservation at a rapid pace. Their cutting-edge technologies are changing what is possible in species conservation and are permitting us to envision a world where many more Critically Endangered species not only survive but thrive,” said Barney Long, PhD, senior director of conservation strategies for Re:wild, in a statement.

Colossal's other strategic investors include funds such as USIT, Animal Capital, Breyer Capital, At One Ventures, In-Q-Tel, BOLD Capital, Peak 6, and Draper Associates, as well as private investors including Robert Nelsen, Peter Jackson, Fran Walsh, Ric Edelman, Brandon Fugal, Paul Tudor Jones, Richard Garriott, Giammaria Giuliani, Sven-Olof Lindblad, Victor Vescovo, and Jeff Wilke.
