Microsoft’s newest AI data center in Wisconsin, known as “Fairwater,” is being framed as far more than a massive, energy-intensive compute hub. The company describes it as a community-scale investment — one that pairs frontier-model training capacity with regional development. Microsoft has prepaid local grid upgrades, partnered with the Root-Pike Watershed Initiative Network to restore nearby wetlands and prairie sites, and launched Wisconsin’s first Datacenter Academy in collaboration with Gateway Technical College, aiming to train more than 1,000 students over the next five years.

The company is also highlighting its broader statewide impact: 114,000 residents trained in AI-related skills through Microsoft partners, alongside the opening of a new AI Co-Innovation Lab at the University of Wisconsin–Milwaukee, focused on applying AI in advanced manufacturing.

It’s Just One Big, Happy AI Supercomputer…

The Fairwater facility is not a conventional, multi-tenant cloud region. It’s engineered to operate as a single, unified AI supercomputer, built around a flat networking fabric that interconnects hundreds of thousands of accelerators. Microsoft says the campus, purpose-built for frontier-model training, will deliver up to 10× the performance of today’s fastest supercomputer.

Physically, the site encompasses three buildings across 315 acres, totaling 1.2 million square feet of floor area, all supported by 120 miles of medium-voltage underground cable, 72.6 miles of mechanical piping, and 46.6 miles of deep foundation piles.

At the rack level, each NVL72 system integrates 72 NVIDIA Blackwell GPUs (packaged as 36 GB200 superchips, each pairing two GPUs with a Grace CPU), fused together via NVLink/NVSwitch into a single high-bandwidth domain that provides 1.8 TB/s of GPU-to-GPU bandwidth per GPU and 14 TB of pooled memory per rack. Physically, the rack is built from separate compute trays, but it can be orchestrated as a single, giant accelerator.
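The per-rack figures above can be sanity-checked with a few lines of arithmetic. The sketch below is a back-of-the-envelope illustration that assumes 1.8 TB/s is each GPU's NVLink bandwidth and that the 14 TB pool is split evenly across the 72 GPUs; `nvl72_summary` is a hypothetical helper, not an NVIDIA or Azure API.

```python
# Back-of-the-envelope NVL72 rack arithmetic (illustrative only).
# Assumptions: 1.8 TB/s is per-GPU NVLink bandwidth, and the 14 TB
# memory pool is divided evenly across the rack's GPUs.

GPUS_PER_RACK = 72
NVLINK_TBPS_PER_GPU = 1.8   # TB/s per GPU (figure quoted in the text)
POOLED_MEMORY_TB = 14.0     # TB of pooled memory per rack (quoted in the text)


def nvl72_summary(gpus: int = GPUS_PER_RACK,
                  tbps_per_gpu: float = NVLINK_TBPS_PER_GPU,
                  pooled_tb: float = POOLED_MEMORY_TB) -> dict:
    """Return aggregate NVLink bandwidth and an even per-GPU memory share."""
    return {
        "aggregate_nvlink_tbps": gpus * tbps_per_gpu,
        "memory_share_gb_per_gpu": pooled_tb * 1000 / gpus,  # decimal GB
    }


if __name__ == "__main__":
    summary = nvl72_summary()
    print(f"Aggregate NVLink bandwidth: {summary['aggregate_nvlink_tbps']:.1f} TB/s")
    print(f"Even memory share per GPU: {summary['memory_share_gb_per_gpu']:.0f} GB")
```

Run as written, it reports about 129.6 TB/s of aggregate NVLink bandwidth, in line with the roughly 130 TB/s NVIDIA cites for an NVL72 domain, and an even share of about 194 GB of memory per GPU.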

Microsoft reports that one NVL72 rack can process up to 865,000 tokens per second. Future Fairwater-class deployments (including those under construction in the UK and Norway) are expected to adopt the GB300 architecture, extending pooled memory and overall system coherence even further.
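To put the 865,000 tokens-per-second figure in perspective, the sketch below divides it across the rack's 72 GPUs and extrapolates to a full day. The text does not specify the model, precision, or workload behind Microsoft's number, so treat this as an illustrative upper bound that assumes the peak rate is sustained and evenly spread; the helper names are hypothetical.

```python
# Throughput arithmetic for the quoted 865,000 tokens/s per NVL72 rack.
# Assumption (illustrative): the load is spread evenly across 72 GPUs and
# the rack sustains its peak rate around the clock.

RACK_TOKENS_PER_SEC = 865_000
GPUS_PER_RACK = 72
SECONDS_PER_DAY = 24 * 60 * 60


def tokens_per_gpu_per_sec(rack_rate: int = RACK_TOKENS_PER_SEC,
                           gpus: int = GPUS_PER_RACK) -> float:
    """Average tokens per second handled by each GPU in the rack."""
    return rack_rate / gpus


def tokens_per_day(rack_rate: int = RACK_TOKENS_PER_SEC) -> int:
    """Upper-bound tokens per day if the peak rate were sustained continuously."""
    return rack_rate * SECONDS_PER_DAY


if __name__ == "__main__":
    print(f"Per GPU: {tokens_per_gpu_per_sec():,.0f} tokens/s")
    print(f"Per rack per day: {tokens_per_day():,} tokens")
```

That works out to roughly 12,000 tokens per second per GPU and, at most, about 74.7 billion tokens per rack per day.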

To scale beyond a single rack, Azure links racks into pods using InfiniBand and Ethernet fabrics running at 800 Gb/s in a non-blocking fat-tree topology, so every GPU can communicate with every other at full line rate without congestion. Multiple pods are then interconnected across each building to minimize hop counts, and even the physical layout is optimized: a two-story building design places racks in three dimensions, shortening cable runs to further reduce latency.
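One way to see why a non-blocking fat tree scales is the textbook three-tier k-ary fat tree built from identical k-port switches: it connects k³/4 endpoints, and full bisection bandwidth equals half of those endpoints transmitting at line rate. Microsoft has not published its switch radix or tier count here, so the radix values below are assumptions chosen for illustration, and the helpers are hypothetical.

```python
# Capacity of a classic three-tier k-ary fat tree built from k-port switches.
# Assumption (illustrative): every link runs at the same line rate, and the
# radix values are examples, not Azure's actual switch configuration.

def fat_tree_hosts(k: int) -> int:
    """Endpoints supported by a three-tier fat tree of k-port switches (k even)."""
    if k <= 0 or k % 2:
        raise ValueError("k must be a positive even number")
    return k ** 3 // 4


def bisection_bandwidth_tbps(k: int, link_gbps: float = 800.0) -> float:
    """Full bisection bandwidth in Tb/s: half the endpoints sending at line rate."""
    return fat_tree_hosts(k) / 2 * link_gbps / 1000


def is_non_blocking(downlinks: int, uplinks: int) -> bool:
    """A switch is non-blocking (1:1) when uplink capacity covers downlink
    capacity; equal port speeds are assumed."""
    return uplinks >= downlinks


if __name__ == "__main__":
    for k in (32, 64):
        print(f"k={k}: {fat_tree_hosts(k):,} endpoints, "
              f"{bisection_bandwidth_tbps(k):,.0f} Tb/s bisection at 800 Gb/s")
    # In a k-ary fat tree, each edge switch splits its ports evenly: k/2 down, k/2 up.
    print(is_non_blocking(downlinks=32, uplinks=32))
```

With 64-port switches, for example, a three-tier fat tree reaches 65,536 endpoints and about 26,214 Tb/s of bisection bandwidth at 800 Gb/s per link; the non-blocking property comes from giving every switch as much uplink capacity as downlink capacity.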

The design also scales globally. Through Microsoft’s AI Wide Area Network (AI WAN), multiple regional campuses operate in concert as a distributed supercomputer, pooling compute, storage, and scheduling resources across geographies for both resiliency and elastic capacity.
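Physics sets a hard floor on what a wide-area supercomputer can do. The sketch below estimates fiber propagation delay, assuming a typical refractive index of about 1.468 (light travels at roughly two-thirds of its vacuum speed in glass). The 100 m and 1,000 km distances are hypothetical, not actual Fairwater or AI WAN spans, and switching and queuing delays are ignored.

```python
# Propagation delay over optical fiber (illustrative physics, not Azure data).
# Assumption: a typical single-mode fiber refractive index of about 1.468,
# so light travels at roughly two-thirds of its vacuum speed.

SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.468


def one_way_delay_ms(distance_km: float,
                     n: float = FIBER_REFRACTIVE_INDEX) -> float:
    """One-way propagation delay in milliseconds, ignoring switching and queuing."""
    return distance_km * n / SPEED_OF_LIGHT_KM_PER_S * 1000


if __name__ == "__main__":
    # Hypothetical distances: a 100 m in-building run vs. a 1,000 km inter-campus span.
    for label, km in (("in-building (0.1 km)", 0.1), ("inter-campus (1,000 km)", 1000)):
        one_way = one_way_delay_ms(km)
        print(f"{label}: {one_way:.4f} ms one way, {2 * one_way:.4f} ms round trip")
```

The inter-campus span comes out near 4.9 ms one way, about ten thousand times the in-building figure. That gap suggests why traffic crossing the AI WAN suits coarser-grained pooling and scheduling, while the most latency-sensitive GPU-to-GPU exchanges stay inside a rack or building.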

What’s the big-picture significance here? Fairwater represents a system-of-systems approach, spanning silicon, server, rack, pod, building, campus, and ultimately the WAN, with every layer optimized for frontier-scale AI training throughput rather than general-purpose cloud elasticity. It signals Microsoft’s intent to build a worldwide, interlinked AI supercomputing fabric, a development likely to shape ongoing debates about the architecture and economics of AI.

Don’t Forget About the Storage Requirements

Behind Fairwater’s GPU-driven power lies a re-architected Azure storage stack, designed to aggregate both capacity and bandwidth across thousands of nodes and hundreds of thousands of drives, eliminating the need for manual sharding. Microsoft reports that a single Azure Blob Storage account can sustain more than two million read/write transactions per second, while BlobFuse2, which mounts Blob Storage containers as a local file system, delivers the high-bandwidth, low-latency access GPU nodes need for node-local training.
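Because BlobFuse2 is a FUSE driver that exposes a Blob Storage container as a mounted directory, training code can read objects with ordinary file I/O. The sketch below assumes a hypothetical mount point (`/mnt/blobfuse/training-data`) and a hypothetical `*.bin` shard naming scheme, and uses a thread pool to issue reads in parallel. It illustrates the access pattern only, not Microsoft's data pipeline, and it runs equally well against any local directory.

```python
# Parallel shard reads from a file-system path, e.g. a BlobFuse2 mount.
# Assumptions (hypothetical): shards are files matching "*.bin" under a
# mount point such as /mnt/blobfuse/training-data; any directory works.

from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def list_shards(root: str, pattern: str = "*.bin") -> list[Path]:
    """Return shard files under root in a stable, sorted order."""
    return sorted(Path(root).glob(pattern))


def read_shard(path: Path) -> bytes:
    """Read one shard fully; on a BlobFuse2 mount the driver serves the read
    from Blob Storage or its local cache."""
    return path.read_bytes()


def read_all_shards(root: str, max_workers: int = 16) -> dict[str, int]:
    """Read every shard concurrently and return a {name: byte_count} map."""
    shards = list_shards(root)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so names and results line up.
        sizes = pool.map(lambda p: len(read_shard(p)), shards)
        return {p.name: n for p, n in zip(shards, sizes)}


if __name__ == "__main__":
    import sys
    root = sys.argv[1] if len(sys.argv) > 1 else "/mnt/blobfuse/training-data"
    if Path(root).is_dir():
        print(read_all_shards(root))
    else:
        print(f"{root} not found; pass a directory containing *.bin shards")
```

A thread pool fits here because reads through a mount are I/O-bound, so many requests can be in flight at once; BlobFuse2's own caching and prefetch settings, not shown, also shape the throughput actually achieved.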

The physical footprint of this storage layer is equally revealing. Microsoft notes that the dedicated storage and compute facility at Fairwater stretches the length of five football fields, underscoring that AI infrastructure at scale is as much about data movement and persistence as it is about raw compute. In other words, the supercomputer’s backbone isn’t just made of GPUs and memory. It’s built on the ability to feed them efficiently.

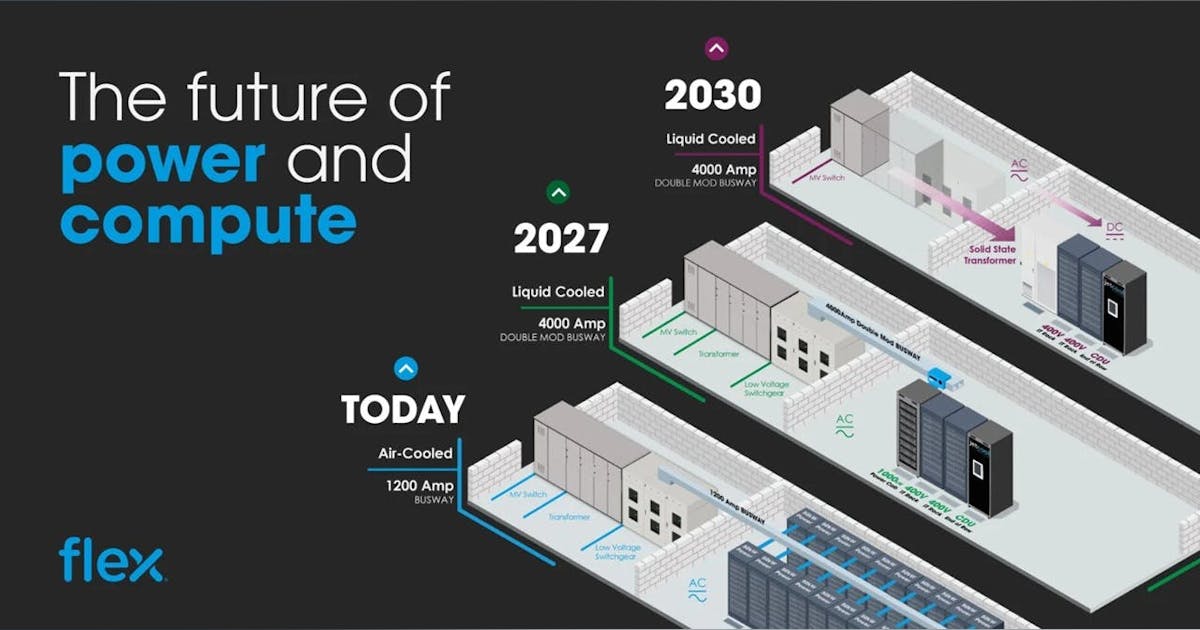

Keep Chill and Energized

As you might’ve heard, the days of relying solely on air cooling are effectively over, at least for AI-focused data centers. Traditional air systems simply can’t manage GB200-era rack densities. To meet the massive thermal loads of frontier AI hardware, Microsoft’s Fairwater campus employs facility-scale, closed-loop liquid cooling. The system is filled once during construction and recirculates continuously, using 172 twenty-foot fans to chill large heat-exchanger fins along the building’s exterior.
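The advantage of liquid cooling follows from the heat-balance relation Q = ṁ·c_p·ΔT: the coolant's mass flow ṁ times its specific heat c_p times its temperature rise ΔT must match the heat load Q. The sketch below solves for water flow. The 120 kW rack load and the temperature rises are assumptions for illustration, since the text does not state Fairwater's per-rack power or loop temperatures, and real loops may use treated water or glycol mixes with somewhat different properties.

```python
# Coolant flow needed to carry away a given heat load: Q = m_dot * c_p * dT.
# Assumptions (illustrative): plain water as coolant, a hypothetical 120 kW
# rack, and temperature rises of 5 to 15 K across the rack.

WATER_SPECIFIC_HEAT_J_PER_KG_K = 4184.0
WATER_DENSITY_KG_PER_L = 1.0  # close enough at typical loop temperatures


def required_flow_lpm(heat_load_kw: float, delta_t_k: float) -> float:
    """Litres per minute of water needed to absorb heat_load_kw at a rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_load_kw * 1000 / (WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60


if __name__ == "__main__":
    for dt in (5, 10, 15):
        print(f"120 kW rack, dT={dt} K: {required_flow_lpm(120, dt):.0f} L/min")
```

At a 10 K rise, a 120 kW rack needs about 172 L/min of water. Per unit volume, water stores roughly 3,500 times as much heat per kelvin as air (about 4,180 kJ/(m³·K) versus 1.2 kJ/(m³·K)), which is the core reason air alone struggles at these densities.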

Microsoft reports that over 90% of the site’s capacity operates on this zero-operational-water loop, while the remaining ~10%—housing legacy or general-purpose servers—uses outside air cooling and switches to water-based heat exchange only during extreme heat events. Because the loop is sealed and non-evaporative, Fairwater’s total water use is both limited and precisely measurable, reinforcing Microsoft’s goal of predictable, sustainable operations.

Power strategy, meanwhile, remains a parallel priority. Although the company’s announcement did not detail electrical sourcing, Microsoft has committed to pre-paying grid upgrades to avoid costs being passed on to local ratepayers. The company will also match any fossil-based consumption 1:1 with new carbon-free generation, including a 250-MW solar power purchase agreement in Portage County.
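Matching consumption 1:1 is an energy calculation, while a figure like 250 MW describes capacity. The sketch below converts nameplate capacity to expected annual generation. The 20% capacity factor and the fossil-consumption figure are assumptions for illustration, not numbers from Microsoft or the Portage County project, and the helpers are hypothetical.

```python
# Converting nameplate capacity (MW) to annual energy (MWh) for 1:1 matching.
# Assumption (illustrative): a 20% annual capacity factor for utility-scale
# solar; the real figure for the Portage County project is not stated.

HOURS_PER_YEAR = 8760


def annual_energy_mwh(capacity_mw: float, capacity_factor: float) -> float:
    """Expected annual generation for a plant of capacity_mw at capacity_factor."""
    if not 0 <= capacity_factor <= 1:
        raise ValueError("capacity factor must be between 0 and 1")
    return capacity_mw * HOURS_PER_YEAR * capacity_factor


def is_matched(fossil_mwh: float, carbon_free_mwh: float) -> bool:
    """True when new carbon-free generation covers fossil consumption at least 1:1."""
    return carbon_free_mwh >= fossil_mwh


if __name__ == "__main__":
    solar = annual_energy_mwh(250, 0.20)
    print(f"250 MW solar at 20% CF: {solar:,.0f} MWh/year")
    # Hypothetical fossil consumption figure, for illustration only.
    print(is_matched(fossil_mwh=400_000, carbon_free_mwh=solar))
```

At that assumed capacity factor, the 250 MW array would produce about 438,000 MWh a year, far less than 250 MW × 8,760 h, which is why nameplate megawatts alone do not indicate how much consumption a PPA can offset.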

Still, near-term reliability realities persist. Leadership has acknowledged that new natural-gas generation is likely to be located near the campus to meet startup demand and grid stability. As one executive told Reuters, “This is LNG territory,” underscoring the pragmatic challenge of powering multi-gigawatt campuses in constrained markets, where bringing capacity online often requires temporary fossil generation alongside long-term renewable investments.

One of Many

Fairwater is just the beginning. Microsoft is already replicating identical, AI-optimized data centers across the United States and abroad, with two major European campuses now advancing under the same architectural model:

- United Kingdom (Loughton): Part of a $30 billion UK investment program extending through 2028, which includes $15 billion in capital expenditures to expand cloud and AI infrastructure. Developed in partnership with Nscale, the effort will deliver the country’s largest supercomputer, incorporating more than 23,000 NVIDIA GPUs.

- Norway (Narvik): In partnership with Nscale and Aker, Microsoft is investing $6.2 billion to build a hydropower-backed AI campus designed around abundant, low-cost renewable energy and the cooling advantages of Norway’s northern climate. Initial services are expected to come online in 2026.

In Wisconsin, Microsoft has expanded its commitment to more than $7 billion, adding a second data center of similar scale. The first site will employ about 500 full-time workers, growing to roughly 800 after the second is operational, with as many as 3,000 construction jobs created during the build-out phase.

Globally, Microsoft has stated plans to invest approximately $80 billion in fiscal year 2025 alone to expand its portfolio of AI-enabled data centers. To decarbonize the electricity supporting these facilities, the company has signed a framework agreement with Brookfield to deliver more than 10.5 gigawatts of new renewable generation over the next five years, which was, at the time, the largest corporate clean-energy deal in history.

Microsoft has also entered into a first-of-its-kind 50-MW fusion power purchase agreement with Helion, targeting 2028 operations, alongside regional contracts such as the 250-MW solar PPA in Portage County, Wisconsin. Together, these initiatives illustrate how Microsoft is coupling massive infrastructure expansion with an aggressive clean-energy transition—an approach increasingly mirrored across the hyperscale landscape.

Is This a Picture of the Future or Just an Interim Step While AI Finds Its Place?

Microsoft isn’t merely building larger GPU rooms. It’s industrializing a new architectural pattern for AI factories. The model centers on NVL72 racks as compute building blocks, non-blocking 800 Gb/s fabrics, exabyte-scale storage, facility-scale liquid cooling, and a global AI Wide Area Network that links these campuses into a distributed supercomputer. Wisconsin serves as the flagship; the UK and Norway sites are the next to be stamped from the same template in what is rapidly becoming a worldwide deployment strategy. The approach fuses ruthless systems engineering with a pragmatic energy posture, and the financial magnitude is staggering.

To put that investment into context: over the next five years, Microsoft, AWS, Google, Meta, and Oracle together are projected to spend roughly $2 trillion on data center expansion. By comparison, the entire U.S. Interstate Highway System, built over 35 years (from 1956 to its “completion” in 1991), cost just over $300 billion in 2025 dollars. That means today’s hyperscale AI buildout represents nearly seven times the inflation-adjusted cost of the national highway network.
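The comparison above reduces to simple ratios. The sketch below reproduces it from the article's own figures (both are projections or estimates, not new data) and adds an annualized view; the function names are illustrative.

```python
# Reproducing the spending comparison, using only the figures quoted in the text.
# Both inputs are projections/estimates from the article, not new data.

AI_BUILDOUT_USD_B = 2_000       # ~$2 trillion over five years (projection)
AI_BUILDOUT_YEARS = 5
INTERSTATE_USD_B = 300          # ~$300 billion in 2025 dollars
INTERSTATE_YEARS = 1991 - 1956  # 35 years of construction


def total_ratio() -> float:
    """How many Interstate systems the AI buildout costs, in total."""
    return AI_BUILDOUT_USD_B / INTERSTATE_USD_B


def annual_rate_ratio() -> float:
    """Ratio of average annual spending: AI buildout vs. Interstate construction."""
    ai_per_year = AI_BUILDOUT_USD_B / AI_BUILDOUT_YEARS
    interstate_per_year = INTERSTATE_USD_B / INTERSTATE_YEARS
    return ai_per_year / interstate_per_year


if __name__ == "__main__":
    print(f"Total: {total_ratio():.2f}x")  # about 6.67x, "nearly seven times"
    print(f"Annual rate: {annual_rate_ratio():.1f}x")
```

Per year, the gap widens: about $400 billion annually for the AI buildout versus under $9 billion annually for the Interstate build-out, or roughly 47 times the average pace.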

While not an apples-to-apples comparison, the analogy underscores the scope of transformation underway. The AI infrastructure boom is poised to reshape economies, workforces, and regional development on a scale comparable to the infrastructure projects that defined the industrial age: only this time, the network being built is digital.