Your Gateway to Power, Energy, Datacenters, Bitcoin and AI

Dive into the latest industry updates, our exclusive Paperboy Newsletter, and curated insights designed to keep you informed. Stay ahead with minimal time spent.

Discover What Matters Most to You

AI:

Bitcoin:

Datacenter:

Energy:

Featured Articles

Recent books from the MIT community

Launching from the Lab: Building a Deep-Tech Startup
By Lita Nelsen ’64, SM ’66, SM ’79, former director of the MIT Technology Licensing Office, and Maureen Stancik Boyce, SM ’91, SM ’93, PhD ’95, with Sophie Hagerty
MIT PRESS, 2026, $35

Empty Vessel: The Story of the Global Economy in One Barge
By Ian Kumekawa, lecturer in history
PENGUIN RANDOM HOUSE, 2025, $29

Taxation and Resentment: Race, Party, and Class in American Tax Attitudes
By Andrea Louise Campbell, professor of political science
PRINCETON UNIVERSITY PRESS, 2025, $29.95

Dr. Adventure: Danger and Discovery from Pole to Pole
By Warren M. Zapol ’62
THE ZAPOL FAMILY, 2025, $37.99

Long-Term Care Around the World
Edited by Jonathan Gruber ’87, professor of economics and department head, and Kathleen McGarry
UNIV. OF CHICAGO PRESS, 2025, $35

Plain Talk About Drinking Water (6th edition)
By James Symons, SM ’55, ScD ’57, former assistant professor of sanitary engineering, and Nancy E. McTigue
AMERICAN WATER WORKS ASSOCIATION, 2025, $30

The Price of Our Values: The Economic Limits of Moral Life
By Augustin Landier, PhD ’02, and David Thesmar, professor of financial economics and finance
UNIV. OF CHICAGO PRESS, 2025, $25

Send book news to [email protected] or 196 Broadway, 3rd Floor, Cambridge, MA 02139

AI-designed proteins may help spot cancer

Researchers at MIT and Microsoft have used artificial intelligence to create molecular sensors that could detect early signs of cancer via a urine test. The researchers developed an AI model to design short proteins that are targeted by enzymes called proteases, which are overactive in cancer cells. Nanoparticles coated with these proteins, called peptides, can give off a signal if they encounter cancer-linked proteases once introduced into circulation: The proteases will snip off the peptides, which then form reporter molecules that are excreted in urine. Sangeeta Bhatia, SM ’93, PhD ’97, a senior author of a paper on the work with her former student Ava Amini ’16, a principal researcher at Microsoft Research, led the MIT team that came up with the idea of such particles over a decade ago. But earlier efforts used trial and error to identify peptides that would be cleaved by specific proteases, and the results could be ambiguous. With AI, peptides can be designed to meet specific criteria. “If we know that a particular protease is really key to a certain cancer, and we can optimize the sensor to be highly sensitive and specific to that protease, then that gives us a great diagnostic signal,” Amini says. Bhatia’s lab is now working with ARPA-H on an at-home kit that could potentially detect 30 types of early cancer. Peptides designed using the model could also be incorporated into cancer therapeutics.

Reformulated antibodies could be injected for easier treatment

Antibody treatments for cancer and other diseases are typically delivered intravenously, requiring patients to go to a hospital and potentially spend hours receiving infusions. Now Professor Patrick Doyle and his colleagues have taken a major step toward reformulating antibodies so that they can be injected with a standard syringe, making treatment easier and more accessible. The obstacle to injecting these drugs is that they are formulated at low concentrations, so very large volumes are needed per dose. Decreasing the volume to the capacity of a standard syringe would mean increasing the concentration so much that the solution would be too thick to be injected. In 2023, Doyle’s lab developed a way to generate highly concentrated antibody formulations by encapsulating them into hydrogel particles. However, that requires centrifugation, a step that would be difficult to scale up for manufacturing. In their new study, the researchers took a different approach that instead uses a microfluidic setup. Droplets containing antibodies dissolved in a watery prepolymer solution are suspended in an organic solvent and can then be dehydrated, leaving behind highly concentrated solid antibodies within a hydrogel matrix. Finally, the solvent is removed and replaced with an aqueous solution. Using semi-solid particles 100 microns in diameter, the team showed that the force needed to push the plunger of a syringe containing the solution was less than 20 newtons. “That is less than half of the maximum acceptable force that people usually try to aim for,” says Talia Zheng, an MIT graduate student who is the lead author of the new study. More than 700 milligrams of the antibody, enough for most therapeutic applications, could be administered at once with a two-milliliter syringe. The formulations remained stable under refrigeration for at least four months. The researchers now plan to test the particles in animals and work on scaling up the manufacturing process.

A retinal reboot for amblyopia

In the vision disorder amblyopia (or “lazy eye”), impaired vision in one eye early in life causes neural connections in the brain’s visual system to shift toward supporting the other eye, leaving the amblyopic eye less capable even if the original impairment is corrected. Current interventions don’t work after infancy and early childhood, when the brain connections are fully formed. Now a study in mice by MIT neuroscientist Mark Bear and colleagues shows that if the retina of the amblyopic eye is anesthetized just for a couple of days, those crucial connections can be restored, even in adulthood. Bear’s team, which has been studying amblyopia for decades, had previously shown that this effect could be achieved by anesthetizing both eyes or the non-amblyopic eye, analogous to having a child wear a patch over the healthy eye to strengthen the “lazy” one. The new study delved into the mechanism behind this effect by pursuing an earlier observation: that blocking the retina from sending signals to neurons in the part of the brain that relays information from the eyes to the visual cortex caused those neurons to fire “bursts” of electrical pulses. Similar patterns of activity occur in the visual system before birth and guide early synaptic development.

The experiments confirmed that the bursting is necessary for the treatment to work—and, crucially, that it occurs when either retina is targeted. After some mice modeling amblyopia had the affected eye anesthetized for two days, the researchers measured activity in the visual cortex to calculate a ratio of inputs from the two eyes. This ratio was much more even in the treated mice, indicating that the amblyopic eye was communicating with the brain about as well as the other one. A key next step will be to show that this approach works in other animals and, ultimately, people. “If it does, it’s a pretty substantial step forward, because it would be reassuring to know that vision in the good eye would not have to be interrupted by treatment,” says Bear. “The amblyopic eye, which is not doing much, could be inactivated and ‘brought back to life’ instead.”

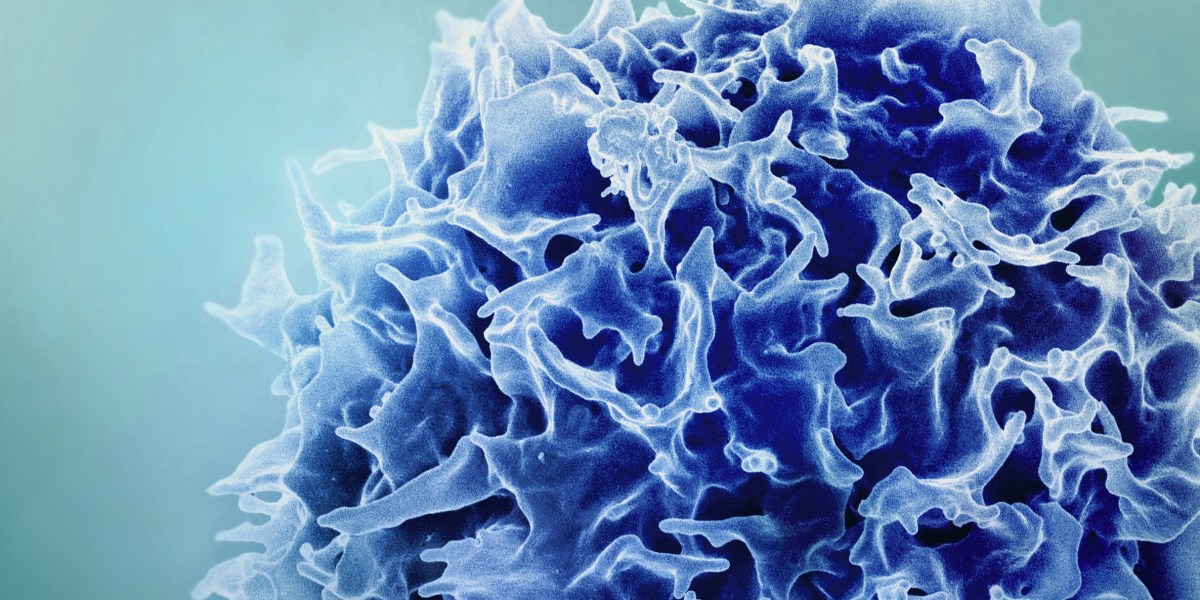

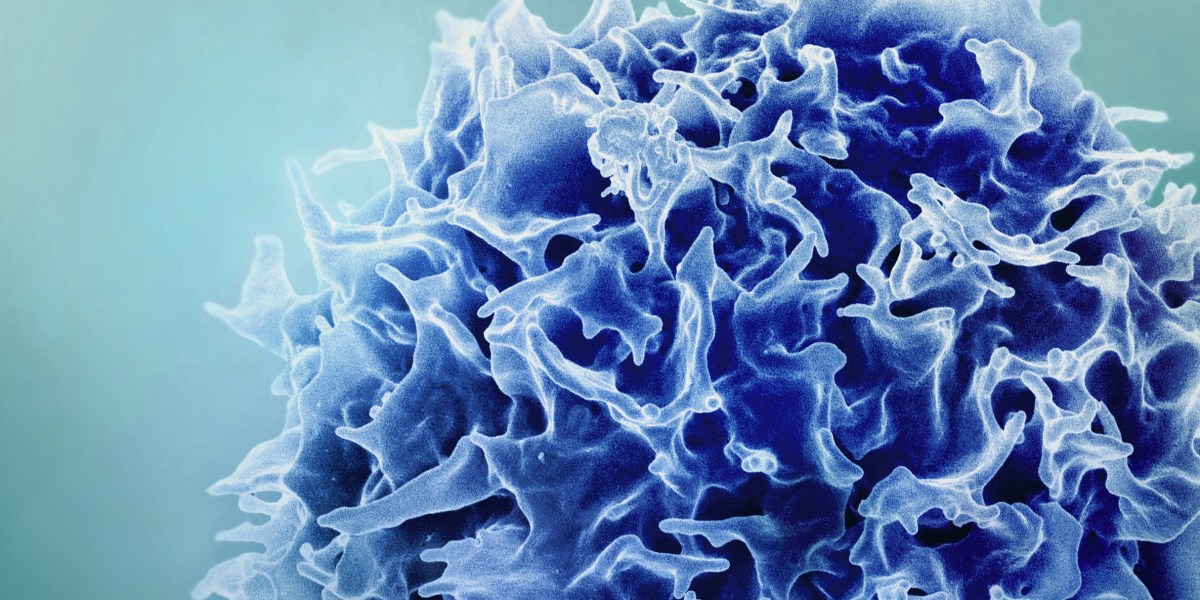

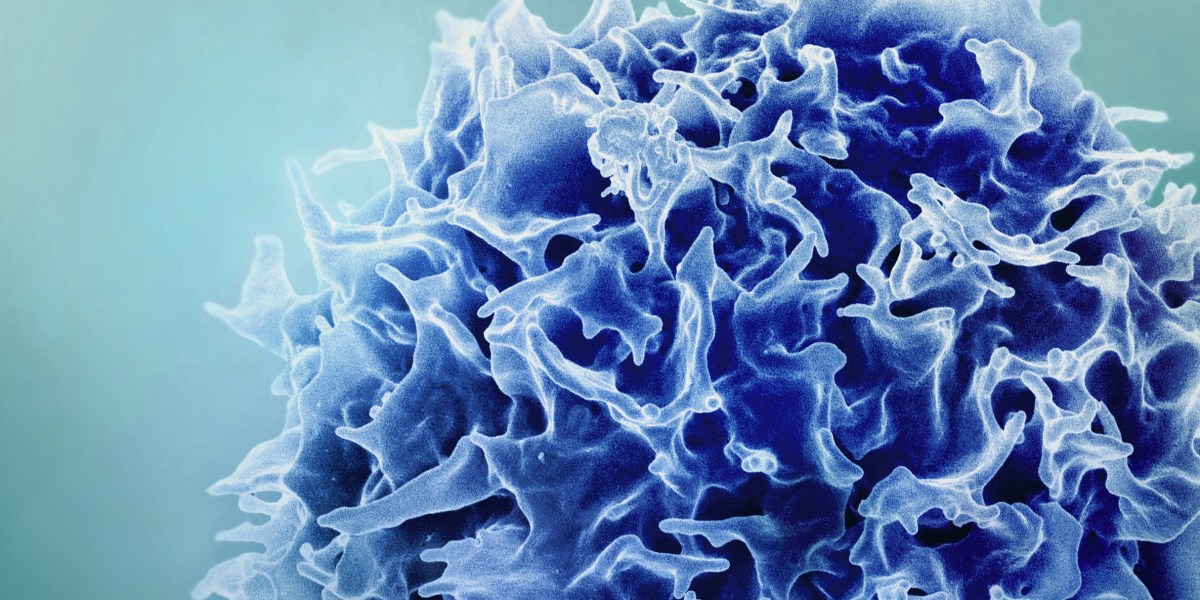

A new way to rejuvenate the immune system

As people age, their immune function weakens. Owing to shrinkage of the thymus, where T cells normally mature and diversify, populations of these immune cells become smaller and can’t react to pathogens as quickly. But researchers at MIT and the Broad Institute have now found a way to overcome that decline by temporarily programming cells in the liver to improve T-cell function. To create a “factory” for the T-cell-stimulating signals that are normally produced by the thymus, the researchers identified three key factors that usually promote T cells’ maturation and encoded them into mRNA sequences that could be delivered by lipid nanoparticles. When injected into the bloodstream, these particles accumulate in the liver and the mRNA is taken up by the organ’s main cells, hepatocytes, which begin to manufacture the proteins encoded by the mRNA. Aged mice that received the treatment showed much larger and more diverse T-cell populations in response to vaccination, and they also responded better to cancer immunotherapy treatments. If this type of treatment is developed for human use, says Professor Feng Zhang, the senior author of a paper on the work, “hopefully we can help people stay free of disease for a longer span of their life.”

Just pull a string to turn these tile patterns into useful 3D structures

MIT researchers have developed a new method for designing 3D structures that can spring up from a flat sheet of interconnected tiles with a single pull of a string. The technique could be used to make foldable bike helmets and medical devices, emergency shelters and field hospitals for disaster zones, and much more. Mina Konaković Luković, head of the Algorithmic Design Group at the Computer Science and Artificial Intelligence Laboratory (CSAIL), and her colleagues were inspired by kirigami, the ancient Japanese art of paper cutting, to create an algorithm that converts a user-specified 3D structure into a flat shape made up of tiles connected by rotating hinges at the corners.

The algorithm uses a two-step method to find the optimal path through the tile pattern for a string that can be tightened to actuate the structure. It computes the minimum number of points that the string must lift to create the desired shape and finds the shortest path that connects those lift points, while including all areas of the object’s boundary that must be connected to guide the structure into its 3D configuration. It does these calculations in such a way that the string path minimizes friction, enabling the structure to be smoothly actuated with just one pull. The actuation method is easily reversible to return the structure to its flat configuration. The patterns could be produced using 3D printing, CNC milling, molding, or other techniques.
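To make the second step more concrete, here is a minimal sketch of the "shortest path that connects the lift points" idea. It is not the researchers' algorithm. It assumes the lift points are already known, treats them as plain 2D coordinates, measures string length as straight-line distance, and ignores the tile geometry, boundary constraints, and friction that the real method accounts for. The function name `shortest_string_path` and the sample points are hypothetical.

```python
from itertools import permutations
from math import dist


def shortest_string_path(lift_points):
    """Return (length, order) of the shortest open path visiting every point once.

    Brute force over all orderings, so only practical for a handful of points.
    """
    if len(lift_points) < 2:
        return 0.0, list(lift_points)
    best_len, best_order = float("inf"), None
    for order in permutations(lift_points):
        # A path and its reverse have the same length, so skip one of the two.
        if order[0] > order[-1]:
            continue
        length = sum(dist(a, b) for a, b in zip(order, order[1:]))
        if length < best_len:
            best_len, best_order = length, list(order)
    return best_len, best_order


# Hypothetical lift points on a flat tile sheet (arbitrary units).
points = [(0, 0), (3, 0), (3, 4), (0, 4)]
length, order = shortest_string_path(points)
print(length, order)
```

Exhaustive search is used here only because it is easy to verify by hand. A practical tool would need a scalable path-finding method and a cost model that reflects how the string actually moves through the tiles.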

This method could enable complex 3D structures to be stored and transported more efficiently and with less cost. Applications could include transportable medical devices, foldable robots that can flatten to enter hard-to-reach spaces, or even modular space habitats deployed by robots on the surface of Mars. “The simplicity of the whole actuation mechanism is a real benefit of our approach,” says Akib Zaman, a graduate student in electrical engineering and computer science and lead author of a paper on the work. “The user just needs to provide their intended design, and then our method optimizes it in such a way that it holds the shape after just one pull on the string, so the structure can be deployed very easily. I hope people will be able to use this method to create a wide variety of different, deployable structures.” The researchers used their method to design several objects of different sizes, from personalized medical items including a splint and a posture corrector to an igloo-like portable structure. They also designed and fabricated a human-scale chair. The technique could be used to create items ranging in size from tiny objects actuated inside the body to architectural structures, like the frame of a building, that are deployed on-site using cranes. In the future, the researchers want to further explore designs at both ends of that range. In addition, they want to create a self-deploying mechanism, so the structures do not need to be actuated by a human or robot.

Ovintiv to divest Anadarko assets for $3 billion

In a release Feb. 17, Brendan McCracken, Ovintiv president and chief executive officer, said the company has “built one of the deepest premium inventory positions in our industry in the two most valuable plays in North America, the Permian and the Montney,” and that the Anadarko assets sale “positions [Ovintiv] to deliver superior returns for our shareholders for many years to come.” Ovintiv in 2025 had noted plans to sell the asset to help offset the cost of its acquisition of NuVista Energy Ltd. That $2.7-billion cash and stock deal, which closed earlier this month, added about 930 net 10,000-ft equivalent well locations and about 140,000 net acres (70% undeveloped) in the core of the oil-rich Alberta Montney. Proceeds from the Anadarko assets sale are earmarked for accelerated debt reduction, the company said. Ovintiv’s sale of its Anadarko assets is expected to close early in this year’s second quarter, subject to customary conditions, with an effective date of Jan. 1, 2026.

ExxonMobil transporting, storing captured CO2 from second operation in Louisiana

ExxonMobil Corp. is now transporting and storing captured CO2 from the New Generation Gas Gathering (NG3) project in Gillis, La. Natural gas produced from East Texas and Louisiana is gathered through the NG3 gathering system for treatment at the NG3 Gillis plant, where up to 1.2 million metric tons/year (tpy) of CO2 is expected to be removed from the natural gas stream before the product is redelivered to Gulf Coast markets, including LNG plants, ExxonMobil said.

This startup marks the second active commercial carbon capture and storage (CCS) operation for ExxonMobil in Louisiana. In July 2025, the company began transporting and storing CO2 from Illinois-based CF Industries Holdings Inc.’s Donaldsonville Complex, enabling the production of low-carbon ammonia.

Photo from CF Industries: CF Industries’ Donaldsonville Complex is located on 1,400 acres along the west bank of the Mississippi River in southeastern Louisiana.

The CO2 contracted for the company’s two active projects accounts for up to 3.2 million tpy, about one-third of ExxonMobil’s committed CCS volumes. The company is currently storing

Azule Energy discovers oil offshore Angola

Azule Energy and partners discovered oil in Block 15/06 in the Lower Congo basin offshore Angola. Preliminary estimates indicate oil in place of around 500 million bbl, and the presence of existing production infrastructure nearby, about 18 km from the Olombendo FPSO, improves development prospects, the operator said in a release Feb. 13. The Algaita-01 exploration well, spudded on Jan. 10, 2026, was drilled by the Saipem 12000 drillship in a water depth of 667 m. The well encountered oil-bearing sandstones in multiple Upper Miocene intervals. Drilling operations were completed Jan. 26, followed by advanced formation evaluation logs to assess reservoir quality and fluid characteristics. Preliminary interpretation of wireline logging and fluid sampling indicates the presence of multiple reservoir intervals with excellent petrophysical properties and fluid mobilities, the company said. Azule Energy is an incorporated joint venture equally owned by bp plc and Eni SpA. The company currently produces around 200,000 boe/d in Angola. Block 15/06 is operated by Azule Energy (36.84%), in partnership with SSI (26.32%) and Sonangol E&P (36.84%).

Cameroon opens nine exploration opportunities spanning two proven oil, gas basins

Cameroon’s National Hydrocarbons Corp. (SNH) is offering nine exploration and production blocks spanning two proven basins in its latest licensing round. The round includes three blocks in the Rio del Rey (RDR) basin (Ndian River, Bolongo Exploration, and Bakassi), and six in the Douala-Kribi Campo (DKC) basin (Etinde Exploration, Bomono, Nkombe Nsepe, Tilapia, Ntem, and Elombo), the African Energy Chamber said in a release Feb. 19. These blocks feature prior drilling, 2D and 3D seismic coverage, identified leads and undrilled prospects, and are located near existing producing fields. The licensing round accommodates multiple contractual frameworks, including Concession Contracts, Production Sharing Contracts, and Risk Service Contracts. Exploration periods vary by block: Bolongo, Bomono, Etinde Exploration, Tilapia, Ntem, and Elombo have an initial 3-year term, renewable twice for 2-year periods, while Bakassi, Kombe-Nsepe, and Ndian River have 5-year initial terms, also renewable. Companies must submit proposals including technical evaluations, minimum work programs, budgets, environmental and social commitments, and local content plans. Minimum work programs require drilling exploration wells, seismic acquisition, and geoscience studies. Proposals are being accepted until Mar. 30, 2026, ahead of a final decision in late April.

Vertex Energy’s Alabama refinery expands base oil production

Vertex Energy Inc. has started production of a new high-viscosity Group III re-refined base oil (RRBO) at subsidiary Vertex Refining Alabama LLC’s 75,000-b/d refining and petrochemical complex in Mobile, Ala. Following first commercial production of its VTX-R4 RRBO at the Mobile refinery in November 2025, Vertex is now producing VTX-R6, a higher-viscosity 6-centistoke (cSt) Group III RRBO, to give lubricant manufacturers an option that supports thicker finished-lubricant formulations for applications requiring greater film thickness and durability, the operator confirmed on Feb. 18. The 6-cSt grade is commonly used in heavy-duty engine oils, passenger car motor oils, and select gear, transmission, compressor, and hydraulic oils. Its addition is part of Vertex’s program to expand its portfolio and give customers more flexibility to optimize formulations across a broader range of finished-lubricant viscosity grades, the company said. Produced at the Mobile refinery from used motor oil collected through Vertex’s integrated network, the VTX-R4 and VTX-R6 base oil grades are aimed at providing blenders and manufacturers with reliable and readily available US-produced alternatives to imported Group III base oils, according to the operator. Vertex did not reveal the Mobile refinery’s current rates of production for either VTX-R4 or VTX-R6.

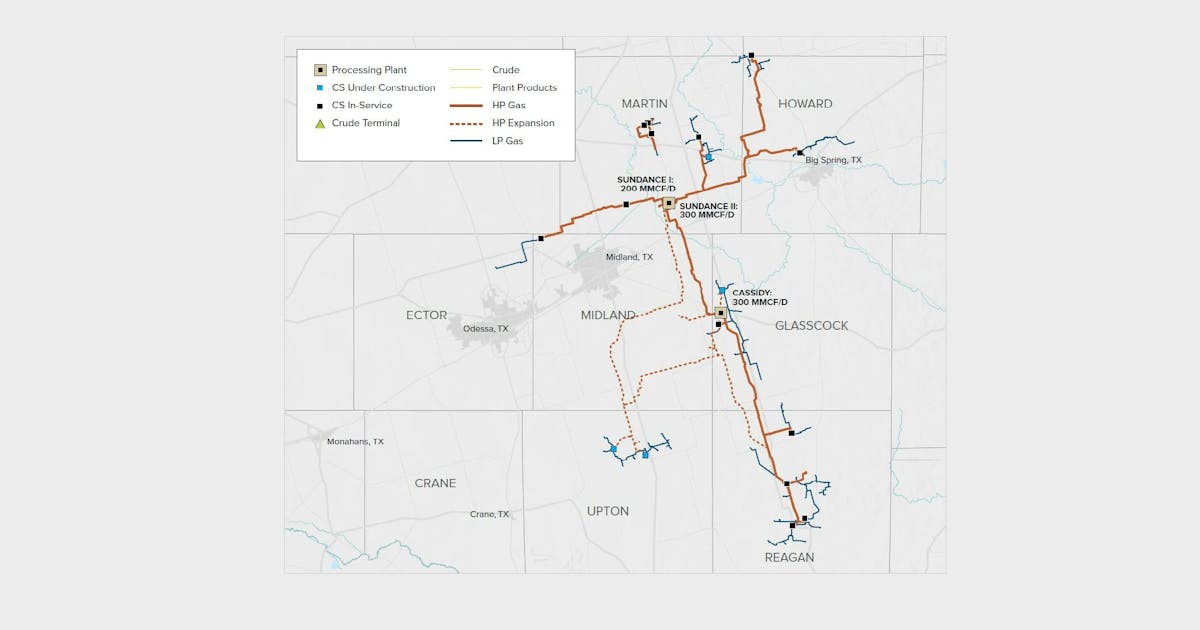

Brazos expands Texas Permian cryogenic gas processing network

Brazos Midstream Holdings LLC has commissioned a new 300-MMcfd cryogenic natural gas processing plant in Martin County, Tex., and started construction of an additional 300-MMcfd facility in neighboring Glasscock County as part of its operational expansion in the Permian’s Midland basin. The newly online Sundance II plant increases the company’s operated Midland basin processing capacity to 500 MMcfd when combined with the existing 200-MMcfd Sundance I plant, which entered service in mid-2024. The two plants form the Sundance complex in Martin County. The largest cryogenic plant Brazos has built to date, Sundance II also expands incremental residue gas and NGL takeaway capacity in the core of the Midland basin, where producer drilling activity remains concentrated, the company said.

Cassidy complex under construction

Brazos also confirmed construction of the Cassidy processing complex is now under way in Glasscock County. The initial phase, Cassidy I, includes a 300-MMcfd cryogenic plant targeted for completion by yearend 2026. Upon startup of Cassidy I, Brazos’ total operated natural gas processing capacity in the Midland basin will reach 800 MMcfd, according to the operator. Brazos said it has secured grid power and related infrastructure to support future expansion phases at the Cassidy site as producer drilling programs drive additional processing demand.

Gathering system expansion

Alongside gas processing additions, Brazos said it is expanding its Midland basin gathering footprint with more than 70 miles of new 20-in. and 24-in. high-pressure natural gas gathering pipeline currently under construction. The new lines specifically aim to relieve existing constraints for producers in Reagan, Glasscock, Midland, and Upton counties, the company said. When the pipeline work is completed in mid-2026, the company’s Midland basin system is expected to include about 525 miles of natural gas gathering pipelines and 16 compressor stations.
Brazos said its Midland operations are supported by long-term acreage dedications

LG rolls out new AI services to help consumers with daily tasks

LG kicked off the AI bandwagon today with a new set of AI services to help consumers in their daily tasks at home, in the car and in the office. The aim of LG’s CES 2025 press event was to show how AI will work in a day of someone’s life, with the goal of redefining the concept of space, said William Joowan Cho, CEO of LG Electronics, at the event. The presentation showed LG is fully focused on bringing AI into just about all of its products and services. Cho referred to LG’s AI efforts as “affectionate intelligence,” and he said it stands out from other strategies with its human-centered focus. The strategy focuses on three things: connected devices, capable AI agents and integrated services. One of the things the company announced was a strategic partnership with Microsoft on AI innovation, where the companies pledged to join forces to shape the future of AI-powered spaces. One of the outcomes is that Microsoft’s Xbox Game Pass Ultimate will appear via Xbox Cloud on LG’s TVs, helping LG catch up with Samsung in offering cloud gaming natively on its TVs. LG Electronics will bring the Xbox App to select LG smart TVs. That means players with LG Smart TVs will be able to explore the Gaming Portal for direct access to hundreds of games in the Game Pass Ultimate catalog, including popular titles such as Call of Duty: Black Ops 6, and upcoming releases like Avowed (launching February 18, 2025). Xbox Game Pass Ultimate members will be able to play games directly from the Xbox app on select LG Smart TVs through cloud gaming. With Xbox Game Pass Ultimate and a compatible Bluetooth-enabled

Big tech must stop passing the cost of its spiking energy needs onto the public

Julianne Malveaux is an MIT-educated economist, author, educator and political commentator who has written extensively about the critical relationship between public policy, corporate accountability and social equity. The rapid expansion of data centers across the U.S. is not only reshaping the digital economy but also threatening to overwhelm our energy infrastructure. These data centers aren’t just heavy on processing power — they’re heavy on our shared energy infrastructure. For Americans, this could mean serious sticker shock when it comes to their energy bills. Across the country, many households are already feeling the pinch as utilities ramp up investments in costly new infrastructure to power these data centers. With costs almost certain to rise as more data centers come online, state policymakers and energy companies must act now to protect consumers. We need new policies that ensure the cost of these projects is carried by the wealthy big tech companies that profit from them, not by regular energy consumers such as family households and small businesses. According to an analysis from consulting firm Bain & Co., data centers could require more than $2 trillion in new energy resources globally, with U.S. demand alone potentially outpacing supply in the next few years. This unprecedented growth is fueled by the expansion of generative AI, cloud computing and other tech innovations that require massive computing power. Bain’s analysis warns that, to meet this energy demand, U.S. utilities may need to boost annual generation capacity by as much as 26% by 2028 — a staggering jump compared to the 5% yearly increases of the past two decades. This poses a threat to energy affordability and reliability for millions of Americans. Bain’s research estimates that capital investments required to meet data center needs could incrementally raise consumer bills by 1% each year through 2032. That increase may

Final 45V hydrogen tax credit guidance draws mixed response

Dive Brief:

The final rule for the 45V clean hydrogen production tax credit, which the U.S. Treasury Department released Friday morning, drew mixed responses from industry leaders and environmentalists.

Clean hydrogen development within the U.S. ground to a halt following the release of the initial guidance in December 2023, leading industry participants to call for revisions that would enable more projects to qualify for the tax credit.

While the final rule makes “significant improvements” to Treasury’s initial proposal, the guidelines remain “extremely complex,” according to the Fuel Cell and Hydrogen Energy Association. FCHEA President and CEO Frank Wolak and other industry leaders said they look forward to working with the Trump administration to refine the rule.

Dive Insight:

Friday’s release closed what Wolak described as a “long chapter” for the hydrogen industry. But industry reaction to the final rule was decidedly mixed, and it remains to be seen whether the rule — which could be overturned as soon as Trump assumes office — will remain unchanged.

“The final 45V rule falls short,” Marty Durbin, president of the U.S. Chamber’s Global Energy Institute, said in a statement. “While the rule provides some of the additional flexibility we sought, … we believe that it still will leave billions of dollars of announced projects in limbo. The incoming Administration will have an opportunity to improve the 45V rules to ensure the industry will attract the investments necessary to scale the hydrogen economy and help the U.S. lead the world in clean manufacturing.”

But others in the industry felt the rule would be sufficient for ending hydrogen’s year-long malaise. “With this added clarity, many projects that have been delayed may move forward, which can help unlock billions of dollars in investments across the country,” Kim Hedegaard, CEO of Topsoe’s Power-to-X, said in a statement. Topsoe

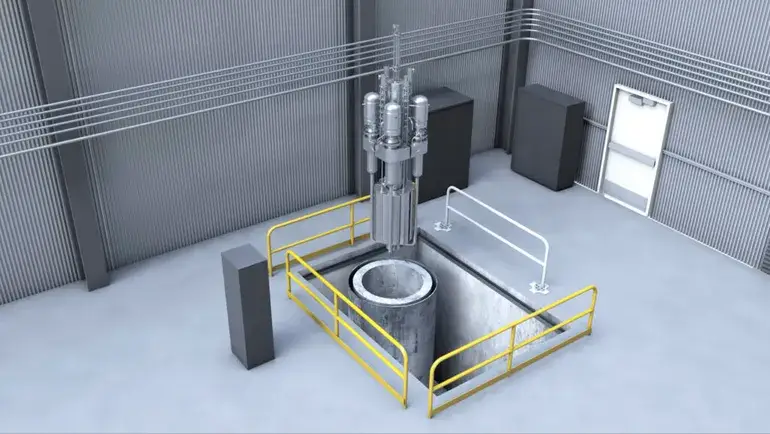

Texas, Utah, Last Energy challenge NRC’s ‘overburdensome’ microreactor regulations

Dive Brief:

A 69-year-old Nuclear Regulatory Commission rule underpinning U.S. nuclear reactor licensing exceeds the agency’s statutory authority and creates an unreasonable burden for microreactor developers, the states of Texas and Utah and advanced nuclear technology company Last Energy said in a lawsuit filed Dec. 30 in federal court in Texas.

The plaintiffs asked the Eastern District of Texas court to exempt Last Energy’s 20-MW reactor design and research reactors located in the plaintiff states from the NRC’s definition of nuclear “utilization facilities,” which subjects all U.S. commercial and research reactors to strict regulatory scrutiny, and order the NRC to develop a more flexible definition for use in future licensing proceedings.

Regardless of its merits, the lawsuit underscores the need for “continued discussion around proportional regulatory requirements … that align with the hazards of the reactor and correspond to a safety case,” said Patrick White, research director at the Nuclear Innovation Alliance.

Dive Insight:

Only three commercial nuclear reactors have been built in the United States in the past 28 years, and none are presently under construction, according to a World Nuclear Association tracker cited in the lawsuit.

“Building a new commercial reactor of any size in the United States has become virtually impossible,” the plaintiffs said. “The root cause is not lack of demand or technology — but rather the [NRC], which, despite its name, does not really regulate new nuclear reactor construction so much as ensure that it almost never happens.”

More than a dozen advanced nuclear technology developers have engaged the NRC in pre-application activities, which the agency says help standardize the content of advanced reactor applications and expedite NRC review. Last Energy is not among them. The pre-application process can itself stretch for years and must be followed by a formal application that can take two

Qualcomm unveils AI chips for PCs, cars, smart homes and enterprises

Qualcomm unveiled AI technologies and collaborations for PCs, cars, smart homes and enterprises at CES 2025. At the big tech trade show in Las Vegas, Qualcomm Technologies showed how it’s using AI capabilities in its chips to drive the transformation of user experiences across diverse device categories, including PCs, automobiles, smart homes and into enterprises. The company unveiled the Snapdragon X platform, the fourth platform in its high-performance PC portfolio, the Snapdragon X Series, bringing industry-leading performance, multi-day battery life, and AI leadership to more of the Windows ecosystem. Qualcomm has talked about how its processors are making headway grabbing share from the x86-based AMD and Intel rivals through better efficiency. Qualcomm’s neural processing unit gets about 45 TOPS, a key benchmark for AI PCs. Additionally, Qualcomm Technologies showcased continued traction of the Snapdragon X Series, with over 60 designs in production or development and more than 100 expected by 2026.

Snapdragon for vehicles

Qualcomm demoed chips that are expanding its automotive collaborations. It is working with Alpine, Amazon, Leapmotor, Mobis, Royal Enfield, and Sony Honda Mobility, who look to Snapdragon Digital Chassis solutions to drive AI-powered in-cabin and advanced driver assistance systems (ADAS). Qualcomm also announced continued traction for its Snapdragon Elite-tier platforms for automotive, highlighting its work with Desay, Garmin, and Panasonic for Snapdragon Cockpit Elite. Throughout the show, Qualcomm will highlight its holistic approach to improving comfort and focusing on safety with demonstrations on the potential of the convergence of AI, multimodal contextual awareness, and cloud-based services. 
Attendees will also get a first glimpse of the new Snapdragon Ride Platform with integrated automated driving software stack and system definition jointly

Oil, Gas Execs Reveal Where They Expect WTI Oil Price to Land in the Future

Executives from oil and gas firms have revealed where they expect the West Texas Intermediate (WTI) crude oil price to be at various points in the future as part of the fourth quarter Dallas Fed Energy Survey, which was released recently. The average response executives from 131 oil and gas firms gave when asked what they expect the WTI crude oil price to be at the end of 2025 was $71.13 per barrel, the survey showed. The low forecast came in at $53 per barrel, the high forecast was $100 per barrel, and the spot price during the survey was $70.66 per barrel, the survey pointed out. This question was not asked in the previous Dallas Fed Energy Survey, which was released in the third quarter. That survey asked participants what they expect the WTI crude oil price to be at the end of 2024. Executives from 134 oil and gas firms answered this question, offering an average response of $72.66 per barrel, that survey showed. The latest Dallas Fed Energy Survey also asked participants where they expect WTI prices to be in six months, one year, two years, and five years. Executives from 124 oil and gas firms answered this question and gave a mean response of $69 per barrel for the six month mark, $71 per barrel for the year mark, $74 per barrel for the two year mark, and $80 per barrel for the five year mark, the survey showed. Executives from 119 oil and gas firms answered this question in the third quarter Dallas Fed Energy Survey and gave a mean response of $73 per barrel for the six month mark, $76 per barrel for the year mark, $81 per barrel for the two year mark, and $87 per barrel for the five year mark, that

Reformulated antibodies could be injected for easier treatment

Antibody treatments for cancer and other diseases are typically delivered intravenously, requiring patients to go to a hospital and potentially spend hours receiving infusions. Now Professor Patrick Doyle and his colleagues have taken a major step toward reformulating antibodies so that they can be injected with a standard syringe, making treatment easier and more accessible. The obstacle to injecting these drugs is that they are formulated at low concentrations, so very large volumes are needed per dose. Decreasing the volume to the capacity of a standard syringe would mean increasing the concentration so much that the solution would be too thick to be injected. In 2023, Doyle’s lab developed a way to generate highly concentrated antibody formulations by encapsulating them into hydrogel particles. However, that requires centrifugation, a step that would be difficult to scale up for manufacturing. In their new study, the researchers took a different approach that instead uses a microfluidic setup. Droplets containing antibodies dissolved in a watery prepolymer solution are suspended in an organic solvent and can then be dehydrated, leaving behind highly concentrated solid antibodies within a hydrogel matrix. Finally, the solvent is removed and replaced with an aqueous solution. Using semi-solid particles 100 microns in diameter, the team showed that the force needed to push the plunger of a syringe containing the solution was less than 20 newtons. “That is less than half of the maximum acceptable force that people usually try to aim for,” says Talia Zheng, an MIT graduate student who is the lead author of the new study. More than 700 milligrams of the antibody—enough for most therapeutic applications—could be administered at once with a two-milliliter syringe. The formulations remained stable under refrigeration for at least four months. The researchers now plan to test the particles in animals and work on scaling up the manufacturing process.

AI-designed proteins may help spot cancer

Researchers at MIT and Microsoft have used artificial intelligence to create molecular sensors that could detect early signs of cancer via a urine test. The researchers developed an AI model to design short proteins that are targeted by enzymes called proteases, which are overactive in cancer cells. Nanoparticles coated with these proteins, called peptides, can give off a signal if they encounter cancer-linked proteases once introduced into circulation: The proteases will snip off the peptides, which then form reporter molecules that are excreted in urine. Sangeeta Bhatia, SM ’93, PhD ’97, a senior author of a paper on the work with her former student Ava Amini ’16, a principal researcher at Microsoft Research, led the MIT team that came up with the idea of such particles over a decade ago. But earlier efforts used trial and error to identify peptides that would be cleaved by specific proteases, and the results could be ambiguous. With AI, peptides can be designed to meet specific criteria. “If we know that a particular protease is really key to a certain cancer, and we can optimize the sensor to be highly sensitive and specific to that protease, then that gives us a great diagnostic signal,” Amini says. Bhatia’s lab is now working with ARPA-H on an at-home kit that could potentially detect 30 types of early cancer. Peptides designed using the model could also be incorporated into cancer therapeutics.

Recent books from the MIT community

Launching from the Lab: Building a Deep-Tech Startup. By Lita Nelsen ’64, SM ’66, SM ’79, former director of the MIT Technology Licensing Office, and Maureen Stancik Boyce, SM ’91, SM ’93, PhD ’95, with Sophie Hagerty. MIT PRESS, 2026, $35

Empty Vessel: The Story of the Global Economy in One Barge. By Ian Kumekawa, lecturer in history. PENGUIN RANDOM HOUSE, 2025, $29

Taxation and Resentment: Race, Party, and Class in American Tax Attitudes. By Andrea Louise Campbell, professor of political science. PRINCETON UNIVERSITY PRESS, 2025, $29.95

Dr. Adventure: Danger and Discovery from Pole to Pole. By Warren M. Zapol ’62. THE ZAPOL FAMILY, 2025, $37.99

Long-Term Care Around the World. Edited by Jonathan Gruber ’87, professor of economics and department head, and Kathleen McGarry. UNIV. OF CHICAGO PRESS, 2025, $35

Plain Talk About Drinking Water (6th edition). By James Symons, SM ’55, ScD ’57, former assistant professor of sanitary engineering, and Nancy E. McTigue. AMERICAN WATER WORKS ASSOCIATION, 2025, $30

The Price of Our Values: The Economic Limits of Moral Life. By Augustin Landier, PhD ’02, and David Thesmar, professor of financial economics and finance. UNIV. OF CHICAGO PRESS, 2025, $25

Send book news to [email protected] or 196 Broadway, 3rd Floor, Cambridge, MA 02139

Using big data for good

A photogenic green-eyed Russian Blue named Petra might just be the world’s most sequenced cat. Petra was rescued from an animal shelter in Reno, Nevada, by Charlie Lieu, MBA ’05, SM ’05, a data whiz, serial entrepreneur, investor, and cofounder of Darwin’s Ark, a community science nonprofit focused on pet genetics. Since becoming Lieu’s furry friend, Petra has had her DNA fully sequenced six times and extracted nearly 60 times, all in the name of science. Petra is just one of more than 67,000 cats and dogs whose information has been entered by their human caretakers into the Darwin’s Ark databases, which the organization’s researchers and collaborators are using to try to better understand pet health and behavior. Since its founding in 2018, Darwin’s Ark has helped researchers probe everything from cancer to sociability to whether or not trainability is inherited, allowing them to debunk stereotypes about dog breeds and investigate similarities between complex diseases in humans and animals. DNA testing for dogs is common at this point, with multiple for-profit companies offering to break down your pet’s breed background for a fee. But Lieu and her Darwin’s Ark cofounder, Elinor K. Karlsson, wanted to go beyond offering individualized DNA reports and invite humans to participate in surveys about how their pets play and socialize, and even whether or not they get the zoomies right after using the litter box. This approach pairs DNA with vast amounts of behavioral data collected by the people who know these animals best, thus harnessing the power of humans’ love for their pets to advance cutting-edge science. In the process, Darwin’s Ark has solved a problem that is often an obstacle in human medicine: how to get the enormous quantity of data needed to actually understand, and eventually solve, medical problems.

It was this problem that initially interested Lieu, who is chief of research operations for Darwin’s Ark, in pet genetics. Lieu spent some of the early, formative years of her career working on the Human Genome Project at the Broad Institute, where she first collaborated with Karlsson—and remembers sleeping under her desk in the late ’90s while “babysitting” servers in case they needed to be rebooted in the middle of the night. For many years, her North Star was cancer research: Her mom had died of cancer, “nearly everyone” on her mom’s side of the family got cancer at some point, and Lieu herself had her first of multiple tumors removed at age 17. Throughout her nearly 30 years working with the Broad and other initiatives related to such research, Lieu has often felt struck by how difficult it is to study complex diseases like cancer. Gathering extensive data about people while maintaining their legally mandated privacy can be tricky, as is getting them to participate in strict protocols over the course of many years (an issue she has also experienced from the other side, since she is enrolled in multiple longitudinal studies).

About a decade ago, Lieu reconnected with Karlsson, who had moved on from the Human Genome Project to work on animal genetics and was engaging with pet owners in her research. Karlsson bemoaned how hard it was to get the large-scale genomic data needed to advance scientific understanding, and something clicked. What if they could tap into Lieu’s expertise with big data platforms and her experience starting nonprofits to collect genomic data from pets as a proxy for understanding complex diseases and behavior? “We talked a lot about how we [might] enable a platform that could help us collect the right kinds of data at the level that’s necessary in order to do the kinds of science that the world needs,” Lieu says. That might be hard with humans, but “everybody wants to talk about their dogs and cats, right?” Thus Darwin’s Ark was born. Initially it focused on dogs, and using its data, Karlsson and a team from the Broad and elsewhere were able to demonstrate that just 9% of variations in behavior can be predicted by breed—much less than people might think. Lieu hopes the finding will help certain much-maligned breeds such as pit bulls, which tend to be adopted at lower rates and sometimes are even put down on the basis of faulty assumptions about their behavior. But the work Darwin’s Ark is doing isn’t just helping pets—it could benefit humans, too, as researchers increasingly probe the links between human and animal cancers. “We were involved in some early dog work in cancer, where we collaborated with another group to understand whether or not you could take a blood draw and figure out whether or not the animal has cancer,” says Lieu. “Turns out you could. 
And in the last couple of years, an FDA-approved test has been available for humans to figure out whether or not you have lung cancer. All that work started in dogs, so you could start to see the power of doing something in animals that then impacts human health.” Darwin’s Ark broadened its focus to cats in 2024, and while it’s too soon for any results, even the research methods are proving interesting. The usual way to extract DNA from a living animal is by swabbing the inside of a cheek. Dogs don’t mind the process, but cats are not as amenable to having things stuck in their mouths. Nor do cats appreciate having hairs plucked out with their follicles, another potential source of DNA for sequencing. So Chad Nusbaum, PhD ’91, another Human Genome Project colleague that Lieu recruited, helped the Darwin’s Ark team figure out how to effectively extract DNA from fur or hair that has been shed—a big breakthrough for the field. (This means, in practice, that cats’ DNA is collected by brushing their fur. Now the cats “not only don’t mind sample collection—some of them really enjoy it,” Nusbaum says with a laugh.) That’s good for cats, but it could also have far-reaching implications in the world of conservation, where obtaining DNA from endangered or sensitive animals via blood or skin samples can be prohibitively difficult or distressing to the animals. Being able to rely instead on a few strands of naturally shed hair could unlock new frontiers for conservationists working with sensitive species. The knowledge that progress on such crucial issues could come from inside or outside the organization was what led Lieu and Karlsson to structure Darwin’s Ark as a nonprofit and make its data available for free to researchers outside commercial settings. While it already periodically shares its sequence data in various public repositories, those repositories are managed by different entities, making it more difficult for scientists to use the information. 
So researchers must often write in, explain what they’re trying to do, and put in a custom request. Darwin’s Ark just got a grant that will allow it to begin building a public portal for the data, making it far easier for researchers to access, match, and use. “Our hope is that we would be able to create a data set that scientists around the world would be able to leverage to elucidate whatever it is that they’re doing,” Lieu says. “Whether you’re a cancer scientist or a neurological scientist or an immunology-focused scientist, any number of complex disease areas could be helped by having very massive data sets.”

For Lieu, Darwin’s Ark is but the latest line in a long and wide-ranging résumé that includes stints at Amazon and NASA. “The thread that ties it all together is big data,” she says. After living and breathing data in her work on the Human Genome Project, Lieu tackled a very different big data challenge at Amazon on a team that collected data on warehouse fulfillment. Drawing on her biological sciences background, she developed an evolutionary algorithm for outbound logistics that made it possible—without constantly analyzing the data—to dynamically optimize storage and dramatically lower fulfillment costs. The founder or cofounder of at least a dozen ventures to date, she built on her experience at Amazon with her most recent startup, a logistics company called AirTerra that helps e-commerce retailers streamline delivery by bringing together highly fragmented last-mile shipping providers under one umbrella. Officially founded in 2020, it quickly achieved unicorn status and was acquired by the fashion company American Eagle Outfitters in 2021. While Lieu chalks some of that success up to luck (“You start a shipping and logistics organization in the pandemic—of course you’re going to get acquired”), her cofounder Brent Beabout, MBA ’02, is quick to point to the skill and work ethic that made her “luck” possible. Besides being “highly collaborative” and “super knowledgeable,” Lieu gave her all in a way that set her apart, according to Beabout. “She is a passionate person,” he says. “I’ve never seen a person that worked as many hours as Charlie did … I don’t think she ever slept.” 
Though Lieu has made out well as an entrepreneur, she grew up “well below the poverty line.” Both those experiences shaped the kind of investor she’s become: one who is distinctly interested in helping other entrepreneurs confront barriers. “I wanted to look back on all the obstacles that I had faced coming up,” she says. “Not just as a woman, not just as a person of color, but [also] the economic barriers of not having the network, not being able to access other people who have been successful, not even understanding the basics of financial markets.” To that end, she’s spent much of her career trying to give back through mentorship and direct investment in ventures started by founders from underrepresented backgrounds. Her passion for social causes doesn’t end there. Lieu has also volunteered with her local trails association and served on a wide range of boards near her home in the Seattle area. In the mid 2010s, an outdoors-focused organization where she was on the board came under fire for having given a platform to a rock climber who had been credibly accused of sexual assault. As a climber herself, Lieu had assumed that sexual assault wasn’t a major problem in those circles—but, being data-minded as always, she came up with a plan to conduct a survey about the issue while protecting respondents’ anonymity. That survey grew into SafeOutside, a grassroots movement focusing on combating sexual assault in the outdoors community. After parsing the data—and realizing just how widespread the problem was—Lieu spent years interviewing individual survivors about their experiences and eventually partnered with Alpinist magazine to publicize and share the results of the survey. 
Beyond sparking much-needed conversation, the initiative turned out to be instrumental in getting Charlie Barrett, a once-celebrated professional climber, put behind bars. He is now serving a life sentence after his conviction for repeatedly sexually assaulting a female climber at Yosemite National Park. Three additional women testified at his trial that they had also been sexually assaulted by Barrett. Katie Ives, the editor Lieu worked with on the project at Alpinist, remembers being impressed by Lieu’s “sense of caring and compassion and her determination to amplify the voices of people who have been marginalized by history or by the climbing community.” She describes Lieu as a person “whose life is very much driven by a sense of ethical purpose.” At first Lieu worked on SafeOutside quietly; fearing professional repercussions, she asked that her name be omitted or mentioned only in passing in reporting on the project. She reasoned that the subject made people uncomfortable. But in early 2025, she began to discuss it more openly. “That’s actually part of the problem, right? People who have status refusing to talk about an issue that’s so prevalent,” she says. Today, she’s more outspoken than ever and wants to encourage others with any kind of social clout to speak up as well.

In some ways, this reevaluation of her approach reflects the crossroads at which Lieu now finds herself. After years of starting new ventures, serving on seemingly endless boards, and typically getting by on three to five hours of sleep a night, she’s finally taking a step back: saying no to board positions, pressing pause on new venture ideas, and even hiring a team that allows her to pass off more of her Darwin’s Ark work to other people. Lieu has always liked—and is especially good at—shepherding new companies through the startup and early growth stages. So she’s been recruiting a new leadership team to take over the reins as Darwin’s Ark prepares for its next phase of growth. She’s aiming to step away from day-to-day operations this spring and will remain a board member and active advisor—and jokes that she’s in a sort of “midlife crisis” at age 50 as she tries to sort out what to do next, because there’s so much she could do. In this new chapter, Lieu says, she’s trying to identify the “biggest thing” she can be doing for the world in this moment. For now, she’s leaning toward working on economic inequality and reproductive health access, which she says are inextricably tied not only to each other but also to ecology and sustainability. If her past endeavors—from promoting the well-being of cats to pursuing cures for cancer—are any indication, any cause she devotes herself to will be lucky to have her. “She’s just somebody who gets things done,” says Ives. And all the data on Lieu says that’s not going to change.

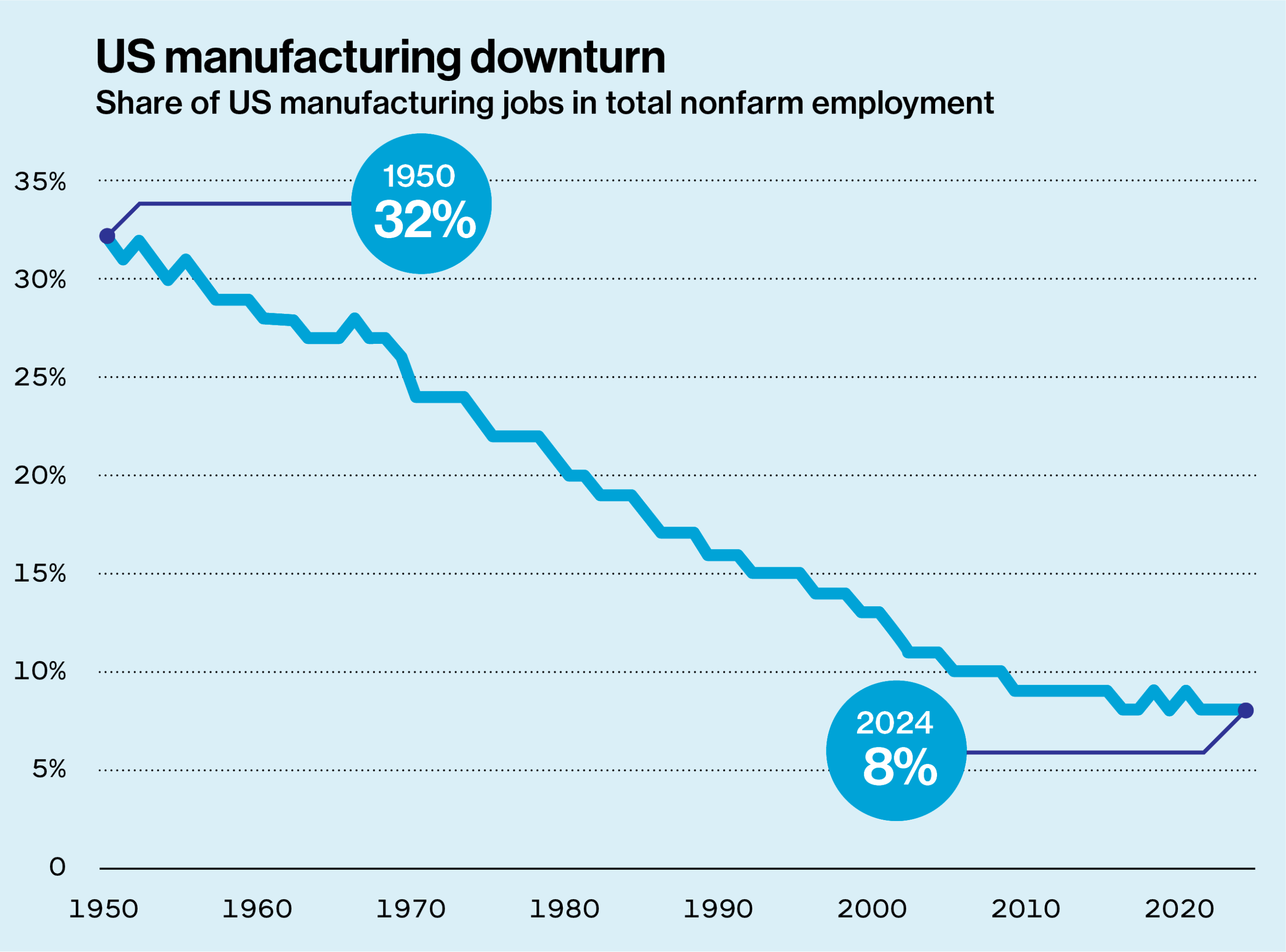

A boost for manufacturing

Several years ago, Suzanne Berger was visiting a manufacturing facility in Ohio, talking to workers on the shop floor, when a machinist offered a thought that could serve as her current credo. “Technology takes a step forward—workers take a step forward too,” the employee said. Berger, to explain, is an MIT political scientist who for decades has advocated for the revitalization of US manufacturing. She has written books and coauthored reports about the subject, visited scores of factories, helped the issue regain traction in America, and in the process earned the title of Institute Professor, MIT’s highest faculty honor. Over time, Berger has developed a distinctive viewpoint about manufacturing, seeing it as an arena where technological advances can drive economic growth and nimble firms can thrive.

This stands in contrast to the view that manufacturing is a sunsetting part of the US economy, lagging behind knowledge work and service industries and no longer a prime source of jobs. To Berger, the sector might have suffered losses, but we should think about it differently now: Rather than being threatened by change, it can thrive on innovation. She is keenly interested in medium-size and small manufacturers, not just huge factories, given that 98% of US manufacturers have 500 or fewer employees. And she is interested, especially, in how technology can help them. Roughly one-tenth of US manufacturers use robots, for instance, a number that clearly disappoints her.

Her focus on these smaller manufacturers is pragmatic. The US is not going to bring back textile manufacturing or steelmaking jobs anytime soon. And although the tech giants have made some concessions to domestic manufacturing, all major product lines from all tech companies are made largely overseas. Small and midsize firms may also have more opportunities to be flexible and innovative. And in the middle of Ohio, there it was, in a simple sentence: Technology takes a step forward—workers take a step forward too. “I think workers do recognize that,” Berger says, sitting in her MIT office, with a view of East Cambridge out the window. “People don’t want to work on technologies of the 1940s. People do want to feel they’re moving to the future, and that’s what young workers also want. They want decent pay. They want to feel they’re advancing, the company is advancing, and they are somehow part of the future. That’s what we all want in jobs.” Now Berger is part of a new campus-wide effort to do something tangible about these issues. She is a co-director of MIT’s Initiative for New Manufacturing (INM), launched in May 2025, which aims to reinvigorate the business of making things in the US. The idea is to enhance innovation and encourage companies to tightly link their innovation and production processes. This lets them rapidly fine-tune new products and new production technologies—and create good jobs along the way. “We want to work with firms big and small, in cities, small towns, and everywhere in between, to help them adopt new approaches for increased productivity,” MIT President Sally A. Kornbluth explained at the launch of INM. 
“We want to deliberately design high-quality, human-centered manufacturing jobs that bring new life to communities across the country.”

An unexpected product

Whether she is examining data, talking to visitors about manufacturing, or venturing into yet another plant to look around and ask questions, Berger’s involvement with the Initiative for New Manufacturing is just the latest chapter in a fascinating, unpredictable career. Once upon a time—her first two decades in academia—Berger was a political scientist who didn’t study either the US or manufacturing. She was a highly regarded scholar of French and European politics, whose research focused on rural workers, other laborers, and the persistence of political polarization. After growing up in New Jersey, she attended the University of Chicago and got her PhD from Harvard, where she studied with the famed political scientist Stanley Hoffmann. Berger joined the MIT faculty in 1968 and soon began publishing extensively. Her 1972 book, Peasants Against Politics, argued that geographical political divisions in contemporary France largely replicated those seen at the time of the French Revolution. Her other books include The French Political System (1974) and Dualism and Discontinuity in Industrial Societies (1980), the latter written with the MIT economist Michael Piore.

By the mid-1980s, Berger was a well-established, tenured professor who had never set foot in a factory. In 1986, however, she was named to MIT’s newly formed Commission on Industrial Productivity on the strength of her studies about worker politics and economic change. The commission was a multiyear study group examining broad trends in US industry: By the 1980s, after decades of postwar dominance, US manufacturing had found itself challenged by other countries, most famously by Japan in areas like automaking and consumer electronics. Two unexpected things emerged from that group. One was a best-selling book. Made in America: Regaining the Productive Edge, coauthored by Michael Dertouzos, Richard Lester, and Robert Solow, rapidly sold 300,000 copies, a sign of how much industrial decline was weighing on Americans. Looking at eight industries, Made in America found, among other things, that US manufacturers overemphasized short-term thinking and were neglecting technology transfer—that is, they were missing chances to turn lab innovations into new products. The other unexpected thing to materialize from the Commission on Industrial Productivity was the rest of Suzanne Berger’s career. Once she started studying manufacturing in close empirical fashion, she never really stopped. “MIT really changed me,” Berger told MIT News in 2019, referring to her move into the study of manufacturing. “I’ve learned a lot at MIT.” At first she started examining some of the US’s important competitors, including Hong Kong and Taiwan. She and Richard Lester co-edited the books Made by Hong Kong (1997) and Global Taiwan (2005), scrutinizing those countries’ manufacturing practices. Over time, though, Berger has mostly turned her attention to US manufacturing. 
She was a core player in a five-year MIT examination of manufacturing that led her to write How We Compete (2006), a book about why and when multinational companies start outsourcing work to other firms and moving their operations overseas. She followed that up by cochairing the MIT commission known as Production in the Innovation Economy (PIE), formed in 2010, which looked closely at US manufacturing, and coauthored the 2013 book Making in America, summarizing the ways manufacturing had started incorporating advanced technologies. Then she participated extensively in MIT’s Work of the Future study group, whose research concluded that while AI and other technologies are changing the workplace, they will not necessarily wipe out whole cohorts of employees. “Suzanne is amazing,” says Christopher Love, the Raymond A. (1921) and Helen E. St. Laurent Professor of Chemical Engineering at MIT and another co-director of the Initiative for New Manufacturing. “She’s been in this space and thinking about these questions for decades. Always asking, ‘What does it look like to be successful in manufacturing? What are the requirements around it?’ She’s obviously had a really large role to play here on the MIT campus in any number of important studies.”

“If I have a great idea for a new drug or food product … if I have to ship it off somewhere to figure out if I can make it or not, I lose time, I lose momentum, I lose financing.” Christopher Love

“She always asks challenging questions and really values the collaboration between engineering and social science and management,” says John Hart, head of the MIT Department of Mechanical Engineering, director of the Center for Advanced Production Technologies, and the third co-director, with Berger and Love, of the Initiative for New Manufacturing. Moreover, Love adds, “the number of people she’s trained and mentored and brought along through the years reflects her commitment.”

For instance, Berger was the PhD advisor of Richard Locke, currently dean of the MIT Sloan School of Management. Separately, she spent nearly two decades as director of MISTI, the MIT program that sends students abroad for internships and study. Basically, Berger’s footprints are all around MIT. And now, in her 80s, she is helping to lead the Initiative for New Manufacturing. Indeed, she came up with its name herself. The initiative raises a couple of questions. What is new in the world of US manufacturing? And what can MIT do to help it?

Home alone

To start with, the Initiative for New Manufacturing is an ongoing project designed to enhance many aspects of US manufacturing. Berger’s previous efforts ended in written summaries—which have helped shape public dialogue around manufacturing. But the new initiative was not designed with an endpoint in mind. Since last spring, the Initiative for New Manufacturing has signed up industry partners—Amgen, Autodesk, Flex, GE Vernova, PTC, Sanofi, and Siemens—with which it may collaborate on manufacturing advances. It has also launched a 12-month certificate program, the Technologist Advanced Manufacturing Program (TechAMP), in partnership with six universities, community colleges, and technology centers. The courses, held at the partner institutions, give manufacturing employees and other students the chance to study basic manufacturing principles developed at MIT. “We hope that the program equips manufacturing technologists to be innovators and problem-solvers in their organizations, and to effectively deploy new technologies that can improve manufacturing productivity,” says Hart, an expert in, among other things, 3D printing, an area where firms can find new manufacturing applications. But to really grasp what MIT can do today, we need to look at how manufacturing in the US has shrunk.

The first few decades after World War II were a golden age of American manufacturing. The country led the world in making things, and the sector accounted for about a quarter of US GDP throughout the 1950s. In recent years, that figure has hovered around 10%. In 1959 there were 15 million manufacturing jobs in the US. By 1979, the rapidly growing country had around 20 million such jobs, even as the economy was diversifying. But the 1980s and the first decade of the 2000s saw big losses of manufacturing jobs, and there are about 12.8 million in the US today. As even Berger will acknowledge, the situation is not going to turn around instantly. “Manufacturing at the moment is really still in decline,” she says. “The number of workers has gone down, and investment in manufacturing has actually gone down over the last year.”