Your Gateway to Power, Energy, Datacenters, Bitcoin and AI

Dive into the latest industry updates, our exclusive Paperboy Newsletter, and curated insights designed to keep you informed. Stay ahead with minimal time spent.

Discover What Matters Most to You

AI:

Bitcoin:

Datacenter:

Energy:

Featured Articles

From packets to prompts: What Cisco’s AITECH certification means for IT pros

Cisco positions the AITECH learning path as a bridge from “traditional knowledge-based work” to innovation-driven roles augmented by AI, explicitly targeting professionals who need to design technical solutions, automate tasks, and lead teams using modern AI tools and methodologies. The curriculum spans AI-assisted code generation, AI-driven data analysis, model customization (including RAG), and workflow automation wrapped in governance and security best practices.

Why this certification matters now

The timing of AITECH aligns with the reality facing most IT organizations: AI is already creeping into operations, security, networking, and collaboration, but skills lag badly. Cisco explicitly describes AITECH as meant to “close the AI skills gap” and prepare technical staff to confidently embed AI into daily operations and drive adoption inside their organizations.

Instead of creating yet another “AI expert” badge, Cisco is acknowledging that:

AI is becoming a first-class consumer of infrastructure resources, from GPUs to storage to high-bandwidth networking.

Network and infrastructure teams need to understand AI workflows well enough to support and optimize them, not just keep the pipes up.

Everyday technical tasks—writing code, troubleshooting, analyzing logs, creating reports—can be materially improved by AI if practitioners know how to use it safely and effectively.

In that context, AITECH is less about learning isolated AI theory and more about hardening the applied AI skills that will define the next generation of infrastructure roles. For enterprises staring down a flood of AI projects, having a common competency baseline around prompt engineering, ethics, data practices, and automation is increasingly nonnegotiable.

At Cisco Live, I caught up with Par Merat, vice president of learning at Cisco, and we talked about this certification and the thought process behind it.
“We are focused on reskilling engineers around AI and how that can help them with their current jobs while preparing for the future,” Merat said. “This looks at

HPE’s latest Juniper routers target large‑scale AI fabrics

The three new models give customers several options for configurations and throughput capacity, but they all share support for the same deep buffers, security, and optics for AI network fabric buildouts, Francis said. In addition to the new hardware, HPE added new AI support, including a Model Context Protocol (MCP) server, to the Juniper Routing Director to help customers build, configure, and optimize networks, Francis said. The Routing Director is the vendor’s routing automation and traffic engineering platform. Juniper Routing Director provides structured, real-time context from across the WAN, HPE says, and it enables agentic AI, including an MCP server, to expose data and actions in a model-friendly way. “The result? With natural language, an AI assistant can go beyond analysis—it can act (with the right permissions) to orchestrate changes, validate configurations, run active tests, optimize services, and even help manage security patch workflows,” HPE wrote in a blog post about the enhancement.

New Relic connects observability platform to business outcomes

Industry watchers believe that vision will take some time to become a reality across enterprise organizations. “Every organization is a snowflake in its adoption curve and readiness timeline,” says Stephen Elliot, global group vice president at IDC. “IT behavioral change is one of the most underreported requirements for agentic AI adoption. Trust is the required ingredient.” New Relic also expanded its Digital Experience Monitoring suite to support micro frontend (MFE) architectures, where web applications are broken into smaller, team-managed components. Engineers can now monitor every component and collect metrics on performance timing, errors, renders, and lifecycle methods to trace how dependencies affect the end-user experience. Separate agentic AI monitoring capabilities add a service map of agent-to-agent interactions and drill-down traces for individual agents and tools, which New Relic says will address a visibility gap as multi-agent deployments grow. IDC’s Elliot says the business-outcome framing is an industry-wide trend, but that New Relic’s extension of digital experience management into revenue intelligence is meaningful. “Every vendor needs to communicate value in both technology and business terms,” he explains. “One is no longer enough.” Elliot also says New Relic’s hybrid OpenTelemetry approach, which lets customers use OTEL instrumentation without separate collector infrastructure, is increasingly table stakes for enterprise buyers. “OTEL is here to stay, and its adoption continues to increase. It is increasingly a product requirement to support as more enterprises make it part of their observability strategies,” Elliot says. Intelligent Workloads is available as a preview for users of New Relic’s transaction monitoring solution, Transaction 360. The remaining capabilities are available in preview to all New Relic platform users.

The Download: radioactive rhinos, and the rise and rise of peptides

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology.

Why conservationists are making rhinos radioactive

Every year, poachers shoot hundreds of rhinos, fishing crews haul millions of sharks out of protected seas, and smugglers carry countless animals and plants across borders. This illegal activity is incredibly hard to disrupt, since it’s backed by sophisticated criminal networks and the perpetrators know that their chances of being caught are slim. With an annual value of $20 billion, according to Interpol, it’s the world’s fourth-most-lucrative criminal enterprise after trafficking in drugs, weapons, and people.

The environmental guardians facing up to these nefarious networks—dispersed alliances of rangers, community groups, and law enforcement officers—have long been ill equipped and underfunded.

Still, there is genuine hope that tech could help turn the tide—and prevent poaching at the source. Read the full story.

—Matthew Ponsford

This story is from the next print issue of MIT Technology Review magazine, which is all about crime. If you haven’t already, subscribe now to receive future issues once they land.

Peptides are everywhere. Here’s what you need to know.

Want to lose weight? Get shredded? Stay mentally sharp? A wellness influencer might tell you to take peptides, the latest cure-all in the alternative medicine arsenal. They’re everywhere on social media, and that popularity seems poised to grow.

The benefits and risks of many of these compounds, however, are largely unknown. Some of the most popular peptides have never been tested in human trials. They are sold for research purposes, not human consumption, and some are illegal knockoffs of wildly successful weight-loss medicines. That raises big questions about their safety and effectiveness, which are still unresolved. Read the full story.

—Cassandra Willyard

This story is part of MIT Technology Review Explains: our series untangling the complex, messy world of technology to help you understand what’s coming next. You can read more from the series here.

The human work behind humanoid robots is being hidden

In January, Nvidia’s Jensen Huang proclaimed that we are entering the era of physical AI, when artificial intelligence will move beyond language and chatbots into physically capable machines. (He also said the same thing the year before, by the way.) The implication—fueled by new demonstrations of humanoid robots putting away dishes or assembling cars—is that mimicking human limbs with single-purpose robot arms is the old way of automation. The new way is to replicate the way humans think, learn, and adapt while they work. The problem is that the lack of transparency about the human labor involved in training and operating such robots leaves the public both misunderstanding what robots can actually do and failing to see the strange new forms of work forming around them.

Just as our words became training data for large language models, our movements are now poised to follow the same path. Except this future might leave humans with an even worse deal, and it’s already beginning. Read the full story.

—James O’Donnell

This story originally appeared in The Algorithm, our weekly newsletter on AI. To get stories like this in your inbox first, sign up here.

The must-reads

I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology.

1 Anthropic has accused DeepSeek of using Claude to train its own model
It claims three Chinese companies siphoned its data to help their systems catch up. (WSJ $)
+ OpenAI made similar allegations against DeepSeek the other week. (CNN)
+ DeepSeek’s latest model was reportedly trained on banned US Nvidia chips. (Reuters)

2 Donald Trump’s global 10% tariff has come into effect
But the US President is still hoping to increase it to 15%. (FT $)
+ Tariffs are bad news for batteries. (MIT Technology Review)

3 What the US stands to lose if China invades Taiwan
Access to crucial chips, for one. (NYT $)
+ Apple is moving some of its Mac Mini production to Houston from Asia. (WSJ $)
+ Taiwan’s “silicon shield” could be weakening. (MIT Technology Review)

4 The UK’s first baby has been born using a womb transplanted from a dead donor
It’s positive news for people born without a womb who hope to give birth. (BBC)
+ Everything you need to know about artificial wombs. (MIT Technology Review)

5 Binance sent $1.7 billion to sanctioned Iranian entities
It comes after the crypto exchange promised to clean up its act in the wake of its founder being sent to prison. (NYT $)
+ Binance fired workers who raised concerns about the transactions. (WSJ $)

6 ICE is using free walkie-talkie app Zello to communicate
It had previously been used by at least two of the January 6 insurrectionists. (404 Media)
+ ICE has resurrected pandemic-style shelter in place orders. (Vox)

7 Meta built an app for teens, but never released it
Bell was supposed to bring high school classmates together, a court filing has revealed. (NBC News)

8 Battery storage is a rare US clean energy success story
Things are looking up for the sector, surprisingly. (Wired $)
+ What a massive thermal battery means for energy storage. (MIT Technology Review)

9 How to play Tetris on the cover of a magazine
It’s a whole new way of looking at portable gaming devices. (The Verge)

10 Meta’s director of AI safety allowed OpenClaw to accidentally delete her inbox
A cautionary tale, if ever there was one. (TechCrunch)
+ It wouldn’t stop, despite her repeatedly ordering it to. (404 Media)
+ Moltbook was peak AI theater. (MIT Technology Review)

Quote of the day

“Shameless people stealing everyone’s data then complaining about other people stealing from them.”

—AI researcher Timnit Gebru has little sympathy for Anthropic’s complaints that DeepSeek and other Chinese companies violated its terms by using Claude to train their models, she explains in a post on X.

One more thing

How sounds can turn us on to the wonders of the universe

Astronomy should, in principle, be a welcoming field for blind researchers. But across the board, science is full of charts, graphs, databases, and images that are designed to be seen.

So researcher Sarah Kane, who is legally blind, was thrilled three years ago when she encountered a technology known as sonification, designed to transform information into sound. Since then she’s been working with a project called Astronify, which presents astronomical information in audio form.

For millions of blind and visually impaired people, sonification could be transformative—opening access to education, to once unimaginable careers, and even to the secrets of the universe. Read the full story.

—Corey S. Powell

Why conservationists are making rhinos radioactive

Every year, poachers shoot hundreds of rhinos, fishing crews haul millions of sharks out of protected seas, and smugglers carry countless animals and plants across borders. This illegal activity is incredibly hard to disrupt, since it’s backed by sophisticated criminal networks and the perpetrators know that their chances of being caught are slim. With an annual value of $20 billion, according to Interpol, it’s the world’s fourth-most-lucrative criminal enterprise after trafficking in drugs, weapons, and people. The United Nations seeks to end trafficking in protected species by 2030. But the environmental guardians facing up to these nefarious networks—dispersed alliances of rangers, community groups, and law enforcement officers—have long been ill equipped and underfunded. A recent report by the UN Office on Drugs and Crime found “no reason for confidence” that the 2030 target would be reached. Still, there is genuine hope that tech could help turn the tide. Tools initially developed for cities and research facilities are increasingly moving into the planet’s wild places, allowing environmental agencies and self-motivated communities in both richer and poorer countries to step up their efforts to detect illegal goods, trace smuggling networks, and prevent poaching at the source. In December, Interpol announced it had seized record numbers of live animals, thanks in part to a set of sophisticated tools that had helped to expose hidden networks behind trafficking. Its Operation Thunder 2025 coordinated law enforcement agencies from 134 countries and seized 30,000 live animals, from apes to butterflies, using a suite of technologies including digital forensics and AI-driven detection. “The success of Thunder 2025 shows that modern threats demand modern tools,” says José Adrián Sanchez Romero, an operations coordinator at Interpol’s environmental security subdirectorate. 
Here are five examples of technologies that are arming conservationists and others in the battle to end wildlife crime.

Tagging rhinos

In July, a group of South African researchers announced they had won government approval for one of the most eyebrow-raising attempts to prevent wildlife crime: drilling radioactive substances into the horns of rhinoceroses.

In an effort dubbed the Rhisotope Project, the group worked in 2024 and 2025 to fit 33 rhinos from Limpopo Rhino Orphanage in South Africa with pellets containing low-level radioactive isotopes. The project is supported by the International Atomic Energy Agency. Blood samples and veterinary exams have shown that the pellets don’t affect the health of the rhinos, the rangers, or the surrounding environment. But the isotope emits enough radiation for the horns to be detected by radiation portal monitors, devices that can scan cargo containers and vehicles to detect illicit sources of radiation. Eleven thousand such monitors are already in operation at airports and shipping terminals worldwide, in addition to thousands of personal monitors worn by border security. In November 2024, Rhisotope tested the system at New York airports and harbors in collaboration with US Customs and Border Protection. The group found that border guards could detect an individual horn the team had planted inside a full 40-foot shipping container.

The project was pioneered by James Larkin, director of the radiation and health physics unit at the University of the Witwatersrand in South Africa. Though the country is currently home to 15,000 rhinos, the majority of Africa’s total population, poachers have killed 10,000 rhinos there since 2007. In the past, the common approach to deterring poachers was to eliminate the part they’re seeking, preemptively cutting off the animal’s entire horn. But dehorning requires rhinos to be sedated for long periods, and it’s a stressful and costly process that must be repeated every 18 to 24 months, as rhino horns grow back. The act also renders rhinos less able to protect themselves, and they tend to withdraw from social interactions and competition for mates. The new approach is far less painful and time-consuming. Each dose costs 21,500 South African rand (about $1,300) per animal and remains active for five years. Warning signs along perimeter fences make it clear the animals have been tagged, helping to deter poachers. Larkin, who spent his career as a nuclear safety expert, says he was initially wary when conservationists suggested to him that radioactive substances could help prevent rhino poaching, joking that he didn’t want to end up in jail if anyone got hurt. But he changed his mind when he realized there was a dose that would be harmless to bystanders while making the horns both worthless to smugglers and readily detectable. Poachers will kill a rhino for even a small amount of horn, which can fetch $60,000 per kilogram as an ingredient for traditional medicines. Adding isotopes, though, renders the horns potentially unsafe to consume, and it’s hard for smugglers to reverse: “It’s almost impossible to remove isotopes unless you are a skilled radiation protection officer who knows what they are looking for,” Larkin says. Even so, he’s tight-lipped about the compound the pellets are made from and what they look like: “I don’t want to help criminals,” he explains. 
The South African health agency has now approved Rhisotope to roll out the program across the country. “We have a goal ultimately to treat up to 500 rhinos a year,” says Jessica Babich, chief executive of the project. At the same time, the group is working to adapt its approach to other popular poaching targets—elephant tusks and pangolin scales—as well as trafficked plants like cycads.

Scanning signatures

For many exotic pets, from birds to pythons, there are two parallel trades: a legal one in farmed or captive-bred animals and an illicit one in creatures taken from the wild. But faced with a lizard or a parrot, how can law enforcement know its origin story?

In Australia, some conservationists have been trying to follow the numbers. It’s very hard to breed the egg-laying mammals known as short-beaked echidnas. US zoos have yielded only 19 echidna babies, or “puggles,” in a century of efforts. So Indonesia’s yearly export of dozens of “captive-bred” echidnas has long raised suspicions. To address the issue, a team at Australia’s Taronga Conservation Society, led by Kate Brandis, has developed an x-ray fluorescence (XRF) gun that can analyze elemental signatures in keratin—the stuff of quills, feathers, and hair. Wild echidnas, for instance, forage for a diverse diet of beetle larvae, ants, and grubs, while captive animals tend to be raised on a low-diversity diet of commercial feed. Each of these dietary histories leaves a record in the mammals’ porcupine-like spines, which can be read with high accuracy using a handheld XRF gun. Similar evidence can be found in other species, like cockatoos, pangolins, and turtles, which the team has used to test the device. There is certainly plenty more to be done: Australia, home to many unique species that live nowhere else on the planet, is a target for collectors from Asia, Europe, and the US. Brandis is targeting some of the species most often trafficked out of the country, including shingleback and blue-tongue lizards. Not long ago, Australian environmental authorities led a trial study at post offices across the country, using the XRF gun alongside AI-equipped parcel scanners, which Brandis’s team had trained to recognize concealed species in real time. The trial uncovered more than 100 legally protected lizards that were being shipped out of Australia; a distributor was sentenced to more than three years in jail.

AI in the sky

Commercial fishing, scuba diving, and oil exploration are all prohibited in the Papahānaumokuākea Marine National Monument near Hawaii, an expanse of the Pacific larger than all US national parks combined.
It is just one of a number of vast marine protected areas that have emerged in recent years, along with global pacts to conserve 30% of Earth’s land and sea.

But establishing these reserves is just one step. Enforcing their protection is another matter. And for many marine reserves—especially those in the Global South—there is no real way to do that, says Ted Schmitt, senior director of conservation at the nonprofit Allen Institute for AI (AI2). Thousands of square kilometers of open ocean is a lot to monitor. Even with satellites scanning the marine areas, the reality until recently was that you had to know what you were looking for: “When you have the vastness of the ocean, you can have analysts who are very well trained, looking for vessels,” he says. Even then, there is little chance of finding wrongdoing without intelligence from the ground. In 2017, Microsoft cofounder Paul Allen began developing a tool called Skylight to provide analysts with more of that intelligence, using AI to help analyze satellite and ship-tracking data to detect suspicious behavior. The project moved to AI2 after Allen’s death in 2018, and the technology has since been adopted by more than 200 organizations in more than 70 countries. “We’re basically monitoring the entire ocean 24-7-365, and surfacing all these vessels,” says Schmitt. To see how coast guards use the system, Schmitt points to a series of arrests in Panama in early 2025. That January, satellites found 16 boats about 200 kilometers off the coast inside the Coiba Ridge marine reserve, which serves as a migratory highway for sharks, rays, and large fish like yellowfin tuna. Skylight’s AI algorithms, trained to recognize the signature movements of various types of fishing, detected long-line fishing and requested higher-resolution images of the site from a commercial satellite flying overhead. The images and Skylight’s analysis were used by Panama’s environmental agency and military, which deployed ships and aircraft to the area, ultimately seizing six vessels and thousands of kilograms of illegally taken fish. 
Skylight AI detects around 300,000 vessels per week, according to the company’s platform analytics. Stories like Coiba Ridge make clear that AI can benefit partners who are working tirelessly on the ground, says Schmitt: “The Panama case really was one of those ‘wow’ moments, not because the technology finally proved itself, but because the agencies that needed to operationalize it, and actually take it to a legal finish, did it.”

Rapid DNA tests

When the conservation scientist Natalie Schmitt was researching snow leopards in remote areas of Nepal, she worked with people who could point out signs of these elusive big cats—often a pile of droppings. But the results weren’t reliable: Leopard scat can easily be confused with the poop of wolves and foxes, which share the same habitat and prey, she explains. What Schmitt wanted was a tool that could identify the animal involved, right on the spot—ideally by finding a way to sequence the DNA in the scat. While some laboratories can take DNA samples of such material and identify species of interest, they are few and far between in rich countries and usually nonexistent in poorer countries, meaning that this process can be weeks long and involve shipping samples cross-country or across borders. This is a problem not just for field research but for wildlife trafficking enforcement. Imagine a border agent who has just opened a box of shark-like fins or a shipment of live parrots and needs to know whether the particular species is one that can legally be captured and transported. People in this situation don’t have weeks to spare. In 2020, Schmitt founded WildTechDNA, which has developed a DNA test that aims to do that work on the fly. The test, which is about as easy and fast as a home pregnancy test, employs a simple two-step process. First, a new extraction method—“Literally, put the sample in the extraction tube and squeeze 10 times,” she says—can cut the time it takes to pull DNA out of a sample from a day to about three minutes. Then, to actually test that DNA, the company took inspiration from the covid pandemic.
The researchers found they could use technology similar to rapid at-home tests to identify whether the DNA in question belongs to a specific species: “Our tests use very simple lateral-flow strips to tell you whether a sample belongs to your target species of interest, yes or no.” The strips can be tailored to test for a wide range of targets, from big cats to microbes, opening up diverse applications in the wild. They can tell if samples of hair belong to a snow leopard, or if a frog has been infected with the fungi that cause chytridiomycosis, a disease that has devastated amphibians worldwide and wiped out at least 90 species. WildTechDNA’s earliest adopter was the Canadian government, which wanted to detect European eels—a critically endangered species that is effectively impossible to identify by appearance. This confusion has allowed €3 billion of European eels to be smuggled each year, disguised as other eel species. Some of that passes into Canada on its way to suppliers in Japan and China, and in some cases on to Canadian restaurants and consumers. “When a shipment is suspected to contain European eel, they’ll randomly sample it and they’ll send those samples off to a lab across the country, which will take three weeks,” says Schmitt of traditional tracking methods. WildTechDNA developed tests specific to European eels and taught Canadian enforcement officers how to use them, so that they could launch a “nationwide European eel blitz,” she says. In a 2025 campaign, European eels turned up in fewer than 1% of shipments. Schmitt says Canadian authorities have not disclosed details about investigations but are encouraged by the results—significantly below the rates detected using older technologies in 2016, an improvement they attribute to better surveillance.

Listening in

The world’s forests are increasingly filled with snooping devices. In addition to affordable camera traps and animal-mounted GPS tags, low-cost solar-powered microphones have proved to be strikingly effective at revealing what’s living in some of the planet’s most densely inhabited and biodiverse environments. Rainforest Connection, a nonprofit founded by the physicist turned conservation-tech entrepreneur Topher White in 2014, was a pioneer in bioacoustic monitoring for conservation. The group initially repurposed old phones into low-cost monitoring devices but has since developed a standardized device called the Guardian that has now been deployed in more than 600 locations.

Guardians are designed to capture a broad soundscape of the rainforest: “They sit out in the rainforest for long periods of time, up in treetops. They’re solar-powered, they can last for years, and we listen to all the sounds continuously and transmit that up to the cloud, where we are then able to analyze it for all sorts of things,” says White. From the outset, the aim was to use these devices to pick up immediate threats—“chainsaws, logging trucks, gunshots, things like that,” White says—and relay real-time alerts to local partners, including police, Indigenous groups, and local communities that protect the land.

Bioacoustic monitoring devices have rapidly advanced in recent years. Many can now analyze data before transmitting it, and they’ve become cheaper to make as batteries have gotten smaller. By today’s standards, Rainforest Connection’s sensors are “over-engineered,” says White. But having a large number of detectors already deployed means there is ample data that can be mined for signals beyond well-known red flags, like gunshots. “An area for a lot more innovation going forward is to use the soundscape itself as a detector,” White says. Rainforest Connection and the German software firm SAP tested this approach on the island of Sumatra and found they could identify human intruders by using machine learning to hunt for “uncharacteristic sudden changes to the soundscape.” For example, tracking animal calls—and noting when those animals go silent—could reveal the arrival of poachers. In 2026, Rainforest Connection will roll out this approach to reserves in Thailand, Jamaica, and Romania by building a unique model for each environment, trained on thousands of hours of audio and verified using camera traps. “We have a lot of eyes and ears in the forest already, all of which are aware and reacting to each other and to new stimuli,” White says.
For the rest of us, Rainforest Connection’s unfiltered stream has another use: an app where you can listen to the livestream from the Ecuadorian rainforest, taking in the complete soundscape of birdsong, frog chatter, and cicada chirps.

Matthew Ponsford is a freelance reporter based in London.

Energy Secretary Keeps Critical Generation Online in Mid-Atlantic

Emergency order keeps critical generation online and addresses critical grid reliability issues facing the Mid-Atlantic region of the United States

WASHINGTON—U.S. Secretary of Energy Chris Wright issued an emergency order to address critical grid reliability issues facing the Mid-Atlantic region of the United States. The emergency order directs PJM Interconnection, L.L.C. (PJM), in coordination with Constellation Energy Corporation, to ensure Units 3 and 4 of the Eddystone Generating Station in Pennsylvania remain available for operation and to employ economic dispatch to minimize costs for the American people. The units were originally slated to shut down on May 31, 2025.

“The energy sources that perform when you need them most are inherently the most valuable—that’s why natural gas and oil were valuable during recent winter storms,” Secretary Wright said. “Hundreds of American lives have likely been saved because of President Trump’s actions keeping critical generation online, including this Pennsylvania generating station which ran during Winter Storm Fern. This emergency order will mitigate the risk of blackouts and maintain affordable, reliable, and secure electricity access across the region.”

The Eddystone Units were integral in stabilizing the grid during Winter Storm Fern. Between January 26 and 29, the units ran for over 124 hours cumulatively, providing critical generation in the midst of the energy emergency. As outlined in DOE’s Resource Adequacy Report, power outages could increase by 100 times in 2030 if the U.S. continues to take reliable power offline. Furthermore, NERC’s 2025 Long-Term Reliability Assessment warns, “The continuing shift in the resource mix toward weather-dependent resources and less fuel diversity increases risks of supply shortfalls during winter months.”

Secretary Wright ordered that the two Eddystone Generating Station units remain online past their planned retirement date in a May 30, 2025 emergency order.
Subsequent orders were issued on August 28, 2025 and November 26, 2025. Keeping these units operational

From packets to prompts: What Cisco’s AITECH certification means for IT pros

Cisco positions the AITECH learning path as a bridge from “traditional knowledge-based work” to innovation-driven roles augmented by AI, explicitly targeting professionals who need to design technical solutions, automate tasks, and lead teams using modern AI tools and methodologies. The curriculum spans AI-assisted code generation, AI-driven data analysis, model customization (including RAG), and workflow automation wrapped in governance and security best practices.

Why this certification matters now

The timing of AITECH aligns with the reality facing most IT organizations: AI is already creeping into operations, security, networking, and collaboration, but skills lag badly. Cisco explicitly describes AITECH as meant to “close the AI skills gap” and prepare technical staff to confidently embed AI into daily operations and drive adoption inside their organizations. Instead of creating yet another “AI expert” badge, Cisco is acknowledging that:

AI is becoming a first-class consumer of infrastructure resources, from GPUs to storage to high-bandwidth networking.
Network and infrastructure teams need to understand AI workflows well enough to support and optimize them, not just keep the pipes up.
Everyday technical tasks—writing code, troubleshooting, analyzing logs, creating reports—can be materially improved by AI if practitioners know how to use it safely and effectively.

In that context, AITECH is less about learning isolated AI theory and more about hardening the applied AI skills that will define the next generation of infrastructure roles. For enterprises staring down a flood of AI projects, having a common competency baseline around prompt engineering, ethics, data practices, and automation is increasingly nonnegotiable.

At Cisco Live, I caught up with Par Merat, vice president of learning at Cisco, and we talked about this certification and the thought process behind it.
“We are focused on reskilling engineers around AI and how that can help them with their current jobs while preparing for the future,” Merat said. “This looks at

HPE’s latest Juniper routers target large‑scale AI fabrics

The three new models give customers several options for configurations and throughput capacity, but they all share support for the same deep buffers, security, and optics for AI network fabric buildouts, Francis said. In addition to the new hardware, HPE added new AI support, including a Model Context Protocol (MCP) server, to the Juniper Routing Director to help customers build, configure, and optimize networks, Francis said. The Routing Director is the vendor’s routing automation and traffic engineering platform. Juniper Routing Director provides structured, real-time context from across the WAN, HPE says, and it enables agentic AI, including an MCP server, to expose data and actions in a model-friendly way. “The result? With natural language, an AI assistant can go beyond analysis—it can act (with the right permissions) to orchestrate changes, validate configurations, run active tests, optimize services, and even help manage security patch workflows,” HPE wrote in a blog post about the enhancement.

New Relic connects observability platform to business outcomes

Industry watchers believe that vision will take some time to become a reality across enterprise organizations. “Every organization is a snowflake in its adoption curve and readiness timeline,” says Stephen Elliot, global group vice president at IDC. “IT behavioral change is one of the most underreported requirements for agentic AI adoption. Trust is the required ingredient.” New Relic also expanded its Digital Experience Monitoring suite to support micro frontend (MFE) architectures, where web applications are broken into smaller, team-managed components. Engineers can now monitor every component and collect metrics on performance timing, errors, renders, and lifecycle methods to trace how dependencies affect the end-user experience. Separate agentic AI monitoring capabilities add a service map of agent-to-agent interactions and drill-down traces for individual agents and tools, which New Relic says will address a visibility gap as multi-agent deployments grow. IDC’s Elliot says the business-outcome framing is an industry-wide trend, but that New Relic’s extension of digital experience management into revenue intelligence is meaningful. “Every vendor needs to communicate value in both technology and business terms,” he explains. “One is no longer enough.” Elliot also says New Relic’s hybrid OpenTelemetry approach, which lets customers use OTEL instrumentation without separate collector infrastructure, is increasingly table stakes for enterprise buyers. “OTEL is here to stay, and its adoption continues to increase. It is increasingly a product requirement to support as more enterprises make it part of their observability strategies,” Elliot says. Intelligent Workloads is available as a preview for users of New Relic’s transaction monitoring solution, Transaction 360. The remaining capabilities are available in preview to all New Relic platform users.

The Download: radioactive rhinos, and the rise and rise of peptides

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology.

Why conservationists are making rhinos radioactive

Every year, poachers shoot hundreds of rhinos, fishing crews haul millions of sharks out of protected seas, and smugglers carry countless animals and plants across borders. This illegal activity is incredibly hard to disrupt, since it’s backed by sophisticated criminal networks and the perpetrators know that their chances of being caught are slim. With an annual value of $20 billion, according to Interpol, it’s the world’s fourth-most-lucrative criminal enterprise after trafficking in drugs, weapons, and people.

The environmental guardians facing up to these nefarious networks—dispersed alliances of rangers, community groups, and law enforcement officers—have long been ill equipped and underfunded.

Still, there is genuine hope that tech could help turn the tide—and prevent poaching at the source. Read the full story.

—Matthew Ponsford

This story is from the next print issue of MIT Technology Review magazine, which is all about crime. If you haven’t already, subscribe now to receive future issues once they land.

Peptides are everywhere. Here’s what you need to know.

Want to lose weight? Get shredded? Stay mentally sharp? A wellness influencer might tell you to take peptides, the latest cure-all in the alternative medicine arsenal. They’re everywhere on social media, and that popularity seems poised to grow.

The benefits and risks of many of these compounds, however, are largely unknown. Some of the most popular peptides have never been tested in human trials. They are sold for research purposes, not human consumption, and some are illegal knockoffs of wildly successful weight-loss medicines. That raises big questions about their safety and effectiveness, which are still unresolved. Read the full story.

—Cassandra Willyard

This story is part of MIT Technology Review Explains: our series untangling the complex, messy world of technology to help you understand what’s coming next. You can read more from the series here.

The human work behind humanoid robots is being hidden

In January, Nvidia’s Jensen Huang proclaimed that we are entering the era of physical AI, when artificial intelligence will move beyond language and chatbots into physically capable machines. (He also said the same thing the year before, by the way.) The implication—fueled by new demonstrations of humanoid robots putting away dishes or assembling cars—is that mimicking human limbs with single-purpose robot arms is the old way of automation. The new way is to replicate the way humans think, learn, and adapt while they work. The problem is that the lack of transparency about the human labor involved in training and operating such robots leaves the public both misunderstanding what robots can actually do and failing to see the strange new forms of work forming around them.

Just as our words became training data for large language models, our movements are now poised to follow the same path. Except this future might leave humans with an even worse deal, and it’s already beginning. Read the full story.

—James O’Donnell

This story originally appeared in The Algorithm, our weekly newsletter on AI. To get stories like this in your inbox first, sign up here.

The must-reads

I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology.

1 Anthropic has accused DeepSeek of using Claude to train its own model
It claims three Chinese companies siphoned its data to help their systems catch up. (WSJ $)
+ OpenAI made similar allegations against DeepSeek the other week. (CNN)
+ DeepSeek’s latest model was reportedly trained on banned US Nvidia chips. (Reuters)

2 Donald Trump’s global 10% tariff has come into effect
But the US President is still hoping to increase it to 15%. (FT $)
+ Tariffs are bad news for batteries. (MIT Technology Review)

3 What the US stands to lose if China invades Taiwan
Access to crucial chips, for one. (NYT $)
+ Apple is moving some of its Mac Mini production to Houston from Asia. (WSJ $)
+ Taiwan’s “silicon shield” could be weakening. (MIT Technology Review)

4 The UK’s first baby has been born using a womb transplanted from a dead donor
It’s positive news for people born without a womb that hope to give birth. (BBC)
+ Everything you need to know about artificial wombs. (MIT Technology Review)

5 Binance sent $1.7 billion to sanctioned Iranian entities
It comes after the crypto exchange promised to clean up its act in the wake of its founder being sent to prison. (NYT $)
+ Binance fired workers who raised concerns about the transactions. (WSJ $)

6 ICE is using free walkie-talkie app Zello to communicate
It had previously been used by at least two of the January 6 insurrectionists. (404 Media)
+ ICE has resurrected pandemic-style shelter in place orders. (Vox)

7 Meta built an app for teens, but never released it
Bell was supposed to bring high school classmates together, a court filing has revealed. (NBC News)

8 Battery storage is a rare US clean energy success story
Things are looking up for the sector, surprisingly. (Wired $)
+ What a massive thermal battery means for energy storage. (MIT Technology Review)

9 How to play Tetris on the cover of a magazine
It’s a whole new way of looking at portable gaming devices. (The Verge)

10 Meta’s director of AI safety allowed OpenClaw to accidentally delete her inbox
A cautionary tale, if ever there was one. (TechCrunch)
+ It wouldn’t stop, despite her repeatedly ordering it to. (404 Media)
+ Moltbook was peak AI theater. (MIT Technology Review)

Quote of the day

“Shameless people stealing everyone’s data then complaining about other people stealing from them.”

—AI researcher Timnit Gebru has little sympathy for Anthropic’s complaints that DeepSeek and other Chinese companies violated its terms by using Claude to train their models, she explains in a post on X.

One more thing

How sounds can turn us on to the wonders of the universe

Astronomy should, in principle, be a welcoming field for blind researchers. But across the board, science is full of charts, graphs, databases, and images that are designed to be seen.

So researcher Sarah Kane, who is legally blind, was thrilled three years ago when she encountered a technology known as sonification, designed to transform information into sound. Since then she’s been working with a project called Astronify, which presents astronomical information in audio form.

For millions of blind and visually impaired people, sonification could be transformative—opening access to education, to once unimaginable careers, and even to the secrets of the universe. Read the full story.

—Corey S. Powell

Why conservationists are making rhinos radioactive

Every year, poachers shoot hundreds of rhinos, fishing crews haul millions of sharks out of protected seas, and smugglers carry countless animals and plants across borders. This illegal activity is incredibly hard to disrupt, since it’s backed by sophisticated criminal networks and the perpetrators know that their chances of being caught are slim. With an annual value of $20 billion, according to Interpol, it’s the world’s fourth-most-lucrative criminal enterprise after trafficking in drugs, weapons, and people. The United Nations seeks to end trafficking in protected species by 2030. But the environmental guardians facing up to these nefarious networks—dispersed alliances of rangers, community groups, and law enforcement officers—have long been ill equipped and underfunded. A recent report by the UN Office on Drugs and Crime found “no reason for confidence” that the 2030 target would be reached. Still, there is genuine hope that tech could help turn the tide. Tools initially developed for cities and research facilities are increasingly moving into the planet’s wild places, allowing environmental agencies and self-motivated communities in both richer and poorer countries to step up their efforts to detect illegal goods, trace smuggling networks, and prevent poaching at the source. In December, Interpol announced it had seized record numbers of live animals, thanks in part to a set of sophisticated tools that had helped to expose hidden networks behind trafficking. Its Operation Thunder 2025 coordinated law enforcement agencies from 134 countries and seized 30,000 live animals, from apes to butterflies, using a suite of technologies including digital forensics and AI-driven detection. “The success of Thunder 2025 shows that modern threats demand modern tools,” says José Adrián Sanchez Romero, an operations coordinator at Interpol’s environmental security subdirectorate. 
Here are five examples of technologies that are arming conservationists and others in the battle to end wildlife crime.

Tagging rhinos

In July, a group of South African researchers announced they had won government approval for one of the most eyebrow-raising attempts to prevent wildlife crime: drilling radioactive substances into the horns of rhinoceroses.

In an effort dubbed the Rhisotope Project, the group worked in 2024 and 2025 to fit 33 rhinos from Limpopo Rhino Orphanage in South Africa with pellets containing low-level radioactive isotopes. The project is supported by the International Atomic Energy Agency. Blood samples and veterinary exams have shown that the pellets don’t affect the health of the rhinos, the rangers, or the surrounding environment. But the isotope emits enough radiation for the horns to be detected by radiation portal monitors, devices that can scan cargo containers and vehicles to detect illicit sources of radiation. Eleven thousand such monitors are already in operation at airports and shipping terminals worldwide, in addition to thousands of personal monitors worn by border security. In November 2024, Rhisotope tested the system at New York airports and harbors in collaboration with US Customs and Border Protection. The group found that border guards could detect an individual horn the team had planted inside a full 40-foot shipping container.

The project was pioneered by James Larkin, director of the radiation and health physics unit at the University of the Witwatersrand in South Africa. Though the country is currently home to 15,000 rhinos, the majority of Africa’s total population, poachers have killed 10,000 rhinos there since 2007. In the past, the common approach to deterring poachers was to eliminate the part they’re seeking, preemptively cutting off the animal’s entire horn. But dehorning requires rhinos to be sedated for long periods, and it’s a stressful and costly process that must be repeated every 18 to 24 months, as rhino horns grow back. The act also renders rhinos less able to protect themselves, and they tend to withdraw from social interactions and competition for mates. The new approach is far less painful and time-consuming. Each dose costs 21,500 South African rand (about $1,300) per animal and remains active for five years. Warning signs along perimeter fences make it clear the animals have been tagged, helping to deter poachers. Larkin, who spent his career as a nuclear safety expert, says he was initially wary when conservationists suggested to him that radioactive substances could help prevent rhino poaching, joking that he didn’t want to end up in jail if anyone got hurt. But he changed his mind when he realized there was a dose that would be harmless to bystanders while making the horns both worthless to smugglers and readily detectable. Poachers will kill a rhino for even a small amount of horn, which can fetch $60,000 per kilogram as an ingredient for traditional medicines. Adding isotopes, though, renders the horns potentially unsafe to consume, and it’s hard for smugglers to reverse: “It’s almost impossible to remove isotopes unless you are a skilled radiation protection officer who knows what they are looking for,” Larkin says. Even so, he’s tight-lipped about the compound the pellets are made from and what they look like: “I don’t want to help criminals,” he explains. 
The South African health agency has now approved Rhisotope to roll out the program across the country. “We have a goal ultimately to treat up to 500 rhinos a year,” says Jessica Babich, chief executive of the project. At the same time, the group is working to adapt its approach to other popular poaching targets—elephant tusks and pangolin scales—as well as trafficked plants like cycads.

Scanning signatures

For many exotic pets, from birds to pythons, there are two parallel trades: a legal one in farmed or captive-bred animals and an illicit one in creatures taken from the wild. But faced with a lizard or a parrot, how can law enforcement know its origin story?

In Australia, some conservationists have been trying to follow the numbers. It’s very hard to breed the egg-laying mammals known as short-beaked echidnas. US zoos have yielded only 19 echidna babies, or “puggles,” in a century of efforts. So Indonesia’s yearly export of dozens of “captive-bred” echidnas has long raised suspicions. To address the issue, a team at Australia’s Taronga Conservation Society, led by Kate Brandis, has developed an x-ray fluorescence (XRF) gun that can analyze elemental signatures in keratin—the stuff of quills, feathers, and hair. Wild echidnas, for instance, forage for a diverse diet of beetle larvae, ants, and grubs, while captive animals tend to be raised on a low-diversity diet of commercial feed. Each of these dietary histories leaves a record in the mammals’ porcupine-like spines, which can be read with high accuracy using a handheld XRF gun. Similar evidence can be found in other species, like cockatoos, pangolins, and turtles, which the team has used to test the device. There is certainly plenty more to be done: Australia, home to many unique species that live nowhere else on the planet, is a target for collectors from Asia, Europe, and the US. Brandis is targeting some of the species most often trafficked out of the country, including shingleback and blue-tongue lizards. Not long ago, Australian environmental authorities led a trial study at post offices across the country, using the XRF gun alongside AI-equipped parcel scanners, which Brandis’s team had trained to recognize concealed species in real time. The trial uncovered more than 100 legally protected lizards that were being shipped out of Australia; a distributor was sentenced to more than three years in jail.

AI in the sky

Commercial fishing, scuba diving, and oil exploration are all prohibited in the Papahānaumokuākea Marine National Monument near Hawaii, an expanse of the Pacific larger than all US national parks combined.
It is just one of a number of vast marine protected areas that have emerged in recent years, along with global pacts to conserve 30% of Earth’s land and sea.

But establishing these reserves is just one step. Enforcing their protection is another matter. And for many marine reserves—especially those in the Global South—there is no real way to do that, says Ted Schmitt, senior director of conservation at the nonprofit Allen Institute for AI (AI2). Thousands of square kilometers of open ocean is a lot to monitor. Even with satellites scanning the marine areas, the reality until recently was that you had to know what you were looking for: “When you have the vastness of the ocean, you can have analysts who are very well trained, looking for vessels,” he says. Even then, there is little chance of finding wrongdoing without intelligence from the ground. In 2017, Microsoft cofounder Paul Allen began developing a tool called Skylight to provide analysts with more of that intelligence, using AI to help analyze satellite and ship-tracking data to detect suspicious behavior. The project moved to AI2 after Allen’s death in 2018, and the technology has since been adopted by more than 200 organizations in more than 70 countries. “We’re basically monitoring the entire ocean 24-7-365, and surfacing all these vessels,” says Schmitt. To see how coast guards use the system, Schmitt points to a series of arrests in Panama in early 2025. That January, satellites found 16 boats about 200 kilometers off the coast inside the Coiba Ridge marine reserve, which serves as a migratory highway for sharks, rays, and large fish like yellowfin tuna. Skylight’s AI algorithms, trained to recognize the signature movements of various types of fishing, detected long-line fishing and requested higher-resolution images of the site from a commercial satellite flying overhead. The images and Skylight’s analysis were used by Panama’s environmental agency and military, which deployed ships and aircraft to the area, ultimately seizing six vessels and thousands of kilograms of illegally taken fish. 
Skylight AI detects around 300,000 vessels per week, according to the company’s platform analytics. Stories like Coiba Ridge make clear that AI can benefit partners who are working tirelessly on the ground, says Schmitt: “The Panama case really was one of those ‘wow’ moments, not because the technology finally proved itself, but because the agencies that needed to operationalize it, and actually take it to a legal finish, did it.”

Rapid DNA tests

When the conservation scientist Natalie Schmitt was researching snow leopards in remote areas of Nepal, she worked with people who could point out signs of these elusive big cats—often a pile of droppings. But the results weren’t reliable: Leopard scat can easily be confused with the poop of wolves and foxes, which share the same habitat and prey, she explains. What Schmitt wanted was a tool that could identify the animal involved, right on the spot—ideally by finding a way to sequence the DNA in the scat. While some laboratories can take DNA samples of such material and identify species of interest, they are few and far between in rich countries and usually nonexistent in poorer countries, meaning that this process can be weeks long and involve shipping samples cross-country or across borders. This is a problem not just for field research but for wildlife trafficking enforcement. Imagine a border agent who has just opened a box of shark-like fins or a shipment of live parrots and needs to know whether the particular species is one that can legally be captured and transported. People in this situation don’t have weeks to spare. In 2020, Schmitt founded WildTechDNA, which has developed a DNA test that aims to do that work on the fly. The test, which is about as easy and fast as a home pregnancy test, employs a simple two-step process. First, a new extraction method—“Literally, put the sample in the extraction tube and squeeze 10 times,” she says—can cut the time it takes to pull DNA out of a sample from a day to about three minutes. Then, to actually test that DNA, the company took inspiration from the covid pandemic.
The researchers found they could use technology similar to rapid at-home tests to identify whether the DNA in question belongs to a specific species: “Our tests use very simple lateral-flow strips to tell you whether a sample belongs to your target species of interest, yes or no.” The strips can be tailored to test for a wide range of targets, from big cats to microbes, opening up diverse applications in the wild. They can tell if samples of hair belong to a snow leopard, or if a frog has been infected with the fungi that cause chytridiomycosis, a disease that has devastated amphibians worldwide and wiped out at least 90 species. WildTechDNA’s earliest adopter was the Canadian government, which wanted to detect European eels—a critically endangered species that is effectively impossible to identify by appearance. This confusion has allowed €3 billion of European eels to be smuggled each year, disguised as other eel species. Some of that passes into Canada on its way to suppliers in Japan and China, and in some cases on to Canadian restaurants and consumers. “When a shipment is suspected to contain European eel, they’ll randomly sample it and they’ll send those samples off to a lab across the country, which will take three weeks,” says Schmitt of traditional tracking methods. WildTechDNA developed tests specific to European eels and taught Canadian enforcement officers how to use them, so that they could launch a “nationwide European eel blitz,” she says. In a 2025 campaign, European eels turned up in fewer than 1% of shipments. Schmitt says Canadian authorities have not disclosed details about investigations but are encouraged by the results—significantly below the rates detected using older technologies in 2016, an improvement they attribute to better surveillance.

Listening in

The world’s forests are increasingly filled with snooping devices. In addition to affordable camera traps and animal-mounted GPS tags, low-cost solar-powered microphones have proved to be strikingly effective at revealing what’s living in some of the planet’s most densely inhabited and biodiverse environments. Rainforest Connection, a nonprofit founded by the physicist turned conservation-tech entrepreneur Topher White in 2014, was a pioneer in bioacoustic monitoring for conservation. The group initially repurposed old phones into low-cost monitoring devices but has since developed a standardized device called the Guardian that has now been deployed in more than 600 locations.

Guardians are designed to capture a broad soundscape of the rainforest: “They sit out in the rainforest for long periods of time, up in treetops. They’re solar-powered, they can last for years, and we listen to all the sounds continuously and transmit that up to the cloud, where we are then able to analyze it for all sorts of things,” says White. From the outset, the aim was to use these devices to pick up immediate threats—“chainsaws, logging trucks, gunshots, things like that,” White says—and relay real-time alerts to local partners, including police, Indigenous groups, and local communities that protect the land.

Bioacoustic monitoring devices have rapidly advanced in recent years. Many can now analyze data before transmitting it, and they’ve become cheaper to make as batteries have gotten smaller. By today’s standards, Rainforest Connection’s sensors are “over-engineered,” says White. But having a large number of detectors already deployed means there is ample data that can be mined for signals beyond well-known red flags, like gunshots. “An area for a lot more innovation going forward is to use the soundscape itself as a detector,” White says. Rainforest Connection and the German software firm SAP tested this approach on the island of Sumatra and found they could identify human intruders by using machine learning to hunt for “uncharacteristic sudden changes to the soundscape.” For example, tracking animal calls—and noting when those animals go silent—could reveal the arrival of poachers. In 2026, Rainforest Connection will roll out this approach to reserves in Thailand, Jamaica, and Romania by building a unique model for each environment, trained on thousands of hours of audio and verified using camera traps. “We have a lot of eyes and ears in the forest already, all of which are aware and reacting to each other and to new stimuli,” White says.
For the rest of us, Rainforest Connection’s unfiltered stream has another use: an app where you can listen to the livestream from the Ecuadorian rainforest, taking in the complete soundscape of birdsong, frog chatter, and cicada chirps.

Matthew Ponsford is a freelance reporter based in London.

Gran Tierra signs Azerbaijan exploration agreement, moves to exit Simonette

Gran Tierra Energy Inc. signed an exploration, development, and production sharing agreement with the State Oil Company of the Republic of Azerbaijan (SOCAR) on a prospective onshore field in Azerbaijan, while also moving to exit its Simonette asset through a signed sale agreement. Under the terms of the SOCAR agreement, Gran Tierra Energy will act as the operator of the project in the Guba-Caspian region with a 65% stake. The contract area surrounds a 65-km-long structure that has produced more than 100 million bbl of oil and more than 200 bcf of natural gas, “underscoring the scale and quality of the petroleum system in Azerbaijan,” Gran Tierra said in a release Feb. 19. The EDPSA includes a 5-year exploration and appraisal phase, and 25 years for development of any economic discoveries, with potential to extend development an additional 5 years. The exploration period consists of an initial 3-year phase followed by a second 2-year phase. The initial phase includes acquisition of a gravity study, together with a commitment to drill two wells and acquire 250 sq km of 3D seismic. Upon completion of the initial phase, the company has the option to proceed into the second phase, which carries a further commitment to drill two wells and acquire an additional 250 sq km of 3D seismic. Gran Tierra expects to begin an airborne gravity study this year, with seismic acquisition and drilling activities planned to begin in 2027. The EDPSA remains subject to certain customary and legal conditions, including approval by the legislature of the Republic of Azerbaijan and other legal formalities and procedures.

Simonette exit

The same day, the company noted an agreement with an undisclosed buyer to sell its remaining working interest in the Simonette asset in Alberta for total cash consideration of $62.5 million (Can.). The company in

SCOTUS limits presidential tariff authority, injecting new oil and gas industry uncertainty

The US Supreme Court ruled that President Donald Trump lacks authority to impose broad tariffs on US trading partners, deciding 6–3 that such powers rest with Congress. Justices Sonia Sotomayor, Elena Kagan, Neil Gorsuch, Amy Coney Barrett, Ketanji Brown Jackson, and Chief Justice John Roberts formed the majority. Justices Samuel Alito, Clarence Thomas, and Brett Kavanaugh dissented. The decision voids most tariffs imposed over the past year under the International Emergency Economic Powers Act (IEEPA), including reciprocal tariffs used as leverage in trade talks. Steel and aluminum import fees, enacted during Pres. Trump’s first term and continued by former Pres. Joe Biden, remain in place under separate statutory authority. At a Friday morning White House event with governors, Pres. Trump called the ruling “a disgrace,” according to a CNN report that also noted sources citing the president’s reference to a “backup plan.” In a press conference following the ruling, Pres. Trump said SCOTUS “incorrectly rejected” the authority, but that the administration will now go “in a different direction…that is even stronger.” Trade policy mechanisms Ken Medlock, III, PhD, Senior Director of the Center for Energy Studies at Rice University, said the ruling “will force the administration to seek other means to impose tariffs or regulate trade through other means to accomplish its various goals. It does not necessarily reset trade policy, just the mechanisms that can be used to implement it.” In an email to OGJ he said he expects “additional uncertainty in the near term,” which he characterized as disruptive for investment and planning by commercial actors. Medlock noted that “some tariffs will likely remain if they were sector‑specific because they were not motivated by IEEPA,” adding that “there are other sections of different Trade Acts that the president has used previously that could come into play to re‑implement

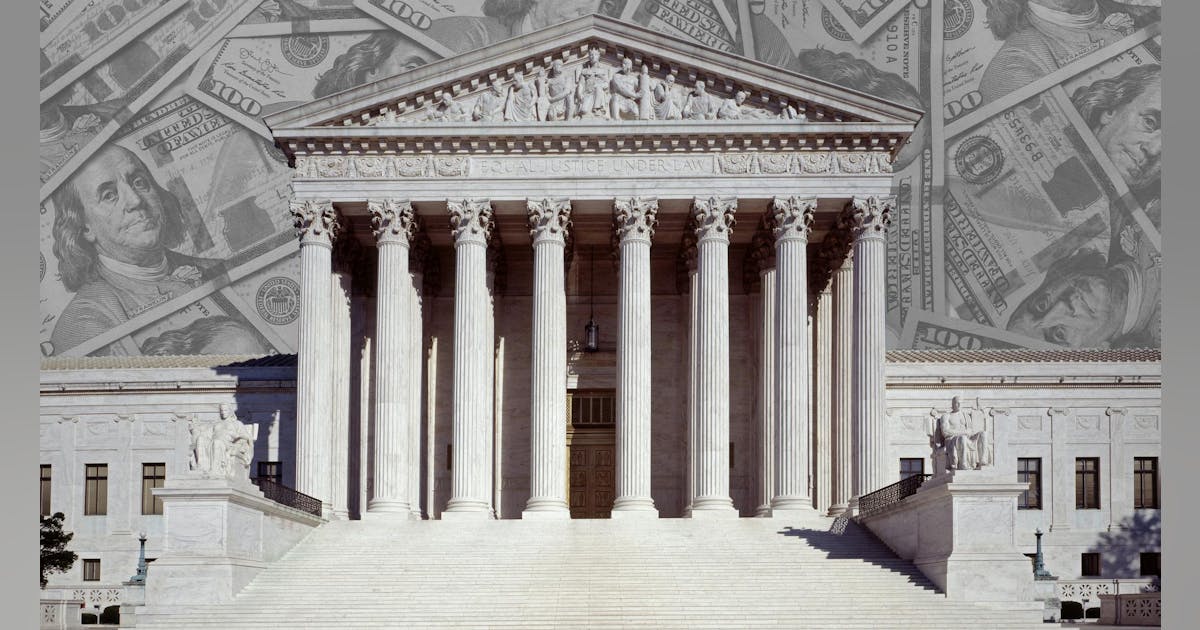

EIA: US natural gas production to hit record highs in 2026-27

US natural gas marketed production is expected to rise 2% to average 120.8 bcfd in 2026, then increase further to a record 122.3 bcfd in 2027, according to the US Energy Information Administration (EIA)’s latest Short-Term Energy Outlook (STEO). About 69% of total forecast output over the next 2 years will come from the Appalachia, Haynesville, and Permian regions. Haynesville output is forecast to increase by 1.2 bcfd in 2026 and by 1.6 bcfd in 2027, supported by relatively strong natural gas prices through the outlook period. EIA expects Henry Hub prices to rise from $3.52/MMbtu in 2025 to $4.31/MMbtu in 2026 and $4.38/MMbtu in 2027, keeping Haynesville drilling economics attractive despite deeper, higher-cost well development. The region’s proximity to LNG export terminals and major industrial consumers along the US Gulf Coast also continues to support activity. The Permian basin is projected to add 1.4 bcfd of production growth in 2026 and 0.6 bcfd in 2027. Output gains are largely driven by associated gas production from oil drilling. EIA estimates oil-directed rig activity will remain relatively subdued as West Texas Intermediate (WTI) crude prices decline from $65/bbl in 2025 to an average $53/bbl in 2026 and $49/bbl in 2027. Even so, rising gas-to-oil ratios (GOR) are expected to support continued natural gas production growth in the basin. Appalachia has supplied the largest share of Lower 48 natural gas production in recent years, accounting for about 32% annually since 2016. However, growth has slowed due to pipeline constraints. In June 2024, the Federal Energy Regulatory Commission (FERC) authorized the Mountain Valley Pipeline to begin operations, adding new takeaway capacity. As a result, EIA estimates Appalachian production will increase modestly by 0.3 bcfd in 2026 and by 0.5 bcfd in 2027.

Verde Clean Fuels shifts strategy away from large-scale plant development

Verde Clean Fuels, Houston, aims to reduce its operating costs by 50% this year as part of a larger ‘capital‑lite’ strategy aimed at identifying the most effective and financially disciplined path to commercialize its liquid fuels processing technology. The move comes weeks after the company suspended development of a natural gas-to-gasoline (GTG) project in the Permian basin. At the time, the company cited “changing market conditions driven by increasing demand for natural gas” in the region, while Verde’s chief executive officer, Ernest Miller, said knowledge collected from the work completed would be useful as the company explored other opportunities to deploy its proprietary synthesis gas (syngas)-to-gasoline plus (STG+) liquid fuels technology. At the forefront is a move away from capital‑intensive plant development. Over $110 million has been invested in developing and demonstrating the STG+ technology since 2007, including the construction and operation of the demonstration plant that completed over 10,500 hours of operation. Strategy shift The company now plans to eliminate roles tied to large‑scale plant development and shift its business model toward technology licensing and providing engineering, technical, and operational services. “We own a proprietary advanced-fuel conversion technology platform designed to convert low-value or stranded feedstocks into higher-value clean transportation fuels through an integrated, scalable, process-driven system. We are focused on the most optimal path to deploy our STG+® technology while being extremely disciplined with our resources. We are evaluating strategic alternatives that may be available to maximize shareholder value,” said Ron Hulme, chairman of Verde’s board of directors. The company is also streamlining its board of directors, cutting director cash compensation by 80%, with two directors not standing for reelection.
A newly formed Restructuring Committee, with Verde director Jonathan Siegler—who serves as managing director and chief financial officer of Bluescape Energy Partners, an affiliate of Verde’s primary shareholder—appointed

Equinor discovers oil, gas at Granat prospect in North Sea

Equinor Energy AS and its partners have discovered oil and gas in the Granat prospect in the North Sea near Gullfaks, 190 km northwest of Bergen, the Norwegian Offshore Directorate reported Feb. 16. Preliminary estimates place the discovery at 0.2-0.6 million std cu m of recoverable oil equivalent (1.3-3.8 million bbl). The licensees are considering tying the discovery back to existing infrastructure in the Gullfaks area. Wildcat wells 33/12-N-3 HH and 33/12-N-3 GH were drilled in connection with drilling an oil development well on the Gullfaks Satellites (33/12-N-3 IH) in production license 152 (PL 152). The discovery was made in 33/12-N-3 HH in production license 277. This is the first exploration well in the license. Well 33/12-N-3 GH, which had drilling targets in PL 152, was dry. The wells were drilled from production license 050 using the Askeladden rig. Geological information The objective of wellbore 33/12-3GH (N2A) was to prove hydrocarbons in reservoir rocks in the Tarbert Formation, with secondary targets in the Ness and Etive formations, all in the Brent Group from the Middle Jurassic. The well encountered the Tarbert Formation in a total of 115 m with 48 m of sandstone layers with moderate to good reservoir properties and a total of 59 m in the Ness Formation with 10 m of sandstone layers with good reservoir properties. Both formations were aquiferous. Data was collected, including pressure data. Wellbore 33/12-3-GH was drilled to respective measured and vertical depths of 6,708 and 3,460 m and was terminated in the Ness Formation. The objective of wellbore 33/12-N-3 HH was to prove hydrocarbons in reservoir rocks in the Tarbert Formation, with secondary targets in the Ness and Etive formations, all in the Brent Group from the Middle Jurassic. Wellbore 33/12-N-3 HHT2 encountered a total of 153 m in the Tarbert Formation with 58 m of sandstone layers with moderate

Caturus Energy advances LNG business through $950-million asset deal with SM Energy

Caturus Energy LLC plans to expand its LNG business through a $950‑million agreement to acquire South Texas assets from SM Energy Co. The transaction, for SM Energy’s Galvan Ranch assets, includes about 61,000 net acres, roughly 250 MMcfed of production as of December 2025 from 260 wells in the southern Maverick basin in Webb County, Tex., and related infrastructure. SM Energy expects the assets to produce 37,000–39,000 boe/d this year (45% liquids, 9% oil). Net proved reserves totaled about 168 MMboe as of Dec. 31, 2025. “Galvan Ranch significantly expands our footprint in the Eagle Ford and Austin Chalk and comes with existing infrastructure that supports long‑term, capital‑efficient development,” said David Lawler, chief executive officer of Caturus. He added that the largely contiguous Webb County Core position provides “more than a decade of high‑quality drilling inventory across both the wet and dry gas windows, with additional upside beyond that horizon.” Wellhead-to-water Caturus is pursuing a wellhead‑to‑water model supported by its proximity to the Gulf Coast. The company aims to establish the only independent, fully integrated natural gas and LNG export platform in the US through Caturus Energy’s upstream operations and its 9.5-million tonnes/year (tpy) Commonwealth LNG project in Cameron, La. The company is moving toward a final investment decision on the plant and has secured 7 million tpy of long-term offtake agreements. Following the acquisition, Caturus would have pro forma net production of about 950 MMcfed across 275,000 net acres along the Gulf Coast. This deal follows a 2025 development agreement with Black Stone Minerals covering 220,000 gross acres in the Shelby Trough for a multi‑year drilling program. Caturus said the Galvan Ranch acquisition and its Haynesville entry position the company “to deliver low‑nitrogen natural gas to key LNG hubs at Gillis and Agua Dulce.” The transaction is expected to close in second‑quarter

National Grid, Con Edison urge FERC to adopt gas pipeline reliability requirements

The Federal Energy Regulatory Commission should adopt reliability-related requirements for gas pipeline operators to ensure fuel supplies during cold weather, according to National Grid USA and affiliated utilities Consolidated Edison Co. of New York and Orange and Rockland Utilities. In the wake of power outages in the Southeast and the near collapse of New York City’s gas system during Winter Storm Elliott in December 2022, voluntary efforts to bolster gas pipeline reliability are inadequate, the utilities said in two separate filings on Friday at FERC. The filings were in response to a gas-electric coordination meeting held in November by the Federal-State Current Issues Collaborative between FERC and the National Association of Regulatory Utility Commissioners. National Grid called for FERC to use its authority under the Natural Gas Act to require pipeline reliability reporting, coupled with enforcement mechanisms, and pipeline tariff reforms. “Such data reporting would enable the commission to gain a clearer picture into pipeline reliability and identify any problematic trends in the quality of pipeline service,” National Grid said. “At that point, the commission could consider using its ratemaking, audit, and civil penalty authority preemptively to address such identified concerns before they result in service curtailments.” On pipeline tariff reforms, FERC should develop tougher provisions for force majeure events (unforeseen occurrences that prevent a contract from being fulfilled), reservation charge crediting, operational flow orders, scheduling and confirmation enhancements, improved real-time coordination, and limits on changes to nomination rankings, National Grid said. FERC should support efforts in New England and New York to create financial incentives for gas-fired generators to enter into winter contracts for imported liquefied natural gas supplies, or other long-term firm contracts with suppliers and pipelines, National Grid said.
Con Edison and O&R said they were encouraged by recent efforts such as North American Energy Standard

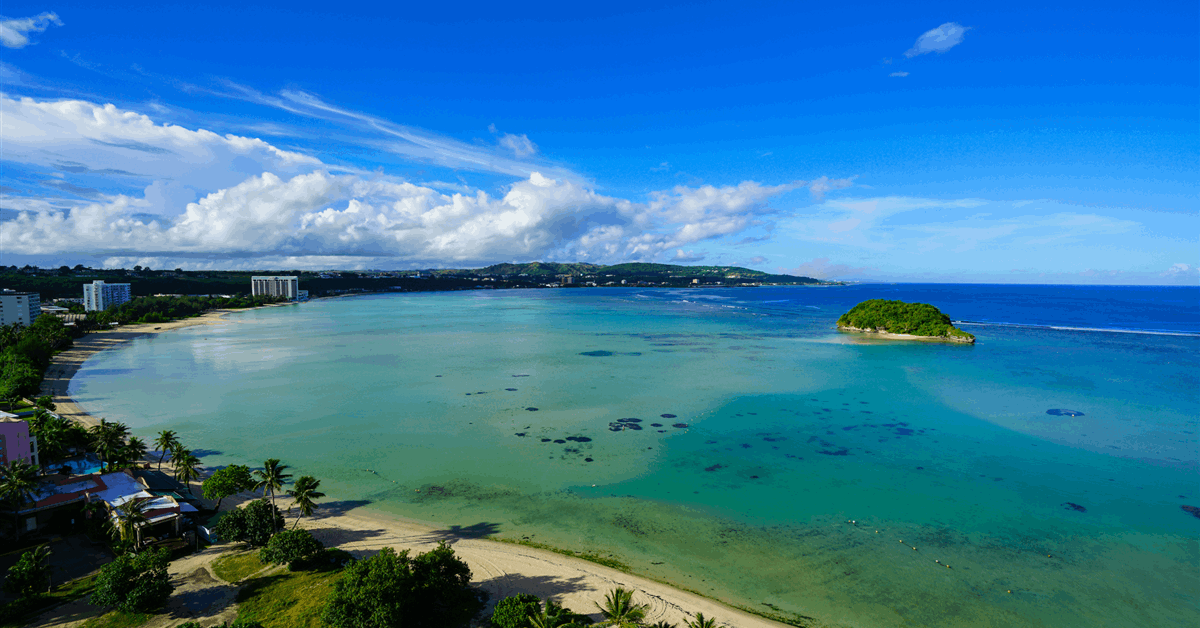

US BOEM Seeks Feedback on Potential Wind Leasing Offshore Guam

The United States Bureau of Ocean Energy Management (BOEM) on Monday issued a Call for Information and Nominations to help it decide on potential leasing areas for wind energy development offshore Guam. The call concerns a contiguous area around the island that comprises about 2.1 million acres. The area’s water depths range from 350 meters (1,148.29 feet) to 2,200 meters (7,217.85 feet), according to a statement on BOEM’s website. Closing April 7, the comment period seeks “relevant information on site conditions, marine resources, and ocean uses near or within the call area”, the BOEM said. “Concurrently, wind energy companies can nominate specific areas they would like to see offered for leasing. “During the call comment period, BOEM will engage with Indigenous Peoples, stakeholder organizations, ocean users, federal agencies, the government of Guam, and other parties to identify conflicts early in the process as BOEM seeks to identify areas where offshore wind development would have the least impact”. The next step would be the identification of specific WEAs, or wind energy areas, in the larger call area. BOEM would then conduct environmental reviews of the WEAs in consultation with different stakeholders. “After completing its environmental reviews and consultations, BOEM may propose one or more competitive lease sales for areas within the WEAs”, the Department of the Interior (DOI) sub-agency said. BOEM Director Elizabeth Klein said, “Responsible offshore wind development off Guam’s coast offers a vital opportunity to expand clean energy, cut carbon emissions, and reduce energy costs for Guam residents”. Late last year the DOI announced the approval of the 2.4-gigawatt (GW) SouthCoast Wind Project, raising the total capacity of federally approved offshore wind power projects to over 19 GW. The project owned by a joint venture between EDP Renewables and ENGIE received a positive Record of Decision, the DOI said in

Biden Bars Offshore Oil Drilling in USA Atlantic and Pacific