
Investors, including venture capitalists (VCs), are betting $359 million that secure access service edge (SASE) will become a primary consolidator of enterprise security tech stacks.

Cato Networks’ oversubscribed Series G round last week shows that investors see SASE as capable of driving significant consolidation across its core and adjacent markets. Now valued at $4.8 billion, Cato recently reported 46% year-over-year (YoY) growth in annual recurring revenue (ARR) for 2024, outpacing the SASE market. Cato will use the funding to advance AI-driven security; accelerate innovation across SASE, extended detection and response (XDR), zero trust network access (ZTNA), SD-WAN and IoT/OT; and expand its global reach by scaling partner and customer-facing teams.

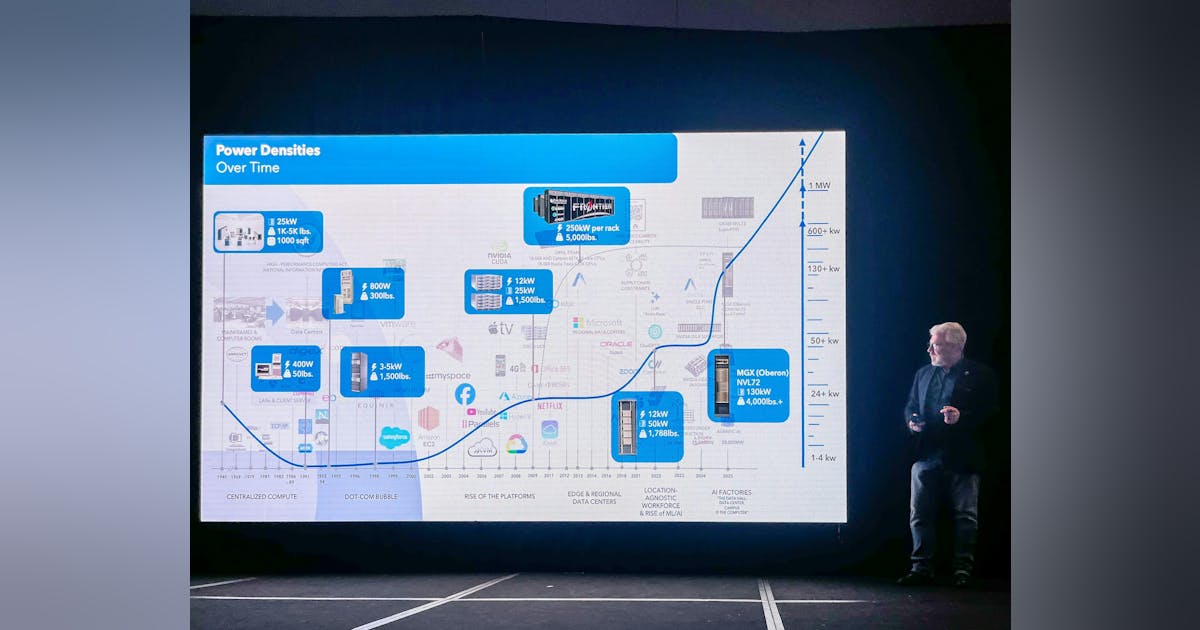

Gartner projects the SASE market will grow at a compound annual growth rate (CAGR) of 26%, reaching $28.5 billion by 2028.
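The article does not say which base year Gartner's 26% CAGR starts from, so the sketch below only illustrates how a compound growth rate works, under a loudly stated assumption. The helper names `project_value` and `implied_start` are hypothetical; the assumed four-year window (2024 to 2028) is ours, not Gartner's.

```python
def project_value(start: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return start * (1 + cagr) ** years


def implied_start(end: float, cagr: float, years: int) -> float:
    """Back out the starting value that compounds to `end` after `years` years."""
    return end / (1 + cagr) ** years


# ASSUMPTION (not from Gartner): the 26% CAGR is applied over four years, 2024 -> 2028.
base = implied_start(28.5, 0.26, 4)
print(f"Implied starting market size: ${base:.1f}B")
print(f"Check: ${project_value(base, 0.26, 4):.1f}B in 2028")
```

Under that assumption the implied starting size is about $11.3 billion; stretching the window to five years would imply roughly $9.0 billion instead, which shows how sensitive such back-of-the-envelope figures are to the unstated base year.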

The underlying message is that SASE will do to security stacks what cloud computing did to data centers: consolidate dozens of point solutions into unified platforms. Gartner’s latest worldwide SASE forecast shows organizations still favoring a dual-vendor approach, but with the ratio of dual-vendor to single-vendor deployments shifting from 4:1 to 2:1 by 2028, another solid signal that consolidation is on the way.
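Ratios are easier to compare as shares. The short sketch below reads the 4:1 and 2:1 figures as dual-vendor to single-vendor deployment ratios; that reading is our interpretation of the forecast, and the function name `single_vendor_share` is hypothetical.

```python
from fractions import Fraction


def single_vendor_share(dual: int, single: int) -> Fraction:
    """Share of deployments that are single-vendor, given a dual:single ratio."""
    return Fraction(single, dual + single)


# ASSUMPTION: the ratios compare dual-vendor to single-vendor deployments.
for label, (dual, single) in {"current": (4, 1), "2028": (2, 1)}.items():
    share = single_vendor_share(dual, single)
    print(f"{label}: {dual}:{single} -> single-vendor share {float(share):.0%}")
```

Read this way, the single-vendor share rises from 20% to roughly 33%, which is why a ratio that still favors dual-vendor deployments can nonetheless signal consolidation.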

Cashing in on consolidation

Consolidating tech stacks as a growth strategy is not a new approach in cybersecurity, or in broader enterprise software. Cloud-native application protection platform (CNAPP) and XDR platforms have relied on selling consolidation for years. Investors leading Cato’s latest round are basing their investment thesis on the proven dynamic that CISOs are always looking for ways to reduce the number of apps to improve visibility and lower maintenance costs.

VentureBeat often hears from CISOs that complexity is one of the greatest enemies of security. Tool sprawl is killing the ability to achieve step-wise efficiency gains. While CISOs want greater simplicity and are willing to drive greater consolidation, many have inherited inordinately complex and high-cost legacy technology stacks, complete with a large base of tools and applications for managing networks and security simultaneously.

Nikesh Arora, Palo Alto Networks chairman and CEO, acknowledged the impact of consolidation, saying recently: “Customers are actually onto it. They want consolidation because they are undergoing three of the biggest transformations ever: A network security transformation and a cloud transformation, and many of them are unaware … they’re about to go through a security operations center transformation.”

A recent study by IBM in collaboration with Palo Alto Networks found that the average organization runs 83 different security solutions from 29 vendors. A majority of executives (52%) say complexity is the biggest impediment to security operations, and that complexity can cost up to 5% of revenue. Misconfigurations are common, making security gaps difficult and time-consuming to troubleshoot. Consolidating cybersecurity products reduces complexity, cuts the number of apps to manage and improves overall efficiency.

When it comes to capitalizing on consolidation in a given market, timing is crucial. Adversaries are notorious for mining legacy CVEs and launching living off the land (LOTL) attacks, which use legitimate, built-in tools to breach and move through networks. Because multicloud, proprietary app and platform integrations are so complex, multivendor security architectures often have gaps that IT and security teams don’t discover until an intrusion attempt or breach occurs.

Enterprises are losing the ability to protect a proliferating number of identities, from ephemeral Kubernetes containers to machine and human identities, as every endpoint and device is assigned one. Closing the gaps in infrastructure, app, cloud, identity and network security fuels consolidation.

What CISOs are saying

Steward Health CISO Esmond Kane advises: “Understand that — at its core — SASE is zero trust. We’re talking about identity, authentication, access control and privilege. Start there and then build out.”

Legacy network architectures are notorious for poor user experiences and wide security gaps. According to Hughes’ 2025 State of Secure Network Access Report, 45% of senior IT and security leaders are adopting SASE to consolidate SD-WAN and security into a unified platform. Three-quarters of organizations (75%) are pursuing vendor consolidation, up from 29% just three years ago. CISOs believe consolidating their tech stacks will help them avoid missing threats (57%) and reduce the need to find qualified security specialists (56%).

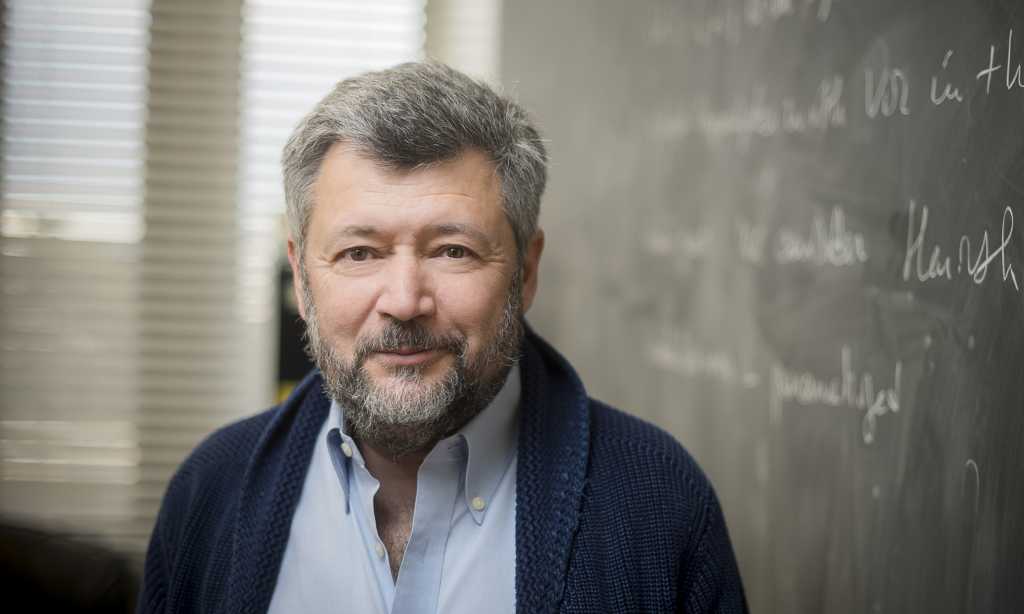

“SASE is an existential threat to all appliance-based network security companies,” Shlomo Kramer, Cato’s CEO, told VentureBeat. “The vast majority of the market is going to be refactored from appliances to cloud service, which means SASE [is going to be] 80% of the market.”

A fundamental architectural transformation is driving that shift. SASE converges traditionally siloed networking and security functions into a single, cloud-native service edge. It combines SD-WAN with critical security capabilities, including secure web gateway (SWG), cloud access security broker (CASB) and ZTNA to enforce policy and protect data regardless of where users or workloads reside.
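To make the convergence idea concrete, here is a minimal, hypothetical Python sketch of one policy engine that applies zero-trust identity and device-posture checks first, then ZTNA-, CASB- and SWG-style rules, in a single pass over a single policy object. The names `AccessRequest`, `UnifiedPolicy` and `evaluate`, the traffic kinds and the example hostnames are all invented for illustration; real SASE platforms enforce far richer policy models in the cloud, and SD-WAN routing is not modeled here.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AccessRequest:
    """Hypothetical request model; not any vendor's API."""
    user: str
    authenticated: bool
    device_compliant: bool
    destination: str    # hostname being requested
    kind: str           # "private", "saas" or "web"
    category: str = ""  # URL category for web traffic (illustrative labels)


@dataclass
class UnifiedPolicy:
    # ZTNA-style rule: which users may reach which private applications
    private_app_grants: dict[str, set[str]] = field(default_factory=dict)
    # CASB-style rule: which SaaS applications are sanctioned
    sanctioned_saas: set[str] = field(default_factory=set)
    # SWG-style rule: which web categories are blocked
    blocked_categories: set[str] = field(default_factory=set)


def evaluate(req: AccessRequest, policy: UnifiedPolicy) -> tuple[bool, str]:
    """Return (allowed, reason) after one pass over one policy object."""
    # Zero trust first: identity and device posture gate every request.
    if not req.authenticated:
        return False, "identity: not authenticated"
    if not req.device_compliant:
        return False, "posture: device not compliant"
    if req.kind == "private":
        if req.destination in policy.private_app_grants.get(req.user, set()):
            return True, "ztna: granted"
        return False, "ztna: no grant for this app"
    if req.kind == "saas":
        if req.destination in policy.sanctioned_saas:
            return True, "casb: sanctioned app"
        return False, "casb: unsanctioned app"
    if req.kind == "web":
        if req.category in policy.blocked_categories:
            return False, "swg: blocked category"
        return True, "swg: allowed"
    # Anything the engine does not recognize is denied by default.
    return False, "default deny: unknown traffic type"


policy = UnifiedPolicy(
    private_app_grants={"alice": {"erp.internal"}},
    sanctioned_saas={"crm.example.com"},
    blocked_categories={"gambling"},
)
print(evaluate(AccessRequest("alice", True, True, "erp.internal", "private"), policy))
```

The design point the sketch illustrates is that identity, posture and destination rules live in one object and are checked by one function, so there is no handoff between separately configured tools where a request could slip through.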

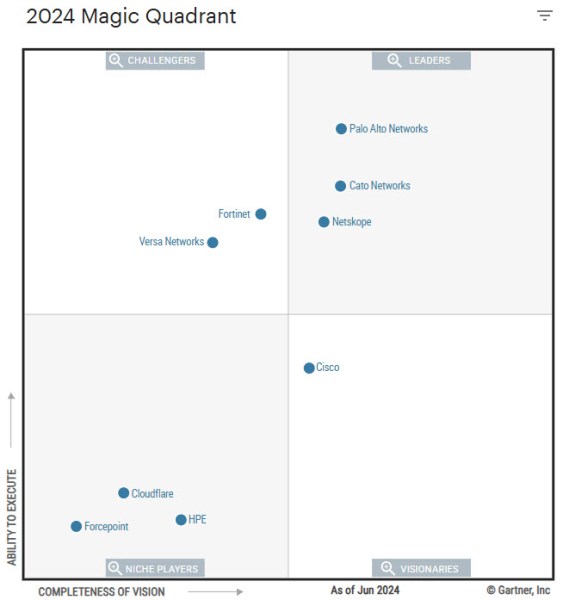

Gartner’s 2024 Magic Quadrant for single-vendor SASE positions Cato Networks, Palo Alto Networks, and Netskope as Leaders, reflecting their maturity, unified platforms and suitability for enterprise-wide deployments.

Why vendor consolidation is reshaping enterprise security strategy

Single-vendor SASE has become a strategic consideration for security and infrastructure leaders. According to Gartner, 65% of new SD-WAN purchases will be part of a single-vendor SASE deployment by 2027, up from 20% in 2024. This projected growth reflects a broader shift toward unified platforms that reduce policy fragmentation and improve visibility across users, devices and applications.

Gartner’s Leader placements reflect each vendor’s differentiated approach to convergence, user experience and enterprise-scale deployment models.

Cato’s Kramer told VentureBeat: “There is a short window where companies can avoid being caught with fragmented architectures. The attackers are moving faster than integration teams. That is why convergence wins.”

Numbers back Kramer’s warning. AI-enabled attacks are increasingly exploiting the 200-millisecond gaps between tool handoffs in multivendor stacks. Every unmanaged connection becomes a risk surface.

SASE leaders compared

Cato Networks: The Cato SASE Cloud platform combines SD-WAN, security service edge (SSE), ZTNA, CASB and firewall capabilities in a unified architecture. Gartner highlights Cato’s “above-average customer experience compared to other vendors” and cites its “single, straightforward UI” as a key strength. The report also notes that some capabilities, including SaaS visibility and on-premises firewalling, are still maturing, and that pricing varies with bandwidth requirements, which can affect total cost, particularly as deployments scale. Following its Series G and 46% ARR growth, Cato has emerged as the most investor-validated pure-play in the space.

Palo Alto Networks: PANW “has strong security and networking features, delivered via a unified platform,” and benefits from “a proven track record in this market, and a sizable installed base of customers,” Gartner notes. However, Gartner finds the offering more expensive than those of most other vendors and flags that the new Strata Cloud Manager is less intuitive than the previous UI.

Netskope: Gartner cites the vendor’s “strong feature breadth and depth for both networking and security,” along with a “strong customer experience” and “a strong geographic strategy” due to localization and data sovereignty support. At the same time, the analysis highlights operational complexity, noting that “administrators must use multiple consoles to access the full functionality of the platform.” Gartner also says that Netskope lacks experience compared to other vendors.

Evaluating the leading SASE vendors

| Vendor | Platform design | Ease of use | AI automation maturity | Pricing clarity | Security scope | Ideal fit |
| --- | --- | --- | --- | --- | --- | --- |
| Cato Networks | Fully unified, cloud-native | Excellent | Advancing rapidly | Predictable and transparent | End-to-end native stack | Midmarket and enterprise simplicity seekers |
| Palo Alto Prisma | Security-first integration | Moderate | Mature for security ops | Higher TCO | Strong next-generation firewall (NGFW) and ZTNA | Enterprises already using Palo NGFW |
| Netskope | Infrastructure control | Moderate | Improving steadily | Clear and structured | Strong CASB and data loss prevention (DLP) | Regulated industries and compliance-driven |

SASE consolidation signals enterprise security’s architectural shift

The SASE consolidation wave reveals how enterprises are fundamentally rethinking security architecture. With AI-enabled attacks rapidly exploiting integration gaps, single-vendor SASE has become essential for both protection and operational efficiency.

The reasoning is straightforward. Every vendor handoff creates vulnerability. Each integration adds latency. Security leaders know that unified platforms can help eliminate these risks while enabling business velocity.

CISOs are increasingly demanding a single console, a single agent and unified policies. Multivendor complexity is now a competitive liability. SASE consolidation delivers what matters most: fewer vendors, stronger security and execution at market speed.
