When Sam Zahr first saw the gray Rolls-Royce Dawn convertible with orange interior and orange roof, he knew he’d found a perfect addition to his fleet. “It was very appealing to our clientele,” he told me. As the director of operations at Dream Luxury Rental, he outfits customers in the Detroit area looking to ride in style to a wedding, a graduation, or any other event with high-end vehicles—Rolls-Royces, Lamborghinis, Bentleys, Mercedes G-Wagons, and more. But before he could rent out the Rolls, Zahr needed to get the car to Detroit from Miami, where he bought it from a used-car dealer. His team posted the convertible on Central Dispatch, an online marketplace that’s popular among car dealers, manufacturers, and owners who want to arrange vehicle shipments. It’s not too complicated, at least in theory: A typical listing includes the type of vehicle, zip codes of the origin and destination, dates for pickup and delivery, and the fee. Anyone with a Central Dispatch account can see the job, and an individual carrier or transport broker who wants it can call the number on the listing. Zahr’s team got a call from a transport company that wanted the job. They agreed on the price and scheduled pickup for January 17, 2025. Zahr watched from a few feet away as the car was loaded into an enclosed trailer. He expected the vehicle to arrive in Detroit just a few days later—by January 21. But it never showed up. Zahr called a contact at the transport company to ask what happened.

“He’s like, I don’t know what you’re talking about.” Zahr told me his contact angrily told him they mostly ship Coca-Cola products, not luxury cars. “He was yelling and screaming about it,” Zahr said.

Over the years, people have broken into his business to steal cars, or they’ve rented them and never come back. But until this day, he’d never had a car simply disappear during shipping. He’d expected no trouble this time around, especially since he’d used Central Dispatch—“a legit platform that everyone uses to transport cars,” he said. “That’s the scary part about it, you know?”

Wreaking havoc

Zahr had unwittingly been caught up in a new and growing type of organized criminal enterprise: vehicle transport fraud and theft. Crooks use email phishing, fraudulent paperwork, and other tactics to impersonate legitimate transport companies and get hired to deliver a luxury vehicle. They divert the shipment away from its intended destination and then use a mix of technology, computer skills, and old-school chop shop techniques to erase traces of the vehicle’s original ownership and registration. These vehicles can be retitled and resold in the US or loaded into a shipping container and sent to an overseas buyer. In some cases, the car has been resold or is out of the country by the time the rightful owner even realizes it’s missing.

“Criminals have learned that stealing cars via the web portals has become extremely easy, and when I say easy—it’s become seamless,” says Steven Yariv, the CEO of Dealers Choice Auto Transport of West Palm Beach, Florida, one of the country’s largest luxury-vehicle transport brokers. Individual cases have received media coverage thanks to the high value of the stolen cars and the fact that some belong to professional athletes and other celebrities.
In late 2024, a Lamborghini Huracán belonging to Colorado Rockies third baseman Kris Bryant went missing en route to his home in Las Vegas; R&B singer Ray J told TMZ the same year that two Mercedes Maybachs never arrived in New York as planned; and last fall, NBA Hall of Famer Shaquille O’Neal had a $180,000 custom Range Rover stolen when the transport company hired to move the vehicle was hacked. “They’re saying they think it’s probably in Dubai by now, to be honest,” an employee of the company that customized the SUV told Shaq in a YouTube video.

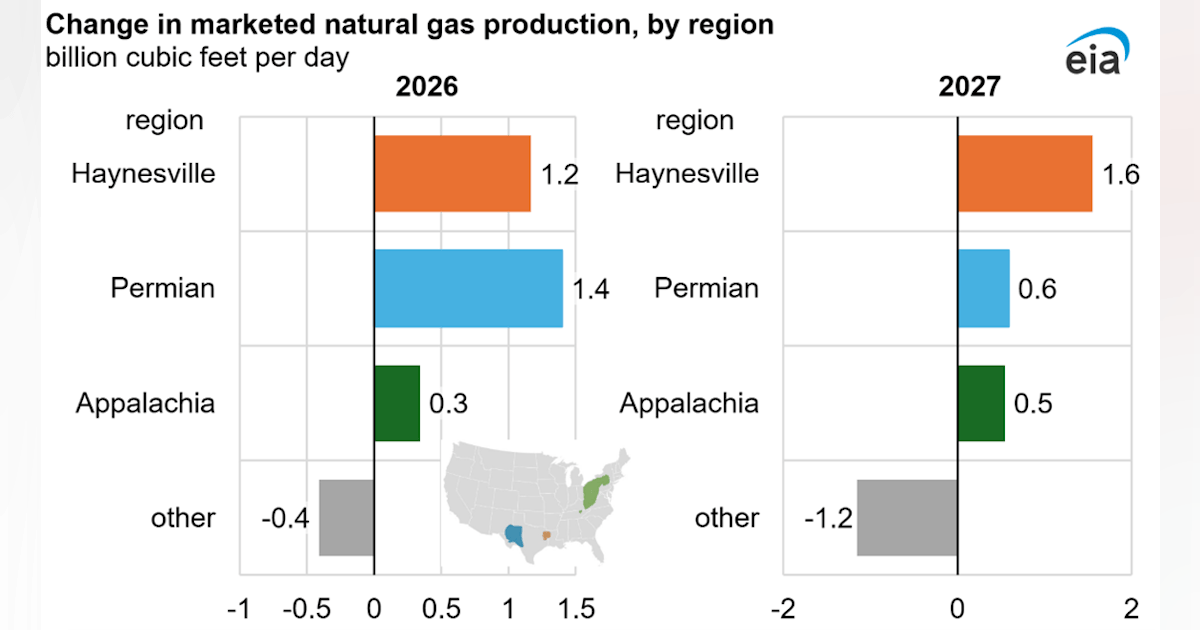

But the nationwide epidemic of vehicle transport fraud and theft has remained under the radar, even as it’s rocked the industry over the past two years. MIT Technology Review identified more than a dozen cases involving high-end vehicles, obtained court records, and spoke to law enforcement, brokers, drivers, and victims in multiple states to reveal how transport fraud is wreaking havoc across the country.

RICHARD CHANCE

It’s challenging to quantify the scale of this type of crime, since there isn’t a single entity or association that tracks it. Still, these law enforcement officials and brokers, as well as the country’s biggest online car-transport marketplaces, acknowledge that fraud and theft are on the rise. When I spoke with him in August, Yariv estimated that around 8,000 exotic and high-end cars had been stolen since the spring of 2024, resulting in over $1 billion in losses. “You’re talking 30 cars a day [on] average is gone,” he said. Multiple state and local law enforcement officials told MIT Technology Review that the number is plausible. (The FBI did not respond to a request for an interview.)

“It doesn’t surprise me,” said J.D. Decker, chief of the Nevada Department of Motor Vehicles’ police division and chair of the fraud subcommittee for the American Association of Motor Vehicle Administrators. “It’s a huge business.” Data from the National Insurance Crime Bureau (NICB), a nonprofit that works with law enforcement and the insurance industry to investigate insurance fraud and related crimes, provides further evidence of this crime wave. NICB tracks both car theft and cargo theft, a broad category that refers to goods, money, or baggage that is stolen while part of a commercial shipment; the category also covers cases in which a vehicle is stolen via a diverted transport truck or a purloined car is loaded into a shipping container. NICB’s statistics about car theft show that it has declined following an increase during the pandemic—but over the same period cargo theft has dramatically increased, to an estimated $35 billion annually. The group projected in June that it was expected to rise 22% in 2025. NICB doesn’t break out data for vehicles as opposed to other types of stolen cargo. But Bill Woolf, a regional director for the organization, said an antifraud initiative at the Port of Baltimore experienced a 200% increase from 2023 to 2024 in the number of stolen vehicles recovered. He said the jump could be due to the increased effort to identify stolen cars moving through the port, but he noted that earlier the day we spoke, agents had recovered two high-end stolen vehicles bound for overseas. “One day, one container—a million dollars,” he said.

Many other vehicles are never recovered—perhaps a result of the speed with which they’re shipped off or sold. Travis Payne, an exotic-car dealer in Atlanta, told me that transport thieves often have buyers lined up before they take a car: “When they steal them, they have a plan.” In 2024, Payne spent months trying to locate a Rolls-Royce he’d purchased after it was stolen via transport fraud. It eventually turned up in the Instagram feed of a Mexican pop star, he says. He never got the car back. The criminals are “gonna keep doing it,” he says, “because they make a couple phone calls, make a couple email accounts, and they get a $400,000 car for free. I mean, it makes them God, you know?”

Out-innovating the industry

The explosion of vehicle transport fraud follows a pattern that has played out across the economy over the past roughly two decades: A business that once ran on phones, faxes, and personal relationships shifted to online marketplaces that increased efficiency and brought down costs—but the reduction in human-to-human interaction introduced security vulnerabilities that allowed organized and often international fraudsters to enter the industry.

In the case of vehicle transport, the marketplaces are online “load boards” where car owners, dealerships, and manufacturers post about vehicles that need to be shipped from one location to another. Central Dispatch claims to be the largest vehicle load board and says on its website that thousands of vehicles are posted on its platform each day. It’s part of Cox Automotive, an industry juggernaut that owns major vehicle auctions, Autotrader, Kelley Blue Book, and other businesses that work with auto dealers, lenders, and buyers. The system worked pretty well until roughly two years ago, when organized fraud rings began compromising broker and carrier accounts and exploiting loopholes in government licensing to steal loads with surprising ease and alarming frequency.

A theft can start with a phishing email that appears to come from a legitimate load board. The recipient, a broker or carrier, clicks a link in the message, which appears to go to the real site—but logging in sends the victim’s username and password to a criminal. The crook logs in as the victim, changes the account’s email and phone number to reroute all communications, and begins claiming loads of high-end vehicles. Cox Automotive declined an interview request but said in a statement that the “load board system still works well” and that “fraud impacts a very small portion” of listings.

Criminals also gain access to online marketplaces by exploiting a lax regulatory environment. While a valid US Department of Transportation registration is required to access online marketplaces, it’s not hard for bad actors to register sham transport companies and obtain a USDOT number from the Federal Motor Carrier Safety Administration, the agency that regulates commercial motor vehicles. In other cases, criminals compromise the FMCSA accounts of legitimate companies and change their phone numbers and email addresses in order to impersonate them and steal loads. (USDOT did not respond to a request for comment.)

As Bek Abdullayev, the founder of Super Dispatch, one of Central Dispatch’s biggest competitors, explained in an episode of the podcast Auto Transport Co-Pilot, “FMCSA [is] authorizing people that are fraudulent companies—people that are not who they say they are.” He added that people can “game the system and … obtain paperwork that makes [them] look like a legitimate company.” For example, vehicle carrier insurance can be obtained quickly—if temporarily—by submitting an online application with fraudulent payment credentials. The bottom line is that crooks have found myriad ways to present themselves as genuine and permitted vehicle transport brokers and carriers. Once hired to move a vehicle, they often repost the car on a load board using a different fraudulent or compromised account. While this kind of subcontracting, known as “double-brokering,” is sometimes used by companies to save money, it can also be used by criminals to hire an unwitting accomplice to deliver the stolen car to their desired location. “They’re booking cars and then they’re just reposting them and dispatching them out to different routes,” says Yariv, the West Palm Beach transport broker. “A lot of this is cartel operated,” says Decker, of the Nevada DMV, who also serves on a vehicle fraud committee for the International Association of Chiefs of Police. “There’s so much money in it that it rivals selling drugs.” Even though this problem is becoming increasingly well known, fraudsters continue to steal, largely with impunity. Brokers, auto industry insiders, and law enforcement told MIT Technology Review that load boards and the USDOT have been too slow to catch and ban bad actors. 
(In its statement, Cox Automotive said it has been “dedicated to continually enhancing our processes, technology, and education efforts across the industry to fight fraud.”) Jake MacDonald, who leads Super Dispatch’s fraud monitoring and investigation efforts, put it bluntly on the podcast with Abdullayev: the reason that fraud is “jumping so much” is that “the industry is slowly moving over to a more technologically advanced position, but it’s so slow that fraud is actually [out-]innovating the industry.”

A Florida sting

As it turns out, the person Zahr’s team hired on Central Dispatch didn’t really work for the transport company. After securing the job, the fraudster reposted the orange-and-gray Rolls convertible to a load board. And instead of saying that the car needed to go from Miami to the real destination of Detroit, the new job listed an end point of Hallandale Beach, Florida, just 20 or so miles away. It was a classic case of malicious double-brokering: the crooks claimed a load and then reposted it in order to find a new, unsuspecting driver to deliver the car into their possession. On January 17, 2025, the legitimate driver showed up in a Dodge Ram and loaded the Rolls into an enclosed trailer as Zahr watched.

“The guy came in and looked very professional, and we took a video of him loading the car, taking pictures of everything,” Zahr told me. He never thought to double-check where the driver was headed or which company he worked for. Not long after a panicked Zahr spoke with his contact at the transport company he thought he was working with, he reported the car as stolen to the Miami police. Detective Ryan Chin was assigned to the case. It fit with a pattern of high-end auto theft that he and his colleagues had recently been tracking. “Over the past few weeks, detectives have been made aware of a new method on the rise for vehicles being stolen by utilizing Central Dispatch,” Chin wrote in records obtained by MIT Technology Review. “Specific brokers are re-routing the truck drivers upon them picking up vehicles posted for transport and routing them to other locations provided by the broker.” Chin used Zahr’s photos and video to identify the truck and driver who’d taken the Rolls. By the time police found him, on January 31, the driver had already dropped off Zahr’s Rolls in Hallandale Beach. He’d also picked up and delivered a black Lamborghini Urus and a white Audi R8 for the same client. Each car had been stolen via double-brokering transport fraud, according to court records. The police department declined to comment or to make Chin available for an interview. But a source with knowledge of the case said the driver was “super cooperative.” (The source asked not to be identified because they were not authorized to speak to the media, and the driver does not appear to have been identified in court records.) The driver told police that he had another load to pick up at a dealership in Naples, Florida, later that same day—a second Lamborghini Urus, this one orange. Police later discovered it was supposed to be shipped to California. But the carrier had been hired to bring the car, which retails for about $250,000, to a mall in nearby Aventura. 
He told police that he suspected it was going to be delivered to the same person who had booked him for the earlier Rolls, Audi, and Lamborghini deliveries, since “the voice sounds consistent with who [the driver] dealt with prior on the phone.” This drop-off was slated for 4 p.m. at the Waterways Shoppes mall in Aventura. That was when Chin and a fellow detective, Orlando Rodriguez, decided to set up a sting. The officers and colleagues across three law enforcement agencies quickly positioned themselves in the Waterways parking lot ahead of the scheduled delivery of the Urus. They watched as, pretty much right on schedule that afternoon, the cooperative driver of the Dodge Ram rolled to a stop in the palm-tree-lined lot, which was surrounded by a kosher supermarket, Japanese and Middle Eastern restaurants, and a physiotherapy clinic. The driver went inside the trailer and emerged in the orange Lamborghini. He parked it and waited near the vehicle. Roughly 30 minutes later, a green Rolls-Royce Cullinan (price: $400,000 and up) arrived with two men and a teenager inside. They got out, opened the trunk, and sat on the tailgate of the vehicle as one man counted cash. “They’re doing countersurveillance, looking around,” the source told me later. “It’s a little out of the ordinary, you know. They kept being fixated [on] where the truck was parked.” The transport driver and the three males who arrived in the Rolls-Royce did not interact. But soon enough, another luxury vehicle, a Bentley Continental GT, which last year retailed for about $250,000 and up, pulled in. The Bentley driver got out, took the cash from one of the men sitting on the back of the Rolls, and walked over to the transport driver. He handed him $700 and took the keys to the Lamborghini. That’s when more than a dozen officers swooped in. “They had nowhere to go,” the source told me. 
“We surrounded them.” The two men in the Rolls were later identified as Arman Gevorgyan and Hrant Nazarian, and the man in the Bentley as Yuriy Korotovskyy. The three were arrested and charged with dealing in stolen property, grand theft over $100,000, and organized fraud. (The teenager who arrived in the Rolls was Gevorgyan’s son. He was detained and released, according to Richard Cooper, Gevorgyan’s attorney.) As investigators dug into the case, the evidence suggested that this was part of the criminal pattern they’d been following. “I think it’s organized,” the source told me. It’s something that transport industry insiders have talked about for a while, according to Fred Mills, the owner of Florida-based Advantage Auto Transport, a company that specializes in transporting high-end vehicles. He said there’s even a slang term to describe people engaged in transport fraud: the flip-flop mafia.

It has multiple meanings. One is that the people who show up to transport or accept a vehicle “are out there wearing, you know, flip-flops and slides,” Mills says. The second refers to how fraudsters “flip” from one carrier registration to another as they try to stay ahead of regulators and complaints. In addition to needing a USDOT number, carriers working across states need an interstate operating authority (commonly known as an MC number) from the USDOT. Both IDs are typically printed on the driver and passenger doors. But the rise of double-brokering—and of fly-by-night and fraudulent carriers—means that drivers increasingly just tape IDs to their door. Mills says fraudsters will use a USDOT number for 10 or 11 months, racking up violations, and then tape up a new one. “They just wash, rinse, and repeat,” he says.

Decker from the Nevada DMV says a lot of high-end vehicles are stolen because dealerships and individual customers don’t properly check the paperwork or identity of the person who shows up to transport them. “‘Flip-flop mafia’ is an apt nickname because it’s surprisingly easy to get a car on a truck and convince somebody that they’re a legitimate transport operation when they’re not,” he says.

Roughly a month after it disappeared, Zahr’s Rolls-Royce was recovered by the Miami Beach Police. Video footage obtained by a local TV station showed the gray car with its distinctive orange top being towed into a police garage.

What happens in Vegas

Among the items confiscated from the men in Florida were $10,796 in cash and a GPS jammer. Law enforcement sources say jammers have become a core piece of technology for modern car thieves—necessary to disable the location tracking provided by GPS navigation systems in most cars. 
“Once they get the vehicles, they usually park them somewhere [and] put a signal jammer in there or cut out the GPS,” the Florida source told me. This buys them time to swap and reprogram the vehicle identification number (VIN), wipe car computers, and reprogram fobs to remove traces of the car’s provenance. No two VINs are the same, and each is assigned to a specific vehicle by the manufacturer. Where they’re placed inside a vehicle varies by make and model. The NICB’s Woolf says cars also have confidential VINs located in places—including their electronic components—that are supposed to be known only to law enforcement and his organization. But criminals have figured out how to find and change them. “It’s making it more and more difficult for us to identify vehicles as stolen,” Woolf says. “Every time we come up with a security measure to prevent the fraudster, they come up with a countermeasure.” All this doesn’t even take very much time. “If you know what you’re doing, and you steal the car at one o’clock today, you can have it completely done at two o’clock today,” says Woolf. A vehicle can be rerouted, reprogrammed, re-VINed, and sometimes even retitled before an owner files a police report. That appears to have been the plan in the case of the stolen light-gray 2023 Lamborghini Huracán owned by the Rockies’ Kris Bryant. On September 29, 2024, a carrier hired via a load board arrived at Bryant’s home in Cherry Hills, Colorado, to pick up the car. It was supposed to be transported to Bryant’s Las Vegas residence within a few days. It never showed up there—but it was in fact in Vegas. Using Flock traffic cameras, which capture license plate information in areas across the country, Detective Justin Smith of the Cherry Hills Village Police Department tracked the truck and trailer that had picked up the Lambo to Nevada, and he alerted local police. On October 7, a Las Vegas officer spotted a car matching the Lamborghini’s description and pulled it over. 
The driver said the Huracán had been brought to his auto shop by a man whom the police were able to identify as Dat Viet Tieu. They arrested Tieu later that same day. In an interview with police, he identified himself as a car broker. He said he was going to resell the Lamborghini and that he had no idea that the car was stolen, according to the arrest report. Police searched a Jeep Wrangler that Tieu had parked nearby and discovered it had been stolen—and had been re-VINed, retitled, and registered to his wife. Inside the car, police discovered “multiple fraudulent VIN stickers, key fobs to other high-end stolen vehicles, and fictitious placards,” their report said. One of the fake VINs matched the make and model of Bryant’s Lamborghini. (Representatives for Bryant and the Rockies did not respond to a request for comment.) Tieu was released on bail. But after he returned to LVPD headquarters two days later, on October 9, to reclaim his personal property, officers secretly placed him under surveillance with the hope that he’d lead them to one of the other stolen cars matching the key fobs they’d found in the Jeep. It didn’t take long for them to get lucky. A few hours after leaving the police station, Tieu drove to Harry Reid International Airport, where he picked up an unidentified man. They drove to the Caesars Palace parking garage and pulled in near a GMC Sierra. Over the next three hours, the man worked on a laptop inside and outside the vehicle, according to a police report. At one point, he and Tieu connected jumper cables from Tieu’s rented Toyota Camry to the Sierra. “At 2323 hours, the white male adult enters the GMC Sierra, and the vehicle’s ignition starts. It was readily apparent the [two men] had successfully re-programmed a key fob to the GMC Sierra,” the report said. An officer watched as the man gave two key fobs to Tieu, who handed the man an unknown amount of cash. Still, the police let the men leave the garage. 
The police kept Tieu and his wife under surveillance for more than a week. Then, on October 18, fearing the couple was about to leave town, officers entered Nora’s Italian Restaurant just off the Vegas Strip and took them into custody. “Obviously, we meet again,” a detective told Tieu. “I’m not surprised,” Tieu replied. Police later searched the VIN on the Sierra from the Caesars lot and found that it had been reported stolen in Tremonton, Utah, roughly two weeks earlier. They eventually returned both the Sierra and Kris Bryant’s Lamborghini to their owners. Tieu pleaded guilty to two felony counts of possession of a stolen vehicle and one count of defacing, altering, substituting, or removing a VIN. In October, he was sentenced to up to one year of probation; if it’s completed successfully, the plea agreement says, the counts of possession of a stolen vehicle will be dismissed. His attorneys, David Z. Chesnoff and Richard A. Schonfeld, said in a statement that they were “pleased” with the court’s decision, “in light of [Tieu’s] acceptance of responsibility.”

Taking the heat

Many vehicles stolen via transport fraud are never recovered. Experts say the best way to stop this criminal cycle would be to disrupt it before it starts. That would require significant changes to the way that load boards operate. Bryant’s Lamborghini, Zahr’s and Payne’s Rolls-Royces, and the orange Lamborghini Urus in Florida were all posted for transport on Central Dispatch. Both brokers and shippers argue that the company hasn’t taken enough responsibility for what they characterize as weak oversight.

“You’re Cox Automotive—you’re the biggest car company in the world for dealers—and you’re not doing better screenings when you sign people up?” says Payne. (The spokesperson for Cox Automotive said that it has “a robust verification process for all clients … who sign up.”) “If the crap hits the fan, it’s on us as a broker, or it’s on the trucking company, or the clients’ insurance, [which means] that they have no liability in the whole transaction process,” says Mills. “So it definitely frosted a lot of people’s feathers.”

Over the last year, Central Dispatch has made changes to further secure its platform. It introduced two-factor authentication for user accounts and started enabling shippers to use its app to track loads in real time, among other measures. It also kicked off an awareness campaign that includes online educational content and media appearances to communicate that the company takes its responsibilities seriously. “We’ve removed over 500 accounts already in 2025, and we’ll continue to take any of that aggressive action where it’s needed,” said Lainey Sibble, Central Dispatch’s head of business, in a sponsored episode of the Auto Remarketing Podcast. “We also recognize this is not going to happen in a silo. Everyone has a role to play here, and it’s really going to take us all working together in partnership to combat this issue.”

Mills says Central Dispatch got faster at shutting down fraudulent accounts toward the end of last year. But it’s going to take time to fix the industry, he adds: “I compare it to a 15-year opioid addiction. It’s going to take a while to detox the system.” Yariv, the broker in West Palm Beach, says he has stopped using Central Dispatch and other load boards altogether. “One person has access here, and that’s me. I don’t even log in,” he told me. 
His team has gone back to working the phones, as evidenced by the din of voices in the background as we spoke. “[The fraud is] everywhere. It’s constant,” he said. “The only way it goes away is the dispatch boards have to be shut down—and that’ll never happen.”

It also remains to be seen what kind of accountability there will be for the alleged thieves in Florida. Korotovskyy and Nazarian pleaded not guilty; as of press time, their trials were scheduled to begin in May. (Korotovskyy’s lawyer, Bruce Prober, said in a statement that the case “is an ongoing matter” and his client is “presumed innocent,” while Nazarian’s attorney, Yale Sanford, said in a statement, “As the investigation continues, Mr. Nazarian firmly asserts his innocence.” A spokesperson with Florida’s Office of the State Attorney emailed a statement: “The circumstances related to these arrests are still a matter of investigation and prosecution. It would be inappropriate to be commenting further.”)

In contrast, Gevorgyan, the third man arrested in the Florida sting, pleaded guilty to four charges. Yet he maintains his innocence, according to Cooper, his lawyer: “He was pleading [guilty] to get out and go home.” Cooper describes his client as a wealthy Armenian national who runs a jewelry business back home, adding that he was deported to Armenia in September. Cooper says his client’s “sweetheart” plea deal doesn’t require him to testify or otherwise supply information against his alleged co-conspirators—or to reveal details about how all these luxury cars were mysteriously disappearing across South Florida. Cooper also says prosecutors may have a difficult time convicting the other two men, arguing that police acted prematurely by arresting the trio without first seeing what, if anything, they intended to do with the Lamborghini. 
“All they ever had,” Cooper says, “was three schmucks sitting outside of the Lamborghini.”

Craig Silverman is an award-winning journalist and the cofounder of Indicator, a publication that reports on digital deception.